Top 35 big data interview questions with answers for 2025

Big data is a hot field and organizations are looking for talent at all levels. Get ahead of the competition for that big data job with these top interview questions and answers.

Increasingly, organizations worldwide see the wisdom of embracing big data. The careful analysis and synthesis of massive data sets provide invaluable insights to help them make informed and timely strategic business decisions.

For example, big data analytics help determine what new products to develop based on a deep understanding of customer behaviors, preferences and buying patterns. Analytics can also reveal untapped potential, such as new territories or nontraditional market segments.

As organizations race to augment their big data capabilities and skills, the demand for qualified candidates in the field is reaching new heights. If you aspire to pursue a career path in this domain, a world of opportunity awaits. Today's most challenging -- yet rewarding and in-demand -- big data roles include data analysts, data scientists, database administrators, big data engineers and Hadoop specialists. Knowing what big data questions an interviewer is likely to ask -- and how to answer -- is essential to success.

This article offers direction for your next big data interview, whether you are a recent graduate in data science or information management or you already have experience working in big data-related roles or other technology fields. This piece also provides some of the most common big data interview questions that prospective employers might ask.

How to prepare for a big data interview

Before delving into the specific big data interview questions and answers, here are the basics of interview preparation.

- Create a tailored and compelling resume. Ideally, you should tailor your resume -- and cover letter -- to the particular role and position for which you apply. These documents demonstrate your qualifications and experience, and they show your prospective employer that you've researched the organization's history, financials, strategy, leadership, culture and vision. Also, don't be shy to highlight what you believe to be your strongest soft skills that are relevant to the role. These might include communication and presentation capabilities; tenacity and perseverance; an eye for detail and professionalism; and respect, teamwork and collaboration.

- Remember, an interview is a two-way street. Of course, it is essential to provide correct and articulate answers to an interviewer's technical questions, but don't overlook the value of asking your own questions. Build a shortlist of these questions before the appointment to ask at opportune moments.

- The Q&A: prepare, prepare, prepare. Invest the time necessary to research and determine your answers to the most commonly asked questions, then rehearse your answers before the interview. Be yourself during the interview. Look for ways to show your personality and convey your responses authentically and thoughtfully. Monosyllabic, vague or bland answers won't serve you well.

Now, here are the top 35 big data interview questions. These include a specific focus on the widely adopted Hadoop framework, given its ability to solve the most difficult big data challenges, thereby delivering on core business requirements.

Top 35 big data interview questions and answers

Each of the following 35 big data interview questions includes an answer. Don't rely solely on these answers when preparing for your interview. Instead, use them as a launching point for digging more deeply into each topic.

1. What is big data?

As basic as this question is, have a clear and concise answer that demonstrates your understanding of this term and its full scope, making it clear that big data includes just about any type of data from any number of sources, including the following:

- Emails.

- Server logs.

- Social media.

- User files.

- Medical records.

- Temporary files.

- Databases.

- Machinery sensors.

- Automobiles.

- Industrial equipment.

- Internet of things (IoT) devices.

Big data can include structured, semi-structured and unstructured data -- in any combination -- collected from a range of heterogeneous sources. Once collected, the data must be carefully managed so it can be mined for information and transformed into actionable insights. When mining data, data scientists and other professionals often use advanced technologies such as machine learning, deep learning, predictive modeling or other advanced analytics to gain a deeper understanding of the data.

2. How can big data analytics benefit business?

There are a number of ways that big data can benefit organizations, as long as they can extract value from the data, gain actionable insights and put those insights to work. Although you won't be expected to list every possible outcome of a big data project, be ready to cite several examples that demonstrate an effective big data project achievement. Include any of the following:

- Improve customer service.

- Personalize marketing campaigns.

- Increase worker productivity.

- Improve daily operations and service delivery.

- Reduce operational expenses.

- Identify new revenue streams.

- Improve products and services.

- Gain a competitive advantage in your industry.

- Gain deeper insights into customers and markets.

- Optimize supply chains and delivery routes.

Big data analytics aids organizations within specific industries. For example, a utility company might use big data to better track and manage electrical grids. Or governments might use big data to improve emergency response, reduce crime and support smart city initiatives.

3. What are your experiences in big data?

If you have had previous roles in the field of big data, outline your title, functions, responsibilities and career path. Include any specific challenges and how you met those challenges. Also mention any highlights or achievements related either to a specific big data project or to big data in general. Be sure to include any programming languages you've worked with, especially as they pertain to big data.

4. What are some of the challenges that come with a big data project?

No big data project is without its challenges. Some of those challenges might be specific to the project itself or to big data in general. You should be aware of what some of these challenges are -- even if you haven't experienced them yourself. Below are some of the more common challenges:

- Many organizations don't have the in-house skill sets they need to plan, deploy, manage and mine big data.

- Managing a big data environment is a complex and time-consuming undertaking that must consider both the infrastructure and data, while ensuring that all the pieces fit together.

- Securing data and protecting personally identifiable information is complicated by the types of data, amounts of data and the diverse origins of that data.

- Scaling infrastructure to meet performance and storage requirements can be a complex and costly process.

- Ensuring data quality and integrity can be difficult to achieve when working with large quantities of heterogeneous data.

- Analyzing large sets of heterogeneous data can be time-consuming and resource-intensive, and it does not always lead to actionable insights or predictable outcomes.

- Ensuring that you have the right tools in place and that they all work together brings its own set of challenges.

- The cost of infrastructure, software and personnel can quickly add up, and those costs can be difficult to keep under control.

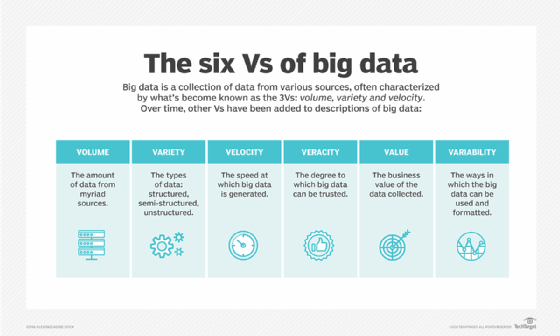

5. What are the five Vs of big data?

Big data is often discussed in terms of the following five Vs:

- The vast amounts of data that are collected from multiple heterogeneous sources.

- The various formats of structured, semi-structured and unstructured data, from social media, IoT devices, database tables, web applications, streaming services, machinery, business software and other sources.

- The ever-increasing rate at which data is being generated on all fronts in all industries.

- The degree of accuracy of collected data, which can vary significantly from one source to the next.

- The potential business value of the collected data.

Interviewers might ask for only four Vs, rather than five. In which case, they're usually looking for the first four (volume, variety, velocity and veracity). If this happens in your interview, you might also mention that there is sometimes a fifth V: value. To impress your interviewer even further, you can mention yet another V: variability, which refers to the ways in which the data can be used and formatted.

6. What are the key steps in deploying a big data platform?

There is no one formula that defines exactly how a big data platform should be implemented. However, it's generally accepted that rolling out a big data platform follows these three basic steps:

- Data ingestion. Start out by collecting data from multiple sources, such as social media platforms, log files or business documentation. Data ingestion might be an ongoing process in which data is continuously collected to support real-time analytics, or it might be collected at defined intervals to meet specific business requirements.

- Data storage. After extracting the data, store it in a database, which might be the Hadoop Distributed File System (HDFS), Apache HBase or another NoSQL database.

- Data processing. The final step is to prepare the data so it is readily available for analysis. For this, you'll need to implement one or more frameworks that have the capacity handle massive data sets, such as Hadoop, Apache Spark, Flink, Pig or MapReduce, to name a few.

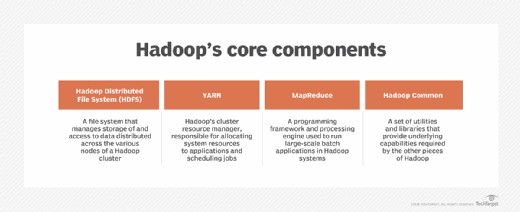

7. What is Hadoop and what are its main components?

Hadoop is an open source distributed processing framework for handling large data sets across computer clusters. It can scale up to thousands of machines, each supporting local computation and storage. Hadoop can process large amounts of different data types and distribute the workloads across multiple nodes, which makes it a good fit for big data initiatives.

The Hadoop platform includes the following four primary modules (components):

- Hadoop Common. A collection of utilities that support the other modules.

- Hadoop Distributed File System (HDFS). A key component of the Hadoop ecosystem that serves as the platform's primary data storage system, while providing high-throughput access to application data.

- Hadoop YARN (Yet Another Resource Negotiator). A resource management framework that schedules jobs and allocates system resources across the Hadoop ecosystem.

- Hadoop MapReduce. A YARN-based system for parallel processing large data sets.

8. Why is Hadoop so popular in big data analytics?

Hadoop is effective in dealing with large amounts of structured, unstructured and semi-structured data. Analyzing unstructured data isn't easy, but Hadoop's storage, processing and data collection capabilities make it less onerous. In addition, Hadoop is open source and runs on commodity hardware, so it is less costly than systems that rely on proprietary hardware and software.

One of Hadoop's biggest selling points is that it can scale up to support thousands of hardware nodes. Its use of HDFS facilitates rapid data access across all nodes in a cluster, and its inherent fault tolerance makes it possible for applications to continue to run even if individual nodes fail. Hadoop also stores data in its raw form, without imposing any schemas. This allows each team to decide later how to process and filter the data, based on their specific requirements at the time.

As a follow-on from this question, please define the following four terms, specifically in the context of Hadoop:

9. Open source

Hadoop is an Open Source platform. As a result, users can access and modify the source code to meet their specific needs. Hadoop is licensed under Apache License 2.0, which grants users a "perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form." Because Hadoop is open source and has been so widely implemented, it has a large and active user community for helping to resolve issues and improving the product.

10. Scalability

Hadoop can be scaled out to support thousands of hardware nodes, using only commodity hardware. Organizations can start out with smaller systems and then scale out by adding more nodes to their clusters. They can also scale up by adding resources to the individual nodes. This scalability makes it possible to ingest, store and process the vast amounts of data typical of a big data initiative.

11. Data recovery

Hadoop replication provides built-in fault tolerance capabilities that protect against system failure. Even if a node fails, applications can keep running while avoiding any loss of data. HDFS stores files in blocks that are replicated to ensure fault tolerance, helping to improve both reliability and performance. Administrators can configure block sizes and replication factors on a per-file basis.

12. Data locality

Hadoop moves the computation close to where data resides, rather than moving large sets of data to computation. This helps to reduce network congestion while improving the overall throughput.

13. What are some vendor-specific distributions of Hadoop?

Several vendors now offer Hadoop-based products. Some of the more notable products include the following:

- Cloudera.

- MapR.

- Amazon EMR (Elastic MapReduce).

- Microsoft Azure HDInsight.

- IBM InfoSphere Information Server.

- Hortonworks Data Platform.

14. What are some of the main configuration files used in Hadoop?

The Hadoop platform provides multiple configuration files for controlling cluster settings, including the following:

- 7adoop-env.sh. Site-specific environmental variables for controlling Hadoop scripts in the bin directory.

- yarn-env.sh. Site-specific environmental variables for controlling YARN scripts in the bin directory.

- mapred-site.xml. Configuration settings specific to MapReduce, such as the MapReduce.framework.name setting.

- core-site.xml. Core configuration settings, such as the I/O configurations common to HDFS and MapReduce.

- yarn-site.xml. Configuration settings specific to YARN's ResourceManager and NodeManager.

- hdfs-site.xml. Configuration settings specific to HDFS, such as the file path where the NameNode stores the namespace and transactions logs.

15. What is HDFS and what are its main components?

HDFS is a distributed file system that serves as Hadoop's default storage environment. It can run on low-cost commodity hardware, while providing a high degree of fault tolerance. HDFS stores the various types of data in a distributed environment that offers high throughput to applications with large data sets. HDFS is deployed in a primary/secondary architecture, with each cluster supporting the following two primary node types:

- NameNode. A single primary node that manages the file system namespace, regulates client access to files and processes the metadata information for all the data blocks in the HDFS.

- DataNode. A secondary node that manages the storage attached to each node in the cluster. A cluster typically contains many DataNode instances, but there is usually only one DataNode per physical node. Each DataNode serves read and write requests from the file system's clients.

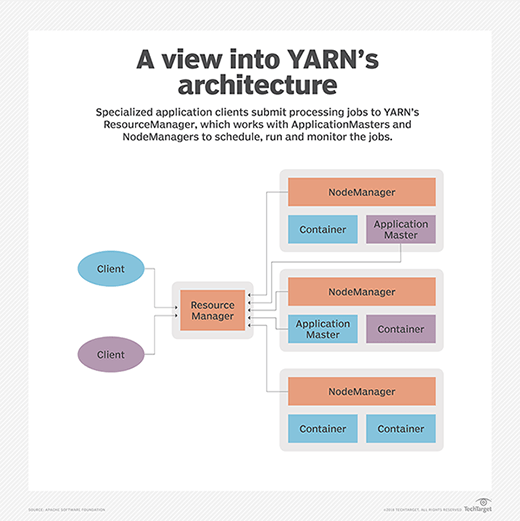

16. What is Hadoop YARN and what are its main components?

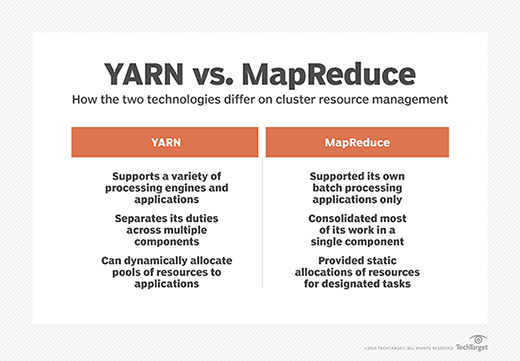

Hadoop YARN manages resources and provides an execution environment for required processes, while allocating system resources to the applications running in the cluster. It also handles job scheduling and monitoring. YARN decouples resource management and scheduling from the data processing component in MapReduce.

YARN separates resource management and job scheduling into the following two daemons:

- ResourceManager. This daemon arbitrates resources for the cluster's applications. It includes two main components: Scheduler and ApplicationsManager. The Scheduler allocates resources to running applications. The ApplicationsManager has multiple roles: accepting job submissions, negotiating the execution of the application-specific ApplicationMaster and providing a service for restarting the ApplicationMaster container on failure.

- NodeManager. This daemon launches and manages containers on a node and uses them to run specified tasks. NodeManager also runs services that determine the health of the node, such as performing disk checks. Moreover, NodeManager can execute user-specified tasks.

17. What are Hadoop's primary operational modes?

Hadoop supports three primary operational nodes.

- Standalone. Also referred to as Local mode, the Standalone mode is the default mode. It runs as a single Java process on a single node. It also uses the local file system and requires no configuration changes. The Standalone mode is used primarily for debugging purposes.

- Pseudo-distributed. Also referred to as a single-node cluster, the Pseudo-distributed mode runs on a single machine, but each Hadoop daemon runs in a separate Java process. This mode also uses HDFS, rather than the local file system, and it requires configuration changes. This mode is often used for debugging and testing purposes.

- Fully distributed. This is the full production mode, with all daemons running on separate nodes in a primary/secondary configuration. Data is distributed across the cluster, which can range from a few nodes to thousands of nodes. This mode requires configuration changes but offers the scalability, reliability and fault tolerance expected of a production system.

18. What are three common input formats in Hadoop?

Hadoop supports multiple input formats, which determine the shape of the data when it is collected into the Hadoop platform. The following input formats are three of the most common:

- Text. This is the default input format. Each line within a file is treated as a separate record. The records are saved as key/value pairs, with the line of text treated as the value.

- Key-value text. This input format is similar to the text format, breaking each line into separate records. Unlike the text format, which treats the entire line as the value, the key-value text format breaks the line itself into a key and a value, using the tab character as a separator.

- Sequence file. This format reads binary files that store sequences of user-defined key-value pairs as individual records.

Hadoop supports other input formats as well, so maintain a good understanding of them as well.

19. What makes an HDFS environment fault-tolerant?

HDFS easily replicates data to different DataNodes. HDFS breaks files down into blocks that are distributed across nodes in the cluster. Each block is also replicated to other nodes. If one node fails, the other nodes take over, letting applications to access the data through one of the backup nodes.

20. What is rack awareness in Hadoop clusters?

Rack awareness is one of the mechanisms used by Hadoop to optimize data access when processing client read and write requests. When a request comes in, the NameNode identifies and selects the nearest DataNodes, preferably those on the same rack or on nearby racks. Rack awareness can help improve performance and reliability, while reducing network traffic. Rack awareness can also play a role in fault tolerance. For example, the NameNode places data block replicas on separate racks to ensure continued access in case a network switch fails or a rack becomes unavailable for other reasons.

21. How does Hadoop protect data against unauthorized access?

Hadoop uses the Kerberos network authentication protocol to protect data from unauthorized access. Kerberos uses secret-key cryptography to provide strong authentication for client/server applications. A client must undergo the following three basic steps -- each of which involves message exchanges with the server -- to prove its identity to that server:

- Authentication. The client sends an authentication request to the Kerberos authentication server. The server verifies the client and sends the client a ticket granting ticket (TGT) and a session key.

- Authorization. Once authenticated, the client requests a service ticket from the ticket granting server (TGS). The TGT must be included with the request. If the TGS authenticates the client, it sends the service ticket and credentials necessary to access the requested resource.

- Service request. The client sends its request to the Hadoop resource it is trying to access. The request must include the service ticket issued by the TGS.

22. What is speculative execution in Hadoop?

Speculative execution is an optimization technique that Hadoop uses when it detects that a DataNode is executing a task too slowly. There can be many reasons for a slowdown, and it can be difficult to determine its actual cause. Rather than trying to diagnose and fix the problem, Hadoop identifies the task in question and launches an equivalent task -- the speculative task -- as a backup. If the original task completes before the speculative task, Hadoop kills that speculative task.

23. What is the purpose of the JPS command in Hadoop?

JPS, which is short for Java Virtual Machine Process Status, is a command used to check the status of the Hadoop daemons, specifically NameNode, DataNode, ResourceManager and NodeManager. Administrators can use the command to verify whether the daemons are up and running. The tool returns the process ID and process name of each Java Virtual Machine (JVM) running on the target system.

24. What commands can you use to start and stop all the Hadoop daemons at one time?

You can use the following command to start all the Hadoop daemons:

./sbin/start-all.sh

You can use the following command to stop all the Hadoop daemons:

./sbin/stop-all.sh

25. What is an edge node in Hadoop?

An edge node is a computer that acts as an end-user portal for communicating with other nodes in a Hadoop cluster. An edge node provides an interface between the Hadoop cluster and an outside network. For this reason, it is also referred to as a gateway node or edge communication node. Edge nodes are often used to run administration tools or client applications. They typically do not run any Hadoop services.

26. What are the key differences between NFS and HDFS?

NFS, which stands for Network File System, is a widely implemented distributed file system protocol used extensively in network-attached storage (NAS) systems. It is one of the oldest distributed file storage systems and is well-suited to smaller data sets. NAS makes data available over a network but accessible like files on a local machine.

HDFS is a more recent technology. It is designed for handling big data workloads, providing high throughput and high capacity, far beyond the capabilities of an NFS-based system. HDFS also offers integrated data protections that safeguard against node failures. NFS is typically implemented on single systems that do not include the inherent fault tolerance that comes with HDFS. However, NFS-based systems are usually much less complicated to deploy and maintain than HDFS-based systems.

27. What is commodity hardware?

Commodity hardware is a device or component that is widely available, relatively inexpensive and can typically be used interchangeably with other components. Commodity hardware is sometimes referred to as off-the-shelf hardware because of its ready availability and ease of acquisition. Organizations often choose commodity hardware over proprietary hardware because it is cheaper, simpler and faster to acquire, and it is easier to replace all or some of the components in the event of hardware failure. Commodity hardware might include servers, storage systems, network equipment or other components.

28. What is MapReduce?

MapReduce is a software framework in Hadoop that's used for processing large data sets across a cluster of computers in which each node includes its own storage. MapReduce can process data in parallel on these nodes, making it possible to distribute input data and collate the results. In this way, Hadoop can run jobs split across a massive number of servers. MapReduce also provides its own level of fault tolerance, with each node periodically reporting its status to a primary node. In addition, MapReduce offers native support for writing Java applications, although you can also write MapReduce applications in other programming languages.

29. What are the two main phases of a MapReduce operation?

A MapReduce operation can be divided into the following two primary phases:

- Map phase. MapReduce processes the input data, splits it into chunks and maps those chunks in preparation for analysis. MapReduce runs these processes in parallel.

- Reduce phase. MapReduce processes the mapped chunks, aggregating the data based on the defined logic. The output of these phases is then written to HDFS.

MapReduce operations are sometimes divided into phases other than these two. For example, the Reduce phase might be split into the Shuffle phase and the Reduce phase. In some cases, you might also see a Combiner phase, which is an optional phase used to optimize MapReduce operations.

30. What is feature selection in big data?

Feature selection refers to the process of extracting only specific information from a data set. This can reduce the amount of data that needs to be analyzed, while improving the quality of that data used for analysis. Feature selection makes it possible for data scientists to refine the input variables they use to model and analyze the data, leading to more accurate results, while reducing the computational overhead.

Data scientists use sophisticated algorithms for feature selection, which usually fall into one of the following three categories:

- Filter methods. A subset of input variables is selected during a preprocessing stage by ranking the data based on such factors as importance and relevance.

- Wrapper methods. This approach is a resource-intensive operation that uses machine learning and predictive analytics to try to determine which input variables to keep, usually providing better results than filter methods.

- Embedded methods. Embedded methods combine attributes of both the file and wrapper methods, using fewer computational resources than wrapper methods, while providing better results than filter methods. However, embedded methods are not always as effective as wrapper methods.

31. What is an "outlier" in the context of big data?

An outlier is a data point that's abnormally distant from others in a group of random samples. The presence of outliers can potentially mislead the process of machine learning and result in inaccurate models or substandard outcomes. In fact, an outlier can potentially bias an entire result set. That said, outliers can sometimes contain nuggets of valuable information.

32. What are two common techniques for detecting outliers?

Analysts often use the following two techniques to detect outliers:

- Extreme value analysis. This is the most basic form of outlier detection and is limited to one-dimensional data. Extreme value analysis determines the statistical details of the data distribution. The Altman Z-score is a good example of extreme value analysis.

- Probabilistic and statistical models. The models determine the unlikely instances from a probabilistic model of data. Data points with a low probability of membership are marked as outliers. However, these models assume that the data adheres to specific distributions. A common example of this type of outlier detection is the Bayesian probabilistic model.

These are only two of the core methods used to detect outliers. Other approaches include linear regression models, information theoretic models, high-dimensional outlier detection methods and other approaches.

33. What is the FSCK command used for?

FSCK, which stands for file system consistency check, is an HDFS filesystem checking utility that generates a summary report about the file system's status. However, the report merely identifies the presence of errors; it does not correct them. The FSCK command can be executed against an entire system or a select subset of files.

34. Are you open to gaining additional learning and qualifications that could help you advance your career with us?

Here's your chance to demonstrate your enthusiasm and career ambitions. Of course, your answer will depend on your current level of academic qualifications and certifications, as well as your personal circumstances, which might include family responsibilities and financial considerations. Therefore, respond forthrightly and honestly. Bear in mind that many courses and learning modules are readily available online. Moreover, analytics vendors have established training courses aimed at those seeking to upskill themselves in this domain. You can also inquire about the company's policy on mentoring and coaching.

35. Do you have any questions for us?

As mentioned earlier, it's a good rule of thumb to go to interviews with a few prepared questions. But depending on how the conversation has unfolded during the interview, you might choose not to ask them. For instance, if they've already been answered or the discussion has sparked other, more pertinent queries in your mind, you can put your original questions aside.

Also, be aware of how you time your questions, taking your cue from the interviewer. Depending on the circumstances, asking questions during the interview is acceptable, although it's generally more common to hold off on your questions until the end of the interview. That said, you should never hesitate to ask for clarification on a question the interviewer asks.

A final word on big data interview questions

Remember, the process doesn't end after an interview has ended. After the session, send a note of thanks to the interviewer(s) or your point(s) of contact. Follow this up with a secondary message if you haven't received any feedback within a few days.

The world of big data is expanding continuously and exponentially. If you're serious and passionate about the topic and prepared to roll up your sleeves and work hard, the sky's the limit.

Editor's note: This article was updated in February 2025.

Robert Sheldon is a freelance technology writer. He has written numerous books, articles and training materials on a wide range of topics, including big data, generative AI, 5D memory crystals, the dark web and the 11th dimension.

Elizabeth Davies is a technology writer and content development professional with more than two decades of experience in consulting, strategizing and collaborating with C-suite and other senior information and communication technology experts.