
6 steps in fact-checking AI-generated content

Generative AI tools are now prevalent, yet the extent of their accuracy isn't always clear. Users should question an AI's outputs and verify them before accepting them as fact.

Information disseminated online is increasingly created with the help of artificial intelligence, but such tools are far from perfect.

Earlier in 2024, Google Search users reported that the search engine's AI Overviews feature gave them misleading information, such as advising them to use glue to keep cheese from sliding off pizza and to eat one rock per day. This is known as an AI hallucination.

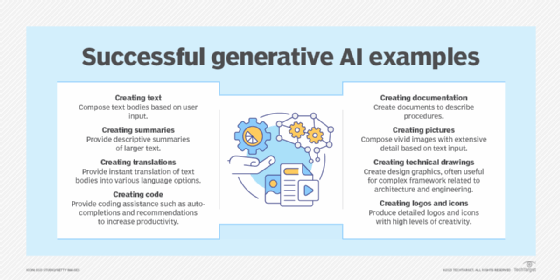

In addition to text, generative AI tools can produce audio, video and imagery. This AI-generated content can appear in research outlines, social media posts, product descriptions, blog posts and emails, and all of it should be scrutinized. When used incorrectly, AI can mislead the public, as in the Google example; compromise data privacy; create biased or discriminatory content; and further erode public trust in new technologies.

Six steps to fact-check AI-generated content

While everyone should verify information they find on the internet, it's even more important for content creators who use AI content generators -- such as OpenAI's ChatGPT and Google's Gemini -- for assistance. Double-checking AI outputs against credible sources can prevent the spread of misinformation and disinformation.

There are multiple steps involved in fact-checking AI-generated content, including the following:

1. Research key points to separate fact from fiction

Even if the content generator appears to have provided well-written and accurate information, users should stay alert to false information. Make a list of key points in the AI's response and fact-check each one against credible sources. Key points to check include the following:

- Names and titles.

- Quotations.

- Company names.

- Any numbers -- including dates, statistics and ages.

- Sequence of events.

This is worth the time and effort, because basing an entire article on information that turns out to be wrong can mislead readers and damage the writer's credibility.
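For text outputs, part of building that list of key points can be automated. The following Python sketch is a rough heuristic, not a fact-checker: it uses hypothetical regular expressions to pull numbers, quoted passages and capitalized multi-word names out of an AI response so a human can tick each item off. It will miss single-word or unusually capitalized names (such as "OpenAI") and cannot detect sequences of events, so treat its output only as a starting checklist.

```python
import re

# Hypothetical heuristic patterns; tune them for your own content.
# Numbers: integers, comma-grouped thousands, decimals and percentages.
NUMBER_RE = re.compile(r"\b\d+(?:,\d{3})*(?:\.\d+)?%?")
# Quotations: anything between a pair of straight double quotes.
QUOTE_RE = re.compile(r"\"([^\"]+)\"")
# Names: two or more consecutive capitalized words (e.g., "Jane Doe").
NAME_RE = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b")


def build_checklist(ai_text: str) -> dict[str, list[str]]:
    """Collect items from an AI response that a human should verify by hand."""
    return {
        "numbers": NUMBER_RE.findall(ai_text),
        "quotations": QUOTE_RE.findall(ai_text),
        "names": NAME_RE.findall(ai_text),
    }


if __name__ == "__main__":
    # Hypothetical AI output used only to demonstrate the checklist.
    sample = 'In 2023, Jane Doe said "growth was 12.5%" at Acme Corp.'
    for category, items in build_checklist(sample).items():
        for item in items:
            print(f"[ ] {category}: {item}")
```

Every item the sketch surfaces still needs to be checked against a credible source; the script only reduces the chance of overlooking one.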

2. Examine the plausibility of suspicious claims

Watch for outlandish claims in AI-generated text and audio, as well as visual elements in images and videos that seem out of place. Every claim or image an AI tool produces should be verified, but if something immediately stands out as implausible, users might want to consider discontinuing use of that tool. For example, if a tool asserts that a European country has a population of a billion people and backs the figure up with fabricated claims, a human can recognize the tool as unreliable without any research.

3. Validate AI outputs in depth using multiple sources

An AI tool's outputs likely won't cite the sources in its training data directly. Because the original sources can be hard to pinpoint, comparable sources can be used to verify the information instead. Consulting multiple sources is encouraged, including the following:

- Authoritative websites -- including well-known news outlets and organizations.

- Research studies.

- Academic journals.

4. Use independent fact-checking sites and tools to save time

Dedicated fact-checking sites and tools, such as FactCheck.org, Google Fact Check Tools, IBM Watson Discovery and Microsoft's natural language processing (NLP) services, can save time. While websites and research studies offer in-depth information on specific topics, fact-checking tools can quickly substantiate or disprove simpler claims, reducing the need for painstaking research.
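Google Fact Check Tools can also be queried programmatically. The Python sketch below assumes the Fact Check Tools API's `claims:search` endpoint (version `v1alpha1`) and a valid Google API key; the helper names `build_search_url`, `summarize_reviews` and `search_claims` are hypothetical, and the endpoint, parameters and response fields should be confirmed against Google's current documentation before relying on them.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint of Google's Fact Check Tools API; confirm against current docs.
SEARCH_URL = "https://factchecktools.googleapis.com/v1alpha1/claims:search"


def build_search_url(query: str, api_key: str, language: str = "en") -> str:
    """Build a claims:search request URL for a claim taken from AI output."""
    params = {"query": query, "languageCode": language, "key": api_key}
    return f"{SEARCH_URL}?{urllib.parse.urlencode(params)}"


def summarize_reviews(response: dict) -> list[tuple[str, str, str]]:
    """Flatten a claims:search JSON response into (claim, publisher, rating) rows."""
    rows = []
    for claim in response.get("claims", []):
        for review in claim.get("claimReview", []):
            publisher = review.get("publisher", {}).get("name", "unknown")
            rows.append((claim.get("text", ""), publisher, review.get("textualRating", "")))
    return rows


def search_claims(query: str, api_key: str) -> list[tuple[str, str, str]]:
    """Send the request (requires network access and a real key) and summarize it."""
    with urllib.request.urlopen(build_search_url(query, api_key), timeout=10) as resp:
        return summarize_reviews(json.load(resp))
```

The API only returns claims that fact-checking organizations have already reviewed, so an empty result means no published review was found, not that a claim is true.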

5. Reach out to subject matter experts if needed

To address any remaining uncertainties, reach out to subject matter experts directly. When unsure of an AI's outputs -- possibly because the topic at hand is new or niche and not covered extensively online -- credible websites or databases often provide contact information for qualified individuals, including email addresses or phone numbers.

6. Proofread the content

After human fact-checkers have verified all the facts, proofread the document. Like humans, AI systems can misspell words, use poor grammar and produce confusing wording. Tools such as Grammarly can help with this process, but a human should still review the final text.

Drawing conclusions about AI content generators

After following these steps, content creators and readers can draw conclusions about whether an AI content generator or website relying on AI-generated content is credible.

A reader might conclude that false AI-generated content has compromised a particular website's credibility if much of its information is incorrect or biased. Content creators who spotted many problems in content from a faulty AI tool could either continue using the tool with an abundance of caution or rule it out entirely and switch to a new one. Conversely, outputs found to be entirely or mostly accurate indicate a reliable generative AI tool.

Cameron Hashemi-Pour is a technology writer for WhatIs. Before joining TechTarget, he graduated from the University of Massachusetts Dartmouth and received his MFA in Professional Writing/Communications. He then worked at Context Labs (BV), a software company based in Cambridge, MA, as a Technical Editor.