History and evolution of machine learning: A timeline

Machine learning's legacy stretches from the earliest neural networks to recent advances in generative AI that democratize new and controversial ways to create content.

Call it what you want: AI's offshoot, AI's stepchild, AI's second banana, AI's sidekick, AI's lesser-known twin. Machine learning lacks the cachet bestowed upon artificial intelligence, yet just about every aspect of our lives and livelihoods is influenced by this "ultimate statistician" and what it hath wrought since it was merely a twinkle in the eyes of logician Walter Pitts and neuroscientist Warren McCulloch eight decades ago. Their mathematical model of a neural network in 1943 marks ML's consensus birth year.

What is machine learning?

Machine learning is the development and use of computer systems that learn and adapt without following explicit instructions. It uses algorithms and statistical models to find patterns in data and make predictions from them.
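To make the idea concrete, here is a minimal Python sketch, not drawn from any system in this timeline, of a program that learns a rule from examples instead of having the rule written in by hand. It assumes the simplest statistical model, a straight line fitted by ordinary least squares, and uses made-up data; `fit_line` and `predict_line` are illustrative names.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict_line(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical observations: hours of machine use vs. measured wear.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
wear = [2.1, 3.9, 6.2, 7.8, 10.0]

model = fit_line(hours, wear)      # the rule is estimated from data, not hand-coded
print(predict_line(model, 6.0))    # prediction for an input the model never saw
```

No line of the program states that wear rises by roughly two units per hour; that relationship is estimated from the examples, which is the sense in which the system learns rather than follows explicit instructions.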

In some regards, machine learning might be AI's puppet master. Much of what propels generative AI comes from machine learning in the form of large language models (LLMs) that analyze vast amounts of input data to discover patterns in words and phrases.

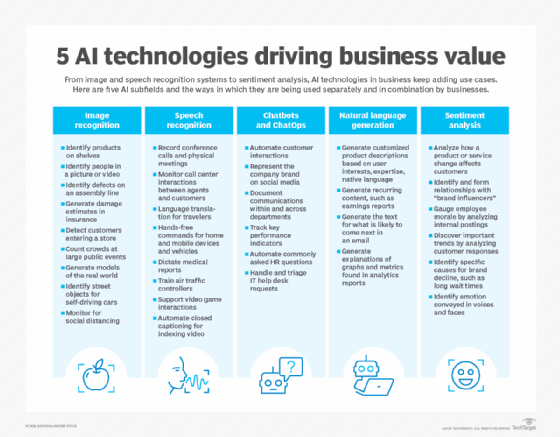

Many of AI's unprecedented applications in business and society are supported by machine learning's wide-ranging capabilities, whether it's digesting Instagrams or analyzing mammograms, assessing risks or predicting failures, or navigating roadways or thwarting cyberattacks we never hear about. Machine learning's omnipresence impacts the daily business operations of most industries, including e-commerce, manufacturing, finance, insurance services and pharmaceuticals.

Walk along the machine learning timeline

In the decades since the 1940s, machine learning's evolution has included these notable developments:

- Pioneers named Turing, Samuel, McCarthy, Minsky, Edmonds and Newell dotted the machine learning landscape in the 1950s, when the Turing test, the first artificial neural network, and the terms artificial intelligence and machine learning were conceived.

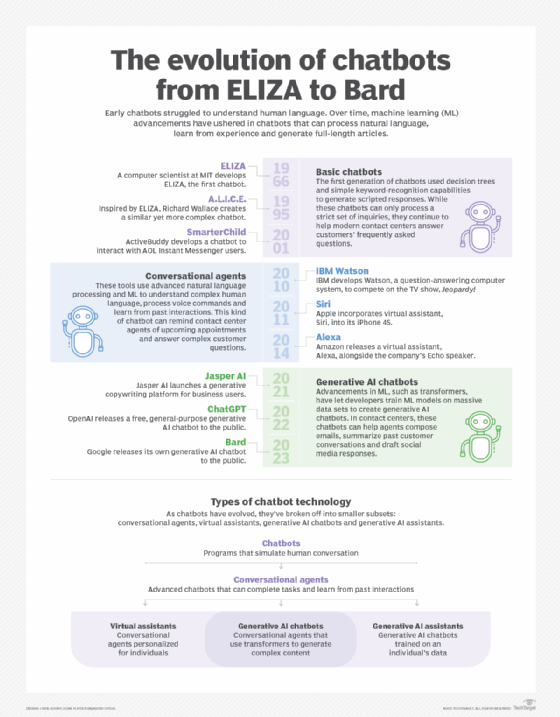

- Eliza, the first chatbot; Shakey, the first mobile intelligent robot; Stanford Cart, a video-controlled remote vehicle; and the foundations of deep learning were developed in the 1960s.

- Programs that recognize patterns and handwritten characters, solve problems based on natural selection, seek appropriate actions, create rules to discard unimportant information, and learn to pronounce words the way a baby does highlighted the 1970s and 1980s.

- Programs capable of playing backgammon and chess threatened the domains of top-tier backgammon players and the reigning world chess champion in the 1990s.

- IBM Watson defeated the all-time Jeopardy! champion in 2011. (Do you recognize a pattern here?) Personal assistants, generative adversarial networks, facial recognition, deepfakes, motion sensing, autonomous vehicles, multimodal chatbot interfaces to LLMs, and content and image creation through the democratization of AI tools have emerged so far in the 2000s.

1943

Logician Walter Pitts and neuroscientist Warren McCulloch published the first mathematical model of a neural network, an effort to create algorithms that mimic human thought processes.

1949

Donald Hebb published The Organization of Behavior: A Neuropsychological Theory, a seminal book that explained behavior and thought in terms of brain activity and shaped the development of neural networks and machine learning.

1950

Alan Turing published Computing Machinery and Intelligence, which introduced the Turing test and opened the door to what would be known as AI.

1951

Marvin Minsky and Dean Edmonds developed SNARC, the first artificial neural network (ANN), using 3,000 vacuum tubes to simulate a network of 40 neurons.

1952

Arthur Samuel created the Samuel Checkers-Playing Program, the world's first self-learning program to play games.

1956

John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon coined the term artificial intelligence in a proposal for a workshop widely recognized as a founding event of the AI field.

Allen Newell, Herbert Simon and Cliff Shaw wrote Logic Theorist, the first AI program deliberately engineered to perform automated reasoning.

1958

Frank Rosenblatt developed the perceptron, an early ANN that could learn from data and became the foundation for modern neural networks.
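The perceptron learned by nudging its weights whenever it misclassified an example. A minimal Python sketch of that error-correction rule follows; the step activation, the learning rate of 1 and the logical AND training set are illustrative assumptions rather than details of Rosenblatt's original work, and `train_perceptron` and `perceptron_predict` are hypothetical names.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0

def perceptron_predict(weights, bias, x):
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_perceptron(samples, labels, epochs=20, lr=1.0):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            # Error-correction rule: adjust weights only when the prediction is wrong.
            error = target - perceptron_predict(weights, bias, x)
            if error != 0:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # every example classified correctly; stop early
            break
    return weights, bias

# Hypothetical training data: the logical AND function.
and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]

w, b = train_perceptron(and_inputs, and_labels)
print([perceptron_predict(w, b, x) for x in and_inputs])
```

Because AND is linearly separable, the loop stops once a full pass produces no mistakes. A single perceptron of this kind cannot learn XOR, which is not linearly separable, one of the limitations later examined in Perceptrons.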

1959

Arthur Samuel coined the term machine learning in a seminal paper explaining that the computer could be programmed to outplay its programmer.

Oliver Selfridge published "Pandemonium: A Paradigm for Learning," a landmark contribution to machine learning that described a model capable of adaptively improving itself to find patterns in events.

1960

Mechanical engineering graduate student James Adams constructed the Stanford Cart to support his research on the problem of controlling a remote vehicle using video information.

1963

Donald Michie developed a program named MENACE, or Matchbox Educable Noughts and Crosses Engine, which learned how to play a perfect game of tic-tac-toe.

1965

Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg and Carl Djerassi developed DENDRAL, the first expert system, which assisted organic chemists in identifying unknown organic molecules.

1966

Joseph Weizenbaum created the computer program Eliza, which could engage in conversations with humans and make them believe the software had human-like emotions.

Stanford Research Institute developed Shakey, the world's first mobile intelligent robot that combined AI, computer vision, navigation capabilities and natural language processing (NLP). It became known as the grandfather of self-driving cars and drones.

1967

The nearest neighbor algorithm gave computers the capability for basic pattern recognition and was also used to plan routes for traveling salespeople by always heading to the nearest unvisited city, a fast heuristic that does not guarantee the shortest route.
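The pattern-recognition side of the nearest neighbor algorithm can be sketched in a few lines of Python: a new data point takes the label of the most similar stored example. The two-dimensional points, the labels "A" and "B", and the use of Euclidean distance via `math.dist` (Python 3.8 or later) are illustrative assumptions; `nearest_neighbor_label` is a hypothetical name.

```python
import math

# Hypothetical labeled examples: (feature vector, label).
TRAINING_SET = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((6.0, 5.0), "B"),
    ((7.0, 6.5), "B"),
]

def nearest_neighbor_label(training_set, query):
    """Classify `query` with the label of its closest example (Euclidean distance)."""
    _, label = min(training_set, key=lambda example: math.dist(example[0], query))
    return label

print(nearest_neighbor_label(TRAINING_SET, (2.0, 1.5)))
print(nearest_neighbor_label(TRAINING_SET, (6.5, 6.0)))
```

There is no training phase beyond storing the examples; all the work happens at query time. Practical variants usually let the k closest examples vote rather than trusting a single neighbor.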

1969

Arthur Bryson and Yu-Chi Ho described a backpropagation learning algorithm to enable multilayer ANNs, an advancement over the perceptron and a foundation for deep learning.

Marvin Minsky and Seymour Papert published Perceptrons, which described the limitations of simple neural networks and caused neural network research to decline and symbolic AI research to thrive.

1973

James Lighthill released the report "Artificial Intelligence: A General Survey," which led to the British government significantly reducing support for AI research.

1979

Kunihiko Fukushima published work on the neocognitron, a hierarchical, multilayered ANN used for pattern recognition tasks.

1981

Gerald DeJong introduced explanation-based learning, in which a computer learned to analyze training data and create a general rule for discarding information deemed unimportant.

1985

Terry Sejnowski created a program named NetTalk, which learned to pronounce words the way a baby does.

1989

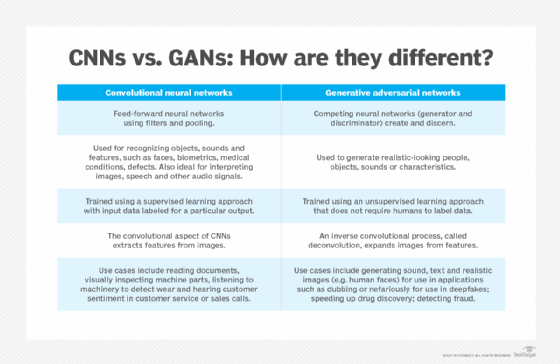

Yann LeCun, Yoshua Bengio and Patrick Haffner demonstrated how convolutional neural networks (CNNs) can be used to recognize handwritten characters, showing that neural networks could be applied to real-world problems.

Christopher Watkins developed Q-learning, a model-free reinforcement learning algorithm that learns the best action to take in any given state.
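Q-learning keeps a table of estimated values Q(s, a) for every state-action pair and, after each step, moves the estimate toward the reward plus the discounted value of the best action in the next state: Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)]. The Python sketch below applies that rule to a hypothetical five-cell corridor where only reaching the last cell pays a reward; the environment, the epsilon-greedy exploration and the values of α, γ and ε are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # hypothetical corridor: cells 0..4, cell 4 is the goal (terminal)
ACTIONS = (0, 1)      # 0 = move left, 1 = move right

def env_step(state, action):
    """Hypothetical environment: reward 1.0 only when the goal cell is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def choose_action(q_row, epsilon, rng):
    # Epsilon-greedy: explore at random, otherwise take a best action (ties broken randomly).
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = env_step(state, action)
            # Bootstrap from the best next action, whichever action is taken next (off-policy).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = q_learning()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should move right in every non-goal cell. The agent never inspects `env_step` itself; it only sees the rewards that result, which is what makes the method model-free.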

Axcelis released Evolver, the first commercially available genetic algorithm software package for personal computers.

1992

Gerald Tesauro invented TD-Gammon, a backgammon-playing program based on an ANN that rivaled top-tier backgammon players.

1997

Sepp Hochreiter and Jürgen Schmidhuber proposed the Long Short-Term Memory recurrent neural network, which could process entire sequences of data like speech or video.

IBM's Deep Blue defeated Garry Kasparov in a historic chess rematch, the first defeat of a reigning world chess champion by a computer under tournament conditions.

1998

A team led by Yann LeCun released a data set known as the MNIST database, or Modified National Institute of Standards and Technology database, which became widely adopted as a handwriting recognition evaluation benchmark.

2000

University of Montreal researchers published "A Neural Probabilistic Language Model," which suggested a method to model language using feed-forward neural networks.

2002

Torch, the first open source machine learning library, was released, providing interfaces to deep learning algorithms implemented in C.

2006

Psychologist and computer scientist Geoffrey Hinton coined the term deep learning to describe algorithms that help computers recognize different types of objects and text characters in pictures and videos.

Fei-Fei Li began work on the ImageNet visual database, which was introduced in 2009. It became a catalyst for the AI boom and the basis of an annual competition for image recognition algorithms.

Netflix launched the Netflix Prize competition with the goal of creating a machine learning algorithm more accurate than Netflix's proprietary user recommendation software.

IBM Watson originated with the initial goal of beating a human on the Jeopardy! quiz show.

A rose by any other name

The term machine learning might not trigger the same kind of excitement as AI, but ML has been handed some noteworthy synonyms that rival the appeal of artificial intelligence. Among them are cybernetic mind, electrical brain and fully adaptive resonance theory. The names of countless machine learning algorithms that shape ML models and their predictive outcomes cut across the entire alphabet, from Apriori to Z array.

2010

Microsoft released the Kinect motion-sensing input device for its Xbox 360 gaming console, which could track 20 different human features 30 times per second.

Anthony Goldbloom and Ben Hamner launched Kaggle as a platform for machine learning competitions.

2011

Jürgen Schmidhuber, Dan Claudiu Ciresan, Ueli Meier and Jonathan Masci developed the first CNN to achieve "superhuman" performance by winning the German Traffic Sign Recognition competition.

IBM Watson defeated Ken Jennings, Jeopardy!'s all-time human champion.

2012

Geoffrey Hinton, Ilya Sutskever and Alex Krizhevsky introduced a deep CNN architecture that won the ImageNet Large Scale Visual Recognition Challenge and triggered the explosion of deep learning research and implementation.

2013

DeepMind introduced deep reinforcement learning with a CNN that learned from rewards by playing games repeatedly, eventually surpassing human expert levels.

Google researcher Tomas Mikolov and colleagues introduced word2vec to identify semantic relationships between words automatically.

2014

Ian Goodfellow and colleagues invented generative adversarial networks, a class of machine learning frameworks used to generate photos, transform images and create deepfakes.

Google unveiled the Sibyl large-scale machine learning project for predictive user recommendations.

Diederik Kingma and Max Welling introduced variational autoencoders to generate images, videos and text.

Facebook developed DeepFace, a deep learning facial recognition system that can identify human faces in digital images with near-human accuracy.

2016

Uber started a self-driving car pilot program in Pittsburgh for a select group of users.

2017

Google researchers introduced the transformer architecture in the seminal paper "Attention Is All You Need," inspiring subsequent research into models that could be pretrained on large amounts of unlabeled text and, ultimately, into LLMs.

2018

OpenAI released GPT, paving the way for subsequent LLMs.

2019

Microsoft launched the Turing Natural Language Generation generative language model with 17 billion parameters.

A deep learning algorithm developed by Google AI and NYU Langone Medical Center outperformed radiologists in detecting potential lung cancers.

2021

OpenAI introduced the Dall-E multimodal AI system that can generate images from text prompts.

2022

DeepMind unveiled AlphaTensor "for discovering novel, efficient and provably correct algorithms."

OpenAI released ChatGPT in November to provide a chat-based interface to its GPT-3.5 LLM.

2023

OpenAI announced the GPT-4 multimodal LLM, which accepts both text and image prompts.

Elon Musk, Steve Wozniak and thousands of other signatories urged a six-month pause on training "AI systems more powerful than GPT-4."

2024

Nvidia had a breakout year as the go-to AI and graphics chip maker, announcing a series of upgrades, including Blackwell and ACE, that founder and CEO Jensen Huang said would make "interacting with computers … as natural as interacting with humans." The 30-year-old company joined Microsoft and Apple in the $3 trillion valuation club.

Researchers at Princeton University teamed up with the U.S. Defense Advanced Research Projects Agency to develop a more compact, power-efficient AI chip capable of handling modern AI workloads.

Microsoft launched an AI weather-forecasting tool named Aurora that can predict air pollution globally in less than a minute.

Multimodal chatbot wars heated up among OpenAI's ChatGPT; Microsoft's Copilot, formerly known as Bing Chat; and Google's Gemini, formerly known as Bard.

Beyond 2024

Machine learning will continue to ride the coattails of its parent field, artificial intelligence, while powering many of its advances. Generative AI in the near term and AI's ultimate goal of artificial general intelligence in the long term will create even greater demand for data scientists and machine learning practitioners.

More mature and knowledgeable multimodal chatbot interfaces to LLMs could become the everyday AI tool for users from all walks of life to quickly generate text, images, videos, audio and code that would ordinarily take humans hours, days or months to produce.

Machine learning will make further inroads into creative AI, distributed enterprises, gaming, autonomous systems, hyperautomation and cybersecurity. The AI market is projected to grow 35% annually, surpassing $1.3 trillion by 2030, according to MarketsandMarkets. Gartner estimates that a significant share of business applications will embed conversational AI and that some portion of new applications will be generated automatically by AI without human intervention. In the process, business models and job roles could change on a dime.

Expect advances in the following technologies as machine learning becomes more democratized and its models more sophisticated and mainstream:

- Multimodal AI for understanding text inputs and comprehending human gestures and emotions by merging numeric data, text, images and videos.

- Deep learning for more accurately solving real-life complex problems by recognizing patterns in photos, text, audio and other data and automating tasks that would typically require human intelligence.

- Large action models, or LAMs, for understanding and processing multiple types of data inputs as well as human intentions to perform entire end-to-end routines.

- AutoML for better data management and faster model building.

- Embedded ML, or TinyML, for more efficient use of edge computing in real-time processing.

- MLOps for streamlining the development, training and deployment of machine learning systems.

- Low-code/no-code platforms for developing and implementing ML models without extensive coding or technical expertise.

- Unsupervised learning for data labeling and feature engineering without human intervention.

- Reinforcement learning for dishing out rewards or penalties to algorithms based on their actions.

- NLP for more fluent conversational AI in customer interactions and application development.

- Computer vision for more effective healthcare diagnostics and greater support for augmented and virtual reality technologies.

- Digital twins for simulation, diagnosis and development in a wide range of applications from smart city systems to human body parts.

- Neuromorphic processing for mimicking human brain cells, enabling computer programs to work simultaneously instead of sequentially.

- Brain-computer interfaces for restoring useful function to those disabled by neuromuscular disorders such as ALS, cerebral palsy, stroke or spinal cord injury.

In the midst of all these developments, business and society will continue to encounter issues with bias, trust, privacy, transparency, accountability, ethics and humanity that can positively or negatively impact our lives and livelihoods.

Ron Karjian is an industry editor and writer at TechTarget covering business analytics, artificial intelligence, data management, security and enterprise applications.