6 content moderation guidelines to consider

User-generated content can affect a company's reputation. Content moderation helps stop the spread of disinformation and unacceptable posts that can harm or upset others.

Content moderation sets guidelines for acceptable community posts and ensures users follow them.

Disinformation can spread quickly on social media and other user-generated content platforms. In January 2024, pornographic deepfake images of Taylor Swift spread on X, formerly known as Twitter, and one image was viewed 47 million times before the account was suspended. This incident happened after Elon Musk acquired Twitter in 2022 and changed the content moderation policies.

In another January 2024 incident, a YouTube video circulated after a violent killing in Pennsylvania. In the video, a man displayed his father’s head and spouted conspiracy theories. The video stayed up for a few hours and had around 5,000 views before being removed.

The burning question in both incidents is how the content stayed up so long and why content moderation did not block it.

In addition to these extreme examples, user-generated content might also include posts that offend others with violence, hate speech and obscenities.

Content moderation can review posts and check that information is correct before it misinforms or upsets other users.

What is content moderation?

Content moderation includes creating guidelines for, and reviewing, items posted on user-generated content websites such as social media, online marketplaces or forums. These guidelines cover video, multimedia, text and images to give a company control of the content an audience sees. It lets companies filter out information that they find unacceptable or not useful. Companies can determine if posts are appropriate or necessary for their brand and customers.

Why content moderation is not the same as censorship

Content moderation and censorship are two different concepts. Censorship suppresses or prohibits speech for those holding a minority viewpoint. In the U.S., the First Amendment protects citizens from government censorship. Content moderation, however, involves guidelines that private companies set for their own sites, and these guidelines should be clearly stated ahead of time.

Benefits of content moderation include the following:

- removing misinformation and disinformation

- curbing hate speech

- protecting public safety

- eliminating obscenity

- removing paid ads and content unrelated to the site

Privately owned platforms are not required to post information they deem unsuitable or unsafe for their community members. Content moderation makes the community a safe place to share freely and not be bullied, harassed or fed incorrect information.

Why content moderation is important

User-generated content can help brands grow communities, create brand loyalty and establish trust. But to maintain that trust and protect the brand's identity, companies need content moderation to keep users from viewing misleading, disturbing, hurtful or dangerous content.

If a site isn't trustworthy or pleasant, customers might go elsewhere and share their negative experience with other potential customers.

Disinformation can spread quickly and can be dangerous to the community. If users create content that violates guidelines, companies can face consequences when that content is illegal, offensive or disturbing. Cyberbullies and trolls can take advantage of an online community and harm the brand.

Types of content moderation

There are several ways to moderate content, such as human moderation or automated monitoring using machine learning. Some companies opt for a combination of manual and automated reviews. Users might also be able to report unacceptable behavior or content.

Machine learning and artificial intelligence

Artificial intelligence is the latest development in content moderation. AI can read some contextual cues as well as identify and respond to banned behavior immediately. Using machine learning to train automated moderation to look for certain phrases, obscenities or past offenders can make this process quicker and more efficient. It also spares employees the stress of reviewing disturbing content repeatedly.

Because AI uses algorithms to process large amounts of data, it can discover and respond to toxic behaviors quickly and at scale. However, some slang terms or sarcasm can be lost in translation. AI also does not have emotion and may not know if something could be offensive to others.
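The idea of screening for certain phrases and past offenders can be illustrated with a small rule-based filter. The sketch below is a deliberately simple stand-in, not a machine learning model. The phrase list, the `Screener` class and the three outcomes (`block`, `review`, `allow`) are hypothetical names chosen for illustration, and a trained classifier would likely replace the pattern match in practice.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Screener:
    """Rule-based screening sketch; all names and outcomes are illustrative."""

    banned_phrases: list[str]
    past_offenders: set[str] = field(default_factory=set)

    def __post_init__(self):
        # Build one case-insensitive pattern that matches any banned phrase
        # as a whole word or phrase. With no phrases, nothing is blocked.
        if self.banned_phrases:
            escaped = "|".join(re.escape(p) for p in self.banned_phrases)
            self._pattern = re.compile(rf"\b(?:{escaped})\b", re.IGNORECASE)
        else:
            self._pattern = None

    def screen(self, author: str, text: str) -> str:
        # A banned phrase blocks the post outright.
        if self._pattern is not None and self._pattern.search(text):
            return "block"
        # Posts from known past offenders go to a human for review.
        if author in self.past_offenders:
            return "review"
        return "allow"
```

A filter like this cannot detect sarcasm or new slang, which is one reason the automated step is usually paired with human review.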

Human content moderator

Relying strictly on AI content moderation may not be enough. AI-generated images may slip through the cracks. And training AI on human emotions is extremely difficult.

Several companies have full-time employees to monitor content and ensure it follows the company's guidelines. Content moderators -- sometimes referred to as community managers -- make sure contributions are valuable, user behavior is acceptable and group members are engaged.

Human moderation requires manual review, which might be suitable for small businesses. Content moderators can empathize more and find subtle cultural references in the text that AI might miss.

The drawback of human content moderation is that response time is slower than with an automated program. Reviews can also be inconsistent: moderators might have to review large amounts of content, and because the content is open to interpretation, human error means similar posts might not be handled the same way. It can also be difficult and stressful for moderators to handle disturbing content and deal with irate users.

User reports

Having users report toxic behaviors or banned content is a form of crowdsourced content moderation. It gives users a sense of community and builds trust by having them contact the company when they see something negative that affects their experience.

Make sure users know how to report misconduct by providing clear guidelines and direction. Users should know where to go and how their report will be handled.
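One way to handle user reports is to count distinct reporters per post and send the post to a moderator once a threshold is reached. The sketch below assumes that approach. The `ReportTracker` class and the threshold of three reports are hypothetical choices for illustration, not a recommendation from any specific platform.

```python
from collections import defaultdict

REVIEW_THRESHOLD = 3  # assumed value; tune to the size of the community


class ReportTracker:
    """Tracks which users reported which posts (illustrative sketch)."""

    def __init__(self, threshold: int = REVIEW_THRESHOLD):
        self.threshold = threshold
        # Maps each post ID to the set of users who reported it.
        self._reporters = defaultdict(set)

    def report(self, post_id: str, reporter_id: str) -> bool:
        """Record a report; return True when the post needs moderator review."""
        # A set ignores repeat reports from the same user.
        self._reporters[post_id].add(reporter_id)
        return len(self._reporters[post_id]) >= self.threshold
```

Counting distinct reporters rather than raw reports keeps a single user from forcing a review by reporting the same post repeatedly.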

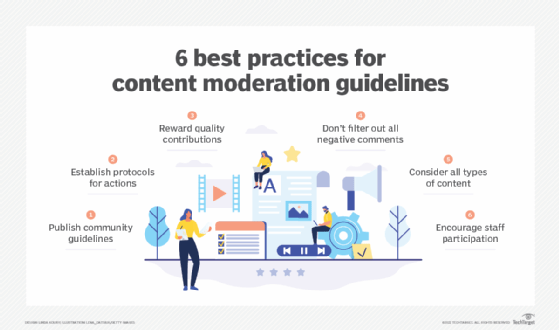

Best practices for content moderation guidelines

When creating content moderation guidelines, be sure to keep these practices in mind:

1. Publish community guidelines

Community guidelines for quality content rely on establishing clear rules and making sure they are visible. Be sure to outline what content, behavior and language are acceptable and unacceptable.

Rules and guidelines should be transparent and reviewed regularly to ensure the actions are reasonable. There is no one-size-fits-all content moderation plan. Keep the audience in mind when drafting it.

Be sure these guidelines are posted in locations users can easily see, such as a link in the navigation menu or in the website footer. These guidelines should also be shared when users register. Be sure to cover multiple languages if necessary to make sure the rules and consequences of breaking them are clear.

2. Establish protocols for actions

Provide clear and actionable sanctions for breaking moderation rules, such as the following:

- content editing

- content removal

- temporary suspension of access

- permanent blocking of access

Set timelines for suspensions, such as a one-month suspension for a first offense. Guidelines should have clear actions or consequences based on the severity of the content. Also, determine what content should be reported to authorities in the most severe cases.

If a company uses automated monitoring, determine which comments should be escalated to employees for review.
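The sanctions and escalation rules above can be written down as a small decision table. The sketch below is one possible arrangement under stated assumptions: the severity labels (`low`, `medium`, `high`), the offense counts and the score thresholds in `route` are all hypothetical values that each company would set for itself.

```python
from enum import Enum


class Sanction(Enum):
    EDIT = "content editing"
    REMOVE = "content removal"
    SUSPEND = "temporary suspension of access"
    BAN = "permanent blocking of access"


def choose_sanction(severity: str, prior_offenses: int) -> Sanction:
    """Pick a sanction from severity and offense history (assumed rules)."""
    if severity not in ("low", "medium", "high"):
        raise ValueError(f"unknown severity: {severity!r}")
    # Severe content or a repeated pattern leads to a permanent block.
    if severity == "high" or prior_offenses >= 2:
        return Sanction.BAN
    # A second offense leads to a temporary suspension.
    if prior_offenses == 1:
        return Sanction.SUSPEND
    # First offense: remove medium-severity content, edit low-severity content.
    return Sanction.REMOVE if severity == "medium" else Sanction.EDIT


def route(score: float) -> str:
    """Route an automated toxicity score in [0, 1] (assumed thresholds)."""
    if score >= 0.9:
        return "auto_remove"   # confident enough to act without a human
    if score >= 0.5:
        return "human_review"  # uncertain cases go to employees
    return "publish"
```

Keeping the rules in one place like this makes them easier to publish alongside the community guidelines and to review on a regular schedule.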

3. Reward quality contributions

Try rewarding contributions to encourage members to be more active in the community. For example, use badges such as "top fan" or "top contributor."

Letting users know they are valued can help engage users and encourage others to participate.

4. Don't filter out all negative comments

The first instinct might be to remove all negative comments, but doing so can harm a brand. It might seem bad to leave these comments, but responding to them appropriately can help maintain transparency with other users.

No one expects a company to have all positive reviews, but people will be interested to see how a company responds to and treats commenting users. If people see a company resolving complaints and addressing customer confusion, it shows the company isn't hiding anything and wants to help its customers.

Decide which negative comments are OK to keep. Remove derogatory comments, but keep ones that complain about products or company mistakes, because responding to them can be a great way to address customers' concerns.

Content moderators can respond to these comments and provide resolutions. Companies should address mistakes and fix issues promptly.

5. Consider all types of content

Don't forget that the user-generated content in these moderation guidelines includes more than written comments. Consider other content, such as images, videos, links and live chats. Content moderation is used to provide a safe user experience across all types of content and viewing.

6. Encourage staff participation

Depending on the business, employees can help set the tone for conversations on social media. Employees can start the conversation and give people directions on what to post. Employees can also help provide informed answers should there be questions in the comments.

Remind employees to adhere to the company's employee social media guidelines for these postings.