What is a supercomputer?

A supercomputer is a highly advanced computer that performs at or near the highest operational rate for computers.

Traditionally, supercomputers have been used for scientific and engineering applications that must handle massive databases, perform a great amount of computation or both. Advances like multicore processors and general-purpose graphics processing units have enabled powerful machines that could be called desktop supercomputers or GPU supercomputers.

By definition, a supercomputer is exceptional in terms of performance. At any time, there are a few well-publicized supercomputers that operate at extremely high speeds relative to all other computers. The term supercomputer is sometimes applied to far slower -- but still impressively fast -- computers.

How do supercomputers work?

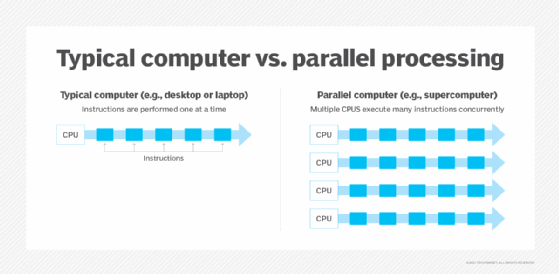

Supercomputer architectures are made up of many compute nodes, each containing one or more central processing units (often paired with GPU accelerators) and memory. A supercomputer can contain thousands of such compute nodes, which communicate with one another over a high-speed interconnect and use parallel processing to solve problems. Figure 1 depicts parallel processing versus typical (serial) computer processing.
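
To make the contrast with serial processing concrete, the following minimal Python sketch splits one large computation into independent chunks, hands each chunk to a separate worker, and then combines the partial results. The function names are hypothetical. Python threads stand in for compute nodes only to show the split-compute-combine pattern. In CPython, threads do not speed up pure-Python arithmetic, and real supercomputer codes usually distribute this kind of work across nodes with a message-passing library such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, n_chunks):
    """Split a list into n_chunks contiguous slices of nearly equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The work each worker performs independently on its own chunk."""
    return sum(x * x for x in chunk)


def serial_sum_of_squares(data):
    """Typical (serial) processing: one worker handles every element in turn."""
    return sum_of_squares(data)


def parallel_sum_of_squares(data, n_workers=4):
    """Parallel processing: split the data, compute partial sums concurrently,
    then combine the partial results into a single answer."""
    chunks = split_into_chunks(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


if __name__ == "__main__":
    numbers = list(range(100_000))
    # Both approaches must produce the same answer.
    print(serial_sum_of_squares(numbers) == parallel_sum_of_squares(numbers, 8))
```

The combine step is what makes the pattern scale: each worker only ever touches its own chunk, so adding workers shortens the compute phase without changing the result.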

The largest, most powerful supercomputers combine many parallel computers into a single system. There are two basic parallel processing approaches: symmetric multiprocessing (SMP), in which processors share one memory and operating system, and massively parallel processing (MPP), in which each processor has its own memory and coordinates with the others by passing messages. In some cases, supercomputers are distributed, meaning they draw computing power from many individual networked PCs in different locations instead of housing all the CPUs in one location.
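
The practical difference between symmetric multiprocessing and massively parallel processing is how workers reach memory. The toy Python model below is a sketch, not how production systems are programmed. In the shared-memory (SMP-like) version, every worker updates one shared total and must coordinate through a lock. In the distributed-memory (MPP-like) version, each worker owns a private copy of its data and reports back only by sending a message, modeled here with `queue.Queue`. The function names are hypothetical, and threads stand in for processors.

```python
import queue
import threading


def smp_style_sum(data, n_workers=4):
    """Shared-memory (SMP-like) model: all workers add into one shared total,
    so updates to that total are serialized with a lock."""
    total = 0
    lock = threading.Lock()

    def worker(rank):
        nonlocal total
        local = sum(data[rank::n_workers])  # strided share of the shared data
        with lock:                          # coordinate access to shared memory
            total += local

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def mpp_style_sum(data, n_workers=4):
    """Distributed-memory (MPP-like) model: each worker gets a private copy of
    its share and communicates only by sending a message to the root."""
    inbox = queue.Queue()  # the only channel between workers and the root

    def worker(rank, private_chunk):
        inbox.put((rank, sum(private_chunk)))  # "send" the partial result

    threads = []
    for r in range(n_workers):
        private_chunk = list(data[r::n_workers])  # a copy: no memory is shared
        t = threading.Thread(target=worker, args=(r, private_chunk))
        threads.append(t)
        t.start()
    for t in threads:
        t.join()
    # The root "receives" one message per worker and combines them.
    return sum(inbox.get()[1] for _ in range(n_workers))


if __name__ == "__main__":
    values = list(range(100))
    print(smp_style_sum(values), mpp_style_sum(values))
```

The lock in the first function is the price of sharing memory, and the explicit messages in the second are the price of not sharing it. This tradeoff is why MPP systems can grow to thousands of nodes, while SMP systems are limited by contention for a single memory.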

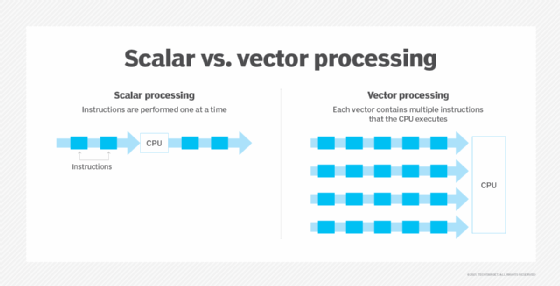

Another type of high-performance processing is vector processing, depicted in Figure 2 alongside scalar processing. In vector processing, a single instruction operates on an entire one-dimensional array of data, called a vector, rather than on one data item at a time. Vector processing is used in supercomputers because it supports very high speeds and reduces the number of instructions that must be fetched from memory and decoded, making it an efficient architecture for the repetitive numerical work common in supercomputing. By contrast, scalar processing, used by most conventional processors, operates on one data item per instruction.
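
The toy model below counts how many instructions a processor would issue to add two equal-length arrays. It is a simplified sketch, not a hardware simulator. A scalar processor issues one add per element pair. A vector processor with an assumed vector length of 64 elements, the length of the Cray-1's vector registers, issues one vector add per 64-element strip, a technique known as strip mining. The function names and the `VECTOR_LENGTH` constant are illustrative assumptions.

```python
import math

VECTOR_LENGTH = 64  # elements per vector register (assumed; the Cray-1 used 64)


def scalar_add(a, b):
    """Scalar processing: one add instruction per pair of elements."""
    result, instructions = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def vector_add(a, b, vl=VECTOR_LENGTH):
    """Vector processing (strip-mined): one vector add instruction handles up
    to `vl` element pairs at once; the final strip may be shorter.
    Assumes a and b have the same length."""
    result, instructions = [], 0
    for start in range(0, len(a), vl):
        strip_a = a[start:start + vl]
        strip_b = b[start:start + vl]
        # One "instruction" applied to a whole strip of data at once.
        result.extend(x + y for x, y in zip(strip_a, strip_b))
        instructions += 1
    return result, instructions


if __name__ == "__main__":
    n = 1000
    a, b = list(range(n)), list(range(n))
    s_result, s_count = scalar_add(a, b)
    v_result, v_count = vector_add(a, b)
    print(s_result == v_result)                   # True: same answer
    print(s_count, v_count)                       # 1000 16
    print(v_count == math.ceil(n / VECTOR_LENGTH))  # True
```

Both functions compute the same sums; the difference is that the vector version issues roughly 1/64 as many instructions, which is the source of the efficiency described above.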

Supercomputer processing speed is measured in floating-point operations per second (FLOPS), typically expressed in petaflops (PFLOPS), or quadrillions of FLOPS. The most powerful supercomputers today, such as El Capitan and Frontier, both built by Hewlett Packard Enterprise, process at more than 1 exaflops (one quintillion, or 10^18, FLOPS).
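
Because FLOPS figures span many orders of magnitude, it helps to convert raw values into the SI-prefixed units used in this article. Each prefix step is a factor of 1,000, so 1 petaflops is 10^15 FLOPS and 1 exaflops is 10^18 FLOPS. The minimal helper below is an illustrative sketch; the name `format_flops` is hypothetical.

```python
PREFIXES = [
    (1e18, "EFLOPS"),  # exa: one quintillion
    (1e15, "PFLOPS"),  # peta: one quadrillion
    (1e12, "TFLOPS"),  # tera: one trillion
    (1e9, "GFLOPS"),   # giga: one billion
    (1e6, "MFLOPS"),   # mega: one million
]


def format_flops(flops, digits=3):
    """Express a raw FLOPS value using the largest SI prefix that keeps the
    scaled number at or above 1."""
    for scale, unit in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.{digits}f} {unit}"
    return f"{flops:.{digits}f} FLOPS"


if __name__ == "__main__":
    # Frontier's TOP500 speed of 1,353 PFLOPS, expressed in exaflops:
    print(format_flops(1_353e15))  # 1.353 EFLOPS
```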

Supercomputer operating systems

Owing to the unique architecture of supercomputers, most supercomputer operating systems (OSes) have been customized to support each system's unique characteristics and application requirements. Despite this customization, almost every supercomputer OS today is based on some form of Linux.

No industry standard for supercomputing OSes currently exists, and Linux has evolved into the de facto foundation.

FLOPS vs. MIPS

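Most people are familiar with the term MIPS (million instructions per second) as a measure of computer performance and processing speed. FLOPS are used to measure supercomputer performance and are considered a more appropriate metric than MIPS because scientific and engineering workloads are dominated by floating-point arithmetic. MIPS, by contrast, counts instructions of every kind, and the work a single instruction accomplishes varies from one processor architecture to another.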
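
FLOPS ratings come in two forms: a theoretical peak computed from the hardware specification, and a measured value obtained by running a benchmark. The sketch below shows the usual back-of-the-envelope peak calculation: peak FLOPS = nodes × cores per node × clock rate × floating-point operations per core per cycle. The machine in the example is hypothetical, all of its figures are assumptions, and the calculation ignores accelerators such as GPUs.

```python
def peak_flops(nodes, cores_per_node, clock_hz, flops_per_cycle):
    """Theoretical peak: every core on every node completes its maximum number
    of floating-point operations on every clock cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


if __name__ == "__main__":
    # Hypothetical CPU-only cluster (all figures are assumptions):
    # 1,000 nodes, 64 cores each, 2.0 GHz, 16 double-precision FLOPs per cycle.
    rpeak = peak_flops(nodes=1_000, cores_per_node=64,
                       clock_hz=2.0e9, flops_per_cycle=16)
    print(rpeak / 1e15, "PFLOPS")  # 2.048 PFLOPS
```

Real applications, and even benchmarks, reach only a fraction of this peak, which is why published rankings rely on measured performance.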

The list of the highest-performing computers in the world is called the TOP500 (www.top500.org). It ranks the 500 most powerful systems and is published twice a year, in June and November. Table 1 lists the 10 fastest supercomputers on the November 2024 list. Rankings are based on measured performance on the High-Performance Linpack (HPL) benchmark, reported in petaflops.

| Name | Speed (PFLOPS) | System type | Location |
|---|---|---|---|
| El Capitan | 1,742 | HPE Cray EX255a | United States |
| Frontier | 1,353 | HPE Cray EX235a | Oak Ridge National Laboratory |
| Aurora | 1,012 | HPE Cray EX | Argonne National Laboratory |
| Eagle | 561 | Microsoft NDv5 | Microsoft Azure |
| HPC6 | 478 | HPE Cray EX235a | Italy |
| Supercomputer Fugaku | 442 | Supercomputer Fugaku | Japan |
| Alps | 435 | HPE Cray EX254n | Switzerland |
| LUMI | 380 | HPE Cray EX235a | Finland |
| Leonardo | 241 | BullSequana XH2000 | Italy |
| Tuolumne | 208 | HPE Cray EX255a | United States |

Currently, El Capitan, Frontier and Aurora are all in the exascale class, with speeds exceeding 1.0 exaflops (10^18 FLOPS).
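
Because 1 exaflops equals 1,000 petaflops, picking out the exascale systems in Table 1 is a simple threshold check. The short sketch below copies the names and speeds from the table; the variable and function names are illustrative.

```python
# (name, speed in PFLOPS), copied from Table 1
TOP10 = [
    ("El Capitan", 1742), ("Frontier", 1353), ("Aurora", 1012),
    ("Eagle", 561), ("HPC6", 478), ("Supercomputer Fugaku", 442),
    ("Alps", 435), ("LUMI", 380), ("Leonardo", 241), ("Tuolumne", 208),
]

EXAFLOPS_IN_PFLOPS = 1_000  # 1 exaflops = 10^18 FLOPS = 1,000 PFLOPS


def exascale_systems(systems):
    """Return the names of systems whose speed is at least 1 exaflops."""
    return [name for name, pflops in systems if pflops >= EXAFLOPS_IN_PFLOPS]


if __name__ == "__main__":
    print(exascale_systems(TOP10))  # ['El Capitan', 'Frontier', 'Aurora']
```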

Differences between general-purpose computers and supercomputers

Processing power is the main difference between supercomputers and general-purpose computer systems. The fastest supercomputers can perform more than 1,000 PFLOPS (1 exaflops), as noted in Table 1, whereas a typical general-purpose computer can perform only hundreds of gigaflops to tens of teraflops.
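
A quick calculation shows what that gap means in practice. Assuming a hypothetical desktop that sustains 1 teraflops, the sketch below estimates how long that desktop would need to perform the work that El Capitan, rated at 1,742 PFLOPS in Table 1, performs in one second. Real applications rarely sustain a machine's rated speed, so treat the result as an order-of-magnitude comparison.

```python
SECONDS_PER_DAY = 86_400


def seconds_to_match(work_flops, machine_flops):
    """Time for a machine running at `machine_flops` to perform `work_flops`
    floating-point operations, assuming it sustains that rate throughout."""
    return work_flops / machine_flops


if __name__ == "__main__":
    el_capitan = 1_742e15  # FLOPS, from Table 1
    desktop = 1e12         # FLOPS, hypothetical 1-teraflops desktop
    # Work El Capitan completes in one second at its rated speed:
    one_second_of_work = el_capitan * 1
    secs = seconds_to_match(one_second_of_work, desktop)
    print(f"{secs:,.0f} s, or about {secs / SECONDS_PER_DAY:.1f} days")
    # 1,742,000 s, or about 20.2 days
```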

Supercomputers consume a great deal of power. As a result, they generate so much heat that they must be housed in specially cooled environments.

Both supercomputers and general-purpose computers differ from quantum computers, which operate based on the principles of quantum physics.

What are supercomputers used for?

Supercomputers perform resource- and compute-intensive calculations that general-purpose computers cannot handle. They often run engineering and computational sciences applications, such as the following:

- Weather forecasting to predict the impact of extreme storms and floods.

- Oil and gas exploration to collect huge quantities of geophysical seismic data to aid in finding and developing oil reserves.

- Molecular modeling for calculating and analyzing the structures and properties of chemical compounds and crystals.

- Physical simulations like modeling supernovas and the birth of the universe.

- Aerodynamics such as designing a car with the lowest air drag coefficient.

- Nuclear fusion research to build a nuclear fusion reactor that derives energy from plasma reactions.

- Medical research to develop new cancer drugs and understand the genetic factors that contribute to opioid addiction and other ailments.

- Artificial intelligence and machine learning to facilitate the development of new technologies, support business analytics and other activities.

- Next-generation materials identification to find new materials for manufacturing.

- Cryptanalysis to analyze ciphertext, ciphers and cryptosystems to understand how they work and identify ways of defeating them.

Like other computers, supercomputers are used to simulate reality and make projections, but on a much larger scale. Some supercomputer functions can also be carried out with cloud computing, which, like a supercomputer, combines many processors to achieve power that is impossible on a single PC.

Notable supercomputers throughout history

Seymour Cray designed the first commercially successful supercomputer, the Control Data Corporation (CDC) 6600, released in 1964. It had a single CPU and cost $8 million -- the equivalent of $60 million today. The CDC 6600 could handle about 3 million FLOPS. Rather than vector processors, it relied on a scalar CPU with multiple parallel functional units; Cray introduced vector processing later, with the Cray-1 in 1976.

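Cray went on to found a supercomputer company named Cray Research in 1972. After a series of mergers and acquisitions, that business became Cray Inc., which Hewlett Packard Enterprise acquired in 2019. The Cray name lives on in HPE's Cray supercomputer product lines, which include several of the systems in Table 1.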

IBM has been a keen supercomputer competitor. IBM Roadrunner was the top-ranked supercomputer when it was launched in 2008. It was twice as fast as IBM's Blue Gene and six times as fast as any other supercomputer at that time. IBM's Watson is famous for using its cognitive computing capabilities to beat champion Ken Jennings on the popular quiz show Jeopardy! Today, IBM's fastest supercomputer on the TOP500 list is Summit, which is based on IBM Power System AC922 technology and is used at Oak Ridge National Laboratory.

Top supercomputers of recent years

Developed in China, Sunway's OceanLight supercomputer is reported to have been completed in 2021. It is thought to be an exascale supercomputer, meaning it can calculate at least 10^18 FLOPS. The system, whose performance data has not been released, was developed by the National Supercomputing Center in Wuxi, China.

In the U.S., some supercomputer centers were interconnected on an internet backbone known as the very high-performance Backbone Network Service, or vBNS. It was established in 1995 by the National Science Foundation as a successor to the National Science Foundation Network (NSFNET) backbone. NSFNET was the foundation for an evolving network infrastructure known as the National Technology Grid, a computational grid linking supercomputing resources across research institutions. Internet2, a university-led project, has been part of this initiative.

At the lower end of supercomputing, data center administrators can take a build-it-yourself approach using clustering. Starting in 1994, the Beowulf Project offered guidance on building clusters from off-the-shelf PCs running Linux and interconnected with Fast Ethernet. Applications must be written explicitly to manage the parallel processing across the cluster.
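
One of the first decisions such a cluster application must make is which part of the problem each node handles. A common approach in message-passing programs is block decomposition. Each process is identified by a rank from 0 to size - 1, the convention MPI uses, and receives a contiguous range of work items, with any remainder spread over the first few ranks. The function below is a minimal, library-free sketch of that calculation; its name is hypothetical.

```python
def block_range(n_items, size, rank):
    """Return the half-open range [start, end) of work items owned by `rank`
    when `n_items` items are split across `size` processes as evenly as
    possible. The first `n_items % size` ranks each get one extra item."""
    if not 0 <= rank < size:
        raise ValueError("rank must satisfy 0 <= rank < size")
    base, extra = divmod(n_items, size)
    start = rank * base + min(rank, extra)
    end = start + base + (1 if rank < extra else 0)
    return start, end


if __name__ == "__main__":
    # 10 work items over 4 hypothetical cluster nodes:
    for rank in range(4):
        print(rank, block_range(10, 4, rank))
    # 0 (0, 3)
    # 1 (3, 6)
    # 2 (6, 8)
    # 3 (8, 10)
```

Because every rank can compute its own range from just `n_items`, `size` and its rank, no coordination is needed to divide the work, so each node can start computing immediately.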

Supercomputers and AI

Countries around the world are using supercomputers for research purposes. One example is Sweden's Berzelius, which began operation in the summer of 2021. The system is being used for AI research, primarily in Sweden.

Supercomputers often run AI programs because those applications typically require supercomputing-caliber performance and processing power. Supercomputers can handle the large amounts of data that AI and machine learning application development use.

Some supercomputers are engineered specifically with AI in mind. For example, Microsoft custom built a supercomputer to train large AI models that work with its Azure cloud platform. The goal is to provide developers, data scientists and business users with supercomputing resources through Azure's AI services. One such tool is Microsoft's Turing Natural Language Generation, a natural language processing model unveiled in 2020.

Another example of a supercomputer engineered for AI workloads is Perlmutter, built by HPE with Nvidia GPUs for the National Energy Research Scientific Computing Center (NERSC). In 2021 it ranked fifth on the TOP500 list, but several other systems have surpassed it since then, as shown in Table 1. It was tasked with assembling the largest-ever 3D map of the visible universe by processing data from the Dark Energy Spectroscopic Instrument, which captures dozens of exposures per night, each recording light from thousands of galaxies.

The future of supercomputers

The supercomputer and high-performance computing (HPC) market is growing as vendors such as Amazon Web Services, Microsoft Azure and Nvidia develop their own supercomputers. HPC is becoming more important as AI capabilities gain traction in all industries, from predictive medicine to manufacturing.

According to a report released in August 2023 by Precedence Research, the supercomputer market in 2022 was valued at $8.8 billion and is forecast to reach about $25 billion by 2032, for a compound annual growth rate of 11% over the forecast period, 2023-2032.
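
The growth rate follows from the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The sketch below plugs in the report's figures, taking 2022 as the base year and 2032 as the end year (10 years). It checks the arithmetic only and does not reproduce the report's methodology.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly rate that grows
    start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # $8.8 billion in 2022 growing to about $25 billion in 2032
    rate = cagr(start_value=8.8, end_value=25.0, years=10)
    print(f"{rate:.1%}")  # 11.0%
```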

A major focus in the supercomputer market has been the race toward exascale processing capabilities, a threshold that Frontier, Aurora and El Capitan have now crossed. Exascale computing presents new possibilities that transcend those of earlier supercomputers. Exascale supercomputers are expected to be able to generate an accurate model of the human brain, including neurons and synapses. This would have a huge impact on the field of neuromorphic computing.

As computing power continues to grow exponentially, supercomputers with hundreds of exaflops could become a reality.

Supercomputers are becoming more prevalent as AI plays a bigger role in enterprise computing.