What is computer memory and what are the different types?

Memory is the electronic holding place for the instructions and data a computer needs to reach quickly. It's where information is stored for immediate use. Memory is one of a computer's basic components; without it, a computer wouldn't be able to function properly. Memory is also used by a computer's operating system (OS), hardware and software.

There are technically two types of computer memory: primary and secondary. The term memory is used as a synonym for primary memory or as an abbreviation for a specific type of primary memory called random access memory (RAM). This type of memory is located on microchips that are physically close to a computer's microprocessor.

If a computer's central processing unit (CPU) had to use only a secondary storage device, computer systems would be much slower. In general, the more primary memory a computing device has, the less frequently it must access instructions and data from slower -- secondary -- forms of storage.

What is random access memory?

Solid-state memory is an electronic device that's organized as a two-dimensional matrix of single-bit storage cells, or bits. Each row of storage cells is identified by an address, and the number of storage cells at each address is the data width, or word size; the number of addresses is the memory's depth. For example, an extremely simple memory device might offer 1,024 addresses with 16 bits at each address. This would give the memory device a total storage capacity of 1,024 × 16, or 16,384 bits.
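The capacity arithmetic above can be written as a short Python sketch. The function names are hypothetical, chosen for illustration. The sketch also derives how many address bits, or address lines, a device would need to select every address, assuming binary addressing.

```python
def capacity_bits(num_addresses: int, bits_per_address: int) -> int:
    """Total capacity = number of addresses x bits stored at each address."""
    return num_addresses * bits_per_address


def address_lines(num_addresses: int) -> int:
    """Minimum number of address bits needed to select every address."""
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without floating point.
    return (num_addresses - 1).bit_length()


if __name__ == "__main__":
    bits = capacity_bits(1024, 16)
    print(bits, "bits =", bits // 8, "bytes")    # 16384 bits = 2048 bytes
    print(address_lines(1024), "address lines")  # 10 address lines
```

Doubling the number of addresses adds one address line, while doubling the bits per address doubles capacity without changing the addressing.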

Random access is the defining feature of RAM. A CPU can read or write data at any memory address on demand, and it typically references memory contents in very different orders depending on the needs of the application being executed.

This random access behavior differs from classical storage devices, such as magnetic tape, where the medium must physically move to the location of the required data each time before it can be read or written. This rapid, random access is what makes solid-state memory essential to modern computing.
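A toy cost model makes the contrast concrete. This Python sketch is illustrative only. It assumes a tape's access cost is proportional to how far the head must travel from its current position, while RAM charges the same fixed cost for any address. The cost units are arbitrary.

```python
def tape_cost(addresses, start=0):
    """Sequential-access model: cost is the total head travel between positions."""
    position = start
    total = 0
    for address in addresses:
        total += abs(address - position)  # wind the tape to the requested spot
        position = address
    return total


def ram_cost(addresses, cost_per_access=1):
    """Random-access model: every address costs the same, regardless of order."""
    return len(addresses) * cost_per_access


if __name__ == "__main__":
    in_order = [0, 1, 2, 3, 4, 5, 6, 7]
    scattered = [7, 0, 6, 1, 5, 2, 4, 3]
    print(tape_cost(in_order), tape_cost(scattered))  # 7 35
    print(ram_cost(in_order), ram_cost(scattered))    # 8 8
```

Visiting the same eight addresses in a scattered order multiplies the tape's cost fivefold, while the RAM model is unaffected by order.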

Many types of RAM report performance specifications against two traditional metrics:

- Random access read/write performance. This is where addresses are referenced in random order.

- Sequential access read/write performance. This is where addresses are referenced in sequential order.
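The two metrics differ only in the order in which a test visits addresses. The Python sketch below is a simplified illustration, not a real memory benchmark. Python's interpreter overhead largely hides hardware effects, so actual measurements use dedicated tools written in lower-level languages. The buffer size and random seed are arbitrary assumptions.

```python
import random
import time


def sequential_addresses(n):
    """Addresses 0, 1, 2, ..., n - 1 in ascending order."""
    return list(range(n))


def random_addresses(n, seed=0):
    """The same n addresses, visited in a shuffled (random) order."""
    addresses = list(range(n))
    random.Random(seed).shuffle(addresses)
    return addresses


def timed_reads(buffer, addresses):
    """Read buffer[a] for each address; return (checksum, elapsed seconds)."""
    start = time.perf_counter()
    checksum = 0
    for a in addresses:
        checksum += buffer[a]
    return checksum, time.perf_counter() - start


if __name__ == "__main__":
    size = 1 << 20  # 1 MiB; real benchmarks use buffers far larger than CPU caches
    buf = bytes(range(256)) * (size // 256)
    for name, order in [("sequential", sequential_addresses(size)),
                        ("random", random_addresses(size))]:
        checksum, seconds = timed_reads(buf, order)
        print(f"{name}: {size / seconds / 1e6:.1f} million reads per second")
```

Both runs touch exactly the same addresses, so their checksums match. Only the order differs, and with it the access pattern the hardware sees.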

Primary vs. secondary memory

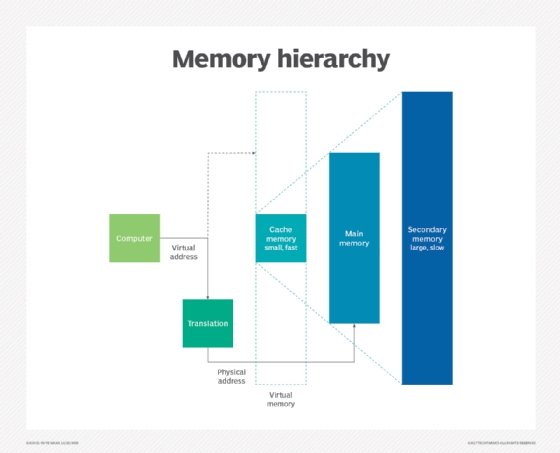

Memory is broadly classified as primary and secondary memory, though the practical distinction has fallen into disuse.

Primary memory refers to the technologies and devices capable of supporting short-term, rapidly changing data. This mainly encompasses cache memory and RAM located close to -- and accessed frequently by -- the main CPU.

Secondary memory refers to the technologies and devices primarily used to support long-term data storage, where data is accessed and changed far less frequently. This typically includes solid-state flash memory devices, as well as magnetic hard disk drives (HDDs) and solid-state drives (SSDs).

In most cases, data and program instructions are moved from secondary memory into primary memory, where the CPU can process and execute them. Data is then written back from primary memory to secondary memory when the file is saved or the application is terminated.

It's possible to use secondary memory as if it were primary memory. The most common example is virtual memory, which operating systems such as Windows use to run more applications and hold more data than physical RAM can accommodate. However, data held in virtual memory on a drive has higher latency and lower performance than data in solid-state primary memory, because drives take much longer to read or write data. As a result, applications that rely on virtual memory run more slowly.

Volatile vs. non-volatile memory

Memory can also be classified as volatile or non-volatile memory.

- Volatile memory. This includes memory technologies and devices that lose their data once power is removed from the memory device. Dynamic memory devices, such as dynamic RAM (DRAM), must also be refreshed constantly to retain their contents. Static memory devices, such as static RAM (SRAM), don't require a refresh to preserve data contents, but they still lose their data when power is turned off.

- Non-volatile memory. NVM refers to memory technologies and devices that retain their data contents even when power is removed from the memory device. Solid-state flash memory is a popular non-volatile memory technology. All types of read-only memory (ROM) devices are also non-volatile. Secondary memory, such as HDDs and SSDs, is routinely designated as non-volatile memory or non-volatile storage.

Memory vs. storage

Memory and storage are easily conflated as the same concept; however, there are important differences. Put succinctly, memory is primary memory, while storage is secondary memory. Memory refers to the location of short-term data, while storage refers to the location of data stored on a long-term basis.

Memory is often referred to as a computer's primary storage, such as RAM. Memory also holds the information the CPU is actively working on. It lets users access data that's stored for a short time. The data is only stored for a short time because primary memory is volatile and isn't retained when the computer is turned off.

The term storage refers to secondary memory where data in a computer is kept. An example of storage is an HDD. Storage is non-volatile, meaning the information is still there after the computer is turned off and then back on. A running program might be in a computer's primary memory when in use -- for fast retrieval of information -- but when that program is closed, it resides in secondary memory or storage.

The amount of space available in memory and storage differs as well. In general, a computer will have more storage space than memory. For example, a laptop can have 16 gigabytes (GB) of RAM while having 1 terabyte (TB) or more of storage. The difference exists because a computer doesn't need fast access to all the information stored on it at once, so a few GB of memory for running programs is enough for most modern applications.

The terms memory and storage can be confusing because their use today is inconsistent. For example, RAM is referred to as primary storage and types of secondary storage can include flash memory. To avoid confusion, it's easier to talk about memory in terms of whether it's volatile or non-volatile and storage in terms of whether it is primary or secondary.

How does computer memory work?

When an OS launches a program, the program is loaded from secondary memory into primary memory; for example, it might be copied from an SSD into RAM. Because primary memory is much faster to access, the processor can fetch the program's instructions and data far more quickly than it could from storage.

Main memory is volatile, which means that data in memory is stored temporarily. Once a computing device is turned off, data stored in volatile memory is lost. When a file is saved, it's written to secondary memory for storage.
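The load, work and save cycle described above can be sketched in Python. It's a simplified illustration: the function name and the uppercase transform are hypothetical, and the OS's own page cache adds buffering that this sketch doesn't show. The file's bytes are copied from storage into a bytearray in RAM, changed there and then written back, so the change survives a power loss.

```python
import os
import tempfile


def load_modify_save(path, transform):
    """Copy a file from storage into RAM, change it in memory, write it back."""
    with open(path, "rb") as f:
        data = bytearray(f.read())  # the working copy now lives in volatile RAM
    transform(data)                 # edits touch only the in-memory copy
    with open(path, "wb") as f:
        f.write(data)               # "saving" writes the copy back to storage
        f.flush()
        os.fsync(f.fileno())        # ask the OS to push the data to the device
    return len(data)


def to_upper(buffer):
    """Example in-place transform: uppercase ASCII letters."""
    buffer[:] = buffer.upper()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "notes.txt")
        with open(path, "wb") as f:
            f.write(b"hello, storage")
        load_modify_save(path, to_upper)
        with open(path, "rb") as f:
            print(f.read())  # b'HELLO, STORAGE'
```

Until the write step runs, the edited bytes exist only in RAM and would be lost if the device lost power.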

There are several types of computer memory, and computers operate differently depending on the type of primary memory used. However, the term computer memory is typically associated with semiconductor-based memory, which is made of integrated circuits built from silicon-based metal-oxide-semiconductor (MOS) transistors.

Types of computer memory

Memory can be divided into primary and secondary memory. There are many types of primary memory, including the following:

- Cache memory. The temporary storage area, known as a cache, is more readily available to the processor than the computer's main memory source. It's also called CPU memory because it's typically integrated into the CPU chip or placed on a separate chip with a bus interconnect with the CPU.

- Random access memory. RAM refers to the processor's ability to access any storage location randomly.

- Dynamic random access memory. This semiconductor memory typically holds the data or program code a computer processor needs to function. DRAM stores bits in cells consisting of a capacitor and a transistor, must be refreshed frequently to retain contents and loses its data once power is turned off.

- Static random access memory. SRAM also only retains data bits for as long as power is supplied to it. However, unlike DRAM, it doesn't have to be periodically refreshed.

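- Double Data Rate Synchronous DRAM. DDR SDRAM transfers data on both the rising and falling edges of the clock signal, doubling the data rate of single data rate SDRAM at the same clock speed. Standard first-generation DDR modules ran at clock speeds of up to 200 megahertz.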

- DDR4 SDRAM. DDR4 RAM is a type of DRAM that has a high-bandwidth interface and is the successor to DDR2 and DDR3. DDR4 RAM allows for lower voltage requirements and higher module density. It's coupled with higher data transfer rates and enables dual in-line memory modules (DIMMs) up to 64 GB.

- DDR5 SDRAM. DDR5 RAM is a type of DRAM with higher bandwidth and capacity than previous DDR types. It offers better power efficiency, can operate at speeds up to 8,400 million transfers per second (MT/s) and can support up to 512 GB per DIMM.

- Direct Rambus DRAM. DRDRAM is a memory subsystem designed to transfer up to 1.6 billion bytes per second. The subsystem consists of RAM, the RAM controller, the bus that connects RAM to the microprocessor and devices in the computer that use it.

- Read-only memory. ROM is a type of computer storage containing non-volatile, permanent data that can usually only be read from and not written to. ROM frequently contains firmware, such as the basic input/output system (BIOS), that lets a computer start up each time it's turned on.

- Programmable ROM. PROM is ROM that a user can program only once. It lets a user write a custom microcode program using a special machine called a PROM programmer.

- Erasable PROM. EPROM is PROM that can be erased and re-used. The erasure process involves shining an intense ultraviolet light through a window designed into the memory chip.

- Electrically erasable PROM. EEPROM is a user-modifiable ROM that can be erased and reprogrammed repeatedly through the application of higher-than-normal electrical voltage. Unlike EPROM chips, EEPROMs don't need to be removed from the computer to be modified. Also, unlike an EPROM chip, which must be erased in its entirety, an EEPROM can typically be erased and rewritten selectively, one byte at a time.

- Virtual memory. A memory management technique where secondary memory is used as if it were part of the main memory. Virtual memory uses hardware and software to let a computer compensate for physical memory shortages by temporarily transferring data from RAM to disk storage.
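A cache only helps when the data the processor needs is already in it. The Python sketch below models a direct-mapped cache, one simple way hardware caches can be organized. The line count and line size are arbitrary assumptions chosen to keep the numbers small; real CPU caches are far larger, are usually set-associative and are implemented in hardware.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block maps to exactly one cache line."""

    def __init__(self, num_lines=4, line_size=16):
        self.num_lines = num_lines
        self.line_size = line_size          # bytes fetched together on a miss
        self.tags = [None] * num_lines      # which block each line currently holds
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Return True on a hit; on a miss, load the block from main memory."""
        block = address // self.line_size
        index = block % self.num_lines      # the only line this block may use
        tag = block // self.num_lines       # distinguishes blocks sharing a line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag              # evict whatever was there
        self.misses += 1
        return False


if __name__ == "__main__":
    cache = DirectMappedCache()
    for addr in range(64):                  # walk 64 consecutive bytes
        cache.access(addr)
    print(cache.hits, cache.misses)         # 60 4
    print(cache.access(64), cache.access(0))  # False False (both map to line 0)
```

Walking consecutive bytes misses only once per 16-byte line. Addresses 64 and 0 compete for the same line and keep evicting each other, which is why access patterns matter.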

Advanced memory technologies

Beyond the common memory types, electronic device manufacturers are constantly developing new and innovative memory technologies to meet enterprise and consumer needs. Advanced and emerging memory technologies include the following:

- Flash memory. Non-volatile solid-state memory based on NAND and NOR flash memory chips is used in all manner of commercial storage devices, such as camera memory cards, USB drives and, more recently, SSDs. Flash operates as erasable, rewritable ROM and can tolerate a limited number of erase/rewrite cycles, typically thousands or more depending on the cell type. Common 2D, or planar, flash devices are being supplanted by 3D flash, which stacks layers of memory cells vertically to boost memory capacity.

- 3D XPoint. Intel and Micron announced this memory in 2015, and Intel sold it under the Optane brand. 3D XPoint stores bits based on changes in resistance, using a stackable cross-point array of memory cells in which each cell is paired with a selector based on an ovonic threshold switch. The technology was known for fast, low-latency storage and byte-addressable operation. It has since been abandoned: Micron exited the technology in 2021, and Intel announced the wind-down of its Optane business in 2022.

- Quantum memory. QRAM devices can store the quantum state of a photon without damaging the fragile quantum information the photon carries. Quantum memory stores information as quantum bits, or qubits, which can hold a zero, a one or a superposition of both at the same time. This could enable very large storage capacity and high security within the storage media.

- High-bandwidth memory. HBM technology is used to provide memory devices with high bandwidth and low power consumption, which suits high-performance computing applications. HBM stacks DRAM dies vertically to build large 3D memory subsystems, such as Micron's 36 GB HBM3E stack for AI tasks.

- Graphene memory. Graphene memory technology uses graphene -- a 2D material made of a single layer of carbon atoms -- to create memory devices such as graphene-based resistive RAM (RRAM), graphene flash memory and graphene-based HDDs.

- Neuromorphic memory. These devices mimic the behaviors of the human brain. They're commonly implemented as a spiking neural network where nodes that represent spiking neurons process and retain data like living neurons. Neuromorphic memory is particularly useful for AI systems.

Memory specifications

Memory devices are described in technical specifications that define their operational characteristics. Common memory specifications include the following:

- Buffer type. A memory device can be buffered or unbuffered; this is also referred to as registered and unregistered. Buffered memory places a register between the memory controller and the memory chips. This makes it slightly slower than unbuffered memory, but it typically offers better signal integrity and lets a system support more memory modules, which is important for enterprise server-side computing.

- Capacity. Memory capacity is the amount of digital information that a memory device can store. It's measured in bytes, such as gigabytes.

- Channels. These are the number of connections through which memory exchanges data with the system. Single-, dual- and quad-channel configurations are common. More channels generally yield better memory performance because more data can be transferred in parallel.

- Form factor. Memory chips are typically combined onto modules that can be installed into slots on a motherboard. Common form factors include DIMMs and small outline DIMMs (SO-DIMMs).

- Latency. This metric looks at the delays involved in accessing memory locations to perform a read or write operation. Lower latency yields better memory performance.

- Speed. This is the rate at which information can be read from, or written to, a memory device. Memory speed is measured in transfers per second or megahertz.

- Voltage. This is the supply voltage, measured in volts, needed to operate a memory device. Most modern memory devices use low voltages, such as 1.2V or 1.35V, to reduce power consumption and heat.
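The speed and channel specifications combine into a theoretical peak bandwidth. The Python sketch below assumes a 64-bit (8-byte) data bus per channel, which is typical for DIMMs without error-correcting code. It ignores real-world overheads such as refresh and command timing, so actual throughput is lower. It also shows why a DDR module's transfer rate in MT/s is double its clock frequency; a DDR4-3200 module, for example, performs 3,200 MT/s.

```python
def ddr_transfer_rate(clock_mhz: int) -> int:
    """DDR moves data on both clock edges: MT/s = 2 x clock in MHz."""
    return 2 * clock_mhz


def peak_bandwidth_bytes_per_s(mt_per_s: int, bus_width_bits: int = 64,
                               channels: int = 1) -> int:
    """Theoretical peak = transfers per second x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits // 8
    return mt_per_s * 1_000_000 * bytes_per_transfer * channels


if __name__ == "__main__":
    print(ddr_transfer_rate(200))                 # 400 (DDR-400)
    single = peak_bandwidth_bytes_per_s(3200)     # DDR4-3200, one channel
    dual = peak_bandwidth_bytes_per_s(3200, channels=2)
    print(single / 1e9, dual / 1e9)               # 25.6 51.2 (GB/s)
```

Adding a second channel doubles the theoretical peak, which is why dual- and quad-channel configurations improve memory performance.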

Memory optimization and management

Memory optimization involves a variety of techniques to improve the use and lifespan of computer memory, such as the following:

- Memory allocation. Memory optimization requires allocating memory in a way that's appropriate for the OS and applications using the memory. Allocation strategies include static, dynamic, stack, heap and pool. For example, static allocation is used when the amount of memory needed is known at compile time, and the memory's size and location are fixed. Static allocation is fast and simple, but it can waste memory and limit flexibility. By comparison, dynamic allocation refers to memory allocation at runtime. It allows for flexibility in memory size and use during the program's execution, but it can result in memory fragmentation.

- Memory leaks. Software must give back the memory that's allocated, but poor software design can cause some memory to remain unreleased. When that happens, the OS can't recover the unneeded memory and reallocate it to other applications. Eventually, the computer runs short of available memory, resulting in poor performance and system crashes. Proper software design and testing are required to find and fix memory leaks.

- Memory management. This is the way an OS organizes, monitors and controls memory use. Different memory management techniques include paging, segmentation, virtual memory, garbage collection and memory compression. For example, paging divides a program's logical address space into fixed-size units called pages and physical memory into units of the same size called frames, and then maps each page to a frame. Paging can improve memory use and protection. It can also cause page faults and increase overhead. A system can use multiple memory management techniques.

- Flash wear. Flash memory is widely used, but it's limited by finite erase/rewrite cycles. A central part of optimizing flash life is managing flash cell wear through techniques such as wear leveling and cycle management.
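Paging maps fixed-size pages of a program's logical address space onto same-size frames of physical memory. The Python sketch below illustrates that address translation under stated assumptions: a 4 KiB page size, a single-level page table stored as a dictionary and a hypothetical `PageFault` exception standing in for the hardware fault an OS would handle, for example by loading the page from disk.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


class PageFault(Exception):
    """Hypothetical stand-in for a hardware page fault."""


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Map a virtual (logical) address to a physical address via a page table."""
    page_number, offset = divmod(virtual_address, page_size)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        # Page isn't in physical memory; a real OS would fetch it and retry.
        raise PageFault(f"page {page_number} not resident")
    # The offset within the page is unchanged; only the page becomes a frame.
    return frame_number * page_size + offset


if __name__ == "__main__":
    page_table = {0: 5, 1: 2}                 # page number -> frame number
    print(translate(4100, page_table))        # page 1, offset 4 -> 8196
    try:
        translate(3 * PAGE_SIZE, page_table)
    except PageFault as err:
        print("page fault:", err)             # page fault: page 3 not resident
```

Because pages and frames are the same size, translation only swaps the page number for a frame number; the offset carries over unchanged.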

Timeline of the history and early evolution of computer memory

In the early 1940s, memory was only available up to a few bytes of space. One of the more significant signs of progress during this time was the invention of acoustic delay line memory. This technology enabled delay lines to store bits as sound waves in mercury, and quartz crystals to act as transducers to read and write bits. This process could store a few hundred thousand bits.

In the late 1940s, non-volatile memory began to be researched, and magnetic-core memory, which retained its contents after a loss of power, was created. By the 1950s, this technology had been improved and commercialized. PROM was invented in 1956. Magnetic-core memory became so widespread that it remained the main form of memory until the early 1970s.

The metal-oxide-semiconductor field-effect transistor (MOSFET) was invented in 1959. This enabled the use of MOS transistors as memory cell storage elements. MOS memory was cheaper and needed less power than magnetic-core memory. Bipolar memory, which used bipolar transistors, started being used in the early 1960s.

In 1961, Bob Norman proposed the concept of solid-state memory on an integrated circuit (IC) chip. IBM brought memory into the mainstream in 1965. However, users found solid-state memory to be too expensive compared to other memory types.

Other advancements during the early to mid-1960s were the invention of bipolar SRAM, Toshiba's introduction of DRAM in 1965 and the commercial use of SRAM in 1965. The single-transistor DRAM cell was developed in 1966, followed by a MOS semiconductor device used to create ROM in 1967. From 1968 to the early 1970s, N-type MOS, or NMOS, memory also became popular.

By the early 1970s, MOS-based memory was more widely used. In 1970, Intel released the first commercial DRAM IC chip, the 1103. One year later, erasable PROM was developed, and EEPROM was invented in 1972.
