interlaced display

What is an interlaced display?

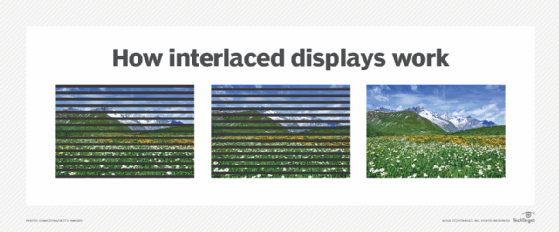

An interlaced display, or interlaced scan video, changes only every other row of pixels in the image at each screen refresh. The rows are scanned alternately as two interwoven sets of lines. Interlacing is used in broadcast television to reduce the amount of bandwidth needed while still maintaining a high refresh rate.

What is the difference between interlaced and progressive displays?

In progressive video, or on a noninterlaced display, every line or row is updated at each screen refresh. In interlaced video, only every other line or row is changed at each screen refresh. Progressive video is preferred and is considered better in quality, but interlaced video was introduced due to technical limitations in early television standards. In most cases the human eye cannot easily tell the difference between progressive and interlaced video.

For example, a progressive signal would update line 1, then 2, then 3, then 4, etc., until it reaches the bottom of the frame, and then start over at line 1. An interlaced signal would show line 1, then line 3, then line 5, etc.; on the next refresh, it would show line 2, then 4, then 6, etc.
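The two scan orders described above can be sketched in a few lines of Python. This is only an illustration of the line ordering; the function names `progressive_order` and `interlaced_passes` are hypothetical and do not come from any video library.

```python
def progressive_order(total_lines):
    """Return the 1-based line numbers drawn on every refresh of a progressive signal."""
    return list(range(1, total_lines + 1))


def interlaced_passes(total_lines):
    """Return the two alternating passes of an interlaced signal.

    The first pass holds lines 1, 3, 5, ... and the second holds lines 2, 4, 6, ...
    """
    odd_pass = list(range(1, total_lines + 1, 2))
    even_pass = list(range(2, total_lines + 1, 2))
    return odd_pass, even_pass


if __name__ == "__main__":
    # Use a tiny 8-line picture so the ordering is easy to read.
    print("progressive:", progressive_order(8))
    first, second = interlaced_passes(8)
    print("interlaced, first refresh: ", first)
    print("interlaced, second refresh:", second)
```

Taken together, the two interlaced passes cover every line exactly once, which is why a complete picture takes two refreshes to draw.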

Interlaced signals are denoted by the number of rows and a lowercase i. For example, 1080i is a signal of 1,080 lines of pixels that carries information for only every other line at each screen refresh. Progressive signals are denoted by the number of rows and a lowercase p. For example, 720p is a signal of 720 lines of pixels with every line updated at each screen refresh.

A 1080i signal and a 720p signal, at the same refresh rate, would contain about the same amount of information at each refresh and use about the same amount of bandwidth. A 720p signal would have clearer perceived motion, while 1080i would have a more detailed image.
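A rough calculation shows why the two signals are comparable. The sketch below assumes the common HD widths of 1,920 pixels for 1080-line video and 1,280 pixels for 720-line video, which are not stated above, and it counts raw pixels only, ignoring compression and blanking intervals. The function name `pixels_per_refresh` is hypothetical.

```python
def pixels_per_refresh(width, lines, interlaced):
    """Count the pixels sent at one screen refresh.

    An interlaced signal sends only every other line, so half the lines.
    """
    lines_sent = lines // 2 if interlaced else lines
    return width * lines_sent


# Assumed widths: 1920 for 1080-line video, 1280 for 720-line video.
pixels_1080i = pixels_per_refresh(1920, 1080, interlaced=True)
pixels_720p = pixels_per_refresh(1280, 720, interlaced=False)

if __name__ == "__main__":
    print("1080i pixels per refresh:", pixels_1080i)
    print("720p pixels per refresh: ", pixels_720p)
    print("ratio:", pixels_1080i / pixels_720p)
```

Under these assumptions, 1080i carries 1,036,800 pixels per refresh and 720p carries 921,600, a difference of about 12.5%.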

Interlaced displays vs. CRTs

Early displays used cathode ray tubes (CRTs). In CRTs, magnets would cause a beam of electrons to sweep back and forth across the back of coated glass to produce an image. CRTs could either progressively scan every line as the electron beam went across the screen, or they could interlace by only going across every other line with the electron beam before they returned the beam to the top left to start again.

CRTs are analog devices, and by simply adjusting the timing of the input signal, they could correctly show either progressive or interlaced video. CRTs could natively switch back and forth between displaying progressive or interlaced video. In general, though, televisions were specialized for interlaced video and computer monitors for progressive signals.

Modern liquid crystal display, or LCD, screens are inherently progressive. They use digital signals to update every pixel at each refresh. This can lead to artifacts or imperfections when they show interlaced video. As a result, collectors seek out older CRT displays to accurately show old videos or play old game consoles.

Interlaced television history and modern use

Television was initially developed during the 1920s and 1930s. During that time, all electronics were analog in nature. Additionally, the ability to record a broadcast for later showing was not developed until decades later. The TV station needed to broadcast what the camera was showing live over radio waves to be picked up and shown by the television sets. Compromises were needed to make this possible.

The compromise that led to interlaced video was between bandwidth and how the eye perceives motion. The human eye notices flicker if a screen is updated fewer than about 50 times a second, or 50 hertz (Hz). Because televisions could use the line frequency of grid electricity, it was convenient to set the refresh rate to 60 Hz in the U.S. and 50 Hz in Europe for early broadcasts.

There was not enough analog bandwidth available to update the entire image 60 times a second. It was only possible to show up to 30 full frames a second, but that would have produced noticeable and distracting flicker for the viewer. In the U.S. National Television System Committee (NTSC) standard, the compromise was to show half of a frame at each refresh at 60 Hz. It did this by interlacing, or showing only every other line at each refresh.
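The trade-off can be checked with simple arithmetic. The sketch below uses 480 visible lines per frame as an illustrative figure and counts only lines drawn per second as a stand-in for bandwidth, which is a simplification of how an analog signal actually works. The function name `lines_per_second` is hypothetical.

```python
def lines_per_second(lines_per_frame, refresh_hz, interlaced):
    """Return how many lines are drawn each second.

    An interlaced signal draws half of the frame's lines at each refresh.
    """
    lines_per_refresh = lines_per_frame // 2 if interlaced else lines_per_frame
    return lines_per_refresh * refresh_hz


# Illustrative figure: 480 visible lines per frame.
progressive_60 = lines_per_second(480, 60, interlaced=False)
progressive_30 = lines_per_second(480, 30, interlaced=False)
interlaced_60 = lines_per_second(480, 60, interlaced=True)

if __name__ == "__main__":
    print("progressive at 60 Hz:", progressive_60, "lines per second")
    print("progressive at 30 Hz:", progressive_30, "lines per second")
    print("interlaced at 60 Hz: ", interlaced_60, "lines per second")
```

In this simplified model, interlacing at 60 Hz draws the same number of lines per second as progressive video at 30 Hz, and half as many as progressive video at 60 Hz, while the screen is still refreshed 60 times a second.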

Content recorded for home viewing would be stored in an interlaced format. Most commonly, this would be on Video Home System, or VHS, and then digital video disc, or DVD, at 480i resolution. This can lead to artifacts when old, interlaced video content is shown on modern progressive-display high-definition TVs (HDTVs).

The transition to digital television and broadcast HDTV still necessitated interlaced video in some cases. The Advanced Television Systems Committee (ATSC) digital television standard, when it was introduced in the U.S., could not support full 1080p at 60 Hz. Many broadcasts were limited to 1080i at 60 Hz.

Future of interlaced video

With the advancement of technology, interlaced video will soon no longer be needed. The ATSC standard was updated in 2008 with support for the H.264 codec with 1080p at 60 Hz. Cable and satellite television can offer full 1080p or greater service. Blu-ray discs can store enough data for Full HD videos. High-speed internet connections and streaming services can display any needed resolution. Next-generation TV broadcasting will use ATSC 3.0, which uses advanced codecs to send up to 4K 120 Hz video over the air.

See also: interlaced scan, raster, raster graphics and raster image processor.