What is an application architecture?

An application architecture is a structural map of how a software application is assembled and how applications interact with each other to meet business or user requirements. An application architecture helps ensure applications are scalable and reliable, and assists enterprises in identifying gaps in functionality.

In general, an application architecture defines how applications interact with entities such as middleware, databases and other applications. Application architectures usually follow software design principles that are generally accepted among adherents, but might lack formal industry standards.

An application architecture can be thought of as the blueprints used to construct a building. The blueprints set out how the building should be laid out and where services such as electrical wiring and plumbing should go. The builders can then use these plans to construct the building and refer to them later if the building needs to be refurbished or extended. Building a modern software application or service stack without a well-considered application architecture would be as difficult as trying to construct a building without blueprints.

An application architect will often have the principal responsibility for the application's design and for ensuring that it meets the stated business goals.

Application architecture technology and industry standards

Larger software publishers, including Microsoft, typically issue application architecture guidelines to help third-party developers create applications for their platforms. Microsoft, for example, offers the Azure Application Architecture Guide to help developers build cloud applications for the Microsoft Azure public cloud computing platform. Azure provides a range of cloud services, including those for compute, analytics, storage and networking. Users can choose from these services to develop and scale new applications, or run existing applications, in the public cloud.

In addition, consortia have been created to define architectural standards for specific industries and technologies. For example, the Object Management Group (OMG), a standards consortium of vendors, end users, academic institutions and government agencies, operates multiple task forces to develop enterprise integration standards. OMG's modeling standards include the Unified Modeling Language (UML) and the Model Driven Architecture. UML can be used to create the application architecture diagrams that programming teams work from.

Benefits of an application architecture

Overall, an application architecture helps IT and business planners work together so that the right technology is available to meet the business objectives. More specifically, an application architecture offers the following benefits:

- Reduces costs by identifying redundancies, such as the use of two independent databases that can be replaced by one.

- Improves efficiency by identifying gaps, such as essential services that users can't access through mobile apps.

- Creates an enterprise platform for application accessibility and third-party integration.

- Allows for interoperable, modular systems that are easier to use and maintain.

- Helps architects "see the big picture" and align software strategies with the organization's overall business objectives.
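
The first benefit above, identifying redundancies, can be illustrated with a minimal Python sketch. It assumes the architecture has been recorded as a simple inventory of which application stores which kind of data in which database. The application names, database names and the `find_redundant_data` helper are all hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Hypothetical inventory: each entry records an application, the database
# it uses and the kind of data it stores there.
inventory = [
    {"app": "web-store", "database": "orders-db", "data": "customer records"},
    {"app": "billing", "database": "billing-db", "data": "customer records"},
    {"app": "billing", "database": "billing-db", "data": "invoices"},
    {"app": "warehouse", "database": "stock-db", "data": "inventory levels"},
]


def find_redundant_data(entries):
    """Return each kind of data that is stored in more than one database."""
    databases_by_data = defaultdict(set)
    for entry in entries:
        databases_by_data[entry["data"]].add(entry["database"])
    return {
        data: sorted(databases)
        for data, databases in databases_by_data.items()
        if len(databases) > 1
    }


print(find_redundant_data(inventory))
# prints {'customer records': ['billing-db', 'orders-db']}
```

In this made-up inventory, customer records live in two independent databases, which is the kind of overlap an architecture review would flag as a candidate for consolidation.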

Application architecture types and patterns

Every software application is unique, so no two are designed exactly alike. Still, many applications share enough similarities that several common patterns, or types, of architecture have developed.

The exact architecture used for any application will depend on several factors, such as the application's size, its reusability and its use of external data. To illustrate, a single-family home on a farm will be designed differently from an apartment block in a city center. Similarly, a small application used by a single team will be designed differently from a large multipurpose application with thousands of users that pulls data from various sources.

Common application architecture patterns

These patterns define how a single application is designed and functions.

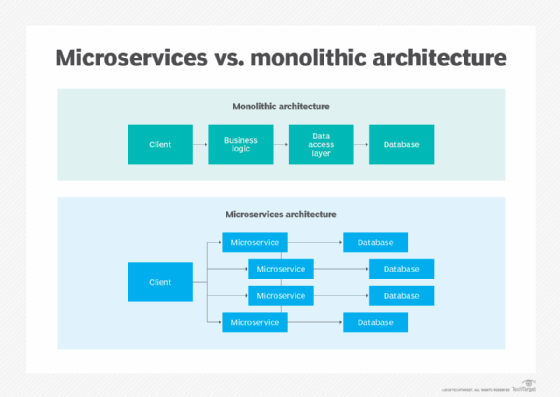

- Monolithic architecture is where a single application with a single codebase handles all functions, including user input, data processing and storage. Monolithic architecture was used in many older business applications. These applications are often considered less desirable today because they can be slow to update and hard to maintain. However, they can still be useful for extremely small applications maintained by small teams.

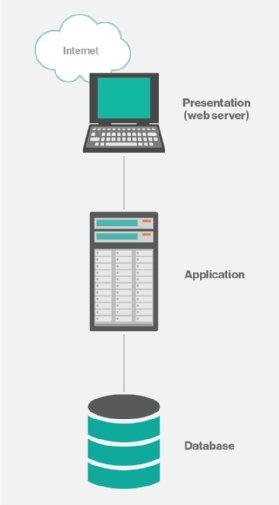

- N-tier architecture is where the application's functions are divided into hierarchical layers, or tiers, each handling a different portion of the functionality. The simplest and most common form is the two-tier client-server architecture, while the three-tier architecture is common for web applications.

- Microservices architecture is where each function of an application is divided into a small service or component that operates independently of the others. The microservices will often use application programming interfaces to communicate with each other. Microservices allow applications to be updated quickly and scale rapidly, but can result in siloing of the design.
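
As a rough illustration of the N-tier pattern, the following Python sketch assumes the conventional three-tier split into presentation, logic and data tiers. All class and method names are hypothetical, and a dictionary stands in for a real database. The point is only that each tier talks to the tier directly beneath it and nothing else.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DataTier:
    """Data tier: owns storage. A dict stands in for a real database."""

    _orders: dict[int, dict] = field(default_factory=dict)

    def save_order(self, order_id: int, record: dict) -> None:
        self._orders[order_id] = record

    def load_order(self, order_id: int) -> dict | None:
        return self._orders.get(order_id)


class LogicTier:
    """Logic tier: applies business rules and talks only to the data tier."""

    def __init__(self, data: DataTier) -> None:
        self._data = data
        self._next_id = 1

    def place_order(self, item: str, quantity: int) -> int:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        order_id = self._next_id
        self._next_id += 1
        self._data.save_order(order_id, {"item": item, "quantity": quantity})
        return order_id

    def describe_order(self, order_id: int) -> str:
        record = self._data.load_order(order_id)
        if record is None:
            return f"order {order_id} not found"
        return f"order {order_id}: {record['quantity']} x {record['item']}"


class PresentationTier:
    """Presentation tier: handles input and output and talks only to the logic tier."""

    def __init__(self, logic: LogicTier) -> None:
        self._logic = logic

    def handle_request(self, item: str, quantity: int) -> str:
        try:
            order_id = self._logic.place_order(item, quantity)
        except ValueError as err:
            return f"error: {err}"
        return self._logic.describe_order(order_id)


app = PresentationTier(LogicTier(DataTier()))
print(app.handle_request("widget", 3))  # prints "order 1: 3 x widget"
```

Because the presentation tier never touches storage directly, the dictionary could in principle be swapped for a real database without changing the other two tiers. In a monolith these responsibilities would sit together in one codebase, while in a microservices design each function would run as its own independently deployed service.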

The above architectures can use various methods to achieve subgoals, including serverless architecture, single-page applications and cloud computing.

Common organization application architecture patterns

These patterns define how an organization arranges the applications it uses and how they interact with each other. They are used to define the data flow in an N-tier or microservices architecture.

- Event-driven architecture uses events passed between components. An event can be any change in the data, such as a new order being placed or a box being delivered. Components can generate events, consume events or both. The two main subtypes of event-driven architecture are the publisher-subscriber model and the event stream model.

- Service-oriented architecture uses smaller services to perform business processes. These services are independent and reusable, and they often communicate using an enterprise service bus.
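
The publisher-subscriber model mentioned above can be sketched in a few lines of Python. This is a minimal in-process illustration, not a production design: the `EventBus` class, the `order.created` topic name and the handlers are all hypothetical, and a real system would typically place a message broker between the components.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process stand-in for a message broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a consumer for every event published on a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        """Deliver an event to each consumer subscribed to the topic."""
        for handler in self._subscribers.get(topic, []):
            handler(event)


bus = EventBus()
shipments: list[int] = []
emails: list[str] = []

# Two independent consumers react to the same event.
bus.subscribe("order.created", lambda e: shipments.append(e["order_id"]))
bus.subscribe("order.created", lambda e: emails.append(f"confirm order {e['order_id']}"))

# The publisher does not know who, if anyone, is listening.
bus.publish("order.created", {"order_id": 42})

print(shipments, emails)  # prints [42] ['confirm order 42']
```

The publisher and the consumers share only the topic name and the shape of the event, so new consumers can be added without changing the component that generates the event.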
