floating-point operations per second (FLOPS)

What is floating-point operations per second (FLOPS)?

Floating-point operations per second (FLOPS) is a measure of a computer's performance based on the number of floating-point arithmetic calculations that the processor can perform within a second. Floating-point arithmetic is a term used in computing to describe a type of calculation carried out on floating-point representations of real numbers.
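As a rough illustration of this definition, the sketch below counts the floating-point operations performed in a loop and divides that count by the elapsed time. The function `measure_flops` is a hypothetical helper written for this example. Pure Python spends most of its time on interpreter overhead, so the number it reports is far below what the processor can actually achieve. Real benchmarks use optimized, compiled numerical code instead.

```python
import time

def measure_flops(n: int = 1_000_000) -> float:
    """Estimate FLOPS by timing n multiply-add steps (hypothetical helper).

    Each iteration performs one floating-point multiply and one
    floating-point add, so the loop executes 2 * n operations in total.
    """
    x = 0.0
    a = 1.000001
    start = time.perf_counter()
    for _ in range(n):
        x = x * a + 1.0  # 1 multiply + 1 add = 2 floating-point operations
    elapsed = time.perf_counter() - start
    return (2 * n) / elapsed  # operations divided by seconds

print(f"{measure_flops():,.0f} FLOPS (interpreter-bound estimate)")
```

The result varies from run to run and from machine to machine, which is one reason published FLOPS figures always name the benchmark that produced them.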

Floating-point numbers make it possible for a computer to handle both very large and very small numbers across a wide range of magnitudes. A floating-point number can be expressed in notation similar to scientific notation, although computers commonly use base 2 rather than base 10. Floating-point notation is often represented by the following formula (or something similar):

$$\text{sign} \times \text{mantissa} \times \text{radix}^{\text{exponent}}$$

The sign indicates whether the number is positive or negative; its value is either +1 or -1. The mantissa (also called the significand) holds the number's significant digits and is often fractional, as in 6.901. The radix is the numeric base, which is usually 2 in computer floating-point formats. The exponent is the power to which the radix is raised. For example, the following floating-point expression represents the decimal number -51,287,949.31:

$$-1 \times 3.057 \times 2^{24}$$
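The following sketch evaluates the sign × mantissa × radix^exponent formula for the example above and then uses Python's standard `math.frexp` to split the same value into a different mantissa/exponent pair. The helper `fp_value` is hypothetical. Note that `math.frexp` normalizes the mantissa into the range [0.5, 1), while IEEE 754 hardware formats store a mantissa of the form 1.xxx; the 3.057 × 2^24 form in the text is illustrative rather than the exact bit layout a processor uses.

```python
import math

def fp_value(sign: int, mantissa: float, radix: int, exponent: int) -> float:
    """Evaluate sign * mantissa * radix**exponent (hypothetical helper)."""
    if sign not in (1, -1):
        raise ValueError("sign must be +1 or -1")
    return sign * mantissa * radix ** exponent

# The example from the text: -1 * 3.057 * 2^24
value = fp_value(-1, 3.057, 2, 24)
print(f"{value:,.2f}")  # -51,287,949.31

# math.frexp returns (m, e) with value == m * 2**e and 0.5 <= |m| < 1,
# so the same number can be written with a different mantissa and exponent.
m, e = math.frexp(value)
print(f"{m:.5f} * 2^{e}")  # -0.76425 * 2^26
```

Because multiplying by a power of 2 only shifts the exponent, 3.057 × 2^24 and 0.76425 × 2^26 are exactly the same binary value.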

Why use floating-point arithmetic?

Floating-point arithmetic is often required to perform workloads such as scientific calculations, advanced analytics or 3D graphics processing. The performance of computers that run these workloads is commonly measured in FLOPS, which provides a way to gauge a computer's performance and compare it to other computers.

When FLOPS is used as a performance metric, it is typically expressed with a prefix multiplier such as tera or peta, as in teraFLOPS or petaFLOPS. The following table provides a breakdown of several FLOPS prefix multipliers.

| Measure | Abbreviation | Value in FLOPS | Equivalent |
|---|---|---|---|
| kiloFLOPS | kFLOPS | $10^{3}$ | 1,000 FLOPS |
| megaFLOPS | MFLOPS | $10^{6}$ | 1,000 kFLOPS |
| gigaFLOPS | GFLOPS | $10^{9}$ | 1,000 MFLOPS |
| teraFLOPS | TFLOPS | $10^{12}$ | 1,000 GFLOPS |
| petaFLOPS | PFLOPS | $10^{15}$ | 1,000 TFLOPS |
| exaFLOPS | EFLOPS | $10^{18}$ | 1,000 PFLOPS |
| zettaFLOPS | ZFLOPS | $10^{21}$ | 1,000 EFLOPS |
| yottaFLOPS | YFLOPS | $10^{24}$ | 1,000 ZFLOPS |
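A minimal sketch of how a raw FLOPS figure maps onto the prefixes in the table. The `format_flops` function and the `PREFIXES` list are hypothetical, written only for illustration: the function picks the largest prefix that does not exceed the value and formats the result to two decimal places. The scales are stored as float literals so that comparisons with float inputs behave as expected at exact powers of 10.

```python
# (symbol, scale) pairs from the table above, largest first
PREFIXES = [
    ("Y", 1e24), ("Z", 1e21), ("E", 1e18), ("P", 1e15),
    ("T", 1e12), ("G", 1e9), ("M", 1e6), ("k", 1e3),
]

def format_flops(flops: float) -> str:
    """Express a raw FLOPS value using the largest fitting prefix."""
    for symbol, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.2f} {symbol}FLOPS"
    return f"{flops:.2f} FLOPS"  # below 1 kFLOPS: no prefix

print(format_flops(3e6))     # 3.00 MFLOPS
print(format_flops(1.1e18))  # 1.10 EFLOPS
```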

History of the FLOPS concept

Frank H. McMahon from the Lawrence Livermore National Laboratory came up with the concept of FLOPS. He used the measurement to compare supercomputers based on the number of floating-point calculations they could perform. In the early days of supercomputers, those numbers were relatively low, but they've steadily grown as computer performance has increased.

In 1964, for example, the world's fastest computer was the CDC 6600, which had a 60-bit, 10 MHz processor capable of about 3 MFLOPS. The computer also included a set of peripheral processors for housekeeping tasks. The CDC 6600 remained the fastest computer until 1969, after which a series of computers followed, each faster than the previous generation.

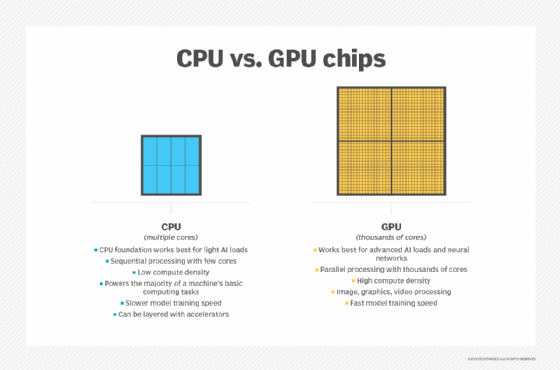

More than 50 years later, the Frontier system at Oak Ridge National Laboratory in Tennessee became the world's fastest computer and the first system measured at more than 1 EFLOPS, delivering about 1.1 EFLOPS. The system is made up of 74 HPE Cray EX supercomputer cabinets. Each node contains an optimized AMD EPYC processor and four AMD Instinct accelerators. In total, the system has over 9,400 CPUs and 37,000 GPUs.

The term FLOPS is sometimes treated as the plural form of FLOP, which means floating-point operation, but this is an incorrect interpretation. The "s" in FLOPS refers to "second" and is not indicative of a plural form.
