Direct Memory Access (DMA)

What is direct memory access (DMA)?

Direct Memory Access (DMA) is a capability provided by some computer bus architectures that enables data to be sent directly from an attached device, such as a disk drive, to the main memory on the computer's motherboard. The microprocessor, or central processing unit (CPU), is freed from involvement with the data transfer, speeding up overall computer operation.

DMA enables devices -- such as disk drives, external memory, graphics cards, network cards and sound cards -- to send data to and receive data from the main memory in a computer. It does this while still allowing the CPU to perform other tasks.

Without a mechanism such as DMA, the computer's CPU must handle every data request from an attached device itself and cannot perform other operations during that time. With DMA, the CPU initiates a data transfer with an attached device and then continues with other work while the transfer is in progress. The result is data transfer to and from devices with less CPU overhead.

Ultra DMA is a later DMA protocol for ATA hard drives. In its Ultra DMA/33 form, it provides a burst data transfer rate of up to 33 megabytes per second (MBps). Hard drives that have Ultra DMA/33 also support programmed input/output (PIO) modes 1, 3 and 4, and multiword DMA mode 2 at 16.6 MBps.
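To put the two quoted rates in perspective, the following is a rough back-of-the-envelope sketch. It assumes the burst rate is sustained for the whole transfer, which real drives rarely achieve, and the 100 MB payload size is a hypothetical value chosen only for illustration.

```python
def transfer_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    """Best-case time to move size_mb megabytes at a constant rate."""
    if rate_mb_per_s <= 0:
        raise ValueError("rate must be positive")
    return size_mb / rate_mb_per_s


FILE_MB = 100.0  # hypothetical payload size, not from the article

udma33 = transfer_seconds(FILE_MB, 33.0)   # Ultra DMA/33 burst rate
mwdma2 = transfer_seconds(FILE_MB, 16.6)   # multiword DMA mode 2 rate

print(f"Ultra DMA/33:         {udma33:.2f} s")
print(f"Multiword DMA mode 2: {mwdma2:.2f} s")
```

Under these assumptions, the faster mode roughly halves the best-case transfer time.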

How does DMA work?

Usually, a specified portion of memory is designated as an area to be used for direct memory access. For example, in the Industry Standard Architecture (ISA) bus standard, up to 16 MB of memory can be addressed for DMA. Other bus standards might allow access to the full range of memory addresses. Peripheral Component Interconnect (PCI) uses bus mastering, in which the CPU delegates I/O control to the PCI controller.
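The 16 MB limit corresponds to a 24-bit address (2^24 bytes). As a minimal sketch of what that limit means for software, the check below tests whether a buffer lies entirely inside the DMA-addressable range. The function name and the example addresses are hypothetical, and real controllers may impose further alignment or boundary constraints that this sketch ignores.

```python
ISA_DMA_LIMIT = 16 * 1024 * 1024  # 16 MB, i.e. 2**24 bytes


def is_dma_addressable(start: int, length: int, limit: int = ISA_DMA_LIMIT) -> bool:
    """Return True if the buffer [start, start + length) lies entirely below limit."""
    if start < 0 or length <= 0:
        return False
    return start + length <= limit


# A 4 KB buffer at 1 MB fits; the same buffer just past the 16 MB mark does not.
print(is_dma_addressable(0x100000, 4096))
print(is_dma_addressable(0x1000000, 4096))
```

A driver working under such a limit would typically place its transfer buffers below the boundary or copy data through an intermediate buffer that is.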

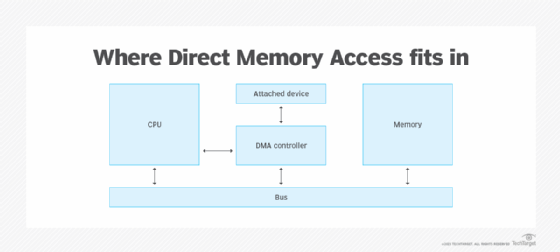

DMA channels send data between an attached peripheral device and the system memory. System resources such as the CPU, memory, attached I/O devices and a DMA controller are connected through a bus line, which is also used for DMA channels. The DMA controller is used to start memory read/write cycles and to generate memory addresses.

The CPU instructs the DMA controller to begin a data transfer and tells it which memory address to use. The DMA controller then drives the destination address and the read/write lines to the system memory. It advances its internal memory address with each transferred byte of data until the full block has been transferred.
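The sequence described above can be sketched as a toy software model. This is an illustration only: the `ToyDmaController` class, its register names and the `b"HELLO"` device data are all hypothetical and do not correspond to any real controller's register map.

```python
class ToyDmaController:
    """Hypothetical model of a DMA controller, for illustration only."""

    def __init__(self, memory: bytearray):
        self.memory = memory
        self.address = 0    # current memory address register
        self.count = 0      # bytes remaining in the block
        self.done = False

    def program(self, start_address: int, byte_count: int) -> None:
        """CPU side: set the start address and block length, then return."""
        if start_address < 0 or byte_count < 0:
            raise ValueError("address and count must be non-negative")
        if start_address + byte_count > len(self.memory):
            raise ValueError("block does not fit in memory")
        self.address = start_address
        self.count = byte_count
        self.done = byte_count == 0

    def transfer_byte(self, value: int) -> None:
        """Controller side: write one device byte, then advance the address."""
        if self.count == 0:
            raise RuntimeError("no transfer in progress")
        self.memory[self.address] = value
        self.address += 1
        self.count -= 1
        self.done = self.count == 0


memory = bytearray(16)
dma = ToyDmaController(memory)
dma.program(start_address=4, byte_count=5)   # the CPU's only involvement
for byte in b"HELLO":                        # bytes arriving from a hypothetical device
    dma.transfer_byte(byte)

print(bytes(memory[4:9]), dma.done)
```

In this model the CPU touches the controller once, in `program`; every subsequent byte is placed in memory by the controller, which is the division of labor the text describes.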

The DMA controller moves data to and from memory using one of the following methods:

- Burst mode. The DMA controller gains access to the system bus using the Bus Request and Bus Grant signals. Once the CPU grants access, the DMA controller transfers the whole data block in one contiguous sequence, and control of the bus reverts to the CPU only when the block is complete. The CPU is kept off the bus for the duration of the transfer, which can leave it inactive.

- Cycle-stealing mode. The DMA controller gains access to the system bus in the same way as in burst mode, using Bus Request and Bus Grant, but it releases the bus back to the CPU after each byte is transferred. It then issues another Bus Request, and the process repeats until the whole data block is transferred. Cycle-stealing mode is useful in systems where controllers monitor data in real time, because the CPU is never kept off the bus for long.

- Transparent mode. The DMA controller transfers data only when the CPU is executing operations that don't use the system buses. With this DMA transfer method, the CPU doesn't have to stop performing its operations. Transparent mode DMA operations take the longest to transfer data blocks, but this is the most efficient mode in terms of overall system performance.
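The difference between the three modes is easiest to see as a timeline of who owns the bus in each cycle. The sketch below is a deliberately simplified model, not a description of real hardware: it assumes one byte moves per bus cycle, that the CPU's bus demand follows a fixed repeating pattern that is not rescheduled when the CPU is blocked, and that cycle stealing alternates strictly between the controller and the CPU. The function name and the demand pattern are hypothetical.

```python
from typing import Sequence


def bus_timeline(mode: str, block_bytes: int, cpu_wants_bus: Sequence[bool]) -> str:
    """Return one character per bus cycle until the block is transferred.

    'D' = DMA controller moves one byte, 'C' = CPU uses the bus, '-' = bus idle.
    cpu_wants_bus is a repeating pattern of the CPU's bus demand per cycle.
    """
    if not cpu_wants_bus:
        raise ValueError("cpu_wants_bus must not be empty")
    if mode == "transparent" and all(cpu_wants_bus):
        raise ValueError("transparent mode never gets the bus if the CPU always wants it")

    timeline = []
    remaining = block_bytes
    cycle = 0
    while remaining > 0:
        cpu_wants = cpu_wants_bus[cycle % len(cpu_wants_bus)]
        if mode == "burst":
            dma_owns = True               # holds the bus for the whole block
        elif mode == "cycle_stealing":
            dma_owns = cycle % 2 == 0     # one byte, then the bus goes back to the CPU
        elif mode == "transparent":
            dma_owns = not cpu_wants      # only when the CPU leaves the bus unused
        else:
            raise ValueError(f"unknown mode: {mode}")

        if dma_owns:
            timeline.append("D")
            remaining -= 1
        else:
            timeline.append("C" if cpu_wants else "-")
        cycle += 1
    return "".join(timeline)


pattern = [True, True, False, False]  # hypothetical CPU bus demand, repeating
for mode in ("burst", "cycle_stealing", "transparent"):
    print(f"{mode:15s} {bus_timeline(mode, 4, pattern)}")
```

With this pattern and a 4-byte block, burst mode finishes in the fewest cycles but occupies every one of them, cycle stealing interleaves with the CPU, and transparent mode takes the most cycles while never displacing the CPU, which matches the trade-offs listed above.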

DMA vs. RDMA

Remote Direct Memory Access (RDMA) is another memory access method that enables two networked computers to exchange data in main memory without relying on the CPU, cache or the operating system of either computer. Like locally based DMA transactions, RDMA frees up resources and improves throughput and performance. This results in faster data transfer rates and lower latency between RDMA-enabled systems.

RDMA is useful in applications that require fast, massively parallel high-performance computing clusters and data center networks. For example, RDMA is useful when analyzing big data, in supercomputing environments and for machine learning workloads that require low latencies and high transfer rates.
