What are AI hallucinations and why are they a problem?

An AI hallucination is when a large language model (LLM) powering an artificial intelligence (AI) system generates false information or misleading results, often leading to incorrect human decision-making.

Hallucinations are most associated with LLMs, resulting in incorrect textual output. However, they can also appear in AI-generated video, images and audio. Another term for an AI hallucination is a confabulation.

AI hallucinations are the false, incorrect or misleading results generated by LLMs or computer vision systems. They usually stem from insufficient training data, as when a model is trained on a small dataset, or from inherent biases in that data. Regardless of the underlying cause, hallucinations can be deviations from external facts, contextual logic and, in some cases, both. They can range from minor inconsistencies to completely fabricated or contradictory information. LLMs are AI models that power generative AI chatbots, such as OpenAI's ChatGPT, Microsoft Copilot or Google Gemini (formerly Bard), while computer vision refers to AI technology that allows computers to understand and identify the content in visual inputs like images and videos.

LLMs use statistics to generate language that is grammatically and semantically correct within the context of the prompt. Well-trained LLMs are designed to produce fluent, coherent, contextually relevant textual output in response to some human input. That is why AI hallucinations often appear plausible, meaning users might not realize that the output is incorrect or even nonsensical. Acting on that unnoticed error might lead to incorrect decision-making.

Why do AI hallucinations happen?

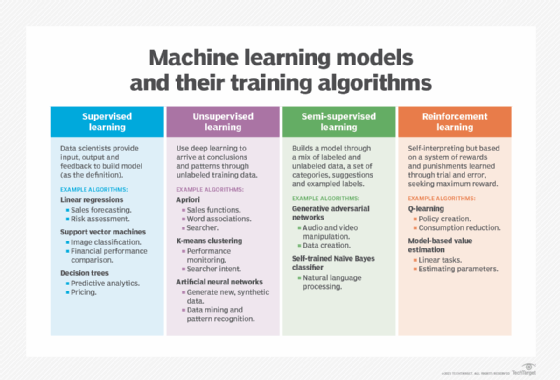

All AI models, including LLMs, are first trained on a dataset. As they consume more and more training data, they learn to identify patterns and relationships within that data, which then enables them to make predictions and produce some output in response to a user's input (known as a prompt). Sometimes, however, the LLM might learn incorrect patterns, which can lead to incorrect results or hallucinations.

There are many possible reasons for hallucinations in LLMs, including the following:

- Poor data quality. Hallucinations might occur when there is bad, incorrect or incomplete information in the data used to train the LLM. LLMs rely on a large body of training data to produce output that is as relevant and accurate as possible for the user who provided the input prompt. However, this training data can contain noise, errors, biases or inconsistencies; consequently, the LLM produces incorrect and, in some cases, completely nonsensical outputs.

- Generation method. Hallucinations can also arise from the training and generation methods used, even when the dataset itself is consistent, reliable and of high quality. For example, the model's previous generations might create bias or the transformer might decode incorrectly, both of which result in the system hallucinating its response. Models might also have a bias toward generic or specific words, which might influence the information they generate or lead them to fabricate their response.

- Input context. If the input prompt provided by the human user is unclear, inconsistent or contradictory, hallucinations can arise. While the quality of the training data and the training methods used are out of users' control, users can control the inputs they provide to the AI system. By honing their inputs and providing the right context, they can get the system to produce better results.

Types of AI hallucinations

There are several types of AI hallucinations, including the following:

- Sentence contradiction. This is when an LLM generates a sentence that contradicts a previous sentence. For example:

  - Prompt: Write a description of a landscape in four-word sentences.

  - Output: The grass was green. The mountains were blue. The river was purple. The grass was brown.

- Prompt contradiction. This is when a sentence contradicts the prompt used to generate it. For example:

  - Prompt: Write a birthday card for my niece.

  - Output: Happy anniversary, Mom and Dad!

- Factual contradiction. This is when fictitious information is presented as a fact. For example:

  - Prompt: Name three cities in the United States.

  - Output: New York, London, Toronto.

- Irrelevant or random hallucinations. This is when random information with little or no relation to the input is generated. For example:

  - Prompt: Describe London to me.

  - Output: London is a city in England. Cats need to be fed at least once a day.

Examples of AI hallucinations

One infamous example of an AI hallucination occurred in February 2023, when Google's chatbot Bard (since renamed Gemini) made an incorrect claim about the James Webb Space Telescope (JWST). In a promotional video, Bard was prompted, "What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?" Bard responded that the JWST took the very first pictures of an exoplanet, a planet outside the Earth's solar system. That information was false: The first images of an exoplanet were taken in 2004 by the European Southern Observatory's Very Large Telescope (VLT), and the JWST was not launched until 2021. Bard's answer sounded plausible and was consistent with the prompt provided, but news agency Reuters pointed out that it was false.

Another AI hallucination occurred in late 2022 when Meta (formerly Facebook) demoed Galactica, an open-source LLM trained on millions of pieces of scientific information from textbooks, papers, encyclopedias, websites and even lecture notes. Galactica was designed for use by science researchers and students, many of whom reported that the system generated inaccurate, suspicious or biased results. One professor at New York University said that Galactica failed to accurately summarize his work, while another user said that it invented citations and research when he prompted it to perform a literature review on the topic of whether HIV causes AIDS.

These issues invited a lot of criticism, with many users labeling Galactica and its results as suspicious, irresponsible and even dangerous. The problem can have serious implications for users who rely on a tool like Galactica for help with a subject they don't know much about. Following the outcry, Meta took down the Galactica demo just three days after launch.

Like Google Gemini and Meta Galactica, OpenAI's ChatGPT has also been embroiled in numerous hallucination controversies since its public release in November 2022. In June 2023, a radio host in Georgia brought a defamation suit against OpenAI, accusing the chatbot of making malicious and potentially libelous statements about him. In February 2024, many ChatGPT users reported that the chatbot tended to switch between languages or get stuck in loops. Others reported that it generated gibberish responses that had nothing to do with the user's prompt.

In April 2024, some privacy activists filed a complaint against OpenAI, stating that the chatbot regularly hallucinates information about people. The complainants also accused OpenAI of violating Europe's privacy regulation, the General Data Protection Regulation (GDPR). A month later, a reporter working for the technology news website The Verge published a hilarious post in which she detailed how ChatGPT unequivocally told her that she had a beard and that she didn't actually work for The Verge.

Since these issues were first reported, these companies have either fixed the applications or are working on fixes. Even so, the events clearly demonstrate an important weakness in AI tools: They can generate undesirable output that might lead to undesirable or dangerous results for users.

Why are AI hallucinations a problem?

An immediate problem with AI hallucinations is that they significantly undermine user trust. As users begin to experience AI as a real tool, they might develop more inherent trust in it. Hallucinations can erode that trust and stop users from continuing to use the AI system.

Hallucinations might also lead to generative anthropomorphism, a phenomenon in which human users perceive that an AI system has human-like qualities. This might happen when users believe that the output generated by the system is real, even if it generates images depicting mythical (not real) scenes or texts that defy logic. In other words, the system's output is like a mirage that manipulates users' perception, leading them to believe in something that isn't actually real and then use this belief to guide their decisions.

The capacity of fluid and plausible hallucinations to fool people can be problematic or dangerous in many situations, especially if users are optimistic and non-skeptical. The AI system could inadvertently spread misinformation during elections, affecting their results or even leading to social unrest. Hallucinations might even be weaponized by cyberattackers to execute serious cyberattacks against governments or organizations.

Finally, LLMs are often black box AI, which makes it difficult -- often impossible -- to determine why the AI system generated a specific hallucination. It's also hard to fix LLMs that produce hallucinations because their training cuts off at a certain point. Going into the model to change the training data can use a lot of energy. AI infrastructure is also expensive, so finding the root cause of hallucinations and implementing fixes can be a very costly endeavor. It is often on the user -- not the proprietor of the LLM -- to watch for hallucinations.

Generative AI is just that -- generative. In some sense, generative AI makes everything up.

How can AI hallucinations be detected?

The most basic way to detect an AI hallucination is to carefully fact-check the model's output. However, this can be difficult for users who are dealing with unfamiliar, complex or dense material. To mitigate the issue, they can ask the model to self-evaluate and generate the probability that an answer is correct or highlight the parts of an answer that might be wrong, and then use that information as a starting point for fact-checking.

Users can also familiarize themselves with the model's sources of information to help them conduct fact-checks. For example, if a tool's training data cuts off at a certain year, any generated answer that relies on detailed knowledge past that point in time should be double-checked for accuracy.
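The cutoff check described above can be partly automated. The following is a minimal sketch, not a complete detector: the function name `years_after_cutoff` and the cutoff value are hypothetical, and it only spots explicit four-digit years, so it will miss claims that depend on recent events without naming a date.

```python
import re

# Hypothetical cutoff year for illustration; each tool documents its own.
TRAINING_CUTOFF_YEAR = 2023


def years_after_cutoff(answer: str, cutoff_year: int = TRAINING_CUTOFF_YEAR) -> list[int]:
    """Return the distinct four-digit years in `answer` that fall after the cutoff."""
    # Match standalone years from 1900 to 2099.
    found = {int(match) for match in re.findall(r"\b(?:19|20)\d{2}\b", answer)}
    return sorted(year for year in found if year > cutoff_year)


answer = "The framework was first released in 2019 and gained streaming support in 2025."
flagged = years_after_cutoff(answer)
if flagged:
    print(f"Double-check claims that mention: {flagged}")
```

Anything the sketch flags is only a starting point for manual fact-checking; an empty result does not mean the answer is accurate.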

How to prevent AI hallucinations

There are a number of ways AI users can avoid or minimize the occurrence of hallucinations in AI systems:

- Use clear and specific prompts. Clear, unambiguous prompts, together with additional context, can guide the model toward the intended and correct output. Some examples are listed below:

  - Using a prompt that limits the possible outputs by including specific numbers.

  - Providing the model with relevant and reliable data sources.

  - Assigning the model a specific role, e.g., "You are a writer for a technology website. Write an article about x."

- Filtering and ranking strategies. LLMs often have parameters that users can tune. One example is the temperature parameter, which controls output randomness. When the temperature is set higher, the outputs created by the language model are more random; when it is set lower, they are more predictable. Top-K, which limits the model's choice of each next token to the K most probable candidates, is another example of a parameter that can be tuned to minimize hallucinations.

- Multishot prompting. Users can provide several examples of the desired output format to help the model accurately recognize patterns and generate more accurate output.
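To make the temperature and Top-K parameters from the list above concrete, here is a minimal sketch of how they could reshape a next-token distribution. It assumes the common formulation in which scores are divided by the temperature before a softmax and only the K highest-scoring candidates are kept. The function names and the example scores are hypothetical, and real LLM services may implement sampling differently.

```python
import math
import random
from typing import Optional


def token_probabilities(logits: dict[str, float], temperature: float = 1.0,
                        top_k: Optional[int] = None) -> dict[str, float]:
    """Turn raw scores into sampling probabilities after Top-K filtering and temperature scaling."""
    if not logits:
        raise ValueError("logits must not be empty")
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Top-K: rank candidates by score and keep only the k best.
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Temperature: values below 1 sharpen the distribution, values above 1 flatten it.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)  # subtracting the maximum keeps exp() numerically stable
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return {token: weight / total for (token, _), weight in zip(ranked, weights)}


def sample_next_token(logits: dict[str, float], temperature: float = 1.0,
                      top_k: Optional[int] = None,
                      rng: Optional[random.Random] = None) -> str:
    """Draw one token according to the adjusted probabilities."""
    probabilities = token_probabilities(logits, temperature, top_k)
    rng = rng or random.Random()
    return rng.choices(list(probabilities), weights=list(probabilities.values()), k=1)[0]


# Made-up scores for the word that follows "The capital of France is".
example_logits = {"Paris": 5.0, "Lyon": 2.0, "London": 1.0, "bananas": 0.5}
print(token_probabilities(example_logits, temperature=0.5, top_k=2))
print(token_probabilities(example_logits, temperature=2.0))
print(sample_next_token(example_logits, top_k=1))  # with top_k=1 only "Paris" can be drawn
```

Comparing the two printed distributions shows the trade-off: the low-temperature, Top-K setting concentrates probability on the likeliest token, while the high-temperature setting leaves unlikely tokens such as "bananas" a real chance of being picked.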

It is often left to the user -- not the LLM owner or developer -- to watch out for hallucinations during LLM use and to view LLM output with an appropriate dose of skepticism. That said, researchers and LLM developers are also trying to understand and mitigate hallucinations by using high-quality training data, using predefined data templates and specifying the AI system's purpose, limitations and response boundaries.
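One way to picture a predefined template that states a system's purpose, limitations and response boundaries is sketched below. The `PromptTemplate` class and its field names are hypothetical, not taken from any particular product. The reference facts are the ones given earlier in this article, and the two example question-and-answer pairs are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class PromptTemplate:
    """Hypothetical template that fixes the role, limits and reference material up front."""
    role: str
    limitations: str
    context: str
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self, question: str) -> str:
        parts = [
            f"You are {self.role}.",
            f"Limitations: {self.limitations}",
            f"Use only this reference material:\n{self.context}",
        ]
        # Worked examples show the model the expected answer format (multishot prompting).
        for example_question, example_answer in self.examples:
            parts.append(f"Q: {example_question}\nA: {example_answer}")
        # The final question is left open for the model to complete.
        parts.append(f"Q: {question}\nA:")
        return "\n\n".join(parts)


template = PromptTemplate(
    role="a writer for a technology website",
    limitations="If the reference material does not contain the answer, say so.",
    context=(
        "The James Webb Space Telescope (JWST) was launched in 2021. "
        "The first images of an exoplanet were taken in 2004 by the Very Large Telescope."
    ),
    examples=[
        ("When was the JWST launched?", "2021."),
        ("How much did the JWST cost?", "The reference material does not say."),
    ],
)
print(template.render("Which telescope took the first images of an exoplanet?"))
```

The second example pair matters as much as the first: it shows the model that declining to answer is an acceptable response when the supplied material is silent.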

Some companies are also adopting new approaches to train their LLMs. For example, in May 2023, OpenAI announced that it had trained its LLMs to improve their mathematical problem-solving ability by rewarding the models for each correct step in reasoning toward the correct answer instead of just rewarding the correct conclusion. This approach is called process supervision. It aims to give the model precise feedback at each individual step so that it follows a sound chain of thought and thus produces better output and fewer hallucinations.
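The difference between rewarding only the conclusion and rewarding each step can be shown with a toy example. This is an illustrative sketch, not OpenAI's training method: the `Step` type and both feedback functions are hypothetical, and a simple arithmetic check stands in for whatever grader scores each reasoning step in a real system.

```python
import operator
from typing import NamedTuple

OPERATIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


class Step(NamedTuple):
    """One line of reasoning of the form `left op right = claimed`."""
    left: int
    op: str
    right: int
    claimed: int


def outcome_feedback(steps: list[Step], expected_answer: int) -> float:
    """Outcome supervision: a single score based only on the final result."""
    return 1.0 if steps[-1].claimed == expected_answer else 0.0


def process_feedback(steps: list[Step]) -> list[float]:
    """Process supervision: one score per step, so every faulty step is visible."""
    return [
        1.0 if OPERATIONS[step.op](step.left, step.right) == step.claimed else 0.0
        for step in steps
    ]


# Flawed reasoning for 2 + 2 * 3: both steps are wrong, yet the errors cancel out.
chain = [Step(2, "*", 3, 5), Step(2, "+", 5, 8)]
print(outcome_feedback(chain, expected_answer=8))  # 1.0: the final answer happens to be right
print(process_feedback(chain))                     # [0.0, 0.0]: neither step is valid
```

Here the conclusion-only score rewards a chain of reasoning that is wrong at every step, while the per-step scores expose both errors.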

History of hallucinations in AI

Google DeepMind researchers proposed the term IT hallucinations in 2018. They described hallucinations as "highly pathological translations that are completely untethered from the source material." The term became more popular and more closely linked to AI with the release of ChatGPT, which made LLMs more accessible but also highlighted their tendency to generate incorrect output.

A 2022 report called "Survey of Hallucination in Natural Language Generation" describes how deep learning-based systems are prone to "hallucinate unintended text," affecting performance in real-world scenarios. The paper's authors mention that the term hallucination was first used in 2000 in a paper published in "Proceedings: Fourth IEEE International Conference on Automatic Face and Gesture Recognition," where it carried positive meanings in computer vision, rather than the negative ones we see today.
