3 ways to implement vSphere GPU virtualization

You can implement GPU hardware using VMDirectPath I/O, Nvidia Grid or Bitfusion FlexDirect on vSphere. Understand the pros and cons of each to know which will work best for you.

For many years, vSphere administrators have implemented dedicated GPUs in their servers to support their virtual desktop users. VMware vSphere coupled with Horizon supports dedicated or shared GPU adapters from Advanced Micro Devices and Nvidia for Windows virtual desktops. This boosts performance for many types of workloads, including computer-aided design software, game development platforms, and medical and geographical information systems.

Adapters with dedicated GPUs also improve performance for other types of workloads where users need computational power. Artificial intelligence, machine learning and statistical processing can all benefit. However, not all applications are designed to use GPUs, and, in some cases, you must develop adapters to enable the software to use a GPU instruction set.

VMware provides documentation to help you get started with this new GPU hardware use case. You must also work with your users so they have a clear understanding of how their applications can benefit and what resources they need.

Most admins measure GPU performance in floating-point operations per second, but adapters also differ in core count, so performance can vary depending on which adapter you buy. You should also decide early on whether you must dedicate GPU hardware to a single application or can share it.
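As a rough illustration of how core count and clock speed feed into a headline FLOPS figure, theoretical peak single-precision throughput is commonly estimated as cores x clock x 2, because one fused multiply-add per core per cycle counts as two operations. The sketch below uses hypothetical adapter figures, not vendor specifications, and the function name is an assumption for illustration. Real-world performance also depends on memory bandwidth and the workload itself.

```python
def peak_tflops(cores: int, clock_ghz: float, ops_per_cycle: int = 2) -> float:
    """Estimate theoretical peak throughput in TFLOPS.

    cores * clock_ghz gives billions of cycles per second across all cores;
    multiplying by ops_per_cycle gives GFLOPS, and dividing by 1000 gives TFLOPS.
    """
    return cores * clock_ghz * ops_per_cycle / 1000.0


# Hypothetical adapters, for illustration only.
adapters = {
    "adapter_a": {"cores": 2048, "clock_ghz": 1.5},
    "adapter_b": {"cores": 4096, "clock_ghz": 1.0},
}

for name, spec in adapters.items():
    print(f"{name}: {peak_tflops(**spec):.2f} TFLOPS")
```

An estimate like this is only a starting point for comparison; application benchmarks remain the better guide.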

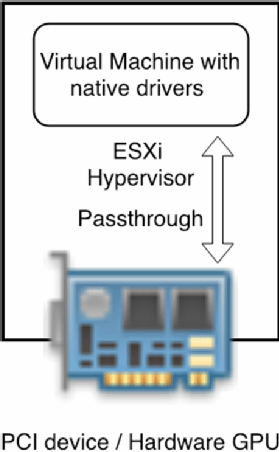

VMDirectPath I/O

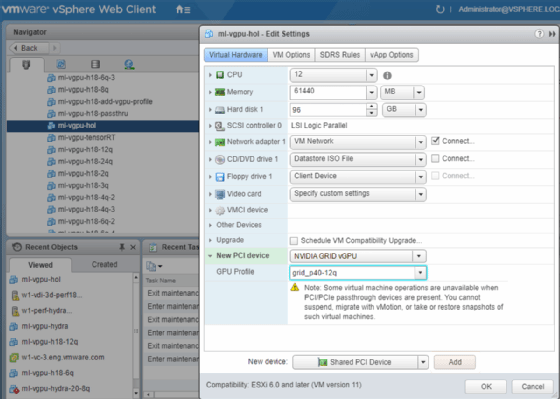

VMDirectPath I/O is the easiest way to provide VMs with access to GPU hardware in vSphere, but it comes with a few limitations. With this type of deployment, you select a Peripheral Component Interconnect (PCI) adapter in your ESXi host -- the GPU adapter -- and assign it directly to a VM. This bypasses the hypervisor, which doesn't need drivers or other modifications. The VM can access the adapter with the native drivers from the GPU vendor. This type of deployment is comparable to placing an adapter in a physical workstation.

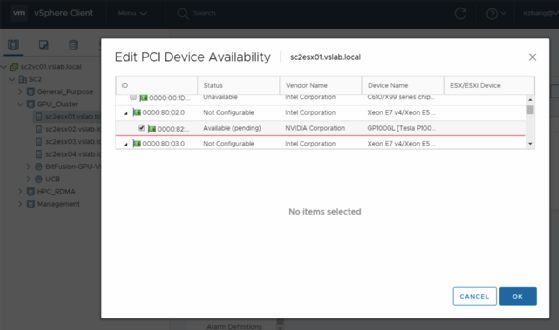

For example, after you select the Nvidia Tesla P100 adapter for pass-through, as shown in Figure A, the host can no longer use it for anything else.

The GPU can now be assigned to a VM on that host. A VM can even use more than one PCI adapter, but remember that a VM can't use an adapter that is already assigned to another VM. Consider this approach if you have a limited number of VMs that must work with a dedicated GPU or when you set up your first proof of concept.

However, you can't use vMotion or take snapshots of VMs with a dedicated adapter. Still, the overhead is minimal, and you can get near-physical performance out of your VMs.
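Once a pass-through adapter is assigned and the vendor's native driver is installed in the guest, a quick check from inside the VM can confirm that the GPU is visible. The following is a minimal sketch, assuming an Nvidia adapter and that the `nvidia-smi` utility shipped with the driver is on the guest's PATH. The helper names `parse_gpu_list` and `list_gpus` are hypothetical.

```python
import shutil
import subprocess

# nvidia-smi query that prints one "name, memory" line per detected GPU.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"]


def parse_gpu_list(output: str) -> list[tuple[str, str]]:
    """Turn nvidia-smi CSV output into (name, memory) pairs."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue
        name, memory = (field.strip() for field in line.split(",", 1))
        gpus.append((name, memory))
    return gpus


def list_gpus() -> list[tuple[str, str]]:
    """Return the GPUs the guest can see, or an empty list if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []  # Driver tools are not installed in this guest.
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    return parse_gpu_list(result.stdout) if result.returncode == 0 else []


for name, memory in list_gpus():
    print(f"Found GPU: {name} ({memory})")
```

An empty result suggests that either the adapter was not assigned to the VM or the guest driver is missing.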

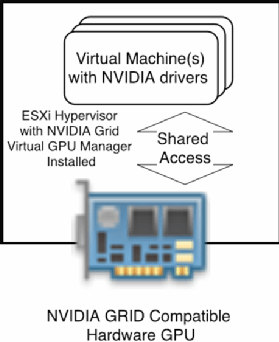

Nvidia Grid

Using Nvidia Grid enables multiple VMs to share a GPU. It requires you to install software in the hypervisor and your VMs. The official name of the software is Nvidia Quadro Virtual Data Center Workstation, but most people refer to it as Nvidia Grid.

You must install Nvidia Grid Manager as a vSphere Installation Bundle file on the ESXi host and install the related Nvidia Grid drivers in each VM.

With Nvidia Grid, multiple users can share the hardware GPU resources. Therefore, in most cases, users can make more efficient use of the total hardware capacity when it is divided over multiple applications.

Starting with vSphere 6.7 U1, you can also migrate these VMs to another host with vMotion. The destination host must have the same hardware and enough resources available. With the original vSphere 6.7 release, you must suspend and resume the VMs to move them to another host.

With virtual desktops that use a GPU, you might be able to restart the VMs on another host, and then the user can just log in again. But when a user starts a computational workload that runs for days or even weeks, you won't make friends with that application owner if you stop and restart the VM. Luckily, now you can live-migrate the VM to another host to improve its overall performance or to perform maintenance.
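The two destination requirements for migrating a shared-GPU VM, matching hardware and enough free resources, can be expressed as a simple pre-check. This is a sketch over a hypothetical inventory model, not a call into any vSphere API; the `Host` fields and the `can_migrate` function are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """Hypothetical summary of one ESXi host's GPU resources."""
    name: str
    gpu_model: str
    free_vgpu_slots: int


def can_migrate(source: Host, destination: Host, slots_needed: int = 1) -> bool:
    """Check that the destination has the same GPU model and enough free capacity."""
    same_hardware = destination.gpu_model == source.gpu_model
    enough_capacity = destination.free_vgpu_slots >= slots_needed
    return same_hardware and enough_capacity


# Illustrative hosts; the names and values are made up.
src = Host("esxi-01", "model-x", free_vgpu_slots=0)
dst_ok = Host("esxi-02", "model-x", free_vgpu_slots=2)
dst_other_gpu = Host("esxi-03", "model-y", free_vgpu_slots=4)

print(can_migrate(src, dst_ok))
print(can_migrate(src, dst_other_gpu))
```

Running a check like this before scheduling host maintenance helps identify long-running workloads that have nowhere to go.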

Assigning an Nvidia Grid GPU profile to a VM enables that VM to use the GPU. You must configure all the hosts in a cluster with the same hardware so you can run the VMs anywhere in the cluster. Because it's not always feasible to install these adapters in all the hosts, you might sometimes see dedicated clusters used for Nvidia Grid.
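When sizing a shared-GPU cluster, a useful first estimate is how many VMs a given profile allows per physical GPU. The sketch below assumes each profile reserves a fixed amount of GPU memory and that every VM on a physical GPU uses the same profile size. The host names, memory sizes and function names are hypothetical.

```python
def vms_per_gpu(gpu_memory_gb: int, profile_memory_gb: int) -> int:
    """Number of VMs one physical GPU can serve at a fixed profile size."""
    if profile_memory_gb <= 0:
        raise ValueError("profile_memory_gb must be positive")
    return gpu_memory_gb // profile_memory_gb


def cluster_capacity(hosts: dict[str, list[int]], profile_memory_gb: int) -> int:
    """Total VMs a cluster can serve, given each host's list of GPU memory sizes."""
    return sum(
        vms_per_gpu(gpu_memory_gb, profile_memory_gb)
        for gpus in hosts.values()
        for gpu_memory_gb in gpus
    )


# Illustrative cluster: two hosts with 16 GB GPUs (made-up inventory).
hosts = {"esxi-01": [16, 16], "esxi-02": [16]}

print(vms_per_gpu(16, 4))
print(cluster_capacity(hosts, 4))
```

A smaller profile raises VM density but leaves each VM with less GPU memory, so the right size depends on what the applications need.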

Bitfusion FlexDirect on vSphere

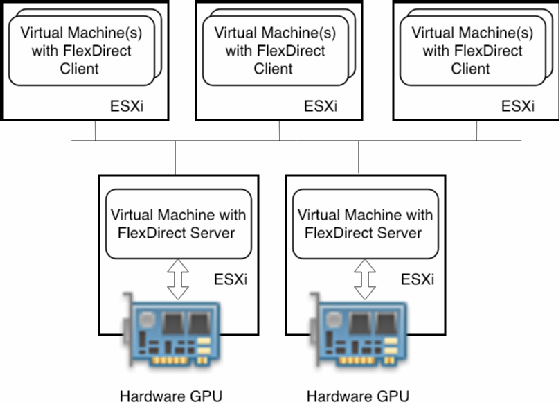

Bitfusion FlexDirect on vSphere creates an abstraction layer between the GPU hardware and the VMs that use it. VMs can access the GPU hardware via a fast networking technology, making them independent from the physical hardware.

This enables you to use vMotion, as the client and server VMs connect via the network. The hosts containing the hardware GPUs don't have to be the same as the hosts running the consuming VMs. Therefore, you have more flexibility to provide this functionality to VMs on a larger number of ESXi hosts, even across clusters.

You can use either a TCP/IP or Remote Direct Memory Access (RDMA) network to connect the hosts. You can implement the latter using InfiniBand or RDMA over Converged Ethernet.

Hardware availability and evaluating your options

The two vendors that play the greatest role in providing GPU capacity to ESXi hosts are Advanced Micro Devices and Nvidia. They both offer a range of adapters, and their hardware offerings change continually.

Some server vendors offer machines that come with a preinstalled GPU. This might be efficient if you're replacing server hardware and already know that you intend to include GPU support as part of the deployment.

When evaluating GPUs, you must validate your performance requirements and determine which type of adapter works best for your workloads. Consider the benchmarks available for many types of applications and adapters before deciding what is best for your data center.