
Privacy groups urge Zoom to abandon emotion AI research

Privacy organizations want Zoom to ditch emotion-tracking AI. The tech is invasive and discriminatory, and it doesn't work, the groups said.

Multiple human rights organizations have asked Zoom to keep emotion-tracking AI out of its products, calling the technology discriminatory, reliant on pseudoscience and potentially dangerous.

Almost 30 advocacy groups cosigned a letter to Zoom CEO Eric Yuan this week. They urged him to abandon research into emotion AI, which uses facial expressions and vocal cues to determine a user's state of mind. The letter's signatories, including the American Civil Liberties Union and the Electronic Privacy Information Center, said emotion AI's invasive nature, inaccuracy and potential for misuse would harm Zoom users.

Zoom did not respond to a request for comment.

Advocacy group Fight for the Future started the campaign in reaction to a Protocol story about Zoom's plans to incorporate emotion AI in products. The organization said Zoom has a responsibility as an industry leader to protect the public from such problematic tech.

"[Zoom] can make it clear that this technology has no place in video communications," the letter read.

Emotion AI's invasive monitoring would violate worker privacy and human rights, the groups said. A company, for example, could use the technology to review meetings and punish employees for expressing the wrong emotions.

The efficacy and ethics of emotion AI are controversial. Even if AI software correctly reads a person's expression, it may not accurately judge their feelings. In a 2019 study, Northeastern University professor Lisa Feldman Barrett and her colleagues found that facial expressions have limited reliability.

For example, a scowl could indicate anger, confusion or concentration. Culture and individual circumstances may also affect how people express their feelings.

Facial-recognition AI tools have struggled with race as well. A 2018 study by University of Maryland professor Lauren Rhue discovered that facial-recognition software attributed negative emotions to Black people more often than to white people.

Commercial facial-recognition software also misidentified dark-skinned women almost 35% of the time, compared with an error rate of 0.8% for light-skinned men, according to an MIT and Stanford University paper. In their letter to Zoom, privacy advocates said features based on emotion AI would embed error-prone tools in the productivity software used by millions of people.

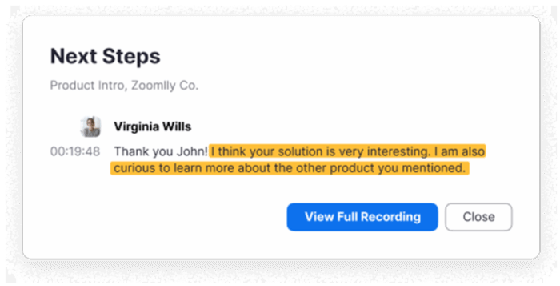

Zoom has seized on AI to add value to video meetings. Last month, the company launched an AI sales tool that analyzes video call transcripts, using data like the number of questions a customer asked to determine if they were engaged. The IQ for Sales feature is the first in a series of planned AI add-ons for Zoom.

Even IQ for Sales' sentiment analysis is troublesome, Fight for the Future campaign director Caitlin Seeley George said. The tool may misread data from people with disabilities, people from other cultures or non-native English speakers.

"A platform as big as Zoom, with such a huge reach, should be critical of what functions they're offering and how they might cause harm in ways they aren't realizing," George said.

The privacy groups have asked Zoom to answer their concerns by May 20. The company has not yet replied, but Fight for the Future hopes for a meaningful discussion on the topic, George said.

Mike Gleason is a reporter covering unified communications and collaboration tools. He previously covered communities in the MetroWest region of Massachusetts for the Milford Daily News, Walpole Times, Sharon Advocate and Medfield Press. He has also worked for newspapers in central Massachusetts and southwestern Vermont and served as a local editor for Patch. He can be found on Twitter at @MGleason_TT.