NVMe-oF over IP: A complete SAN platform

NVMe/TCP and NVMe/RoCE can now serve as complete SAN platforms, with multiple cost structures that support different customer requirements.

Fibre Channel has historically been the technology of choice for building SANs, including those that support the NVMe-oF protocol. This success has mainly been due to the richness of FC fabric services, which enable simplified provisioning and a high degree of automation during SAN deployment.

More specifically, FC Zone Server and FC Name Server provide the following:

- Access control. The SAN administrator can define which endpoints (N_Ports) are allowed to communicate.

- Discovery. N_Ports can automatically discover the address and properties of the other N_Ports they are allowed to communicate with.

These services are provided by the fabric, without the need to access or provision individual endpoints.
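As a rough illustration of how these two services fit together, the following Python sketch models a zone database and a name server as plain dictionaries. The port names, zone names and properties are hypothetical, and the real FC services use their own protocols and data formats; the sketch only shows that a discovery query is filtered by the access control rules held in the fabric.

```python
# Hypothetical zone database: zone name -> set of member port names.
ZONES = {
    "zone_db": {"host_a", "array_1"},
    "zone_backup": {"host_b", "array_1", "array_2"},
}

# Hypothetical name server registrations: port name -> properties.
NAME_SERVER = {
    "host_a": {"address": 0x010100, "role": "initiator"},
    "host_b": {"address": 0x010200, "role": "initiator"},
    "array_1": {"address": 0x020100, "role": "target"},
    "array_2": {"address": 0x020200, "role": "target"},
}


def may_communicate(port_a, port_b):
    """Access control: two ports may talk only if they share a zone."""
    return any(port_a in members and port_b in members
               for members in ZONES.values())


def query_peers(port):
    """Discovery: return only the registered ports this port is zoned with."""
    return {name: props for name, props in NAME_SERVER.items()
            if name != port and may_communicate(port, name)}


print(sorted(query_peers("host_a")))
print(sorted(query_peers("host_b")))
```

Neither endpoint is configured with the other's address in this model. Changing a zone in the fabric changes what each endpoint discovers.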

Although SAN customers have come to rely on the presence of these services, Fibre Channel can be more expensive to deploy than Ethernet, which could be one factor limiting broader adoption of FC SAN technology.

Many in the storage industry believe a SAN transport with a more flexible cost structure would enable a broader use of SANs. Ethernet, with a rich ecosystem of developers and manufacturers and its much higher volumes, is a network technology with a greatly improved cost structure.

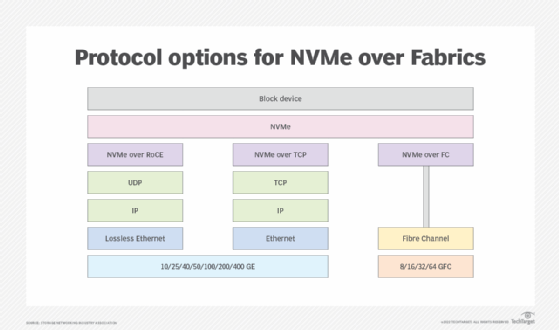

With Ethernet, two platforms exist to transport NVMe-oF:

- NVMe/RDMA over Converged Ethernet (RoCE), which uses lossless bridging to ensure a lossless Remote Direct Memory Access transport; and

- NVMe/TCP, which uses the ubiquitous TCP.

RoCE traditionally requires the deployment of a lossless Ethernet network at scale. As experienced with Fibre Channel over Ethernet, this process has been difficult to achieve. As a result, the usage of NVMe/RoCE has been limited to smaller, high‑end deployments or large, sophisticated cloud service providers for which having the lowest latency is important.

NVMe/TCP, on the other hand, can be used at every scale, even outside the data center -- such as for edge deployments -- with few or no changes to the network configuration. The flexibility of TCP makes NVMe/TCP the transport of choice for broad adoption and deployment of NVMe-oF.

Until recently, both NVMe/RoCE and NVMe/TCP suffered from a lack of discovery or provisioning automation services equivalent to the Fibre Channel fabric services. This placed a burden on host and storage administrators because of the number of endpoint configuration steps, and it significantly limited the scalability of NVMe-oF fabrics based on these transports.

To address the lack of automation with Ethernet, the Fabrics and Multi-Domain Subsystem subgroup of NVM Express recently completed Technical Proposal (TP) 8009 and TP 8010. These additions to the NVMe architecture define a new NVMe entity, the Centralized Discovery Controller (CDC), as well as a mechanism to automatically discover it.

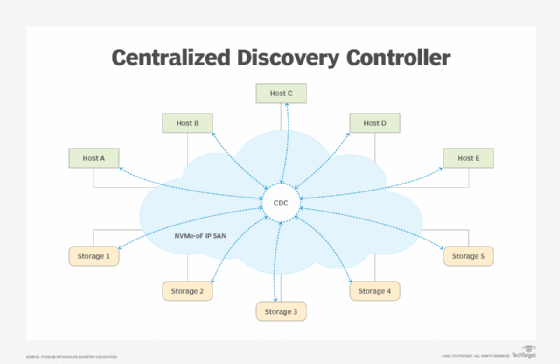

The CDC is accessible through one or more IP addresses from all NVMe hosts and subsystem storage interfaces of an NVMe-oF IP-based fabric. Each host and subsystem can discover the IP addresses of the CDC through multicast DNS (mDNS) and establish a long-lived TCP connection with it, as shown in the image.

This TCP connection is used by hosts and subsystems to register discovery information with the CDC and by hosts to discover the subsystems they are allowed to access. Hosts can discover subsystems by querying the CDC, similar to what hosts do today when they use Fibre Channel fabrics. Furthermore, TP 8010 defines a comprehensive zoning model for the CDC that enables a SAN administrator to specify fabric access control rules. In other words, the CDC implements centralized services equivalent to the Fibre Channel fabric services. This fills one of the most significant gaps of the NVMe-oF architecture.
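The register-then-query flow described above can be sketched as a small in-memory model. This is a toy under stated assumptions, not the TP 8010 protocol: the `ToyCDC` class, its method names, the NQNs and the addresses are all hypothetical, and a real CDC exchanges NVMe discovery commands over the TCP connection rather than Python method calls.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SubsystemPort:
    """One registered subsystem interface (illustrative fields only)."""
    subnqn: str
    traddr: str
    trsvcid: str = "4420"  # commonly used NVMe-oF I/O port; an assumption here


@dataclass
class ToyCDC:
    """Hypothetical stand-in for a Centralized Discovery Controller."""
    hosts: set = field(default_factory=set)
    subsystems: dict = field(default_factory=dict)  # subnqn -> [SubsystemPort]
    zones: dict = field(default_factory=dict)       # zone name -> set of NQNs

    def register_host(self, hostnqn):
        self.hosts.add(hostnqn)

    def register_subsystem(self, port):
        self.subsystems.setdefault(port.subnqn, []).append(port)

    def add_zone(self, name, members):
        self.zones[name] = set(members)

    def discover(self, hostnqn):
        """Return only the subsystem ports zoned with the querying host."""
        if hostnqn not in self.hosts:
            return []
        allowed = set()
        for members in self.zones.values():
            if hostnqn in members:
                allowed |= members
        return [port for nqn, ports in self.subsystems.items()
                if nqn in allowed for port in ports]


cdc = ToyCDC()
cdc.register_host("nqn.2014-08.org.example:host1")
cdc.register_host("nqn.2014-08.org.example:host2")
cdc.register_subsystem(SubsystemPort("nqn.2014-08.org.example:array1", "192.0.2.10"))
cdc.register_subsystem(SubsystemPort("nqn.2014-08.org.example:array2", "192.0.2.20"))
cdc.add_zone("prod", ["nqn.2014-08.org.example:host1",
                      "nqn.2014-08.org.example:array1"])

for port in cdc.discover("nqn.2014-08.org.example:host1"):
    print(port.subnqn, port.traddr, port.trsvcid)
```

In this model, `host2` is registered but belongs to no zone, so its query returns an empty list.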

An administrator can implement a CDC as a centralized process or as a distributed function across the switches of an Ethernet fabric, with both implementations having multiple redundancy options. Since the CDC is accessible through its IP address, it can be deployed on a single subnet or can encompass multiple networks. Theoretically, it could be located anywhere on the internet!

The NVMe architecture has also recently been enhanced on the security front. This includes the definition of the Diffie-Hellman Hash-based Message Authentication Code Challenge-Handshake Authentication Protocol, or DH-HMAC-CHAP (TP 8006), and the secure channel specification (TP 8011), which describes how to use Transport Layer Security (TLS) 1.3 to secure NVMe/TCP connections. The security architecture also supports concatenating the establishment of a TLS session to the completion of a DH-HMAC-CHAP transaction, using the DH-HMAC-CHAP session key as the pre-shared key needed to set up the TLS session. This enables NVMe-oF to use the secret provisioning scheme of the authentication protocol to also set up TLS secure channels, thereby avoiding the need to do a separate security provisioning for TLS.
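The idea of reusing the authentication result as the TLS pre-shared key can be illustrated with a deliberately simplified Python sketch. It is not the TP 8006 message format or key derivation: the Diffie-Hellman parameters are toy values that are far too small for real use, and the function names, the way the challenge and shared value are combined, and the `b"toy tls psk"` label are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets

# Toy Diffie-Hellman parameters, for illustration only (insecure).
P = 2**127 - 1
G = 3


def dh_keypair():
    private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
    return private, pow(G, private, P)


def dh_shared(private, peer_public):
    return pow(peer_public, private, P).to_bytes(16, "big")


def chap_response(secret, challenge, shared):
    """Simplified response: HMAC over the challenge and the DH shared value."""
    return hmac.new(secret, challenge + shared, hashlib.sha256).digest()


def derive_tls_psk(shared, challenge):
    """Simplified: turn the DH result into a session key, then into a PSK."""
    session_key = hashlib.sha256(shared).digest()
    return hmac.new(session_key, b"toy tls psk" + challenge,
                    hashlib.sha256).digest()


def authenticate(host_secret, controller_secret):
    """Run one toy exchange; return (host_psk, controller_psk) or None."""
    challenge = secrets.token_bytes(32)   # sent by the controller
    c_priv, c_pub = dh_keypair()          # controller's DH key pair
    h_priv, h_pub = dh_keypair()          # host's DH key pair

    host_shared = dh_shared(h_priv, c_pub)
    response = chap_response(host_secret, challenge, host_shared)

    controller_shared = dh_shared(c_priv, h_pub)
    expected = chap_response(controller_secret, challenge, controller_shared)
    if not hmac.compare_digest(response, expected):
        return None  # authentication failed, so no PSK is produced

    return (derive_tls_psk(host_shared, challenge),
            derive_tls_psk(controller_shared, challenge))


result = authenticate(b"provisioned-secret", b"provisioned-secret")
print("authenticated:", result is not None)
```

Only the one provisioned secret is configured on each side in this sketch. The TLS pre-shared key falls out of the authentication exchange instead of being provisioned separately.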

Fibre Channel provides similar security features in the FC Security Protocols 2 (FC-SP-2) standard; however, the security posture of Fibre Channel is quite different from that of NVMe over an IP-based network. Fibre Channel fabrics are deployed as isolated, air-gapped networks, and this isolation gives customers a perception of security, even if attacks are still possible through the management network. As a result, the security protocols defined in FC-SP-2 are not widely implemented and are infrequently deployed.

The situation is different with NVMe over an IP-based network. Here, security protocols are not a luxury; customers expect and require them to be there. While this implies that these protocols need to be deployed and managed, the results are much stronger from a security standpoint.

Given the performance-optimized nature of NVMe-oF, it is reasonable to wonder what the performance impact of these new extensions would be. Centralized discovery is a control protocol; therefore, it has no performance impact on data transfer rate or latency. DH-HMAC-CHAP authentication is also a control protocol and has negligible performance impact on both throughput and latency. The same cannot be said for running storage traffic over a TLS secure channel. The reason is that each TCP segment -- and hence every storage transfer -- must be encrypted or decrypted, and these security operations require a significant amount of processing power. The good news is that the amount of processing a platform needs to do to support TLS depends on the amount of hardware assist available.

Today, multiple platforms are available with different performance levels at different cost points:

- At the low end, NVMe/TCP software-only platforms can provide full support for an NVMe/TCP SAN at virtually no cost by using the Ethernet interfaces available on a platform.

- Hardware acceleration for security processing on the network interface card enables absorbing the performance impact of TLS for a moderate cost.

- At the high end, smart network interface cards (SmartNICs) or data processing units can completely offload the NVMe-oF processing from a platform, including both TCP termination and TLS support. A SmartNIC-based platform can provide full line-rate performance and even present all networked storage as locally attached storage to a system. Of course, this option has the highest cost.

All these features demonstrate that, when NVMe/TCP is used as a SAN technology, it can provide a flexible cost structure and support deployments ranging from cost-sensitive to maximum performance. This flexible cost structure, combined with comprehensive security support and the centralized discovery and zoning services provided by the CDC, makes NVMe/TCP a complete SAN platform. For latency-sensitive deployments, NVMe/RoCE is an additional option. Taken together, these capabilities make NVMe-oF over IP networks a compelling alternative to traditional Fibre Channel fabrics.

About the author

Dr. Claudio DeSanti is a member of the Storage Networking Industry Association (SNIA) Networking Storage Forum and is a distinguished engineer with Dell Technologies' Integrated Solutions and Ecosystem CTIO group, focused on NVMe-oF and edge computing configurations. The SNIA Networking Storage Forum drives the broad adoption and awareness of storage networking platforms.

SNIA is a not-for-profit global organization, made up of member companies spanning the storage market. As a recognized and trusted authority for storage leadership, standards and technology expertise worldwide, SNIA's mission is to lead the storage industry in developing and promoting vendor-neutral architectures, standards and educational services that facilitate the efficient management, movement and security of information.