We have talked about the complexities of modern hard disk drives (HDDs) and the innovations that go into them to continually increase capacity and performance. However, there is no question that solid state drives (SSDs) have also earned their place in the sun. SSDs bring speed, durability and flexibility to enterprise workloads such as AI, machine learning and edge applications. But SSDs are not a one-size-fits-all solution. We’ve compiled a list of important considerations to help make the choice of data center SSDs a little less daunting.

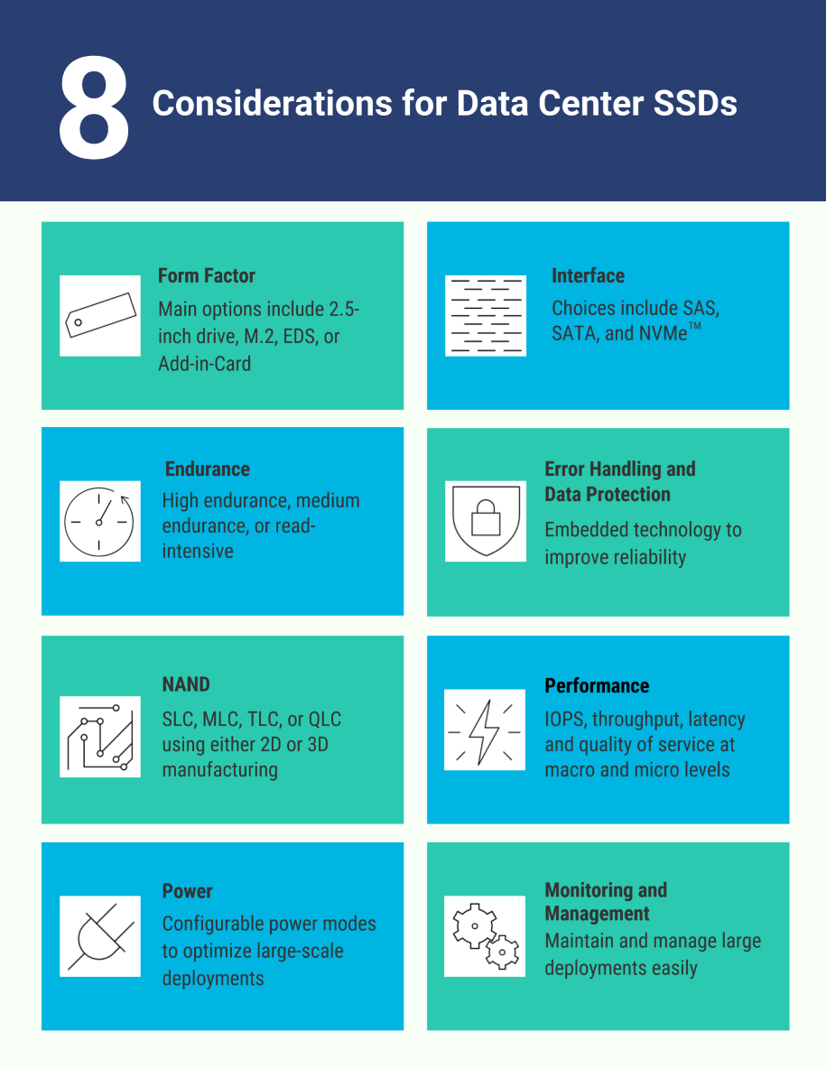

1. Form Factors

Because SSDs have no moving parts, they come in a wider variety of physical form factors than their hard drive counterparts. You may find the same SSD offered in several form factors with similar performance, so you can pick the one that works best with your infrastructure.

Why It’s Important: The form factor defines where the SSD fits, whether it is possible to replace it without powering down the server, and how many SSDs can be packed into a chassis. A wide array of form factors is available, from 2.5-inch drives to M.2, EDSFF, and add-in cards, each with specific strengths that vary depending on your needs.

We go into more detail on navigating the complexities of SSD form factors in this article.

2. Interface Options

The interface is the protocol that connects compute resources with storage. There are three interface options for SSDs today: SATA, SAS, and NVMe. NVMe is the newest protocol and is known for its low latency and high bandwidth, accelerating performance for enterprise workloads. SATA and SAS can support both SSDs and HDDs, while NVMe is generally an SSD-only protocol and is the interface of the future.

Why It’s Important: The interface, or protocol, refers to the electrical and logical signaling between the SSD and the CPU. It defines the SSD’s maximum bandwidth, minimum latency, expandability, and hot-swap capability. In other words, access to storage is influenced by the protocol.
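To make the bandwidth point concrete, here is a rough sketch of the ceiling each link imposes. The line rates and encodings used (6 Gb/s SATA and 12 Gb/s SAS with 8b/10b encoding, PCIe 3.0 and 4.0 lanes with 128b/130b encoding) are the commonly published figures rather than numbers from this article, and the function name is purely illustrative. Real drives deliver less, because protocol overhead is ignored here.

```python
def usable_mb_per_s(line_rate_gbps, payload_bits, coded_bits, lanes=1):
    """Upper bound on link throughput in MB/s (10^6 bytes per second).

    Only line-encoding overhead is removed; command and packet
    overhead are ignored, so real-world numbers are lower.
    """
    usable_bits = line_rate_gbps * 1e9 * lanes * payload_bits / coded_bits
    return usable_bits / 8 / 1e6


# (line rate per lane in Gb/s or GT/s, payload bits, coded bits, lanes)
LINKS = {
    "SATA 6 Gb/s": (6, 8, 10, 1),
    "SAS 12 Gb/s": (12, 8, 10, 1),
    "NVMe on PCIe 3.0 x4": (8, 128, 130, 4),
    "NVMe on PCIe 4.0 x4": (16, 128, 130, 4),
}

for name, args in LINKS.items():
    print(f"{name}: ~{usable_mb_per_s(*args):.0f} MB/s")
```

Even as an upper bound, the comparison shows why a four-lane NVMe connection has several times the headroom of a single SATA or SAS link.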

3. Endurance Considerations

Hard drives record ones and zeros in magnetic domains, which effectively allows an unlimited number of writes. By contrast, flash cells (the spots where data is recorded) move charges in and out of an insulator to store bits, and they can be written to only a finite number of times. The physical process of erasing and writing bits into flash, which can be performed from 100 to over 10,000 times, eventually destroys the cell’s ability to store bits. This is why flash drives have different endurance ratings and why flash error correction technologies matter.

Why It’s Important: Each SSD warranty allows for a limited amount of written data over its useful lifetime because the underlying flash supports only a finite number of erase and write cycles. Choosing an SSD with more endurance than a read-mostly application needs will unnecessarily increase costs, while choosing one with too little endurance for a write-heavy workload could result in premature application failure.
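Endurance is commonly quoted as drive writes per day (DWPD) or total terabytes written (TBW) over the warranty period. The sketch below shows how the two relate and how to compare a rating with a workload. The drive capacity, rating, and daily write volume are made-up example inputs, the function names are illustrative, and the calculation ignores write amplification inside the drive.

```python
def rated_tbw(capacity_tb, dwpd, warranty_years):
    """Total terabytes the warranty covers for a given DWPD rating."""
    return capacity_tb * dwpd * 365 * warranty_years


def required_dwpd(daily_writes_tb, capacity_tb):
    """Drive writes per day that a workload actually generates."""
    return daily_writes_tb / capacity_tb


# Hypothetical example: a 3.84 TB drive rated at 1 DWPD for 5 years,
# serving a workload that writes 2 TB of host data per day.
capacity_tb = 3.84
budget_tb = rated_tbw(capacity_tb, dwpd=1, warranty_years=5)
needed = required_dwpd(daily_writes_tb=2.0, capacity_tb=capacity_tb)

print(f"Warranty write budget: {budget_tb:.0f} TB")
print(f"Workload needs about {needed:.2f} DWPD")
```

If the workload's required DWPD sits well below the rating, a lower-endurance (and usually cheaper) drive may be enough. If it sits above the rating, the drive is likely to exhaust its write budget before the warranty period ends.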

4. Error Handling, Power Protection, and End-to-End Data Protection

SSDs are fast, but without proper enterprise-level data protection you can put your data at risk. The data protection guarantees of enterprise SSDs cover three main areas: NAND error handling, power-failure protection, and end-to-end data path protection. These areas are intended to prevent data loss or erroneous data retrieval at different stages of the SSD processing pipeline.

Why It’s Important: A true differentiator between consumer and enterprise SSDs is error case handling. Unexpected power failure, random bit flips in the controller or data path, and other flash errors can all cause data corruption—and there is a wide variance in how effectively, if at all, these conditions are covered.

5. NAND Types

The NAND cell is the most fundamental storage component in an SSD. One important metric is the number of bits stored per cell. This number dramatically influences endurance and the NAND cell array layout, which in turn can significantly impact density and costs.

Why It’s Important: SSDs today are built on flash cells, with a wide variety of implementations. These range from single-layer, single-bit-per-cell configurations to three-dimensionally stacked groups where each flash cell stores up to 16 different charge levels. Flash technology has evolved with density and durability increasing through multiple generations of SLC, MLC, TLC, and QLC. Understanding each NAND type’s strengths and weaknesses helps you choose an SSD with the appropriate lifetime and reliability for a particular application.
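The "16 different charge levels" figure follows directly from the bits-per-cell count: a cell that stores n bits must distinguish 2^n charge levels. A minimal sketch of that relationship, using the standard bit counts for each NAND type (the dictionary and function names are just for illustration):

```python
# Bits stored per cell for each common NAND type.
NAND_BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell):
    """Number of distinct charge levels a cell must hold to store n bits."""
    return 2 ** bits_per_cell


for nand_type, bits in NAND_BITS_PER_CELL.items():
    print(f"{nand_type}: {bits} bit(s) per cell, {charge_levels(bits)} charge levels")
```

Each added bit doubles the number of levels that must be told apart within the same cell, which is why higher bits-per-cell NAND trades endurance for density.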

6. Benchmarking

There is no one-size-fits-all approach to determining the true application performance of an SSD without actually running that application. But even when the application can be tested on a specific SSD, how can you predict the entire breadth of input possibilities (e.g., a web store’s peak load during Black Friday or Singles’ Day, or an accounting database’s year-end reconciliation)?

Why It’s Important: The desire for increased performance is often the first reason architects look at SSDs. Benchmarking real performance, however, is neither simple nor easy. The proper figures of merit must be obtained under a preconditioned, representative workload. This is a necessary step to understanding SSD performance in a specific application.
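As one illustration of a figure of merit beyond average throughput, the sketch below summarizes per-I/O latency samples with tail percentiles. It assumes you have already collected latencies (in microseconds) from a preconditioned run of a representative workload. The sample values and function names are hypothetical, and the percentile uses the simple nearest-rank method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize_latency(samples_us):
    """Mean, median, tail, and worst-case latency for one benchmark run."""
    return {
        "mean_us": sum(samples_us) / len(samples_us),
        "p50_us": percentile(samples_us, 50),
        "p99_us": percentile(samples_us, 99),
        "max_us": max(samples_us),
    }


# Hypothetical latencies: mostly fast I/Os with a few slow outliers.
latencies_us = [90] * 95 + [120] * 3 + [900, 2500]
print(summarize_latency(latencies_us))
```

Two drives with similar mean latency can differ widely at the 99th percentile, and the tail is often what determines whether an application meets its service-level targets.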

7. Power

All data center SSDs are specified with a default power envelope, usually within the interface requirements. For special workloads with hard limits for power consumption, such as when deployed in massive racks constrained by power or cooling, some data center SSDs can be configured to set a lower power usage limit. In this case, the SSDs power-throttle to reduce maximum energy consumption, often at the cost of performance. If you use a power-throttling configuration in your workload, you must verify the real performance impact by testing the SSDs with the power-throttling setting enabled.

Why It’s Important: SSDs often can be tuned in place to optimize power or performance envelopes. By intelligently utilizing these options, you can realize a significant data center-wide power savings or performance gain.

8. Monitoring and Management

Different interface technologies support different monitoring technologies. The basic monitoring technology, available in SATA, SAS, and NVMe interfaces, is called SMART (Self-Monitoring, Analysis, and Reporting Technology). It provides a monitoring tool for checking basic health and performance of a single drive.

Why It’s Important: Deploying SSDs is relatively easy; however, as more SSDs are installed, using tools that can monitor health, performance, and utilization from a centralized platform will save time and reduce stress.
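A centralized health check can be as simple as applying the same thresholds to every drive's reported attributes. The sketch below assumes the attributes have already been collected into records. The field names are loosely modeled on NVMe health log attributes (percentage used, available spare, temperature), while the record type, the thresholds, and the serial numbers are hypothetical placeholders rather than vendor recommendations.

```python
from dataclasses import dataclass


@dataclass
class DriveHealth:
    serial: str
    percentage_used: int   # estimated wear, in percent of rated life
    available_spare: int   # remaining spare capacity, in percent
    temperature_c: int     # reported drive temperature


def drives_needing_attention(fleet, max_used=80, min_spare=10, max_temp_c=70):
    """Return {serial: [reasons]} for drives outside the (hypothetical) limits."""
    flagged = {}
    for drive in fleet:
        reasons = []
        if drive.percentage_used >= max_used:
            reasons.append(f"wear at {drive.percentage_used}%")
        if drive.available_spare <= min_spare:
            reasons.append(f"spare down to {drive.available_spare}%")
        if drive.temperature_c >= max_temp_c:
            reasons.append(f"temperature {drive.temperature_c} C")
        if reasons:
            flagged[drive.serial] = reasons
    return flagged


# Hypothetical fleet snapshot.
fleet = [
    DriveHealth("SSD-A", percentage_used=12, available_spare=100, temperature_c=41),
    DriveHealth("SSD-B", percentage_used=85, available_spare=60, temperature_c=45),
    DriveHealth("SSD-C", percentage_used=30, available_spare=8, temperature_c=73),
]
print(drives_needing_attention(fleet))
```

Running one check across the whole fleet, instead of inspecting drives one at a time, is the kind of time saving a centralized monitoring platform provides.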

Bottom Line Recommendations for Data Center SSDs

Enterprise SSDs have completely revolutionized the data center. While hard drives still have a bright future in data centers for storing massive amounts of data at scale with the best TCO, the unparalleled latency and bandwidth advantage of SSDs for high-speed databases and other applications is undeniable.

Choosing the right SSD for a specific deployment can be difficult, as there is a spectrum of enterprise-class SSDs that spans the gamut of price, performance, form factor, endurance, and capacity. When evaluating data center SSDs, look beyond the simple IOPS or bandwidth numbers to match performance to your needs. Consider quality of service to ensure your application SLAs can be met, mixed workload performance to better match real-life workloads, and form factor to assist in hot-swap or fail-in-place architectures.

Click here to learn more about Western Digital’s SSD offerings and how they best fit into your enterprise.