With NVMe-oF, who needs rack-scale PCIe?

Nonvolatile memory express (NVMe) over networks is winning out as the best way to offer shared, flash-based storage systems, with Dell EMC, NetApp, Pure Storage, Western Digital and startups in the game.

Just a few years ago, before the emergence of the NVMe over Fabrics (NVMe-oF) specification, the future data center in my crystal ball was based on a rack-scale, switched Peripheral Component Interconnect Express (PCIe) fabric. I figured that OCuLink provided a practical standard for the necessary cables and connectors, while merchant PCIe switch chips from Broadcom or IDT would let Dell, Hewlett Packard Enterprise or a bunch of startups build a rack-scale system without even programming a field-programmable gate array, let alone spinning an ASIC.

Sure, NextIO Inc. and VirtenSys Ltd. had tried, unsuccessfully, to externalize PCIe a decade ago, using it to let a rack full of servers share a few expensive 10 Gbps Ethernet cards, Fibre Channel (FC) host bus adapters (HBAs) or RAID controllers. Sharing expensive peripherals, such as disk storage and laser printers, was, after all, one of the original justifications for networks from LANs to SANs.

The problem with their approach, to my mind, was that NextIO and VirtenSys products ended up costing almost as much as just putting a network interface card (NIC) and an HBA in every server. Meanwhile, sharing those I/O cards reduced their value, since each host got only one-half or one-quarter of the bandwidth.

Then DSSD arrived on the scene, with Andy Bechtolsheim, Jeff Bonwick and Bill Moore leading a well-funded, all-star cast that EMC acquired in 2014. DSSD's approach was to share a block of flash across the hosts in a rack, which looked like a much better idea. Fusion-io cards and the other PCIe SSDs of the time were much more expensive than NICs and HBAs, but the real difference was that shared storage is much more valuable than the isolated puddles of ultralow latency that PCIe SSDs provided.

DSSD wasn't just a way to share SSDs; it was a shared storage system that could deliver 100-microsecond (µs) latency when all-flash array vendors were bragging about 1-millisecond latency. To get 100 µs latency, I figured users would put up with having to load a kernel driver.

But while EMC was touting DSSD as the best thing since System/360, a group of pioneering startups that included Apeiron Data Systems, E8 Storage, Excelero and Mangstor demonstrated NVMe over networks. These systems added just 5 to 20 µs to the 75 to 80 µs latency of NVMe SSDs, matching DSSD's magic 100 µs latency over Ethernet.
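The latency arithmetic in that paragraph can be checked in a few lines of Python. This is a minimal sketch that simply sums the best-case and worst-case figures cited above, treating device and network latency as additive, which is a simplification; the constant names and the `end_to_end_range` helper are illustrative, not taken from any vendor's tooling.

```python
# Rough latency budget for NVMe over a network, using the figures cited above.
# Assumption: end-to-end latency is simply device latency plus network overhead.
SSD_LATENCY_US = (75, 80)      # (best, worst) NVMe SSD latency, microseconds
FABRIC_OVERHEAD_US = (5, 20)   # (best, worst) latency added by the network path
DSSD_TARGET_US = 100           # the 100 µs figure DSSD was known for


def end_to_end_range(ssd, fabric):
    """Return (best, worst) end-to-end latency by summing matching bounds."""
    return ssd[0] + fabric[0], ssd[1] + fabric[1]


best, worst = end_to_end_range(SSD_LATENCY_US, FABRIC_OVERHEAD_US)
print(f"{best}-{worst} µs, within {DSSD_TARGET_US} µs: {worst <= DSSD_TARGET_US}")
```

Even the worst case lands at the 100 µs mark, which is why the startups could claim parity with DSSD without custom PCIe hardware.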

In 2017, with NVMe-oF on the horizon, Dell EMC wisely decided to shutter the DSSD platform. Few customers wanted to pay for expensive custom PCIe hardware when they could get the same latency at a fifth of the cost.

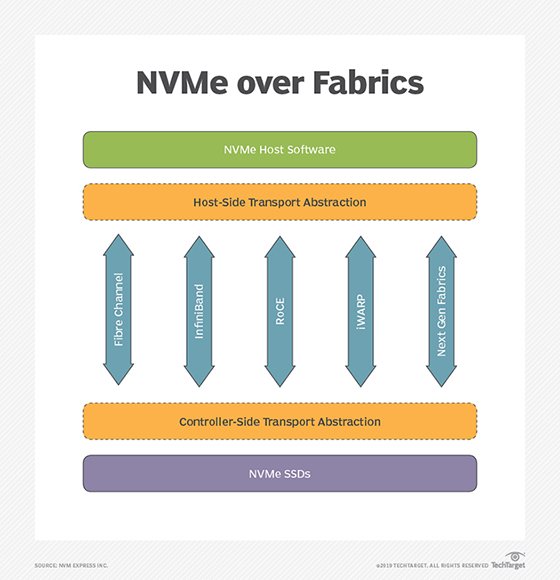

Today, most of those startups, along with industry titans like Dell EMC, NetApp, Pure Storage and Western Digital, are shipping storage systems that support the NVMe-oF protocol. These systems deliver 100 µs-class latency over the standard Ethernet and FC network gear customers already use. Many of them use NVMe-oF both as a front-end storage access protocol between hosts and storage systems, the role iSCSI and FC have traditionally played, and as a replacement for SAS as the back-end connection between storage systems and external media shelves.

If NVMe-oF is becoming the standard storage protocol for host-to-array and array-to-shelf communications, is there a place for rack-scale PCIe? Or was rack-scale PCIe a technology like the dirigible, autogyro and digital watch that went from futuristic to quaint without a real stop at modern?

Composable infrastructure supplier Liqid and the Gen-Z Consortium are talking up a rack-scale infrastructure that shares not just storage or I/O cards but also GPUs and memory. That's a story for another day.