
Ceph vs. Swift: Two object storage systems for different needs

Some cloud object storage experts argue that Ceph is better than Swift, but that claim doesn't hold in general. Both options can work; which one fits depends on your workload.

In the Ceph vs. Swift debate, Ceph offers more flexibility in accessing data and storage information, but that doesn't mean it's a better object storage system than Swift.

Swift and Ceph both deliver object storage: they chop data into binary objects and replicate the pieces across storage nodes. With both Ceph and Swift, the object stores are created on top of a Linux file system. In many cases, that is XFS, but it can be another Linux file system.

Also, both Ceph and Swift were built with scalability in mind, so it's easy to add storage nodes as needed.

That is where the Ceph vs. Swift similarities end.

Accessing data

Swift was developed by Rackspace to offer scalable storage for its cloud. Because it was developed with cloud in mind, its main access method is a RESTful API over HTTP. Applications can address Swift directly (bypassing the OS) and commit data to Swift storage. That is very useful in a purely cloud-based environment, but it also complicates accessing Swift storage outside the cloud.
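To make the RESTful access method concrete, here is a minimal sketch of how an application might build a request that stores one object. It assumes Swift's `/v1/{account}/{container}/{object}` path layout and token authentication through the `X-Auth-Token` header. The endpoint, account, container and token values are hypothetical, and the request is only constructed, not sent.

```python
from urllib.parse import quote
from urllib.request import Request


def build_swift_put(endpoint: str, account: str, container: str,
                    obj: str, token: str, data: bytes) -> Request:
    """Build (but do not send) an HTTP PUT that would store one object."""
    # Account and container are single path segments, so escape everything.
    prefix = "/".join(quote(part, safe="") for part in (account, container))
    # Object names may contain slashes; quote() leaves "/" unescaped by default.
    url = f"{endpoint.rstrip('/')}/v1/{prefix}/{quote(obj)}"
    return Request(url, data=data, method="PUT",
                   headers={"X-Auth-Token": token})


# Hypothetical values, for illustration only.
req = build_swift_put("https://swift.example.com", "AUTH_demo", "backups",
                      "2024/db.dump", "hypothetical-token", b"payload")
print(req.get_method(), req.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it to a real cluster. No file system mount or block device is involved, which is why this model suits cloud applications but is awkward for software that expects a local disk.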

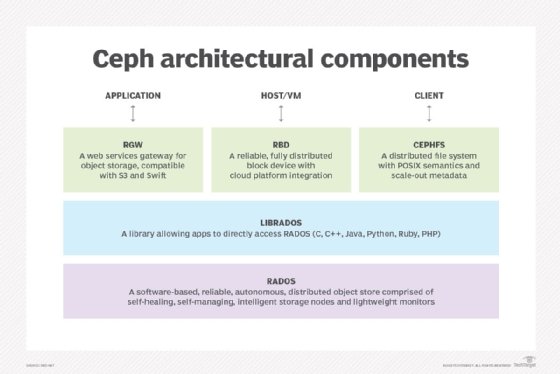

From the beginning, Ceph developers made it a more open object storage system than Swift. Ceph has four access methods:

- Amazon S3-compatible RESTful API access through the RADOS Gateway: This makes Ceph comparable to Swift, but also to anything in an Amazon S3 cloud environment. The S3 API has become a de facto standard for cloud object storage, and the gateway can also expose a Swift-compatible API.

- CephFS: This is a POSIX-compliant file system that runs on top of any Linux distribution so the OS can access Ceph storage directly.

- RADOS Block Device (RBD): RBD is a Linux kernel-level block device that allows users to access Ceph like any other Linux block device.

- iSCSI gateway: This is an addition to the Ceph project that was made by SUSE. It allows administrators to run an iSCSI gateway on top of Ceph, which turns it into a SAN that any OS can access.

When assessing Ceph vs. Swift, remember that Ceph offers many more ways to access the object storage system. Because of that, it's more usable and flexible than Swift.

Accessing storage information

Another way that Ceph is radically different from Swift is how clients access the object storage system. In Swift, the client must contact a Swift proxy server, which acts as the gateway to the cluster and creates a potential single point of failure. To solve this problem, many Swift environments implement high availability for the proxy layer.

Ceph runs an object storage daemon (OSD) on every storage node. A Ceph client obtains the cluster map, which describes the storage topology, from the Ceph monitors and uses the CRUSH algorithm to calculate which OSDs hold the binary objects that make up the original data. Because that calculation runs on the client, which then talks to the OSDs directly, Ceph's access to storage doesn't have a single entry point. This makes it more flexible than Swift.
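The idea that a client can locate data on its own, without asking a central gateway, can be illustrated with a short sketch. This is not Ceph's actual placement algorithm; it uses plain highest-random-weight (rendezvous) hashing as a simplified stand-in, and the OSD names and object name are hypothetical.

```python
import hashlib


def place(obj_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` OSDs for an object using rendezvous hashing."""
    def score(osd: str) -> int:
        digest = hashlib.sha256(f"{obj_name}:{osd}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    # Any client holding the same OSD list ranks the OSDs identically,
    # so no lookup service is needed to agree on where an object lives.
    return sorted(osds, key=score, reverse=True)[:replicas]


cluster_map = [f"osd.{i}" for i in range(6)]  # hypothetical topology
client_a = place("vm-disk-0001", cluster_map)
client_b = place("vm-disk-0001", list(reversed(cluster_map)))
print(client_a == client_b)
```

Both hypothetical clients arrive at the same list of storage daemons independently, which is the property that removes the single entry point.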

Ceph vs. Swift: How use cases break down

There are fundamental differences in the way Ceph and Swift are organized, but that doesn't mean one is better than the other.

Both are healthy, open source projects that are actively used by customers around the world; organizations use Ceph and Swift for different reasons. Ceph performs well in single-site environments that interact with virtual machines, databases and other data types that need a high level of consistency. Swift is a better match for very large environments that deal with massive amounts of data. That difference is a direct result of how both object storage systems handle data consistency in their replication algorithms. Ceph data is strongly consistent across the cluster, whereas Swift data is eventually consistent, which means it may take some time before data is synchronized across the cluster.
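The practical effect of the two consistency models can be shown with a deliberately simplified toy model. It does not reproduce either system's replication algorithm; the `Replicas` class and its method names are hypothetical and exist only to show what a reader of a lagging replica would see.

```python
class Replicas:
    """Three in-memory copies of one object (toy model)."""

    def __init__(self, count: int = 3):
        self.copies = [None] * count
        self.pending = []  # replica indexes still waiting for the latest write

    def write_strong(self, value):
        # Acknowledge only after every replica holds the new value.
        self.copies = [value] * len(self.copies)
        self.pending = []

    def write_eventual(self, value):
        # Acknowledge after the first replica; the others catch up later.
        self.copies[0] = value
        self.pending = list(range(1, len(self.copies)))

    def sync(self):
        # Background pass that brings lagging replicas up to date.
        for i in self.pending:
            self.copies[i] = self.copies[0]
        self.pending = []

    def read(self, replica: int):
        return self.copies[replica]


strong = Replicas()
strong.write_strong("v2")

eventual = Replicas()
eventual.write_strong("v1")    # start from a fully replicated old version
eventual.write_eventual("v2")  # new version lands on one replica first

print(strong.read(2), eventual.read(2))  # prints: v2 v1
eventual.sync()
print(eventual.read(2))                  # prints: v2
```

A virtual machine disk or database cannot tolerate reading the stale `v1`, which is why such workloads favor strong consistency. A large archive of independent objects usually can, and in exchange the write does not have to wait for distant replicas.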

The bottom line in the Ceph vs. Swift debate is that neither of the two object storage systems is better than the other; they serve different purposes, so both will persist.