SwiftStack adds a disk object-based AI reference architecture

SwiftStack's reference architecture for AI and machine learning bundles Nvidia servers for compute and disk-based Cisco or Dell EMC servers to scale object storage.

Object storage specialist SwiftStack is adding a software twist to the list of storage vendors partnering with Nvidia on reference architectures designed for AI and machine learning deployments.

Array vendors Dell EMC, IBM, NetApp and Pure Storage have already partnered with supercomputer maker Nvidia. Unlike those hardware vendors, SwiftStack sells only software and doesn't stipulate flash hardware in its AI reference architecture.

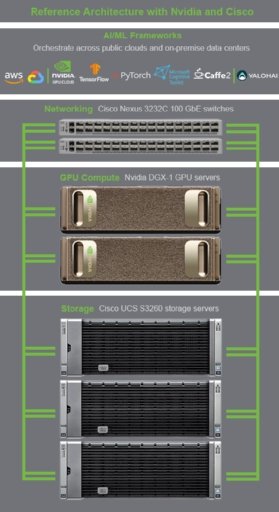

The new reference architecture consists of Nvidia DGX-1 GPU servers to host the AI and machine learning software, Cisco UCS S3260 servers equipped with spinning disks to run the object storage software and Cisco Nexus 3232C switches for networking via 100 Gigabit Ethernet.

SwiftStack is making available its AI reference architecture through reseller and systems integrator GPL Technologies, which also partners with Cisco, Dell EMC and Nvidia. GPL put together additional entry-level four-node or six-node AI and machine learning options that bundle disk-based Dell EMC R740xd servers for the SwiftStack object storage nodes, Dell EMC S4148 switches and Nvidia DGX-1 GPU servers for the AI and machine learning workloads.

"We've deployed other storage systems with DGX that work just fine, and there are many reference architectures out there. But we like that SwiftStack delivers solid performance in a software-defined solution that is flexible enough to run on commodity hardware in a variety of configurations," said Jason Blum, CTO of GPL, based in Burbank, Calif. GPL's other AI and machine learning installations use Pure Storage FlashBlades or Dell EMC Isilon F800 all-flash NAS storage, Blum said.

AI reference architecture with disk-based object store

Disk-based object storage is slower than all-flash systems. But Shailesh Manjrekar, head of AI and machine learning product and solution marketing at SwiftStack, based in San Francisco, said an object store can achieve high throughput if the user scales out with enough spindles and pipes to feed the massively parallel GPU layer with data. The big Nvidia GPU compute boxes running the AI and machine learning software have enough memory and flash to make latency a nonissue, he said.

SwiftStack claims its AI reference architecture delivered 100 GBps of throughput using 4,000 GPU cores and 15 PB of storage for an autonomous vehicle customer running the Nvidia GPU Cloud, or NGC. Manjrekar said the SwiftStack system ingests 1 PB of data per week from each of the autonomous vehicle's sensors, radar and laser-based lidar.

"That's how large of a scale we're talking about. You cannot build anything like that using a flash-based storage system," he said.

Less expensive to scale

Amita Potnis, a research manager in IDC's enterprise infrastructure practice, said all-flash arrays are more expensive than SwiftStack's disk-based object storage. Vendors such as NetApp might offer object storage as a secondary tier, but they use all-flash products for primary storage, she said. SwiftStack's software approach tends to be a bit easier for users who want to start small and scale to potentially hundreds of petabytes, Potnis said.

Object storage scales out through the addition of commodity server nodes. SwiftStack supports S3 and Swift object APIs and NFS and SMB for file-based data ingest and access. The company's 1space technology creates a single namespace that can span an on-premises object storage cluster and one or more public clouds for data management and migration. Customers can tag or label the data they ingest with extensive metadata and search it through the product's integration with Elastic's Elasticsearch.

On the AI and machine learning side of the equation, SwiftStack supports the Nvidia's NGC container registry, facilitating access to software such as Caffe, MXNet, PyTorch and TensorFlow. It offers direct support for TensorFlow, which can talk to object storage through S3-based APIs. The vendor also supports Valohai's Deep Learning Management PaaS AI and machine learning framework.

Manjrekar said SwiftStack partnered with GPL to enable customers to focus strictly on their business outcomes and give them a "single-throat-to-choke" support model. The two companies will show off the new AI and machine learning reference architecture at this week's Nvidia GPU Technology Conference in San Jose, Calif.

Nvidia has forged a series of storage partnerships over the past year. To coincide with the conference, Pure Storage this week expanded its AI-ready infrastructure with a hyperscale configuration, using Nvidia DGX-1 and DGX-2 systems, Mellanox InfiniBand and Ethernet networking technology, and a new FlashStack for AI option that uses Cisco UCS C480 ML servers and FlashBlade storage.

Dell EMC has AI and machine learning reference architectures with PowerEdge and Nvidia DGX servers and its all-flash Isilon file storage. IBM packages Nvidia server with its Spectrum Scale parallel file system and flash storage. Other AI and machine learning options include NetApp's AI OnTap Converged Infrastructure and DataDirect Networks A³I platform with Nvidia DGX servers.

"At the end of the day, you don't need a reference architecture. You can plug a DGX into a lot of fast storage, and as long as you have the know-how, you can make it work," GPL's Blum said. "The reference architectures just give you some assurance that you have a validated system that's going to do what you expect. It's one less thing to worry about."