What you need to know about flash caching

Using solid-state storage as cache can boost server and application performance dramatically, but the kind of flash cache you choose is critical.

Flash storage devices offer an effective way to eliminate data storage performance problems, especially when they're installed on the server where the application resides. Using flash as a cache for that application's data delivers that acceleration automatically, and it can speed up both internal server storage and storage on a shared storage network. The challenge facing IT professionals is determining which flash caching alternative is best.

There are three primary types of flash caching that you should consider when you need to improve storage performance:

- File-level caching

- Block-level caching

- Aggregated caching

Each approach has its pros and cons; this article describes each one so you can select the right caching solution for your environment.

Products for all these approaches typically install on a server that has access to flash-based storage, either internal to the server or on a storage network. The most common use case is putting flash storage inside the server, often referred to as server-side flash.

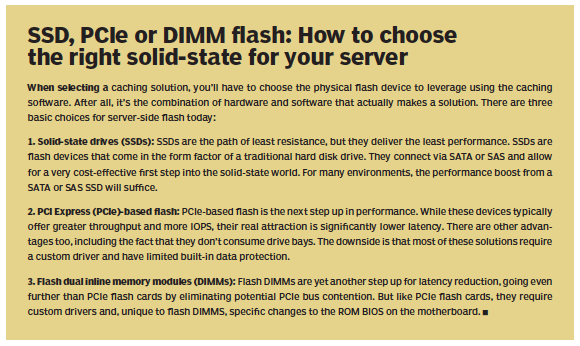

All three of these caching solutions can leverage hard drive form-factor solid-state drives (SSDs), PCI Express (PCIe)-based flash cards or the emerging dual inline memory module (DIMM)-based flash devices installed in a server's memory sockets. Most of these solutions will also support flash storage on a dedicated storage network. Typically, the only requirement is for the flash to be presented to the software as a block device.

File-level flash caching

As its name implies, file-level caching software operates at the file level within the operating system or application. These products automatically analyze and rate specific files to measure their cache worthiness, and most accelerate the entire file rather than just a portion of it.
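To make the idea of rating files for cache worthiness concrete, here is a minimal sketch in Python. It assumes a simple scoring rule (reads per MiB of file size) and lets an administrator pin files manually; the class and method names (`FileLevelCache`, `record_read`, `rebalance`) are hypothetical, and real products use their own, usually proprietary, heuristics. Admission is all or nothing per file, matching the whole-file behavior described above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    size_bytes: int
    reads: int = 0


class FileLevelCache:
    """Hypothetical file-level cache: rates files and caches whole files only."""

    def __init__(self, capacity_bytes: int, pinned: set[str] | None = None):
        self.capacity_bytes = capacity_bytes
        self.pinned = set(pinned or ())  # files an administrator designated manually
        self.stats: dict[str, FileStats] = {}
        self.cached: set[str] = set()

    def record_read(self, path: str, size_bytes: int) -> None:
        """Count one read of `path`, as observed from inside the guest OS."""
        st = self.stats.setdefault(path, FileStats(path, size_bytes))
        st.size_bytes = size_bytes
        st.reads += 1

    def score(self, st: FileStats) -> float:
        # Assumed rule: reads per MiB, so small hot files outrank large cold ones.
        return st.reads / max(st.size_bytes / 2**20, 1e-9)

    def rebalance(self) -> set[str]:
        """Choose which whole files occupy flash and return that set."""
        used = 0
        chosen: set[str] = set()
        # Pinned files come first, then the rest in descending score order.
        ordered = sorted(
            self.stats.values(),
            key=lambda s: (s.path not in self.pinned, -self.score(s)),
        )
        for st in ordered:
            # A file is cached entirely or not at all.
            if used + st.size_bytes <= self.capacity_bytes:
                chosen.add(st.path)
                used += st.size_bytes
        self.cached = chosen
        return chosen
```

For example, with 10 MiB of flash, a pinned 4 MiB redo log plus a heavily read 4 MiB index would fit, while a rarely read 8 MiB archive file would stay on disk.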

A file-level caching solution typically cannot be installed at the hypervisor level in a virtualized environment, so it can't provide caching services to multiple virtual machines (VMs) from a single instance of the software. Instead, it must be installed in the guest operating system of each VM you want to accelerate. File-level caching can also be installed on bare-metal, non-virtualized servers.

The key requirement is that each instance of the software be given a dedicated block device made up of some form of flash.

These requirements mean managing multiple instances of caching software and, in virtualized environments, subdividing the internal server SSD into potentially many independent block devices. The reward is very efficient application acceleration: flash capacity isn't wasted on data that happens to qualify for the SSD tier only temporarily.

File-level caching products tend to be used in application-specific environments where the files that need acceleration, such as database logs and indexes, can be selected individually. As a result, manually choosing which files the cache should analyze is common with these solutions.

The result is that file-level caching delivers excellent acceleration of a few mission-critical files without requiring excessive flash capacity. But to achieve that level of efficiency, you need to understand which files should be designated as cache worthy long before the flash hardware and software are purchased.

Block-level flash caching

Block-level caching solutions operate at the block level, so they don't (and can't) take into account the file or application generating the I/O. Instead, they simply look for the most active blocks of data and accelerate them, regardless of where those blocks come from.
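A block-level cache can be sketched the same way, except that the only key it sees is a device and block number. The sketch below assumes a plain access-frequency count and a periodic promotion step; `BlockLevelCache`, `on_io` and `promote` are hypothetical names, and shipping products typically combine recency and frequency in more elaborate ways.

```python
from collections import Counter


class BlockLevelCache:
    """Hypothetical block-level cache: keeps the most frequently accessed blocks,
    with no knowledge of which file or application issued the I/O."""

    def __init__(self, capacity_blocks: int):
        self.capacity_blocks = capacity_blocks
        self.heat: Counter = Counter()  # (device, block_number) -> access count
        self.cached: set = set()

    def on_io(self, device: str, block: int) -> bool:
        """Record one access and report whether it was a cache hit."""
        key = (device, block)
        self.heat[key] += 1
        return key in self.cached

    def promote(self) -> None:
        """Refill flash with the hottest blocks, wherever they came from."""
        self.cached = {key for key, _ in self.heat.most_common(self.capacity_blocks)}
```

Because every block competes on access count alone, any busy data set on the host, important or not, can claim flash capacity.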

Unlike file-level caching, block-level caching is ideal for virtualized environments where you want to deploy one caching solution that accelerates performance across all the VMs on a server. The advantage of this approach is that only one instance of the caching software needs to be implemented per physical host. The downside is that, for the most part, all data on that host is treated equally, so any active data set will consume flash capacity.

Some block-level products are now adding intelligence so that I/O can be tracked per VM. With that capability, you can configure the caching software to pin certain VMs into cache or to always exclude certain VMs. But this optimization is an all-or-nothing proposition at the VM level; the block-level caching solution can't peer into a VM to accelerate only certain files within it.
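Per-VM tracking can be modeled by adding the VM's identity to the cache key. In this hypothetical sketch (`VmAwareBlockCache` and its parameters are illustrative, not any vendor's API), a pinned VM's blocks are admitted ahead of hotter blocks from other VMs, and an excluded VM is never cached. Nothing in the key identifies a file, which is why per-file policy inside a VM stays out of reach.

```python
from collections import Counter


class VmAwareBlockCache:
    """Hypothetical block-level cache that tags each I/O with the issuing VM.
    Policy is per VM only; files inside a VM stay invisible to the cache."""

    def __init__(self, capacity_blocks: int, pinned_vms=(), excluded_vms=()):
        self.capacity_blocks = capacity_blocks
        self.pinned_vms = set(pinned_vms)
        self.excluded_vms = set(excluded_vms)
        self.heat: Counter = Counter()  # (vm, device, block) -> access count
        self.cached: set = set()

    def on_io(self, vm: str, device: str, block: int) -> bool:
        """Record one access and report whether it was a cache hit."""
        if vm in self.excluded_vms:
            return False  # excluded VMs are neither tracked nor cached
        key = (vm, device, block)
        self.heat[key] += 1
        return key in self.cached

    def promote(self) -> None:
        # Pinned VMs' blocks are admitted first, then all others by access count.
        ranked = sorted(
            self.heat.items(),
            key=lambda kv: (kv[0][0] not in self.pinned_vms, -kv[1]),
        )
        self.cached = {key for key, _ in ranked[: self.capacity_blocks]}
```

With room for a single block, a pinned `sql01` block wins even if `web01` issued more reads, and an excluded `backup01` VM never appears in cache.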

VM migration and server-side caching

Both file- and block-level caching products face a challenge in virtualized environments when it comes to virtual machine migration. If a VM is moved from one physical server to another, the caching software must intercept that migration and invalidate the cache before the migration actually occurs. (Cache invalidation is the process of emptying the contents of a cache.)
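The ordering matters: invalidation has to finish before the hypervisor moves the VM, so that no stale copy of the VM's data is left behind on the source host. The sketch below assumes a cache keyed by `(vm, block)` and a hypothetical `do_migration` callback standing in for the hypervisor's move operation; how products actually hook migration events is vendor- and hypervisor-specific.

```python
class HostCache:
    """Hypothetical per-host cache whose entries are keyed by (vm, block)."""

    def __init__(self, host: str):
        self.host = host
        self.entries: dict = {}  # (vm, block) -> cached data

    def invalidate_vm(self, vm: str) -> int:
        """Empty every entry belonging to `vm`; return how many were dropped."""
        stale = [key for key in self.entries if key[0] == vm]
        for key in stale:
            del self.entries[key]
        return len(stale)


def migrate_vm(vm: str, source: HostCache, do_migration) -> None:
    """Intercept a migration: invalidate the source cache first, then move the VM."""
    source.invalidate_vm(vm)  # must complete before the move starts
    do_migration(vm)          # hypothetical stand-in for the hypervisor's move
```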

At a bare minimum, most caching products on the market ensure the cache is invalidated prior to VM movement. The concern, though, is how that cache is rebuilt when the VM reaches its destination server. This is where file-level caching has a distinct advantage. Since the caching software is installed in the guest operating system, the policies on the specific files to be accelerated follow the VM and those files can be quickly reloaded into cache after the VM is migrated.

Block-level caches must rebuild the cache analytics from scratch when the VM reaches the destination host server. That means that while data requalifies for cache worthiness, performance is bound by the speed of hard disk drives (HDDs). In some cases, it can take several days for the right data to be placed back into the cache on the new server.

A few block-level solutions can transmit the cache analytics to the destination host when a migration occurs. Obviously, that requires the same caching software on each host, but that would be typical. Because the cache analytics travel with the VM as it's migrated, the caching software on the receiving host can immediately copy the right blocks for that VM into its cache area. Performance will still be HDD-bound while this happens, but the process should be fast, typically taking just minutes.
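Transferring analytics amounts to shipping a list of the VM's hottest block addresses, not the data itself. In this sketch, the source exports the top entries from its access counts and the destination reads those blocks from HDD-backed shared storage into local flash right away. The function names and the `read_from_backing_store` callback are hypothetical.

```python
from collections import Counter


def export_analytics(heat: Counter, vm: str, top_n: int) -> list:
    """Source host: the VM's hottest (vm, block) keys, most active first."""
    vm_heat = Counter({key: n for key, n in heat.items() if key[0] == vm})
    return [key for key, _ in vm_heat.most_common(top_n)]


def prewarm(hot_keys: list, read_from_backing_store, cache: dict) -> None:
    """Destination host: load the known-hot blocks into local flash immediately
    instead of waiting for them to requalify through new access counts.
    These reads still come from HDD-backed shared storage."""
    for key in hot_keys:
        cache[key] = read_from_backing_store(key)
```

The destination skips the slow requalification phase because it already knows which blocks to fetch; only the copy itself is limited by HDD speed.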

Aggregated caching solutions

The third flash caching alternative is an aggregated caching solution. These products solve the migration issue by combining flash resources internal to the servers into a virtual but shared pool of storage. They also provide better resiliency since data protection schemes similar to RAID can be implemented. The combination of being migration-friendly and resilient makes these aggregated solutions ideal for both read and write caching.
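As an illustration of a RAID-like scheme spread across hosts, the sketch below uses RAID 5-style XOR parity: two hosts each hold a chunk of a stripe, a third holds their parity, and either data chunk can be rebuilt if its host fails. This is an assumed scheme chosen for illustration; individual products may mirror data or use other layouts instead.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    if len(a) != len(b):
        raise ValueError("chunks in a stripe must be the same size")
    return bytes(x ^ y for x, y in zip(a, b))


def parity(chunks: list[bytes]) -> bytes:
    """Parity chunk for a stripe, computed the way RAID 5 does it."""
    return reduce(xor_bytes, chunks)


def rebuild(surviving: list[bytes], parity_chunk: bytes) -> bytes:
    """Recover the single missing chunk from the surviving chunks plus parity."""
    return reduce(xor_bytes, surviving, parity_chunk)
```

Losing any one host's flash then costs a rebuild rather than the cached data, which is what makes write caching on the shared pool safer than on a single server's flash.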

These products work by installing the cache aggregation software on all the hosts in the virtual cluster, where it performs two functions: aggregating flash resources, and providing intelligence to each host about how best to use that aggregated flash pool as a cache. This intelligence is similar to the caching algorithms used by file- and block-level cache solutions.

At least three (but typically more) of the hosts in the cluster need to contribute flash resources, which are then aggregated into a virtual pool of flash storage that acts as a cache layer in front of the legacy storage layer. It's important to note that while a minimum of three hosts must contribute flash, not every host in the cluster needs to. Most of these cache aggregation software products allow any host connected to the cluster to access the shared pool of flash, whether or not it contributes capacity.
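The pooling rule can be sketched as follows, assuming the three-host minimum described above applies to hosts that contribute flash. `Host`, `FlashPool` and `build_pool` are hypothetical names, the capacities are illustrative whole-gigabyte figures, and a real product would also handle data placement and protection across the pool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    flash_gb: int = 0  # 0 = participates in the cluster without contributing flash


@dataclass
class FlashPool:
    total_gb: int
    contributors: list[str]
    consumers: list[str] = field(default_factory=list)


def build_pool(hosts: list[Host], min_contributors: int = 3) -> FlashPool:
    """Aggregate contributed flash into one shared pool that every host can use."""
    contributors = [h for h in hosts if h.flash_gb > 0]
    if len(contributors) < min_contributors:
        raise ValueError(
            f"need at least {min_contributors} flash-contributing hosts, "
            f"got {len(contributors)}"
        )
    return FlashPool(
        total_gb=sum(h.flash_gb for h in contributors),
        contributors=[h.name for h in contributors],
        consumers=[h.name for h in hosts],  # any cluster host may access the pool
    )
```

For instance, three hosts contributing 800, 800 and 400 GB would form a 2,000 GB pool that a fourth, flash-less host could still use.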

Once the aggregated pool of flash is established, the software provides the caching intelligence to each host so that the most active data moves to the flash tier and, in many cases, so that incoming writes go to the flash tier first (made practical by the aggregated cache's better availability). Unlike the other two cache types, a migration doesn't require rebuilding the cache analytics, because the cache is a shared pool. The target host simply picks up where the original host left off.

The downside to the aggregated approach is that all access to the flash tier involves moving data across the network. To keep performance consistent, most vendors of these products suggest using a dedicated network for the cache tier, and getting the maximum benefit requires a well-designed server-to-server network. The other two caching solutions typically service all cache requests from flash installed inside the server.

Product sampler: Flash caching software

Here are some examples of flash caching products that work at the file, block or aggregated level.

File-level caching

Block-level caching

- SanDisk FlashSoft

- Proximal Data AutoCache

- HGST Virident EnhanceIO SSD Cache Software

Aggregated caching

What's the best flash cache option?

The best server caching solution for your company depends on your environment. For example, if your server-to-server network is already built out and upgraded, then an aggregated cache solution has a lot of upside and may provide a more resilient way to cache data. But if your server-to-server network isn't able to handle this type of workload, the internal server options provided by a file- or block-level cache may be the best choice. They supply a significant performance increase without having to touch the network, a critical point since many storage administrators don't have authorization to upgrade or modify the server network.

About the author:

George Crump is president of Storage Switzerland, an IT analyst firm focused on storage and virtualization.