Ways to add enterprise NVMe drives to your infrastructure

Organizations can benefit from faster data transport between applications and storage through NVMe. When evaluating enterprise NVMe, weigh the management and cost considerations.

NVMe flash unlocks the potential of PCIe infrastructure: It enables latency-sensitive applications to tap compute and storage resources more efficiently.

NVMe helps organizations get more value from the money they spend on servers and storage. Its scalability also positions it to serve unified file, block and object storage.

There are several ways to introduce enterprise NVMe drives into a data center. The approach and type of drive an organization chooses should be governed by cost, capacity and performance needs.

Choose an approach based on your needs

Server-based NVMe flash

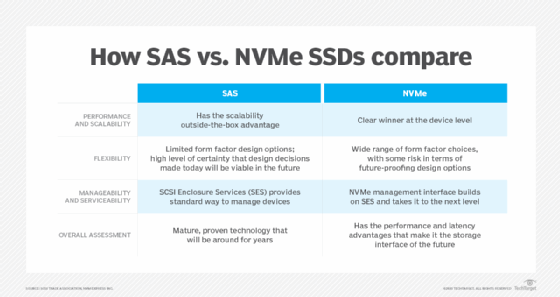

For organizations that rely on server-based flash farms to process data, one option is to phase in NVMe drives to replace traditional SSDs. This means swapping SAS- or SATA-connected SSDs for NVMe drives. In fact, NVMe drives were first deployed as direct-attached storage (DAS) in servers.

To extract full NVMe performance, storage servers might require corresponding boosts in compute power. Determine which NVMe form factor fits the servers: PCIe add-in cards need enough free PCIe slots. If the servers do not have enough slots and organizations aren't ready to buy new servers, NVMe U.2 devices could serve as local read/write cache or a boot device. Most servers have more U.2 slots than PCIe slots.

End-to-end NVMe flash

All-flash arrays with SAS or SATA SSDs remain dominant, but NVMe-based systems are going mainstream. Startups such as Pavilion Data Systems and Excelero, which was recently acquired by Nvidia, pioneered end-to-end NVMe flash arrays that use NVMe SSDs for back-end storage and support NVMe front-end host connectivity.

Leading storage vendors have added NVMe-based arrays to their all-flash lineups, often mixing flash and storage class memory with automated tiering and data placement. The list includes the following:

- Dell EMC PowerMax

- HPE Alletra

- Hitachi Virtual Storage Platform E Series

- IBM Elastic Storage System 3000

- NetApp All Flash Fabric-Attached Storage A-Series

- Pure Storage FlashArray//X

NVMe over Fabrics

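The end-to-end arrays listed above incorporate NVMe-oF implementations for shared storage. NVMe-oF is a messaging layer that carries NVMe commands and data over a network fabric, which lets hosts reach NVMe storage across longer distances than a local PCIe bus allows.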

Dave Raffo, senior analyst at Evaluator Group, based in Boulder, Colo., said native TCP is the easiest method for deploying NVMe-oF since TCP is native to the box.

"You don't need to make any hardware changes. You use the same Ethernet network for NVMe that you're already using," Raffo said.

Organizations could also run NVMe-oF over Fibre Channel, InfiniBand or Remote Direct Memory Access (RDMA)-enabled Ethernet. Unlike TCP, these transports carry additional costs when an existing network is used as an NVMe fabric. Fibre Channel and InfiniBand may require switching upgrades, while RDMA-enabled Ethernet requires additional network interface cards.

Consider the cost

Despite declining flash prices and available NAND supply -- prior to the prevailing supply chain crunch -- NVMe SSDs still cost more than SAS/SATA SSDs. This is partly a function of NVMe's newness. As more organizations make the switch, NVMe drives will achieve commodity pricing. That's a consideration to keep in mind for capacity planning.

"NVMe is being widely adopted by the hyperscale data centers. That's going to drive volume and drop the costs," said Tom Coughlin, president of Coughlin Associates, a digital storage consulting firm in Atascadero, Calif.

As NVMe adoption increases, the SAS and SATA interfaces will receive less development and eventually cost more on a per-gigabyte basis than NVMe, Coughlin said.

Keep up with capacity and performance needs

Taken cumulatively, NVMe running across multiple PCIe lanes is faster than SCSI-based SAS connectivity, but an individual SAS link could outperform a single PCIe lane in a lane-by-lane comparison. SAS drives use one or two links to connect to a host, whereas NVMe drives typically use four PCIe lanes and consume more host resources to transfer data.
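The lane-by-lane point can be checked with rough arithmetic. The sketch below assumes PCIe 3.0 lanes (8 GT/s, 128b/130b encoding) and 12 Gbps SAS links (8b/10b encoding). Those generations are an assumption for illustration, not something the analysts specified, and newer PCIe generations shift the per-lane result in NVMe's favor.

```python
# Approximate usable bandwidth per lane or link, in MB/s, after line encoding.
# Assumed generations (illustrative): PCIe 3.0 and 12 Gbps SAS.
PCIE3_LANE_MBPS = 8_000 * 128 / 130 / 8  # 8 GT/s, 128b/130b -> ~985 MB/s
SAS3_LINK_MBPS = 12_000 * 8 / 10 / 8     # 12 Gbps, 8b/10b   -> 1,200 MB/s


def aggregate_mbps(per_lane_mbps: float, lanes: int) -> float:
    """Total bandwidth when several lanes or links are used together."""
    return per_lane_mbps * lanes


nvme_x4 = aggregate_mbps(PCIE3_LANE_MBPS, 4)   # typical NVMe drive: four lanes
sas_dual = aggregate_mbps(SAS3_LINK_MBPS, 2)   # SAS drive using both links

print(f"Per lane:  PCIe 3.0 {PCIE3_LANE_MBPS:,.0f} MB/s vs. SAS {SAS3_LINK_MBPS:,.0f} MB/s")
print(f"Per drive: NVMe x4 {nvme_x4:,.0f} MB/s vs. SAS x2 {sas_dual:,.0f} MB/s")
```

Under these assumptions, a single SAS link edges out a single PCIe lane, but the four-lane NVMe drive comes out ahead in aggregate. These are interface ceilings, not measured drive throughput.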

NVMe architecture supports up to 65,535 (64K) queues, and each queue can handle 64K I/O commands. By contrast, SAS devices support a single queue of up to 254 commands, and SATA drives have a single queue with a depth of 32. NVMe drives enable applications to bypass the host bus adapter to communicate directly with storage via PCIe lanes. This parallelism enables NVMe storage to support a broader range of workloads simultaneously.
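To put the queue figures in perspective, here is a rough calculation of the maximum number of commands each interface could have outstanding at once. It assumes the commonly cited model in which SAS and SATA each expose a single command queue. The `QueueModel` class is a hypothetical helper for illustration, and the NVMe numbers are specification ceilings; real drives typically expose far fewer queues.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueModel:
    """Hypothetical helper: queue count and per-queue depth for an interface."""
    name: str
    queues: int
    depth: int

    @property
    def max_outstanding(self) -> int:
        # Upper bound on commands in flight: queues multiplied by depth.
        return self.queues * self.depth


MODELS = [
    QueueModel("SATA", queues=1, depth=32),
    QueueModel("SAS", queues=1, depth=254),
    QueueModel("NVMe", queues=65_535, depth=64 * 1024),  # spec ceilings
]

for model in MODELS:
    print(f"{model.name:<5} {model.max_outstanding:>13,} commands in flight")
```

The gap of several orders of magnitude is what lets many CPU cores submit I/O in parallel instead of contending for one shared queue.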

When going all-NVMe, use software-defined storage (SDS) products that scale compute and storage independently to avoid straining NVMe capacity. This helps ensure that compute and storage resources are sufficiently allocated to avoid CPU bottlenecks. Organizations that deploy AI, big data analytics, key-value stores, streaming media and cloud-based applications that require fast storage can greatly benefit from SDS' scalability.

Another way to incorporate NVMe is to dedicate a tier of NVMe drives for operational data alongside existing SSD storage. This approach requires a software tool that can intelligently manage data placement.
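As a minimal sketch of what such data placement logic might look like, the example below assigns the most frequently read volumes to a fixed-size NVMe tier and leaves the rest on existing SSDs. The `Volume` type, the `place_volumes` function, the volume names and the sample figures are all hypothetical. Commercial tiering tools weigh many more signals, such as write patterns, latency targets and policy.

```python
from typing import Dict, List, NamedTuple


class Volume(NamedTuple):
    """Hypothetical description of a storage volume."""
    name: str
    size_gb: int
    reads_per_day: int


def place_volumes(volumes: List[Volume], nvme_capacity_gb: int) -> Dict[str, str]:
    """Greedy placement: hottest data per GB goes to NVMe while space remains."""
    placement: Dict[str, str] = {}
    free_gb = nvme_capacity_gb
    by_heat = sorted(volumes, key=lambda v: v.reads_per_day / v.size_gb, reverse=True)
    for vol in by_heat:
        if vol.size_gb <= free_gb:
            placement[vol.name] = "nvme"
            free_gb -= vol.size_gb
        else:
            placement[vol.name] = "ssd"
    return placement


# Illustrative sample data, not measurements.
sample = [
    Volume("db-logs", size_gb=200, reads_per_day=90_000),
    Volume("analytics", size_gb=800, reads_per_day=120_000),
    Volume("vm-images", size_gb=500, reads_per_day=40_000),
    Volume("archive", size_gb=1_500, reads_per_day=3_000),
]
result = place_volumes(sample, nvme_capacity_gb=1_200)
print(result)
```

With this sample data, the two hottest volumes fit in the 1,200 GB NVMe tier and the other two stay on SAS/SATA SSDs.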

Organizations can also consider disaggregated hyper-converged infrastructure or composable systems of integrated hardware to introduce enterprise NVMe drives to their infrastructure. These systems present a financial commitment beyond the cost of upgrading servers and drives. When deciding if the investment is worth it in the long run, organizations should assess the applications they plan to serve.

"If you've got SAS or SATA and you're not lacking capacity, keep reusing it until it wears out," and move to NVMe during refresh, Coughlin said.