Use the cloud to enhance the functions of primary storage

Learn some of the best ways to leverage public cloud as a storage tier to complement primary storage and make data centers more efficient.

IT managers viewed cloud storage skeptically, or as a threat, when services such as Amazon Simple Storage Service (S3) and Elastic Block Store started appearing a little over a decade ago. The logic went like this: The cloud might serve archiving needs, but security, availability and performance concerns made it too risky a place for primary data.

Today, cloud storage's potential to enhance on-premises deployments is no longer in dispute. And while the industry has yet to resolve all the issues that make IT managers uncomfortable, cloud security and availability have made solid progress -- notwithstanding the operator error that took down Amazon S3 for a few hours in February.

Maturation has enabled the cloud to become a favorite target for secondary and tertiary data through a growing array of backup, disaster recovery and archiving services. And, increasingly, we're using cloud storage to enhance the functions of primary storage as a tier in the storage hierarchy.

Extending storage tiering to the cloud

Tiering provides a way to balance the storage performance, space and cost requirements of different applications. Data is assigned to different storage classes based on frequency of access and related factors, and then placed on the media that best matches the requirements for that class of storage.
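As a rough illustration of policy-based placement, the sketch below assigns data sets to tiers by access frequency and age. The thresholds, tier names and sample data sets are hypothetical, chosen only to show the idea; real products apply their own, usually richer, policies.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str
    reads_per_day: float     # observed access frequency
    days_since_access: int   # time since the data was last touched


def assign_tier(ds: DataSet) -> str:
    """Map a data set to a tier using hypothetical policy thresholds."""
    if ds.reads_per_day >= 100:
        return "flash"           # hot, performance-sensitive data
    if ds.days_since_access <= 30:
        return "capacity-disk"   # warm data that is still in use
    return "cloud"               # cold or inactive data


# Illustrative data sets, not taken from any real environment.
datasets = [
    DataSet("orders-db", reads_per_day=5000, days_since_access=0),
    DataSet("team-share", reads_per_day=12, days_since_access=3),
    DataSet("2014-logs", reads_per_day=0, days_since_access=400),
]

placement = {ds.name: assign_tier(ds) for ds in datasets}
print(placement)
```

An automated tiering engine would re-evaluate a policy like this on a schedule and migrate data whose tier has changed.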

Storage tiering technology has become highly automated, with data placement decisions based on a set of policies, enabling companies to create a hybrid storage architecture, which can span media in the data center and across one or more providers' clouds (see "Evolution of storage tiering technologies").

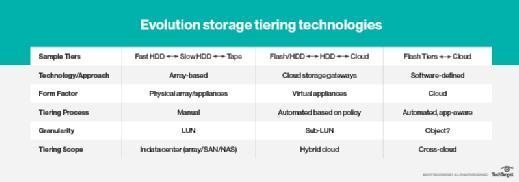

Evolution of storage tiering technologies

Storage tiering has evolved significantly since the early 2000s, when it operated primarily at the array level and defined tiers based on the performance and cost of the media. The idea behind tiering is to place the most frequently accessed data on the highest-performing tiers and move less frequently accessed data to more cost-effective, lower-performance storage. As designers soon learned, frequently accessed, more recent data usually belongs to business-critical, often performance-sensitive, applications, and less regularly accessed data does not. So SAN or array-based tiering might place frequently accessed, IOPS- or latency-sensitive data on higher-performance SAS drives and less frequently accessed user or application data on capacity SATA drives. Rarely accessed or older data might be migrated to an archive tier based on tape.

The advent of automated storage tiering changed everything, enabling dynamic data migration between tiers based on most recent access patterns and storage policies.

Today, tiering practice has changed dramatically, but the underlying concept remains the same. As the chart suggests, admins base one or more performance tiers on solid-state media (e.g., nonvolatile DIMMs, nonvolatile memory express flash and SSDs), while the cloud has replaced slow disk and tape in the capacity tier.

Tiering is now commonly done at the sub-LUN level, while gateways and nascent software-defined technologies offer a wider choice of tiering approaches. We don't know for certain what the future holds, but we imagine that data tiering will become ever more granular and intelligent in the years ahead.

Recent Taneja Group research suggested more than 60% of IT practitioners either already use or plan to use some form of storage tiering. Two-thirds, meanwhile, have extended storage tiers to the public cloud for at least some critical workloads. The cloud will likely play a larger role in tiering going forward.

Cloud tiering that benefits primary storage

Vendors have introduced products that move data out of primary storage as it becomes cold or inactive. Unlike traditional local storage tiering, the newest products permit tiering to the public cloud for greater scalability and more cost-effective use of storage overall.

Most products are automated and allow you to set policies that govern data migration based on frequency of access, age or other factors. All complement and enhance the functions of primary storage.

Cloud storage delivers an array of benefits

Using cloud storage to enhance one or more primary storage use cases can deliver significant benefits:

- scalability and resilience of cloud storage while maintaining data control;

- predictable application performance -- after testing and validation;

- ease of integration between on-premises (block and file protocols) and cloud (object and REST protocols) storage -- no training or new staff investment required;

- cloud provider choice reduces lock-in potential and provides freedom to choose what works best for your environment (e.g., optimal mix of data management capabilities and pricing model);

- free up local primary storage to dynamically reallocate on-premises capacity and performance resources to most critical workloads;

- more cost-effective and often automated way to store older, less active data (e.g., to cloud archival storage services like Amazon Glacier);

- simple, efficient data protection through cloud-enabled snapshots; and

- rapid recovery of workloads with cloud-based DR (disaster recovery as a service).

Products that facilitate the cloud as a separate primary block or file storage tier fall into two major categories: cloud storage gateways and software-defined storage-based offerings.

Cloud storage gateways

Cloud storage gateways have come a long way since their 2010 introduction. They've also gone through several naming iterations and may be referred to as cloud controllers, cloud-integrated storage, cloud-caching appliances or other terms.

Originally focused on low-cost cloud backup or archive, storage gateways now address several different primary and secondary storage use cases, including file sync and sharing, collaboration, cloud-based disaster recovery (DR) for recovery in the cloud or on premises, and in-cloud data analytics, in addition to front ends for scale-out NAS in the cloud.

Cloud storage gateways appear as traditional arrays to workloads, but function as high-performance local caches or tiers in front of cloud capacity on the back end, which is generally highly scalable object storage. They automatically translate file or block protocols into object protocols. That allows existing apps running on premises to benefit from cloud storage scalability and resiliency without the burden and complexity of integrating legacy storage into the cloud. Gateways come as physical or virtual appliances, and those with built-in storage can replace traditional block or file storage systems.

Cloud storage gateways that handle primary storage commonly include flash-based caching and, in a few cases, primary storage tiers. Though vendor caching algorithms vary, most dynamically store frequently accessed data in flash cache on an ongoing basis, ensuring critical on-premises apps meet performance objectives while translating file or block protocols into object storage in the background. Local pinning prevents the flushing of critical data from the local cache or storage tier.
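To make the caching and pinning behavior concrete, here is a minimal sketch of a least-recently-used (LRU) cache with pinning, sitting in front of a dictionary that stands in for cloud object storage. The `GatewayCache` class and its methods are hypothetical, and the write-through behavior is a simplification. Vendor caching algorithms differ, and many gateways push data to the cloud asynchronously in the background.

```python
from collections import OrderedDict


class GatewayCache:
    """Hypothetical LRU cache with pinning in front of an object store."""

    def __init__(self, capacity, backend):
        self.capacity = capacity    # max number of cached objects
        self.backend = backend      # dict standing in for cloud object storage
        self.cache = OrderedDict()  # ordered from least to most recently used
        self.pinned = set()         # keys that must never be flushed

    def pin(self, key):
        """Mark a key as pinned and load it into the local cache."""
        self.pinned.add(key)
        self.read(key)

    def read(self, key):
        if key in self.cache:           # cache hit: refresh recency
            self.cache.move_to_end(key)
            return self.cache[key]
        value = self.backend[key]       # cache miss: fetch from the cloud
        self.cache[key] = value
        self._evict()
        return value

    def write(self, key, value):
        self.backend[key] = value       # write-through (a simplification)
        self.cache[key] = value
        self.cache.move_to_end(key)
        self._evict()

    def _evict(self):
        # Flush least recently used, unpinned entries while over capacity.
        while len(self.cache) > self.capacity:
            victim = next((k for k in self.cache if k not in self.pinned), None)
            if victim is None:
                break                   # everything left is pinned
            del self.cache[victim]


cloud = {"a": b"A", "b": b"B", "c": b"C"}
gw = GatewayCache(capacity=2, backend=cloud)
gw.pin("a")      # critical data stays local
gw.read("b")
gw.read("c")     # cache is over capacity: "b" is flushed, pinned "a" is kept
print(list(gw.cache))
```

Pinning trades cache capacity for predictability: pinned data is always served locally, but it shrinks the space left for everything else.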

Which cloud storage gateway to choose?

Look for cloud storage gateways that let you resize the cache dynamically so it's better tuned to the requirements of specific use cases. A cache supporting primary storage functions might be sized to hold 100% of the data stored in the cloud, while a cache supporting archive storage might hold only a small fraction of it. Some gateways also let you size and assign individual caches to different data sets to support varying performance needs and use cases.

Gateways dedicated to archiving cold or inactive data also benefit primary storage by freeing up on-premises performance and capacity resources so they're used more productively to benefit primary workloads.

Gateway products should also include data reduction, such as deduplication or compression, to minimize the impact on network performance and to reduce the capacity and cost of data stored in the cloud. Look for products that let you apply deduplication and compression per application, since not all workloads will benefit equally. They should also provide encryption for data at rest and in motion, and support space-efficient snapshots and cloning locally and in the cloud to ensure data is protected. Also ask about support for directory services such as Active Directory or Lightweight Directory Access Protocol (LDAP) to verify full integration into your current environment.
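The following sketch shows, in simplified form, how deduplication and compression can cut the amount of data sent to and stored in the cloud. It assumes fixed-size chunks identified by SHA-256 hash, with a dictionary standing in for the cloud object store. The chunk size and function names are illustrative, and commercial products typically use more sophisticated schemes.

```python
import hashlib
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


def dedupe_and_compress(data: bytes, store: dict) -> list:
    """Upload each unique chunk once, compressed; return the list of chunk hashes."""
    recipe = []
    for offset in range(0, len(data), CHUNK_SIZE):
        chunk = data[offset:offset + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:              # only new chunks cross the network
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return recipe


def restore(recipe: list, store: dict) -> bytes:
    """Rebuild the original data from its chunk hashes."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)


store = {}                                   # stands in for cloud object storage
data = b"A" * 8192 + b"B" * 4096             # two identical chunks plus one distinct
recipe = dedupe_and_compress(data, store)
stored_bytes = sum(len(v) for v in store.values())

print(len(recipe), "chunks referenced,", len(store), "chunks stored")
print(len(data), "bytes reduced to", stored_bytes, "bytes in the cloud")
```

Highly repetitive data like this sample reduces dramatically. Already-compressed or encrypted data reduces far less, which is why per-application control matters.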

Check out cloud storage gateway offerings from vendors such as Microsoft (Azure StorSimple) and Dell EMC (CloudArray) to support a variety of primary storage use cases, such as collaboration, databases or virtual machines. If you're looking for a more cost-effective scale-out NAS, look at offerings from Panzura and Nasuni. And to consolidate remote office/branch office infrastructure, consider a gateway appliance from Ctera Networks, which can serve as a front-line array to replace local primary storage in ROBO deployments.

Words of caution: Qualify cloud storage gateways for specific functions of primary storage upfront to ensure they meet latency and IOPS objectives for the applications you have in mind. Also, choose a storage gateway that can access multiple cloud providers, both to avoid potential lock-in and to let you choose the provider that best meets the needs of particular workloads.
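One way to structure that qualification is to compare measured results from a proof-of-concept run against explicit targets. The sketch below checks 99th-percentile latency and IOPS against objectives; the sample latencies, test duration and targets are made-up values for illustration, and the function names are hypothetical.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(samples)
    rank = max(1, -(-pct * len(ordered) // 100))  # ceiling division
    return ordered[rank - 1]


def meets_objectives(latencies_ms, duration_s, p99_target_ms, iops_target):
    """Compare measured p99 latency and IOPS against the stated objectives."""
    p99 = percentile(latencies_ms, 99)
    iops = len(latencies_ms) / duration_s
    return {
        "p99_ms": p99,
        "iops": iops,
        "pass": p99 <= p99_target_ms and iops >= iops_target,
    }


# Made-up measurements: 100 I/Os completed in half a second.
samples = [1.0] * 98 + [4.0, 25.0]
result = meets_objectives(samples, duration_s=0.5, p99_target_ms=5.0, iops_target=150)
print(result)
```

Checking a high percentile rather than the average matters here, because occasional cache misses that go to the cloud can hide behind a healthy-looking mean.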

Software-defined primary storage

Cloud storage gateways may allow moving storage to the cloud, but they always assume applications remain running on premises. A new wave of software-defined storage (SDS) products promises to take things a step further by seamlessly transferring primary storage workloads between the data center and the cloud. While these are in early stages of adoption, we at Taneja Group believe they have merit, given the desire to more fully take advantage of the scalability, resilience and agility of the public cloud.

Vendors take two architectural approaches to make this happen: (a) using a distributed, platform-agnostic storage plane to create a single logical pool of storage that spans on premises and cloud and (b) enabling storage volumes to run as a service alongside public cloud compute.

Of course, if you're using object storage in the data center to support primary workloads, and it happens to be compatible with one or more public cloud storage services, such as Amazon S3, then you likely already have an easy way to migrate primary storage workloads into and out of the cloud. Achieving this for primary block and file storage is much tougher, but we expect that'll begin to change by next year.

Because these emerging technologies primarily focus on nonproduction use cases, such as cloud-based DR or data analytics, and are still relatively immature, evaluate them thoroughly -- on paper and with hands-on testing -- to determine if and how they can enhance your primary storage workloads.

Look before you leap

Cloud storage that complements or enhances primary storage should meet your needs in each of the following areas:

Accessibility: How broadly accessible must cloud-resident data be, given your primary storage use cases? For example, file sync and sharing and collaboration require access from almost anywhere, whereas analytics workloads may only require accessibility from the data center.

Security: Most cloud gateways and newer software-based hybrid storage products and services encrypt data at rest and in flight, but underlying technologies can vary. Also, verify how you'll manage and control keys -- whether they're generated by you or the vendor -- and ensure they're adequately protected.

Avoid lock-in: Lock-in is still a real possibility with single-provider platforms such as Amazon Web Services Storage Gateway. Demand support for multiple public clouds and cost-effective data migration out of the cloud should you decide to switch providers.

Data center to cloud network capabilities: Evaluate network availability and performance requirements, and make sure your network provides the redundancy, connectivity and bandwidth necessary. Check whether the vendor offers deduplication or compression to reduce bandwidth usage and costs.

Application performance: Scope out latency, IOPS and other performance requirements based on the use cases and workloads you plan to run. Once you've qualified these against an offering's specifications, insist on hands-on testing or proof-of-concept exercises to validate performance meets expectations.

Costs: Given its extreme scalability and ease of use, cloud storage can become habit-forming, and the costs of storing and accessing data there can grow quickly. Estimate monthly storage costs for potential public cloud providers ahead of time, using their cost calculators where available, and review monthly bills to ensure estimates are in range. In addition, look for tools that help allocate and manage costs.
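For the cost check in particular, a back-of-the-envelope model can help before you turn to a provider's own calculator. The sketch below estimates a monthly bill from stored capacity, data egress and request volume, then tests whether an actual bill falls within a tolerance of the estimate. The rates are placeholders, not any provider's actual pricing, and the function names and 10% tolerance are assumptions.

```python
def monthly_cost(stored_gb, egress_gb, requests, rates):
    """Estimate a monthly bill from capacity, egress and request volume."""
    storage = stored_gb * rates["storage_per_gb"]
    egress = egress_gb * rates["egress_per_gb"]
    request_fees = requests / 1000 * rates["per_1000_requests"]
    return round(storage + egress + request_fees, 2)


def within_range(actual, estimate, tolerance=0.10):
    """True if the actual bill is within the given fraction of the estimate."""
    return abs(actual - estimate) <= tolerance * estimate


# Placeholder rates in dollars; substitute your provider's published prices.
rates = {"storage_per_gb": 0.02, "egress_per_gb": 0.09, "per_1000_requests": 0.005}

estimate = monthly_cost(stored_gb=50_000, egress_gb=2_000,
                        requests=10_000_000, rates=rates)
print(estimate)
print(within_range(1300.00, estimate))  # a bill close to the estimate
print(within_range(1500.00, estimate))  # a bill worth investigating
```

Note that egress and request charges depend on access patterns, so data that turns out to be warmer than expected can push a bill well past a capacity-only estimate.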

Functions of primary storage ready for cloud?

Until recently, cloud storage was largely the domain of developers, who benefited from ease of use and pay-as-you-go accessibility of object storage services. But the advent of cloud storage gateways and some emerging hybrid cloud software technologies has changed that, enabling storage admins to make productive use of cloud storage for primary workloads.

If you believe that at least some of your tier-two or tier-one workloads could benefit from the scalability, resilience and broad accessibility public cloud offers, take a closer look at the tiering and other storage products and services outlined here. They may prove a fast and easy onramp to the cloud for one or more of your key use cases.
