Track these 4 emerging storage technologies in 2021

As they seek to increase the efficiency and performance of data storage systems in 2021, administrators should keep these four emerging technologies in mind.

There were significant changes within the data storage ecosystem throughout 2020. And, in 2021, that trend is set to continue.

In 2020, storage admins saw advancements and updates related to storage class memory (SCM), 3D quad-level cell (QLC) drives, cloud storage, Kubernetes persistent storage and machine learning.

This year, several emerging storage technologies will mature and make their way into the enterprise:

- PCIe Gen 4 and Gen 5

- Compute Express Link (CXL) 2.0

- Switchless interconnect

- Data processing units (DPUs)

While some of these technologies might not seem cutting-edge, they will have a profound effect on storage performance in 2021. Most will likely appear in servers from vendors such as Dell Technologies, HPE, Cisco and Supermicro before they appear in storage systems. Software-defined storage (SDS) is likely the first storage type to take advantage of them.

PCIe Gen 4 and Gen 5

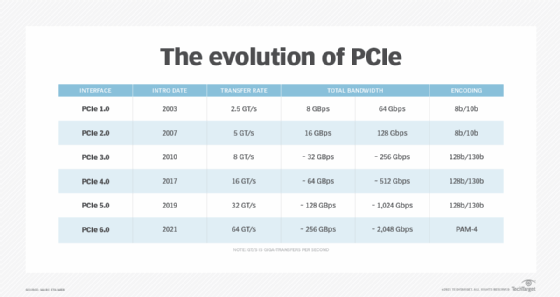

PCIe Gen 4 delivers twice the bandwidth per lane of Gen 3, and Gen 5 delivers twice that of Gen 4. This is crucial to eliminate a major external and internal interconnect bandwidth issue. A Gen 3 x16 PCIe slot provides approximately 32 GBps of total bidirectional throughput, or close to 256 Gbps (see the chart). That's not going to cut it for multiple 200 Gbps network interface cards (NICs) or adapters, let alone 400 Gbps interconnect; the slot becomes a performance chokepoint.

The good news is Gen 4 delivers approximately 64 GBps total throughput, or roughly 512 Gbps, which is more than sufficient for multiple 200 Gbps ports. Gen 5 doubles that again to nearly 128 GBps, or approximately 1,024 Gbps, solving the issue of multiple 400 Gbps interconnects.
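The throughput figures above follow from the per-lane transfer rate of each generation. The following is a minimal sketch of that arithmetic, assuming a 16-lane slot, the 128b/130b line encoding used by Gen 3 through Gen 5, and totals counted across both directions; the function and constant names are illustrative only.

```python
# Raw per-lane transfer rates in gigatransfers per second (GT/s).
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen 3, 4 and 5 all use 128b/130b encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def slot_throughput_gbytes(gen, lanes=16, bidirectional=True):
    """Approximate usable slot throughput in GBps, before protocol overhead."""
    per_direction = GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes / 8
    return per_direction * (2 if bidirectional else 1)


if __name__ == "__main__":
    for gen in sorted(GT_PER_LANE):
        total = slot_throughput_gbytes(gen)
        print(f"Gen {gen} x16: ~{total:.0f} GBps total, ~{total * 8:.0f} Gbps")
```

Each direction carries half of the total, so per-direction headroom for a given NIC is correspondingly lower than the bidirectional figure.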

Intel has said its processors will support both Gen 4 and Gen 5 in 2021. AMD supports Gen 4 but has said nothing about Gen 5. Because most storage controllers are based on Intel and AMD processors, expect storage systems to start including PCIe Gen 4 and Gen 5 in 2021. By 2022, Gen 5 support should be the standard.

CXL 2.0

PCIe Gen 5 support is important because of CXL 2.0, the latest version of the CXL open standard interface. CXL is a CPU-to-device interconnect protocol on PCIe that targets high-performance workloads. It specifically takes advantage of the PCIe Gen 5 specification, which enables alternate protocols to use the PCIe physical layer.

Once a CXL-based accelerator is plugged into a PCIe x16 (16-lane) slot, it negotiates with the host processor port at PCIe 5.0 transfer rates of 32 gigatransfers per second (GT/s). When both sides support CXL 2.0, they use CXL transaction protocols, which are more efficient and have lower latencies. If either side does not support CXL 2.0, they operate as standard PCIe devices. Transfer speeds are up to 64 GBps in each direction over a 16-lane link.
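The fallback rule described above can be sketched as a simple decision function. This is only an illustration of the rule, not of the real mechanism: actual negotiation happens in hardware when the link comes up, and the `Port` type and `negotiate_link_mode` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    """Hypothetical description of one end of a PCIe Gen 5 link."""
    name: str
    supports_cxl: bool


def negotiate_link_mode(host: Port, device: Port) -> str:
    """Use CXL only when both ends support it; otherwise stay on plain PCIe."""
    return "CXL" if host.supports_cxl and device.supports_cxl else "PCIe"


if __name__ == "__main__":
    cpu = Port("host-root-port", supports_cxl=True)
    accelerator = Port("cxl-accelerator", supports_cxl=True)
    legacy_nic = Port("pcie-nic", supports_cxl=False)
    print(negotiate_link_mode(cpu, accelerator))  # CXL
    print(negotiate_link_mode(cpu, legacy_nic))   # PCIe
```

Because the same slot serves both cases, a CXL-capable host can still take ordinary PCIe cards without any change.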

These details have a potentially huge effect on storage system and SDS performance. CXL materially improves performance over PCIe through three transaction protocols: CXL.io, CXL.cache and CXL.mem. CXL.io is the main protocol and is nearly indistinguishable from PCI Express 5.0. It is used for device discovery, configuration, register access, interrupts, virtualization and bulk direct memory access (DMA). CXL.cache and CXL.mem are optional. CXL.cache lets accelerators coherently cache system memory. CXL.mem gives host processors direct access to accelerator-attached memory, so the CPU, GPU or TPU can view that memory as additional address space. This eliminates a lot of inefficiency and latency.
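One way to keep the three protocols straight is to treat them as a small capability table. The sketch below encodes only what the text states (CXL.io is the main, always-present protocol; the cache and memory protocols are optional) and uses the specification's short name `CXL.mem` for the memory protocol. The table layout and the `check_device` helper are hypothetical.

```python
# Roles as summarized in the text; only CXL.io is mandatory.
CXL_PROTOCOLS = {
    "CXL.io": {
        "required": True,
        "role": "discovery, configuration, register access, interrupts, bulk DMA",
    },
    "CXL.cache": {
        "required": False,
        "role": "device coherently caches host (system) memory",
    },
    "CXL.mem": {
        "required": False,
        "role": "host directly accesses device-attached memory",
    },
}


def check_device(protocols):
    """Return a list of problems with a device's advertised protocol set."""
    advertised = set(protocols)
    known = set(CXL_PROTOCOLS)
    problems = [f"unknown protocol: {p}" for p in sorted(advertised - known)]
    for name, info in CXL_PROTOCOLS.items():
        if info["required"] and name not in advertised:
            problems.append(f"missing required protocol: {name}")
    return problems


if __name__ == "__main__":
    print(check_device(["CXL.io", "CXL.mem"]))  # no problems
    print(check_device(["CXL.cache"]))          # CXL.io is missing
```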

Although CXL is aimed primarily at heterogeneous computing, there is an intriguing storage play with NVMe SSDs, NVMe SCM, scale-out storage systems and SDS. Coherency between the CPU memory space and memory in attached devices eliminates back-and-forth DMA operations, because each side can read and write directly into the other's memory.

Major vendors, including Intel, AMD and Nvidia, support CXL 2.0, which speaks to the market acceptance of this emerging storage technology. Expect the first CXL 2.0 systems and drives to appear sometime in the second half of 2021.

Switchless interconnect

Switchless interconnect solves a major storage problem. As scaling requirements escalate, switches become less efficient, add more latency and increase costs.

Switchless interconnect does its own routing. It limits hops and latency, and reduces requirements for power, cooling, rack space, cables and transceivers. It can use a dragonfly configuration instead of a fat tree. This simplifies the large configurations that high-performance computing environments demand. The vendor that delivers switchless interconnect has spent years developing it. Although the vendor is still in stealth, expect to see this technology in storage systems and SDS by the second half of 2021.
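To illustrate why a dragonfly layout keeps hop counts low, here is a minimal sketch of minimal-path routing in a textbook dragonfly: routers within a group are fully connected, and every pair of groups shares one global link. The wiring rule (which router in a group owns the link to a given remote group) is a made-up assumption for the example and says nothing about the unnamed vendor's actual design.

```python
from itertools import product


def dragonfly_hops(src, dst, routers_per_group):
    """Count link hops between two routers given as (group, router) pairs."""
    src_group, src_router = src
    dst_group, dst_router = dst
    if src_group == dst_group:
        # Routers in the same group are directly connected to each other.
        return 0 if src_router == dst_router else 1
    # Hypothetical wiring: the link to group g hangs off router g % routers_per_group.
    exit_router = dst_group % routers_per_group
    entry_router = src_group % routers_per_group
    hops = 1  # the global link between the two groups
    if src_router != exit_router:
        hops += 1  # local hop to reach the router that owns the global link
    if dst_router != entry_router:
        hops += 1  # local hop from the arrival router to the destination
    return hops


def worst_case_hops(groups, routers_per_group):
    """Largest minimal-path hop count over every pair of routers."""
    nodes = list(product(range(groups), range(routers_per_group)))
    return max(dragonfly_hops(a, b, routers_per_group) for a in nodes for b in nodes)
```

Under these assumptions, `worst_case_hops(9, 4)` evaluates to 3 regardless of how many groups are added, whereas the number of tiers in a fat tree, and therefore its worst-case path, grows as the fabric scales.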

DPUs

DPUs are another emerging storage technology to track in 2021. There are two currently in the market: one from Nvidia/Mellanox and one from Fungible.

The Nvidia/Mellanox DPU is focused on networking offload. It offloads the majority of the most common high-performance networking protocols, such as RDMA, NVMe/JBOF, Storage Spaces Direct (S2D with RDMA), Lustre RDMA, NFS RDMA, NVMe-oF (RDMA over Converged Ethernet, InfiniBand, TCP/IP), Open vSwitch kernel datapath offload, network packet shaping, Nvidia GPUDirect and User Datagram Protocol offload.

The Nvidia/Mellanox DPU aims to accelerate communications between initiators and targets. The Nvidia/Mellanox ConnectX NIC/adapter stands out in the market for its high performance, especially in storage. It is likely to stay that way in 2021, although Fungible is a tough competitor.

There are two different Fungible DPUs. One is an initiator that runs in servers. The other is specifically a storage target with up to four times the bandwidth, at 800 Gbps. The Fungible DPU is designed to offload all infrastructure services from x86 processors. It works on both PCIe Gen 3 and Gen 4. It has built-in encryption/decryption, compression/decompression and programmability. It supports NVMe-oF, NVMe over TCP and, uniquely, Fungible TrueFabric. TrueFabric further reduces latency and guarantees no more than three hops, no matter how many initiators, switches and targets there are. TrueFabric requires both Fungible initiators and Fungible storage targets, although the latter are also compatible with Nvidia/Mellanox initiators.

Expect to see other storage systems in the market based on the Fungible DPU in the second half of 2021.