Storage

- Feature: Is an all-flash data center worth it?

- Feature: Scalability drives SAN market growth

- Feature: Building an IT resiliency plan into an always-on world

- Feature: Use the cloud to enhance the functions of primary storage

- Opinion: Symbolic IO IRIS a breakthrough in server, storage architecture

- Opinion: Data center storage architecture gets smarter with AI

Scalability drives SAN market growth

Better performing, more scalable and capacious storage area networks are required for ever-growing amounts of unstructured data, demanding applications and big data analytics.

We've seen how NAS arrays are holding their own against the combined onslaught of cloud storage and scale-out data centers. Similarly, according to our survey results, storage area networks continue to dominate, despite much-touted unstructured data storage and management products encroaching on their space in the market.

Outside forecasts point the same way. In a January 2017 report, industry intelligence and consulting firm Future Market Insights predicted the global SAN market would grow in value modestly but steadily, from about $16.08 billion in 2016 to more than $22 billion by 2026.
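To put "modestly but steadily" into numbers, the two figures above imply a compound annual growth rate (CAGR) of roughly 3.2%. This is a back-of-the-envelope sketch based only on the values quoted here; the report's own growth rate isn't cited in this article, and because the 2026 figure is "more than $22 billion," the result is best read as a floor.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that grows
    start_value into end_value over the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of dollars (2016 -> 2026).
san_market_2016 = 16.08
san_market_2026 = 22.0  # "more than $22 billion", so the rate below is a lower bound

rate = cagr(san_market_2016, san_market_2026, 2026 - 2016)
print(f"Implied SAN market CAGR: at least {rate:.1%}")  # prints "... at least 3.2%"
```

At that pace, the market grows by about 37% in total over the decade, which is why the forecast reads as steady rather than explosive.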

Tried and true

Nearly 70% of respondents in our surveys have installed SANs to handle their primary storage needs, more than twice the number using file-based network-attached storage devices. Forget about unified arrays, converged infrastructure and hyper-converged storage systems: all three barely break double digits. Among enterprises that have bought primary storage within the last three months, SAN purchases outnumber NAS buys two to one.

Still, unified arrays and converged and hyper-converged storage products appear poised for growth over the next six months to a year. The share of respondents planning SAN purchases during that period rises slightly to 77%, a little above today's installed rate, while planned NAS purchases hold essentially steady at 38%. But our surveys indicate a substantial uptick in planned unified array, converged and hyper-converged infrastructure storage buys, at 14%, 17% and 21%, respectively.

Why new SANs now?

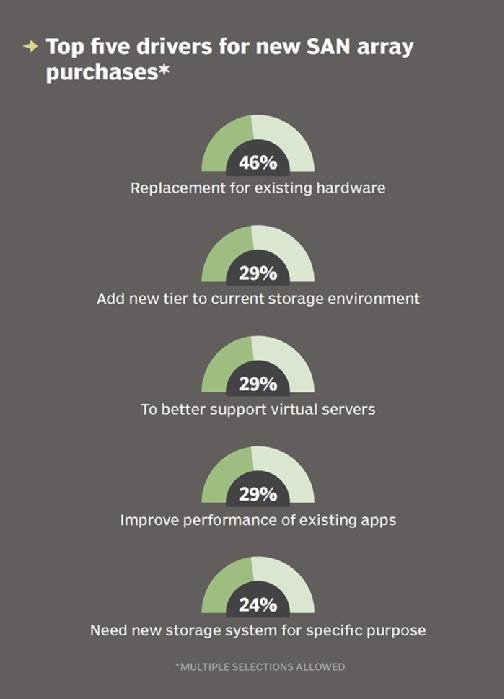

The top reason for buying a new SAN is to replace existing hardware; nearly half of the respondents in our survey listed it. Next come adding a new storage tier, better supporting virtual servers and improving the performance of existing apps.

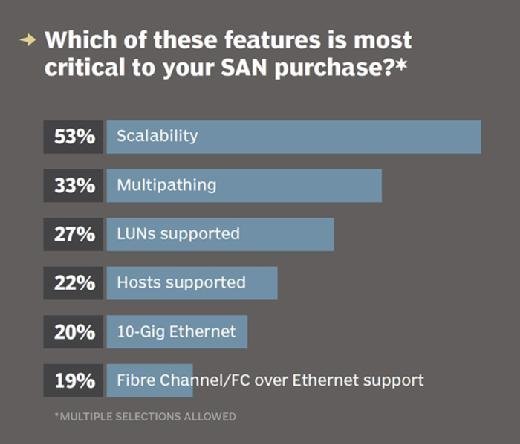

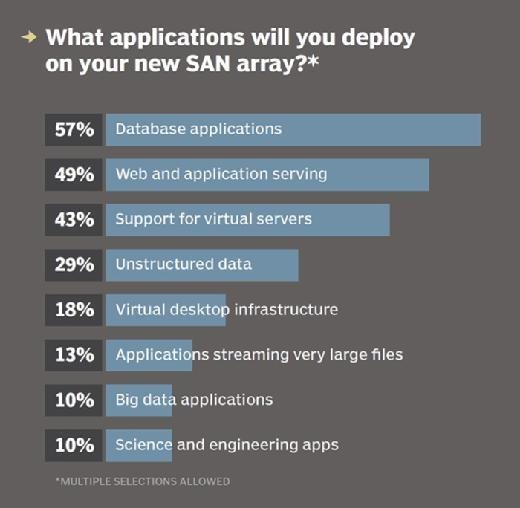

The most important features respondents look for when entering the SAN market today include scalability, at 53%, followed fairly distantly by multipathing, LUN and host support, 10 Gigabit Ethernet, and Fibre Channel or FC over Ethernet support. For the most part, these SANs will host databases (59%) and virtual servers (50%) and handle web and application serving (42%). Other uses include unstructured data such as file data and user shares (19%), big data apps (18%), virtual desktop infrastructure (15%), and streaming large files for purposes such as media production and science and engineering (both at 10%).

Certainly, hyper-converged infrastructure is much simpler and less expensive than SANs. But, in most cases, when you need to add storage capacity to a hyper-converged platform, you must also add compute and virtualization resources you don't need. That limitation explains the recent advent of the so-called disaggregation phase in the evolution of hyper-convergence.

Disaggregation, in short, isn't about going back to the age of silos of functionality. Rather, it tilts nodes in one direction or the other (say, more toward compute than storage, or vice versa). The idea is to make it easier to add only the resources you want or need in a hyper-converged infrastructure, a capability that hyper-converged products can't offer now except in very specific and limited situations, and then only from a few vendors.
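The stranded-resource problem with traditional hyper-converged scaling comes down to simple arithmetic. The sketch below uses hypothetical node sizes and a hypothetical workload, not any vendor's specifications: because every node adds storage and compute together, whichever requirement is larger sets the node count, and the surplus of the other resource sits idle. A node tilted toward storage, as disaggregation allows, strands far less compute.

```python
import math


def hci_nodes_needed(need_tb: int, need_cores: int,
                     node_tb: int, node_cores: int) -> tuple[int, int, int]:
    """Return (nodes, idle_tb, idle_cores) when capacity can only be added
    in whole nodes that each bundle a fixed amount of storage and compute."""
    # The scarcer resource dictates how many whole nodes must be bought.
    nodes = max(math.ceil(need_tb / node_tb), math.ceil(need_cores / node_cores))
    idle_tb = nodes * node_tb - need_tb
    idle_cores = nodes * node_cores - need_cores
    return nodes, idle_tb, idle_cores


# Hypothetical workload: storage-hungry, light on compute.
NEED_TB, NEED_CORES = 200, 64

# Hypothetical balanced HCI node vs. a hypothetical storage-tilted node.
balanced = hci_nodes_needed(NEED_TB, NEED_CORES, node_tb=20, node_cores=32)
storage_tilted = hci_nodes_needed(NEED_TB, NEED_CORES, node_tb=40, node_cores=16)

print("balanced nodes:", balanced)              # (10, 0, 256)
print("storage-tilted nodes:", storage_tilted)  # (5, 0, 16)
```

With the balanced node, meeting the 200 TB requirement means buying 320 cores to use 64; the storage-tilted node meets the same need with five nodes and only 16 idle cores.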

And let's not forget the cloud. What's not to like about an elastic, pay-as-you-go model that can shift infrastructure costs to a third party? The cloud may not be the best place to keep critical data, however. "The main public clouds do not offer storage that is functionally equivalent to storage area networks," Christos Karamanolis, CTO of the storage and availability business unit at VMware, told TechTarget earlier this year. He expects that to change once products like VMware vSAN and Microsoft Storage Spaces provide customers "the ability to use the same operational model no matter where their IT infrastructure lives," whether on premises, in the cloud or both.

Until these methods are perfected and become widely available, I doubt we'll see huge changes in what's driving many folks to remain in the SAN camp.

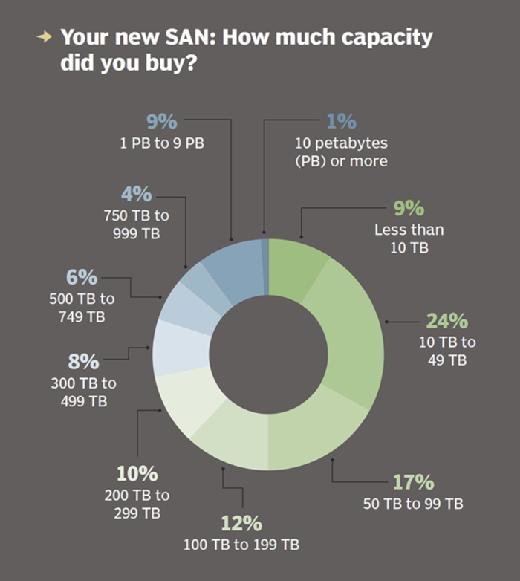

While expandability is an important feature of SANs, our surveys show businesses buying a large amount of storage capacity up front: an average of nearly 700 TB. That average is skewed upward, however. The majority (51%) reported recently buying a SAN in the 10 TB to 199 TB range, and the mean is pulled up by the 9% reporting new SAN purchases of between 1 PB and 9 PB.

Expect the average capacity number to steadily increase as more businesses learn to better take advantage of all the data they are ingesting and creating using big data analytics. Another driver of data growth is increasing adoption of the internet of things, where anything and everything becomes a collector and disseminator of data.

For maximum impact, and in some cases any impact at all, much of this data must be analyzed as close to real time as possible if it is to help protect your IT infrastructure or benefit the business. Security is a good example of the former: malware must be caught before it can do maximum damage. Sure, a lot of big data analysis can take place after the fact in secondary storage, but to gain the most immediate benefits, data has to be analyzed in real or near-real time.

SANs remain the primary storage platform of choice for these purposes. While popular emerging alternatives are catching up with SANs in some areas, they're not there yet.

Next Steps

Primary storage ruled by SAN arrays

2016 Products of the Year in storage and SAN management

HPE buys Nimble and gets another SAN platform

Dig Deeper on Primary storage devices

Five ways the hyper-converged infrastructure market is changing

By: Stephen Pritchard

Five reasons to look at hyper-converged infrastructure

By: Stephen Pritchard

HCI vs. SAN: How to choose between them

By: Stacey Peterson

Disaggregated hyper-converged infrastructure vs. traditional HCI

By: Brien Posey