idspopd - Fotolia

Hadoop Distributed File System helps tackle big data

Hadoop Distributed File System storage systems are being enlisted to tackle big data thanks to vendors like EMC, Hitachi, IBM and NetApp, and Apache.

It's common for storage discussions to begin with a reference to data growth. The implied assumption is that companies will want to capture and store all the data they can for a growing list of analytics applications. Today, because the default policy for retaining stored data within many enterprises is "save everything forever," many organizations are regularly accumulating multiple petabytes of data.

Despite what you might think about the commoditization of storage, there is a cost to storing all of this data. So why do it? Because executives today realize that data has intrinsic value due to advances in data analytics. In fact, that data can be monetized. There's also an understanding at the executive level that the value of owning data is increasing, while the value of owning IT infrastructure is decreasing.

Hadoop Distributed File System (HDFS) is fast becoming the go-to tool enterprise storage users are adopting to tackle the big data problem. Here's a closer look as to how it became the primary option.

Where to put all that data?

Traditional enterprise storage platforms -- disk arrays and tape siloes -- aren't up to the task of storing all of the data. Data center arrays are too costly for the data volumes envisioned, and tape, while appropriate for large volumes at low cost, elongates data retrieval. The repository sought by enterprises today is often called the big data lake, and the most common instantiation of these repositories is Hadoop.

Originated at the Internet data centers of Google and Yahoo, Hadoop was designed to deliver high-analytic performance coupled with large-scale storage at low cost. There is a chasm between large Internet data centers and enterprise data centers that's defined by differences in management style, spending priorities, compliance and risk-avoidance profiles, however. As a result, HDFS was not originally designed for long-term data persistence. The assumption was data would be loaded into a distributed cluster for MapReduce batch processing jobs and then unloaded -- a process that would be repeated for successive jobs.

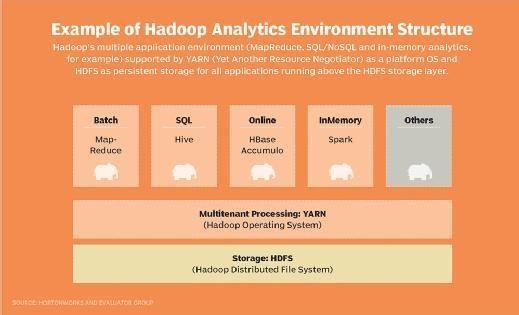

Nowadays, enterprises not only want to run successive MapReduce jobs, they want to build multiple applications that, for example, converge analytics with the data generated by online transaction processing (OLTP) on top of the Hadoop Distributed File System. Common storage for multiple types of analytics users is needed as well (See Figure 1: Hadoop's multiple application environment supported by YARN and HDFS). Some of the more popular applications include Apache HBase for OLTP and Apache Spark and Storm for data streaming, as well as real-time analytics. To do this, data needs to be persisted, protected and secured for multiple user groups and for long periods of time.

Filling the Hadoop storage gap

Current versions of Hadoop Distributed File System have storage management features and functions consistent with persisting data, and the Apache open-source community works continuously to improve HDFS to make it more compatible with enterprise production data centers. Some important features are still missing, however. So the challenge for administrators is to determine whether or not the HDFS storage layer can in fact serve as an acceptable data-preservation foundation for the Hadoop analytics platform and its growing list of applications and users. With multiple Apache community projects taking the attention of developers, users are often kept waiting for production-ready Hadoop storage functionality in future releases. The current list of Hadoop storage gaps to be closed includes:

Inefficient and inadequate data protection and DR capabilities. Hadoop Distributed File System relies on the creation of replicated data copies (usually three) at ingest to recover from disk failures, data loss scenarios, loss of connectivity and related outages. While this process does allow a cluster to tolerate disk failure and replacement without an outage, it still doesn't totally cover data loss scenarios that include data corruption.

In a recent study, researchers at North Carolina State University found that, while Hadoop provides fault tolerance, "data corruptions still seriously affect the integrity, performance and availability of Hadoop systems." This process also makes for very inefficient use of storage media -- a critical concern when users wish to retain data in the Hadoop cluster for up to seven years, as may be required for regulatory compliance reasons. The Apache Hadoop development community is looking at implementing erasure coding as a second "tier" for low-frequency-of-access data in a new version of HDFS later this year.

HDFS also lacks the ability to replicate data synchronously between Hadoop clusters, a problem because synchronous replication can be a critical requirement for supporting production-level DR operations. And while asynchronous replication is supported, it's open to the creation of file inconsistencies across local/remote cluster replicas over time.

Inability to disaggregate storage from compute resources. HDFS binds compute and storage together to minimize the distance between processing and data for performance at scale, resulting in some unintended consequences for when HDFS is used as a long-term persistent storage environment. To add storage capacity in the form of data nodes, an administrator has to add processing resources as well, needed or not. And remember that 1 TB of usable storage equates to 3 TB after copies are made.

Data in/out processes can take longer than the actual query process. One of the major advantages of using Hadoop for analytics applications vs. traditional data warehouses lies in its ability to run queries against very large volumes of unstructured data. This is often accomplished by copying data from active data stores to the big data lake, a process that can be time-consuming and network resource-intensive depending on the amount of data. Perhaps more critically from the standpoint of Hadoop in production, this can lead to data inconsistencies, causing application users to question whether or not they are querying a single source of the truth.

Alternative Hadoop add-ons and storage systems

The Apache community often creates add-on projects to address Hadoop deficiencies. Administrators can, for example, use the Raft distributed consensus protocol to recover from cluster failures without recomputation, and the DistCp (distributed copy) tool for periodic synchronization of clusters across WAN distances. Falcon, a feed processing and management system, addresses data lifecycle and management, and the Ranger framework centralizes security administration. These add-ons have to be installed, learned and managed as separate entities, and each has its own lifecycle, requiring tracking and updating.

To address these issues, a growing list of administrators have begun to integrate data-center-grade storage systems with Hadoop -- ones that come with the required data protection, integrity, security and governance features built-in. The list of "Hadoop-ready" storage systems includes EMC Isilon and EMC Elastic Cloud Storage (ECS), Hitachi's Hyper Scale-Out Platform, IBM Spectrum Scale and NetApp's Open Solution for Hadoop.

Let's look at two of these external Hadoop storage systems in more detail to understand the potential value of this alternate route.

EMC Elastic Cloud Storage

ECS is available as a preconfigured hardware/software appliance or as software that can be loaded onto scale-out racks of commodity servers. It supports object storage services as well as HDFS and NFS v3 file services. Object access is supported via Amazon Simple Storage Service (S3), Swift, OpenStack Keystone V3 and EMC Atmos interfaces.

ECS uses Hadoop as a protocol rather than a file system and requires the installation of code at the Hadoop cluster level, and the ECS data service presents Hadoop cluster nodes with Hadoop-compatible file system access to its unstructured data. It supports both solid-state and hybrid hard drive storage embedded into ECS nodes and scales up to 3.8 PB in a single rack depending on user configuration. Data and storage management functions include snapshot, journaling and versioning, and ECS implements erasure coding for data protection. All ECS data is erasure coded, except the index and metadata, where ECS maintains three copies of the data.

Additional features of value in the context of enterprise production-level Hadoop include:

- Consistent write performance for small and large file sizes. Small file writes are aggregated and written as one operation, while parallel node processing is applied to large file access.

- Multisite access and three-site support. ECS allows for immediate data access from any ECS site in a multisite cluster supported by strong consistency (applications presented with the latest version of data, regardless of location, and indexes across all locations synchronized). ECS also supports primary, remote and secondary sites across a single cluster, as well as asynchronous replication.

- Regulatory compliance. ECS allows administrators to implement time-based data-retention policies. It supports compliance standards such as SEC Rule 17a-4. EMC Centera CE+ lockdown and privileged delete also supported.

- Search. Searches can be performed across user-defined and system-level metadata. Indexed searching on key value pairs is enabled with a user-written interface.

- Encryption. Inline data-at-rest encryption with automated key management, where keys generated by ECS are maintained within the system.

IBM Spectrum Scale

IBM Spectrum Scale is a scalable (to multi-PB range), high-performance storage system that can be natively integrated with Hadoop (no cluster-level code required). It implements a unified storage environment, which means support for both file and object-based data storage under a single global namespace.

For data protection and security, Spectrum Scale offers snapshots at the file system or set level and backup to an external storage target (backup appliance and/or tape). Storage-based security features include data-at-rest encryption and secure erase, plus LDAP/AD for authentication. Synchronous and asynchronous data replication at LAN, MAN and WAN distances with transactional consistency is also available.

Spectrum Scale supports automated storage tiering using flash for performance and multi-terabyte mechanical disk for inexpensive capacity with automated, policy-driven data movement between storage tiers. Tape is available as an additional archival storage tier.

Policy-driven data compression can be implemented on a per-file basis for approximately 2x improvement in storage efficiency and reduced processing load on Hadoop cluster nodes. And, for mainframe users, Spectrum Scale can be integrated with IBM z Systems, which often play the role of remote data islands when it comes to Hadoop.

A Spark on the horizon

Apache Spark as a platform for big data analytics runs MapReduce applications faster than Hadoop, but also like Hadoop, is a multi-application platform offering analysis of streaming data. Spark's more efficient code base and in-memory processing architecture accelerate performance while still leveraging commodity hardware and open-source code. Unlike Hadoop, Spark does not come with its own persistent data storage layer, however, so the most common Spark implementations are on Hadoop clusters using HDFS.

The growth of Spark is the result of growing interest in stream processing and real-time analytics. Again, Hadoop Distributed File System wasn't originally conceived to function as a persistent data store underpinning streaming analytics applications. Spark will make storage performance tiering for Hadoop even more attractive; yet another reason to consider marrying Hadoop with enterprise storage.