SSD caching

What is SSD caching?

SSD caching, also known as flash caching or flash memory caching, is the temporary storage of frequently accessed data on NAND flash memory chips in a solid-state drive (SSD) so that data requests can be met faster. Keeping this data in a fast SSD cache reduces latency, shortens load times and improves input/output (I/O) performance, including I/O operations per second (IOPS).

In a common scenario, a computer system stores a temporary copy of the most active data in the SSD cache and a permanent copy of the data on a hard disk drive (HDD). Using an SSD cache with slower HDDs can improve data access times.

The need for SSD caching

SSD caching works on both reads and writes.

The goal of SSD read caching in an enterprise IT environment is to store previously requested data as it travels through the network so it can be retrieved quickly when needed. Placing previously requested information in temporary storage, or cache, reduces demand on an enterprise's network and storage bandwidth and accelerates access to the most active data.

The objective of SSD write caching is to temporarily store data until slower persistent storage media has adequate resources to complete the write operation. In doing so, the SSD write cache can boost overall system performance.

SSD cache software, working with SSD cache drive hardware, can help boost the performance of applications and virtual machines (VMs), including those running on VMware vSphere and Microsoft Hyper-V. It can also extend the basic caching features of operating systems (OSes) such as Linux and Windows. SSD cache software options are available from storage system, OS, virtualization, application and third-party vendors.

All in all, SSD caching can boost access to frequently accessed data. In addition, it can be a cost-effective alternative to storing data on top-tier flash storage.

How SSD caching works

Host software or a storage controller determines the data that will be cached. An SSD cache is secondary to dynamic random access memory (DRAM), non-volatile RAM (NVRAM) and other RAM-based caches implemented in a computer system. Unlike the primary cache, where the data goes through the cache for each I/O operation, the SSD cache is only used if caching the data in it can improve overall system performance.

Once the SSD cache is set up, data gets stored in the low-latency SSD to allow for faster data access later. If the same data -- known as hot data or active data -- is required later, the computer will read the cached data from the SSD instead of from the slower storage media. Storing hot data on the SSD reduces latency and improves data access speed.

When a data request is made, the system queries the SSD cache after each DRAM-, NVRAM- or RAM-based cache miss. If the data is available in the SSD cache, it is known as a cache hit. The request goes to the primary storage system if the SSD cache does not have a copy of the data or if the data cannot be read from the SSD cache. When this situation occurs, known as a cache miss, the system has to access the required data from the original, slower storage media.

This is the sequence of events when data is accessed from the SSD cache:

- The computer checks the DRAM cache for the required data.

- If it finds the data, it accesses it.

- If it doesn't find the data in the DRAM, it checks the SSD cache.

- If it doesn't find the data in the SSD cache, it gets it from the persistent storage media.

- Frequently accessed data is copied to the SSD cache to allow for fast access later.
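
The lookup order above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each tier is modeled as a plain dictionary, and the `TieredReader` class and its `promote_after` threshold are hypothetical names standing in for whatever admission policy a real caching product applies.

```python
from collections import Counter


class TieredReader:
    """Toy model of the DRAM -> SSD cache -> persistent storage read path."""

    def __init__(self, hdd, promote_after=2):
        self.dram = {}                      # fastest tier, checked first
        self.ssd = {}                       # SSD cache, checked on a DRAM miss
        self.hdd = hdd                      # persistent storage (always has the data)
        self.misses = Counter()             # reads per key that had to go to the HDD
        self.promote_after = promote_after  # hypothetical "frequently accessed" threshold

    def read(self, key):
        """Return (data, name_of_tier_that_served_the_read)."""
        if key in self.dram:                # DRAM cache hit
            return self.dram[key], "dram"
        if key in self.ssd:                 # SSD cache hit
            return self.ssd[key], "ssd"
        data = self.hdd[key]                # SSD cache miss: read slower media
        self.misses[key] += 1
        if self.misses[key] >= self.promote_after:
            self.ssd[key] = data            # copy hot data into the SSD cache
        return data, "hdd"
```

With `promote_after=2`, the first two reads of a block are served from the HDD, and the third is served from the SSD cache.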

Important considerations for SSD caching

The effectiveness of an SSD cache depends on the ability of the cache algorithm to predict data access patterns. With efficient cache algorithms, a large percentage of I/O can be served from an SSD cache.
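
To see why the share of I/O served from the cache matters, consider a back-of-the-envelope model in which the average read latency is a weighted mix of SSD and HDD latency. The function name and the latency figures below (0.1 ms for the SSD, 10 ms for the HDD) are illustrative assumptions, not measurements of any particular device.

```python
def average_read_latency_ms(hit_ratio, ssd_ms, hdd_ms):
    """Weighted average latency: hits are served by the SSD, misses by the HDD."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * ssd_ms + (1.0 - hit_ratio) * hdd_ms


if __name__ == "__main__":
    # Assumed, illustrative latencies only.
    for ratio in (0.5, 0.9, 0.99):
        latency = average_read_latency_ms(ratio, ssd_ms=0.1, hdd_ms=10.0)
        print(f"hit ratio {ratio:.2f}: {latency:.3f} ms")
```

Under these assumed figures, raising the hit ratio from 50% to 90% cuts the average from about 5.05 ms to about 1.09 ms, which is why the quality of the caching algorithm dominates the result.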

Two of the most common SSD caching algorithms are:

- Least frequently used. Tracks how often data is accessed; the entry with the lowest count is removed first from the cache.

- Least recently used. Retains recently used data near the top of the cache; when the cache is full, the least recently accessed data is removed first.
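
The two eviction policies can be compared with a minimal in-memory sketch. The class names `LRUCache` and `LFUCache` are illustrative, capacity is counted in entries rather than bytes, and a capacity of at least one entry is assumed; production SSD caches track blocks on flash and use more refined variants.

```python
from collections import Counter, OrderedDict


class LRUCache:
    """Evicts the entry that was accessed least recently."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()          # oldest access first, newest last

    def get(self, key):
        if key not in self.items:
            return None                     # cache miss
        self.items.move_to_end(key)         # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # drop the least recently used entry


class LFUCache:
    """Evicts the entry with the lowest access count."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = {}
        self.counts = Counter()             # access count per cached key

    def get(self, key):
        if key not in self.items:
            return None                     # cache miss
        self.counts[key] += 1
        return self.items[key]

    def put(self, key, value):
        if key not in self.items and len(self.items) >= self.capacity:
            victim = min(self.items, key=lambda k: self.counts[k])
            del self.items[victim]          # drop the least frequently used entry
            del self.counts[victim]
        self.items[key] = value
        self.counts[key] += 1
```

The difference shows up when an old but popular block competes with a burst of new ones: LRU evicts it once it has gone unused for long enough, while LFU keeps it as long as its access count stays high.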

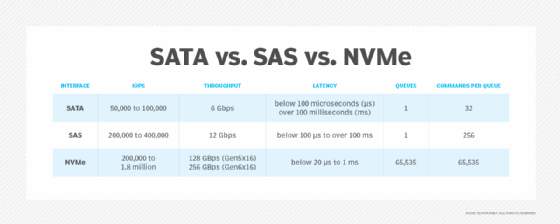

The form factor options of a flash-based cache include a Serial-Attached SCSI (SAS), Serial ATA or non-volatile memory express (NVMe) SSD; a Peripheral Component Interconnect Express (PCIe) card; or a dual in-line memory module (DIMM) installed in server memory sockets.

Types of SSD caching

System manufacturers implement different types of SSD write caching, which include the following:

Write-through SSD caching. The system simultaneously writes data to the SSD cache and the primary storage device, typically a hard disk drive system or other relatively slower media. When a file containing cached data is updated, both the cached copy and the copy on persistent storage are updated. However, the data is not available from the SSD cache until the host confirms that the write operation is complete at both the cache and the primary storage device.

Write-through SSD caching can be cheaper for a manufacturer to implement because the cache typically does not require data protection. Also, simultaneous updates prevent the loss of changes in the event of unexpected drive failure or power outage. The drawback of this approach is the latency associated with the initial write operation.

Write-back SSD caching. In this method, the data I/O block is written to (and therefore available from) the SSD cache before the data is written to the primary storage device and the host confirms that operation. The advantage is the low latency and higher performance for both reads and writes.

The main disadvantage is the risk of data loss in the event of an SSD cache failure. That said, vendors using a write-back cache try to minimize this risk by implementing protections, such as redundant SSDs, mirroring to another host or controller, or battery-backed RAM.

Write-around SSD caching. The system writes data directly to the primary storage device, initially bypassing the SSD cache. The SSD cache requires a warmup period, as the storage system responds to data requests and populates the cache. The response time for the initial data request from primary storage will be slower than subsequent requests for the same data served from the SSD cache. Write-around caching reduces the chance that infrequently accessed data will flood the cache and also efficiently caches high-priority data requests.
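
The three write policies differ mainly in where a write lands first. The following sketch models the SSD cache and the primary storage device as dictionaries; the `CachedStore` class, its `policy` strings and its `flush` method are hypothetical names chosen for illustration, and the model ignores latency, capacity limits and failure handling.

```python
class CachedStore:
    """Toy model of write-through, write-back and write-around caching."""

    POLICIES = ("write-through", "write-back", "write-around")

    def __init__(self, policy):
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.ssd = {}        # SSD cache contents
        self.hdd = {}        # primary (persistent) storage contents
        self.dirty = set()   # keys written to the cache but not yet to the HDD

    def write(self, key, value):
        if self.policy == "write-through":
            self.ssd[key] = value        # both copies are updated together
            self.hdd[key] = value
        elif self.policy == "write-back":
            self.ssd[key] = value        # acknowledged once the cache has it
            self.dirty.add(key)          # HDD copy is stale until flush()
        else:                            # write-around
            self.hdd[key] = value        # bypass the cache on writes
            self.ssd.pop(key, None)      # discard any stale cached copy

    def flush(self):
        """Write-back only: copy dirty cache entries to primary storage."""
        for key in self.dirty:
            self.hdd[key] = self.ssd[key]
        self.dirty.clear()

    def read(self, key):
        if key in self.ssd:              # cache hit
            return self.ssd[key]
        value = self.hdd[key]            # cache miss: read from primary storage
        self.ssd[key] = value            # populate the cache (warmup)
        return value
```

In this model, a write-back store that loses its `ssd` dictionary before `flush()` runs also loses every key in `dirty`, which is the data-loss risk described above. A write-around store only gains a cached copy on the first read, which corresponds to the warmup period.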

SSD caching locations

SSD caching can be implemented with an external storage array, a server, an appliance or a personal computing device, such as a desktop or laptop. For these client devices, technologies such as Intel Smart Response Technology, a feature of Intel Rapid Storage Technology (Intel RST), are available to store the most frequently used data and applications in an SSD cache.

With Intel RST, a lower-cost, small-capacity SSD can be used with a low-cost, high-capacity HDD to provide a cost-effective, high-performance SSD cache (and overall storage system). The SSD cache can be part of a solid-state hybrid drive or a separate drive used with an HDD. The technology is designed to distinguish high-value data, such as application, user and boot data, from low-value data, such as the data associated with background tasks.

Storage array vendors often use NAND flash-based caching to augment faster and more expensive DRAM- or NVRAM-based caches. Dedicated flash cache appliances are designed to add caching capabilities to existing storage systems. When inserted between an application and a storage system, flash cache appliances use built-in logic to determine which data should be placed in their SSDs. When a data request is received, the flash cache appliance can fulfill it if the data resides on its SSDs. Physical and software-based virtual appliances can cache data at a local data center or within the cloud.

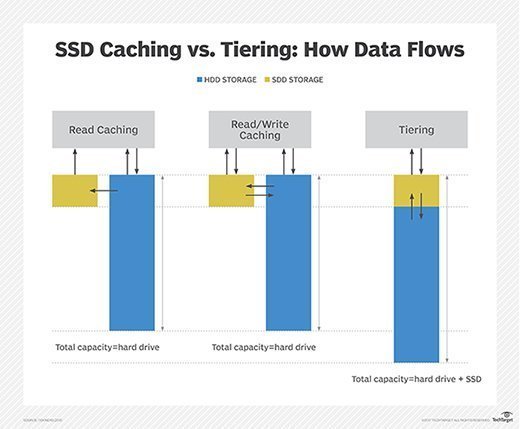

SSD caching vs. storage tiering

Manual or automated storage tiering moves data blocks between slower and faster storage media to meet performance, space and cost objectives. In contrast, SSD caching maintains only a copy of the data on high-performance flash drives. The primary version is stored on less expensive or slower media, typically HDDs, although low-cost flash can also be used.

SSD caching software or the storage controller determines which data will be cached. Also, a system does not need to move inactive data when the cache is full; it can simply invalidate it. Because only a small percentage of data is typically active at any given time, SSD caching can be a cost-effective approach to accelerate application performance, rather than storing all data on flash storage.
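
The distinction between moving data (tiering) and copying it (caching) can be shown with a short sketch. The function names are hypothetical and each tier is modeled as a dictionary; the cache entry is assumed to be clean, meaning it has not been modified since it was copied from primary storage.

```python
def tier_promote(key, fast_tier, slow_tier):
    """Tiering: the block is moved, so it lives on exactly one tier."""
    fast_tier[key] = slow_tier.pop(key)


def tier_demote(key, fast_tier, slow_tier):
    """Tiering: freeing fast space requires writing the block to the slow tier."""
    slow_tier[key] = fast_tier.pop(key)


def cache_fill(key, cache, primary):
    """Caching: the block is copied; primary storage keeps its version."""
    cache[key] = primary[key]


def cache_invalidate(key, cache):
    """Caching: a clean entry can be dropped with no data movement."""
    cache.pop(key, None)
```

Demoting a tiered block costs a write to the slower media, whereas invalidating a clean cache entry costs nothing beyond the bookkeeping.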

However, I/O-intensive workloads, such as financial trading applications, data analytics, virtualization, video streaming, content delivery networks (CDNs) and high-performance database servers, might benefit from data placement on a faster storage tier to avoid the risk of cache misses (which can happen with SSD caches).
