Continuous delivery vs. continuous deployment: Which to choose?

Continuous delivery and continuous deployment both compress and de-risk the final stages of production rollout. Learn how to choose the proper path for your organization.

IT teams don't have to choose between continuous delivery and continuous deployment for high-velocity software releases. Rather, they must choose the right approach for a specific process at a given time.

Modern organizations use agile development approaches to respond to market forces in real time. Thanks to virtual platforms and microservices, development teams can introduce new code to production quickly. Continuous integration, delivery and deployment speed up code deployments without increasing risk, and they help shift quality to the right -- into the purview of operations staff. Rapid feedback throughout CI/CD helps teams determine when the build broke and what change broke it. Together, these continuous approaches create a DevOps pipeline that organizations rely on to build and test software without unnecessary gates slowing rollout to production.

A DevOps environment requires all the elements of a continuous process to come together. To ensure that it works well, organizations generally use an integrated tool set that monitors, audits and fully controls the process. Such tools enable an organization to define how it handles different code streams. For example, an organization might flag a high-impact, highly complex code element as requiring continuous delivery, with oversight from admins throughout deployment, while sending more incremental, low-impact changes through an automated continuous deployment approach. Don't fall into the trap of choosing between continuous delivery and continuous deployment. Use each one at the right time.
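As a rough illustration of that routing policy, the Python sketch below sends each change down one of the two paths. The `Change` fields, the 1-to-5 impact and complexity scale, and the threshold value are hypothetical assumptions for this example; real tool sets express such rules in their own configuration.

```python
from dataclasses import dataclass
from enum import Enum


class Path(Enum):
    DELIVERY = "continuous delivery (human approval before production)"
    DEPLOYMENT = "continuous deployment (automatic release)"


@dataclass
class Change:
    # Hypothetical attributes a team might record for each change.
    description: str
    impact: int       # assumed scale: 1 (low) to 5 (high)
    complexity: int   # assumed scale: 1 (low) to 5 (high)


def choose_path(change: Change, threshold: int = 3) -> Path:
    """Route high-impact or highly complex changes through continuous
    delivery; let small, incremental changes deploy automatically."""
    if change.impact >= threshold or change.complexity >= threshold:
        return Path.DELIVERY
    return Path.DEPLOYMENT


if __name__ == "__main__":
    for c in [
        Change("Rewrite billing engine", impact=5, complexity=5),
        Change("Fix typo in help text", impact=1, complexity=1),
    ]:
        print(f"{c.description}: {choose_path(c).value}")
```

Treating either high impact or high complexity as enough to require human oversight is a deliberately cautious choice; a team could weigh the two factors differently.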

What is continuous integration?

The CI/CD pipeline begins with continuous integration, a process that prepares new code for production. Continuous integration (CI) enables organizations to add new code to address issues or add functionality to existing software products. Regular updates are necessary in any development approach that aims to respond to business needs. With CI pipeline capabilities, individuals or small teams can work on separate code areas and then bring those pieces of code together asynchronously. CI tools use automation to ensure a consistent and coherent approach as the code moves from development to the operational environment. CI tools check code quality, validate builds and test integrations into the main code branch before the change is pushed through to operations.

Exactly what integration means can vary by team and organization. At its simplest, it means running the compile step. Commonly, it also includes security checks, scans for common programming mistakes before compilation, and unit tests. When tests fail, the CI server knows which commit -- and which author -- introduced the change, so it can notify the author to fix the error. Version control keeps everything organized.
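A minimal sketch of that integration step follows, assuming each check is a shell command whose nonzero exit code means failure. The `Commit` type, the `notify` placeholder and the two stand-in check commands are hypothetical; a real team would plug in its own compiler, scanners and test runner.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class Commit:
    sha: str
    author_email: str


# Hypothetical stand-in checks; substitute the team's own static
# analysis, security scanning, compile and unit test commands.
CHECKS = [
    ("static checks", [sys.executable, "-c", "print('static checks passed')"]),
    ("unit tests", [sys.executable, "-c", "print('unit tests passed')"]),
]


def notify(email: str, message: str) -> None:
    # Placeholder: a real CI server would send email or a chat message.
    print(f"To {email}: {message}")


def run_ci(commit: Commit, checks=CHECKS) -> bool:
    """Run each check in order. Stop at the first failure and tell the
    author of the commit that introduced it."""
    for name, command in checks:
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            notify(commit.author_email,
                   f"Commit {commit.sha} failed '{name}': {result.stderr.strip()}")
            return False
    return True
```

Because the failing commit and its author are known at the moment a check fails, the feedback goes straight to the person best placed to fix it.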

Once the code passes tests, the pipeline can notify operations staff, who perform final checks and then trigger a deployment, a process called continuous delivery. Or, the code can go through specialized tooling to deploy automatically, known as continuous deployment. Both processes use the acronym CD, so a CI/CD pipeline could refer to either. Continuous delivery and continuous deployment setups compress the deployment process, so releases happen faster than with manual deployment. They ensure consistent testing and automate the configuration of production environment factors to minimize risk. Monitoring and rollback capabilities protect live software. To deploy small subcomponents safely, CD environments require an extensive set of automated tools or processes, and even these carry some minor risk.

What is continuous delivery?

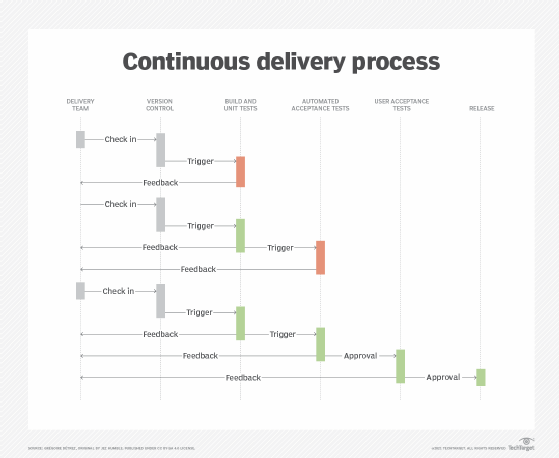

Continuous delivery is an extension of CI. Once code goes into version control, it gets tested, undergoes any needed fixes and rolls out. Steps along the way often include building the code, running tests, creating a virtual server and then running more automated checks against that server. If any step fails, the build fails, and the pipeline notifies the developer or team to fix the errors. Once everything passes the tests, the code goes to a final stage gate before the developer deploys it.
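The fail-fast behavior described above can be sketched as a loop over ordered stages. The stage names and the `lambda` stand-ins are hypothetical; each would wrap real build, provisioning or test tooling in practice. Note that even a fully green run stops at the final gate rather than deploying.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_delivery_pipeline(stages: List[Stage]) -> str:
    """Run stages in order. Any failure fails the whole build; if every
    stage passes, the build waits at a final gate for a human to deploy."""
    for name, stage in stages:
        if not stage():
            return f"FAILED at {name}: notify the team"
    return "READY: awaiting manual approval to deploy"


# Hypothetical stages standing in for real build and test tooling.
stages = [
    ("build", lambda: True),
    ("unit tests", lambda: True),
    ("provision test server", lambda: True),
    ("automated checks against test server", lambda: True),
]

print(run_delivery_pipeline(stages))  # prints: READY: awaiting manual approval to deploy
```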

The delivery pipeline provides the intelligent automation needed to advance software builds into the operational environment. Continuous delivery enables controlled and automated delivery, adding checks and balances so teams can roll back to a known viable state should anything go wrong. Administrators trigger the final deployment manually once they are sure the change is unlikely to cause any issues, and automation takes over from there. This increases admins' control: They can hold releases back until a group of changes is ready to roll out, or to fit business needs, such as waiting until payroll runs or end-of-quarter activities are over.
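One way to encode that kind of hold is a simple release policy check. In this sketch, the blackout dates, the minimum batch size and the `release_allowed` function are all hypothetical examples, not settings from any particular tool.

```python
from datetime import date
from typing import List, Tuple

# Hypothetical blackout windows (inclusive start and end dates), for
# example payroll processing and end-of-quarter close.
BLACKOUTS: List[Tuple[date, date]] = [
    (date(2024, 3, 25), date(2024, 4, 2)),
]


def release_allowed(today: date, pending_changes: int,
                    min_batch: int = 3,
                    blackouts: List[Tuple[date, date]] = BLACKOUTS) -> bool:
    """Hold a release until enough changes have accumulated and no
    business blackout window is in effect."""
    in_blackout = any(start <= today <= end for start, end in blackouts)
    return pending_changes >= min_batch and not in_blackout
```

A check like this only tells the admin whether releasing is sensible; in continuous delivery, the admin still makes the final call.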

In their DevOps book Continuous Delivery: Reliable Software Releases Through Build, Test, and Deployment Automation, Jez Humble and David Farley summarize the continuous delivery concept: "Automate the build, test and deploy process so that, if there are no problems with the software, it only takes one additional click to send it to production."

That final click triggers the actual deployment. In the illustration above, when the automated acceptance tests are complete, the pipeline sends feedback to the delivery team for human acceptance tests. In this step, the system prompts a tester, product owner or customer service representative to explore and double-check the environment. When they approve, the code rolls to production.
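The human acceptance step amounts to a gate that combines an automated result with at least one human sign-off. The sketch below assumes a hypothetical `ReleaseCandidate` type and a hypothetical set of roles allowed to approve, taken from the examples in the text.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical roles allowed to sign off on a release candidate.
APPROVER_ROLES = {"tester", "product owner", "customer service"}


@dataclass
class ReleaseCandidate:
    version: str
    acceptance_tests_passed: bool
    approvals: Set[str] = field(default_factory=set)

    def approve(self, role: str) -> None:
        if role not in APPROVER_ROLES:
            raise ValueError(f"{role!r} cannot approve releases")
        self.approvals.add(role)

    def can_deploy(self) -> bool:
        # The "one additional click": automated tests must pass AND at
        # least one human must have explored and approved the build.
        return self.acceptance_tests_passed and bool(self.approvals)
```

For example, a candidate whose automated tests passed cannot deploy until someone such as the product owner calls `approve("product owner")`.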

Testing isn't the only reason for this step. A product owner styling the user interface might want to bundle a set of changes. The company might roll out all the changes in a sprint together for a final regression burndown check, or perhaps target a specific day and time to mitigate business risk. In some cases, architectural constraints may prevent rollout of micro-features or micro-commits.

A team achieves continuous delivery when it can advance every change through the pipeline from code commit to ready-to-deploy, with deployment decoupled from that process and possible several times per day. Replacing this final human check with automation is the key difference in the other CD model: continuous deployment.

CI/CD in the cloud

Continuous integration tools can create a separate test environment for each software version. These tools can live in the cloud and run through a web browser. In that environment, moving from continuous delivery to continuous deployment might be a matter of moving a workflow step -- only the testers or employees can see the change, which is then tested in production and promoted to everyone or reverted if need be.
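The promote-or-revert flow described above can be modeled as a small state machine. The `Audience` levels and the `Release` class are hypothetical names for this sketch, and `production_checks_passed` stands in for whatever testing the team does while only internal users can see the change.

```python
from enum import Enum


class Audience(Enum):
    NONE = 0       # change not visible to anyone
    INTERNAL = 1   # testers and employees only
    EVERYONE = 2


class Release:
    def __init__(self, version: str) -> None:
        self.version = version
        self.audience = Audience.NONE

    def deploy_internally(self) -> None:
        self.audience = Audience.INTERNAL

    def evaluate(self, production_checks_passed: bool) -> Audience:
        """After testing in production with internal users only, promote
        the change to everyone or revert it."""
        if self.audience is not Audience.INTERNAL:
            raise RuntimeError("deploy to internal users first")
        self.audience = (Audience.EVERYONE if production_checks_passed
                         else Audience.NONE)
        return self.audience
```

Whether `evaluate` is called by a person or by automation is exactly the workflow step that separates continuous delivery from continuous deployment in this setup.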

Organizations that use CI/CD tools in the cloud may have the development maturity to choose a system that supports continuous deployment, with a built-in ability to fall back if quality problems arise. Leave the crawl-walk-run cadence of deployment to the legacy systems if you can.

What is continuous deployment?

Continuous deployment aims to remove the human element as much as possible from the process. Once CI has checked and validated a code element, continuous deployment pushes it automatically to the operational environment. This ensures that the live software always has the latest capabilities. However, it also means that any problems the new release causes require automatic remediation -- typically a rollback to a known-good state.
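A minimal sketch of deploy-then-remediate, assuming a hypothetical `Deployer` that keeps a history of versions and a caller-supplied `healthy` check standing in for real post-deployment monitoring:

```python
from typing import Callable, List


class Deployer:
    """Keeps a history of deployed versions so a failed release can be
    rolled back to the last known-good version."""

    def __init__(self, initial_version: str) -> None:
        self.history: List[str] = [initial_version]

    @property
    def live(self) -> str:
        return self.history[-1]

    def deploy(self, version: str, healthy: Callable[[str], bool]) -> str:
        self.history.append(version)   # push automatically, no human gate
        if not healthy(version):
            self.history.pop()         # automatic remediation: roll back
        return self.live
```

For example, starting from `Deployer("1.0")`, a healthy `1.1` goes live, while a `1.2` that fails its health check is removed and `1.1` stays live.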

Anything new introduces risks, so organizations typically use various countermeasures against problems, such as the following:

- Configuration flags. When a change rolls out on a configuration flag or feature flag, it only affects a small subset of users, typically just employees or testers. A simple bit flip in a database or a text file can expand features to a larger user category.

- Deployment of isolated components. APIs and microservices mean that application components can be isolated more easily than in tightly coupled app architectures. Developers can configure a change to make it essentially plug-and-play. Should the change cause problems, the old component can be plugged back in, replacing the new functionality and reverting to a known position.

- Automated contracts. API and code specifications define what the software should do and its basic expected level of operation. DevOps tools generally provide a means to set up such manifests and desired outcomes, with feedback loops that kick off automated rollback and remediation where required.

- Versioned interactions. Major version updates to an API can introduce breaking changes. To avoid this, have the code call a specific version of the API. Minor version changes should not change the contract, interface or expected results. API management tools can also help teams manage versions across complex environments.
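Of the countermeasures above, configuration flags are the simplest to illustrate. This sketch assumes flags live in a JSON document (the text mentions a database or text file); the `new_checkout` flag, the `enabled_for` key and the user categories are hypothetical.

```python
import json

# Hypothetical flag file contents: which user categories see a feature.
FLAGS_JSON = '{"new_checkout": {"enabled_for": ["employee", "tester"]}}'


def load_flags(raw: str) -> dict:
    return json.loads(raw)


def is_enabled(flags: dict, feature: str, user_category: str) -> bool:
    """A feature is visible only to the user categories listed for it."""
    return user_category in flags.get(feature, {}).get("enabled_for", [])


def expand(flags: dict, feature: str, user_category: str) -> dict:
    """Widen a feature's audience by editing data, not redeploying code."""
    categories = flags.setdefault(feature, {}).setdefault("enabled_for", [])
    if user_category not in categories:
        categories.append(user_category)
    return flags
```

Because exposure is controlled by data rather than code, widening the audience, or narrowing it again if problems appear, needs no new deployment.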

To make continuous deployment work, organizations need architecture and infrastructure that support frequent deployments. They must also have some of the de-risking self-support mechanisms above, as well as skilled staff to produce high-quality code quickly. The more automated the whole environment is, the more effective outcomes will be.

Continuous delivery vs. continuous deployment

Most organizations find it relatively straightforward to adopt a continuous delivery process -- perform a build step, run unit tests, and if the unit tests pass, push the build to a dev or test server. Organizations with isolated development, test and staging servers can deal with some instability in the development environment. But this involves human intervention at several stages: push to test once unit tests pass, push to staging after exploratory testing passes, and then push to production. This slows the entire process, but each step improves quality.

Legacy software teams that evaluate a continuous deployment strategy will likely find that it won't work for a variety of reasons. For example, the software deploys as one large, compiled program file, and could require thirty seconds or more of downtime for new code to roll out. In other examples, deploys are not isolated, or APIs are not versioned, or the system lacks automated contracts. These teams should start with continuous delivery and adjust the risk measures a bit at a time until continuous deployment is more viable.

Incremental modernization provides a pathway for teams to migrate legacy code to decoupled approaches suited to CI/CD. As new changes occur, admins can create them as add-on functions, where processes within the legacy software are brought out into an API-driven or microservices environment. As this goes on, fewer of the core processes are held within the legacy environment, which enables the development team to replace the core with new code that better meets a dynamic and responsive platform's needs.
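This incremental approach is often called the strangler fig pattern: a routing layer sends requests for extracted processes to new services and everything else to the legacy system. The handlers and the `/invoices` route below are hypothetical stand-ins.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def legacy_app(path: str) -> str:
    # Stand-in for the monolithic legacy application.
    return f"legacy handled {path}"


def invoices_service(path: str) -> str:
    # Stand-in for a process already extracted into a microservice.
    return f"invoices service handled {path}"


# Routes for processes moved out of the legacy code; this table grows
# as modernization continues, until little remains in the legacy core.
MIGRATED: Dict[str, Handler] = {
    "/invoices": invoices_service,
}


def route(path: str) -> str:
    for prefix, handler in MIGRATED.items():
        if path == prefix or path.startswith(prefix + "/"):
            return handler(path)
    return legacy_app(path)
```

Each new route moves one more process into a component that CI/CD can build, test and deploy independently.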

Editor's note: Matt Heusser originally wrote this article and Clive Longbottom expanded it.

Matt Heusser is managing director at Excelon Development, where he recruits, trains and conducts software testing and development.

Clive Longbottom is an independent commentator on the impact of technology on organizations. He was a co-founder and service director at Quocirca.