3 questions to ask in a digital twin testing scenario

Is your team prepared to capture the right data so that your digital twin testing is accurate and effective? Here's what experts say about proper test approaches.

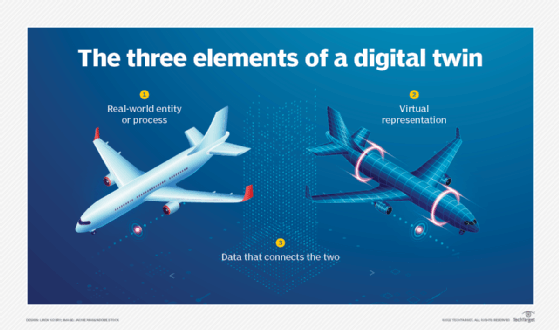

Digital twins are a popular way to model and test physical things, such as cars, factories and buildings, as well as less tangible entities, like business processes, supply chains and even city infrastructure. Teresa Tung, cloud-first chief technologist at Accenture, describes digital twins as a way for organizations to "transform physical product development in the same way software product development evolved from Waterfall to Agile."

The key difference between the traditional modeling workflows used for testing and the digital twin approach is that the latter runs on continuous updates received from real-world data. Systems designed to support digital twins typically come with a set of semantic schemas that help connect the dots between different views of both simulated and real-world components.

These types of schemas can help electrical, mechanical and other types of engineering teams explore design and performance tradeoffs through a single view rather than transferring data between separate tools and dashboards. But the digital twin approach is not foolproof, and organizations that want to implement it effectively need to ask themselves three important questions revolving around data integrity, business context and the accuracy of existing testing procedures.

Can the twin be trusted?

Bryan Kirschner, vice president of strategy at data management provider DataStax, recommended that QA teams pay special attention to how the state of the real world and the state of the digital twin might diverge. Testing teams need to run scenarios that define the range of conditions under which the twin can be trusted as an authoritative source of truth.
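As a rough illustration of that idea, the sketch below groups paired real-world and twin readings by operating condition and flags the conditions under which the two stay close. The `Sample` fields, the condition labels and the `max_divergence` threshold are all hypothetical and are not drawn from DataStax or any specific digital twin platform.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    condition: str     # hypothetical operating-condition label
    real_value: float  # measurement taken from the physical system
    twin_value: float  # value the digital twin reported for the same moment


def trusted_conditions(samples, max_divergence):
    """Map each condition to True only if every sample stays within max_divergence."""
    result = {}
    for s in samples:
        within = abs(s.real_value - s.twin_value) <= max_divergence
        # A single out-of-range sample marks the whole condition as untrusted.
        result[s.condition] = result.get(s.condition, True) and within
    return result


samples = [
    Sample("normal_load", 70.0, 70.5),
    Sample("normal_load", 71.0, 70.0),
    Sample("peak_load", 95.0, 88.0),
]
report = trusted_conditions(samples, max_divergence=2.0)
print(report)  # {'normal_load': True, 'peak_load': False}
```

In this made-up data set, the twin tracks the real system under normal load but drifts under peak load, which is the kind of boundary a QA team would want documented.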

"The objective of testing shifts from: 'How well does this system work?' to 'Can it be trusted as an authoritative source of truth?'" Kirschner said, stressing the importance of identifying any specific scenarios where it's possible that data from the twin may not be an accurate representation of real-world conditions. To accomplish this, QA teams need to ensure that the underlying design of digital twins aligns with an organization's currently existing testing workflows.

For example, Jim Christian, chief product and technology officer at mCloud Technologies, said the company is using a digital twin approach to model and simulate high-volume data processing systems to test operational performance in real time. The digital twins are designed using a modular architecture so that pieces can be tested both individually and collectively, which enables the team to keep closer track of data integrity.

Is the context clear?

According to Siva Anna, senior director of enterprise QA at digital services provider Apexon, it's important to carefully consider which data points QA teams collect from real-world sources for use with digital twins. A successful digital twin implementation depends on capturing specific, vital parameters that eventually help calibrate performance and design improvements. Testing teams should keep in mind that the various sensors that feed data to digital twin systems will likely span multiple data formats and quality levels, all of which need to be consolidated neatly into a single view.
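To make the consolidation step concrete, here is a minimal sketch that normalizes temperature readings arriving in different formats and drops the ones that cannot be used. The field names (`id`, `value`, `unit`) and the choice of Celsius as the common unit are assumptions made for illustration, not a format prescribed by any vendor mentioned here.

```python
def normalize(reading):
    """Convert a raw reading dict to {'sensor', 'celsius'}, or None if unusable."""
    value = reading.get("value")
    if value is None:
        return None
    try:
        value = float(value)  # accepts numbers and numeric strings
    except (TypeError, ValueError):
        return None  # e.g. "n/a" or other non-numeric payloads
    unit = str(reading.get("unit", "")).upper()
    if unit == "F":
        value = (value - 32.0) * 5.0 / 9.0
    elif unit != "C":
        return None  # unknown unit: reject rather than guess
    return {"sensor": reading.get("id"), "celsius": round(value, 2)}


# Hypothetical raw feed mixing strings, numbers, units and bad values.
raw = [
    {"id": "a1", "value": "21.5", "unit": "C"},
    {"id": "b7", "value": 212, "unit": "f"},
    {"id": "c3", "value": None, "unit": "C"},
    {"id": "d9", "value": "n/a", "unit": "C"},
]
unified = [r for r in (normalize(x) for x in raw) if r is not None]
print(unified)
```

Rejecting readings with unknown units or non-numeric values, instead of guessing, keeps low-quality data from silently skewing the single view that the twin relies on.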

Testing teams should also work with developers to understand the context, which is expressed through use cases, said Puneet Saxena, vice president of supply chain planning at Blue Yonder. For instance, integrated demand and supply planning for manufacturers uses a digital twin to model a physical supply chain in detailed software that covers both physical things, such as warehouse items, geographic locations or automated machines, and abstract entities, such as bills of materials, cycle time averages and production rate goals.

"The more realistic the representation of physical reality in the software domain, the better the outcome," Saxena said. For instance, a digital twin of a supply chain might help organizations determine realistic production benchmarks for a specific manufacturing facility based on predictions of what that facility should be able to produce given existing demand, availability of raw materials, work-in-process schedules or other factors related to production capabilities.

Is testing comprehensive and accurate?

Scott Buchholz, emerging technology research director at Deloitte Consulting, believes QA teams should also design digital twin tests for new control systems. These tests should compare how the real system and its digital twin behave when run with the control software, covering both expected behavior and failure modes.

QA teams need to troubleshoot deviations between simulated and real-world behavior; these can indicate a failure in the twin, the software or both. The number, nature and complexity of the tests vary depending on the criticality of the system. A software control system for an autonomous train, for instance, likely carries much more direct liability than the software that controls a residential thermostat system. Ultimately, tests should ensure that the end results mirror the expected results within predefined tolerance levels -- and Buchholz believes that these tests should take specific priority over others.
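A tolerance check of this kind might look like the following sketch, which applies a tighter relative tolerance to a safety-critical system than to a routine one. The tier names and tolerance values are hypothetical placeholders; real thresholds would come from the system's own requirements.

```python
import math

# Hypothetical relative tolerances per criticality tier.
TOLERANCE = {"safety_critical": 0.001, "standard": 0.05}


def within_tolerance(expected, actual, criticality):
    """True if actual is within the tier's relative tolerance of expected."""
    return math.isclose(expected, actual, rel_tol=TOLERANCE[criticality])


def check_run(expected, real, twin, criticality):
    """Report whether the real system and the twin each match the expected result."""
    return {
        "real_ok": within_tolerance(expected, real, criticality),
        "twin_ok": within_tolerance(expected, twin, criticality),
    }


# Thermostat setpoint in degrees: a loose tolerance is acceptable.
thermostat = check_run(20.0, real=20.5, twin=20.9, criticality="standard")
# Train speed: the same kind of gap fails under the tighter tolerance.
train = check_run(80.0, real=80.05, twin=80.5, criticality="safety_critical")
print(thermostat)  # {'real_ok': True, 'twin_ok': True}
print(train)       # {'real_ok': True, 'twin_ok': False}
```

A result where only one side falls outside tolerance does not by itself say whether the twin or the software is at fault; it only tells the team where to start troubleshooting.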

In some ways, implementing a digital twin resembles the introduction of test-driven development (TDD), where software teams create automated test suites before they begin writing code. In TDD, defects can arise in both the test cases and the software. Depending on the complexity of the automated tests, the size of the team, and the sophistication and underlying technology of the digital twin itself, those tests may be debugged and maintained by a QA team, the core development team or a dedicated application maintenance team.