Getty Images/iStockphoto

Choose a software testing model by weighing these 5 factors

Software development teams have options for their preferred software testing methodology. Consider these five key metrics before choosing a testing model for your team.

With software QA, a proper test model acts as the insurance policy. It allows an organization to find the right tradeoff of cost and value to the highest payoff for the software product. But what constitutes the right testing model?

Let's examine how teams can find their preferred software testing model, how it will align with the organization and its development style, and which approaches are available.

Factors that affect testing and value

When discussing software testing models and approaches, teams first need to determine what will provide the most value for their products. Important factors to consider include:

- feedback speed

- feedback accuracy

- feature-level testing

- regression approaches

- quantity of regression errors

Then, teams can discuss Scrum, Waterfall, Kanban and other software testing models to find the proper match.

For example, let's consider a team following Scrum. The team has testers who receive a build near the end of a sprint, and they perform a two-day regression burndown. In a two-week sprint timeline, the team reserves two "regression-test" cycles at the end, which culminates in four days, or 20% of the overall sprint time.

If the team discovers bugs on that fourth day, it means that the whole sprint will be late. At the same time, programmers are probably working on the next sprint in a different branch -- not just sitting around and waiting for bugs. Any bug fixes will need to merge into both branches, and any regression bugs they introduce won't be caught for at least two weeks. The tester will need to write up the bug, explain to someone how to reproduce it, argue whether it is a bug, argue whether it should be fixed, wait for a programmer to pick up the bug and eventually try to solve the bug problem. Sounds time-consuming and costly, doesn't it?

Now, let's compare the above scenario to one where a developer-tester pair working together finds a bug. The tester knows exactly who introduced the bug and can immediately address the problem. This second example significantly shortens the testing timeline and lowers the chance of an incomplete bug fix and/or creating another regression bug. This approach involves much less wasted time and a much lower chance the fix will be incomplete or create another regression bug.

Development teams can use a tool such as Jira to analyze the data and then learn how to shorten the timeframe. Once the team has this data, it becomes a lot easier to find the right software testing model for your organization.

Economic return on various software testing models

Specification by example (SBE). This software testing model aims to limit end-of-project arguments and rework that plague development teams who attempt to fix bugs by rewriting them at the end of the process. For example, teams can estimate how long the rework will take based on the amount of time it takes to write a new story and multiply by the number of people involved in the process and then again by the number of stories per sprint.

To be conservative, assume that only 50% of these arguments will be prevented by this estimation. Likewise, the team can also estimate the amount of extra time spent specifying. This simple math can show that five person-hours a week might actually save 20, which accounts for the only value of the SBE is time saved in arguments and rewrites during this sprint. Teams that refer to their documentation on what the software should do may find that value exponentially increases.

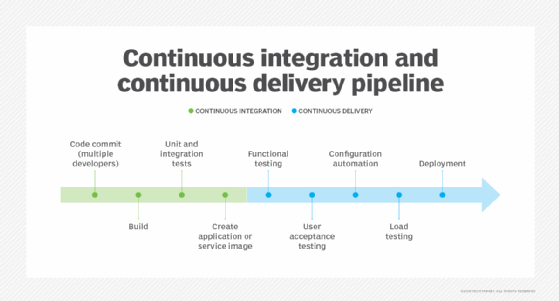

Continuous delivery. If your software components are isolated, can be deployed separately, and deployments in subsystem A don't create problems in subsystem B, then the team can stop doing regression testing entirely and deploy every feature as it's completed. The test strategy of these deployable targets (DTs) will largely depend on the feedback speed, feedback accuracy, feature-level testing, regression approaches and quantity of regression errors. API test tooling, for example, is relatively fast to write and fast to execute. Automated API tests act like a sort of contract -- given this input and database condition, expect this output.

Before a deployment, an API programmer can run a simple, quick check to see if their changes "broke" any of those expected results. On the other hand, GUI test tooling can be slow and brittle. For continuous delivery, it might make more sense to have a human run through the interface before deploying a small component. If, however, the component is not that small, requires a great deal of setup and needs to run on a dozen browser and mobile device combinations, then test tooling might save time.

Waterfall. According to Roger Ferguson, who taught my graduate courses in software management, testing in Waterfall has one huge advantage. That is, it is a phase that you must do only once. In every other software testing model, testing is an activity you repeatedly perform. In reference to the Scrum team example with the late sprint build request, regression testing has to happen every two weeks. Many teams that attempt continuous delivery try to test everything -- including the GUI -- each time a commit is pushed into version control. In Waterfall, testing is cheap for the first release if the regression-injection rate is high.

The longer developers go without regression tests, the more unknown defects they will create. A team that writes seven new bugs for every 10 that has 100 defects in the first build won't end up doing one test-fix cycle, they'll do seven before shipping with 11 bugs. Software development with Waterfall testing could work if there will be only one release, or if the software consists of a bunch of small modules that don't change that often. For example, companies that do machine learning batch applications and data science departments might have this style of develop, test, gather data and throw code away.

Kanban. Internal IT departments that work on tickets with small software changes and bug fixes might adopt a Waterfall-style approach to testing. That is, observe the bug, fix it, test around the fix and deploy. This can be particularly effective when the changes do not introduce regression bugs or when the systems are isolated, such as the IT department that supports a hundred different applications development independently, some for finance, some for HR, some for sales, and so on.