Agile testing quadrants: Guiding managers and teams in test strategies

Agile expert Lisa Crispin explains the four Agile testing quadrants and how they can guide managers and development teams in creating a test strategy.

Many software teams struggle with “fitting testing in” to the development lifecycle. For software managers and teams new to Agile development, the idea of planning and executing all the testing activities within short iterations and release cycles is daunting. I’m often asked questions such as: “When should we do performance testing? And who is going to do it? There aren’t any performance testing specialists on our cross-functional development team.” Substitute “user acceptance testing,” “exploratory testing,” “security testing,” or “usability testing” for “performance” – every Agile development organization faces similar challenges.

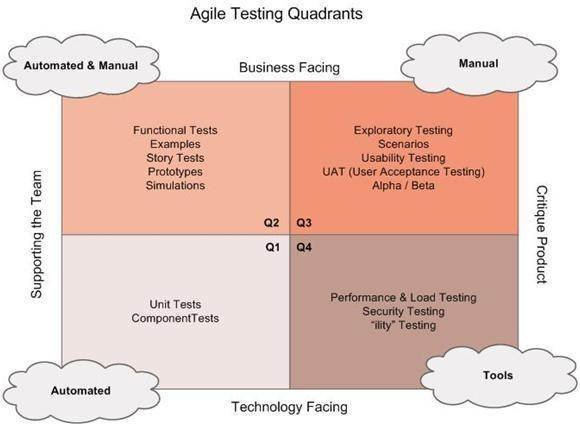

In my experience, a testing taxonomy such as the Agile testing quadrants (Figure 1) is a highly effective tool to help answer these questions.

Figure 1

How the quadrants work

The quadrants originated with Brian Marick's posts on his Agile testing matrix. With his permission, Janet Gregory and I adapted this into the Agile testing quadrants, which form the heart of our Agile Testing book. The quadrants represent the many different purposes for different types of testing.

Tests in the left-hand quadrants help the team know what code to write, and know when they are done writing it. Tests in the right-hand quadrants help the team learn more about the code they’ve written, and this learning often translates into new user stories and tests that feed back to the left-hand quadrants. Business stakeholders define quality criteria for the top two quadrants, while the bottom two quadrants relate more to internal quality and criteria.

The word “team” here includes both the customer and development teams. We need the business experts to provide examples that we turn into tests to drive development. We also need them to evaluate the resulting software as it is delivered in small increments, and give us fast feedback so we can make course corrections as we go.

The clouds at the quadrant corners denote whether tests in that quadrant generally require automation, manual testing or specialized tools. “Manual” doesn’t mean no specialized skills required – for instance, exploratory testing is a largely manual process but requires a high degree of expertise.

When to do which tests

The quadrant numbering system does not imply any order. You don’t work through the quadrants from Q1 to Q4 in a Waterfall style. Janet Gregory and I just chose an arbitrary numbering so that, in our book and when we are talking about the quadrants, we can say “Q1” instead of “technology-facing tests that support the team.”
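As a rough illustration, the taxonomy can be written down as two axes, with each quadrant label acting as shorthand for one combination. The sketch below is a minimal model, not part of the book: the class and variable names are made up for this example, and the phrase "critique the product" for the right-hand column is borrowed from the usual published version of the figure rather than from the text above.

```python
from dataclasses import dataclass
from enum import Enum


class Facing(Enum):
    BUSINESS = "business-facing"      # top row of the figure
    TECHNOLOGY = "technology-facing"  # bottom row of the figure


class Purpose(Enum):
    SUPPORT_TEAM = "support the team"          # left-hand column
    CRITIQUE_PRODUCT = "critique the product"  # right-hand column (assumed wording)


@dataclass(frozen=True)
class Quadrant:
    label: str
    facing: Facing
    purpose: Purpose

    def describe(self) -> str:
        # Expand the shorthand label into the long-form description.
        return f"{self.label}: {self.facing.value} tests that {self.purpose.value}"


# The labels are arbitrary shorthand; the dictionary order implies no sequence.
QUADRANTS = {
    "Q1": Quadrant("Q1", Facing.TECHNOLOGY, Purpose.SUPPORT_TEAM),
    "Q2": Quadrant("Q2", Facing.BUSINESS, Purpose.SUPPORT_TEAM),
    "Q3": Quadrant("Q3", Facing.BUSINESS, Purpose.CRITIQUE_PRODUCT),
    "Q4": Quadrant("Q4", Facing.TECHNOLOGY, Purpose.CRITIQUE_PRODUCT),
}

if __name__ == "__main__":
    for quadrant in QUADRANTS.values():
        print(quadrant.describe())
```

Modeling the quadrants as a lookup table rather than a list reflects the point that the numbers are names, not steps.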

Most projects would start with Q2 tests, because those are where you get the examples that turn into specifications and tests that drive coding, along with prototypes and the like. However, I have worked on projects where we started out with performance testing (which is in Q4) on a spike of the architecture, because that was the most important criterion for the feature. If your customers are uncertain about their requirements, you might even do a spike and start with exploratory testing (Q3).

Q3 and Q4 testing pretty much require that some code be written and deployable, but most teams iterate through the quadrants rapidly, working in small increments. Write a test for some small chunk of a feature, then write the code. Once the test is passing, perhaps automate more tests for it, do exploratory testing on it, and do security or load testing on it, whatever the feature calls for. Then add the next small chunk and go through the whole process again.

How to use the quadrants

When you get the team together to plan a release or theme, go through each quadrant and identify which types of testing will be needed. Maybe this project doesn’t require usability testing, but reliability testing is critical. Talk with your customers about quality criteria. What absolutely has to work?

Next, figure out if the team (or teams) have people with the right skills to accomplish all the different types of testing, and if they already have the necessary hardware, software, data and test environments. If not, brainstorm ways to get what is needed in a timely manner.

Here are some examples. Does the team already have appropriate data for testing? If not, you may need a user story to obtain or create test data, or perhaps a business expert will arrange to provide it. If load testing is critical, but nobody on the team has experience with load testing, you could budget in time for programmers to experiment with developing a load testing harness, schedule time with a load testing expert from a different team within the company, or plan to contract with a load testing specialist. Identifying these issues early gives you time to find creative solutions.
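The planning exercise described above amounts to comparing what each type of testing requires against what the team already has. Here is a minimal sketch of that comparison, assuming the team keeps a simple list of needs per release. The `Need` class, the `find_gaps` function and the sample skills and resources are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Need:
    """One type of testing the release requires (hypothetical structure)."""
    name: str
    quadrant: str
    requires: frozenset = frozenset()  # skills, tools, data, environments


def find_gaps(needs, available):
    """Return {testing type: sorted missing items} for every unmet need."""
    gaps = {}
    for need in needs:
        missing = need.requires - available
        if missing:
            gaps[need.name] = sorted(missing)
    return gaps


# Sample data, invented for this example.
RELEASE_NEEDS = [
    Need("load testing", "Q4",
         frozenset({"load testing experience", "load test environment"})),
    Need("exploratory testing", "Q3",
         frozenset({"exploratory testing experience"})),
    Need("story tests", "Q2",
         frozenset({"test data"})),
]

TEAM_HAS = frozenset({"exploratory testing experience", "test data"})

if __name__ == "__main__":
    for name, missing in find_gaps(RELEASE_NEEDS, TEAM_HAS).items():
        print(f"{name}: missing {', '.join(missing)}")
```

Each entry in the result is a candidate for a user story, a training plan or a request to another team, raised while there is still time to act on it.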

If the team has decided to try a new practice such as using business-facing tests to drive development (known as “acceptance test-driven development (ATDD)” or “specification by example (SBE)”), plan extra time for them to get up to speed with the practice. In the example of implementing ATDD, they need time to experiment with different approaches and create or adopt a testing framework. If nobody on the development team has experience with exploratory testing, you may want to plan training for that. The goal is to avoid suddenly getting stuck in mid-cycle due to lack of a particular testing skill, tool or infrastructure.
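For readers who have not seen business-facing tests drive development, the sketch below shows the general shape: examples supplied by a business expert are recorded as a table, and the code is checked against every row. It is only an illustration under stated assumptions. The fee rule, the threshold values and the names `EXAMPLES`, `monthly_fee` and `failing_examples` are invented, and real teams typically use a dedicated framework rather than a hand-rolled loop.

```python
# Examples a business expert might provide (hypothetical rule and values):
# (description, account balance, expected monthly fee)
EXAMPLES = [
    ("balance well below the threshold", 200, 5),
    ("balance just below the threshold", 999, 5),
    ("balance exactly at the threshold", 1000, 0),
    ("balance above the threshold", 5000, 0),
]


def monthly_fee(balance):
    """Code under test, written to satisfy the examples (hypothetical rule)."""
    return 5 if balance < 1000 else 0


def failing_examples(rule, examples=EXAMPLES):
    """Run every example against the rule and collect the ones that fail."""
    failures = []
    for description, balance, expected in examples:
        actual = rule(balance)
        if actual != expected:
            failures.append((description, expected, actual))
    return failures


if __name__ == "__main__":
    failures = failing_examples(monthly_fee)
    for description, expected, actual in failures:
        print(f"FAILED {description}: expected {expected}, got {actual}")
    if not failures:
        print("All business examples pass")
```

The examples are written before the code, so the table tells programmers what to build and tells everyone when the story is done.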

Repeat this process as you plan each iteration. For each user story, think through the testing requirements in each quadrant. How will tests be automated at various levels for a given story? When you start your planning by thinking about testing, you are likely to come up with technical implementations that make automation easier. If you lack some key testing component you may decide to postpone a theme or user story until that component can be obtained.

For example, my team had to rewrite the software that produces account statements for our financial services system so that it fit our new architecture. The legacy code had no automated regression tests, and the requirements were highly complex. We ran a huge risk of making mistakes that weren’t caught in testing, and mistakes on account statements are disastrous for the business. We decided to spend an entire sprint developing automated regression tests for the existing account statements, which required some creative experimentation. Armed with this safety net, we then developed the new code, confident that we had mitigated the risks.
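The article does not say how those regression tests were built. One common way to put a safety net under legacy code is a characterization test, which treats the existing output as the expected result and flags any input where the rewrite differs. The sketch below assumes that approach. Both rendering functions, the account layout and `find_regressions` are hypothetical stand-ins, not the team's actual code.

```python
def legacy_render_statement(account):
    """Stand-in for the legacy statement code (hypothetical)."""
    lines = [f"Account {account['id']}"]
    balance = 0
    for description, amount_cents in account["transactions"]:
        balance += amount_cents
        lines.append(f"{description}: {amount_cents / 100:.2f}")
    lines.append(f"Closing balance: {balance / 100:.2f}")
    return "\n".join(lines)


def new_render_statement(account):
    """Stand-in for the rewritten statement code (hypothetical)."""
    total = sum(amount for _, amount in account["transactions"])
    body = [f"{desc}: {amount / 100:.2f}" for desc, amount in account["transactions"]]
    return "\n".join(
        [f"Account {account['id']}", *body, f"Closing balance: {total / 100:.2f}"]
    )


def find_regressions(accounts, old, new):
    """Return the ids of accounts whose statements differ between versions."""
    return [account["id"] for account in accounts if old(account) != new(account)]


# Sample accounts, invented for this example; amounts are in cents.
SAMPLE_ACCOUNTS = [
    {"id": "A-1", "transactions": [("Deposit", 10000), ("Fee", -1250)]},
    {"id": "A-2", "transactions": []},
    {"id": "A-3", "transactions": [("Withdrawal", -99)]},
]

if __name__ == "__main__":
    differing = find_regressions(
        SAMPLE_ACCOUNTS, legacy_render_statement, new_render_statement
    )
    print("Statements that differ:", differing or "none")
```

In practice the sample would be drawn from a wide range of real account shapes, since the value of the net depends on how many cases it covers.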

It’s a tool, not a rule

Adapt and enhance the quadrants to suit your own needs. There are no hard and fast rules about which tests belong in which quadrant. Michael Hüttermann added “outside-in, barrier-free, collaborative” to the middle of the quadrants in his book, Agile ALM. Mike Cohn’s Test Automation Pyramid is a good complement to help teams focus their automation efforts for maximum return on investment. Pascal Dufour integrates risk analysis with quadrants to decide what level of detail is needed in the specifications.

The quadrants are simply a taxonomy that helps teams plan their testing. Using the quadrants helps teams make sure they have all the people and resources they need to accomplish it. Think through them as you do your release, theme and iteration planning, so your whole team starts out by thinking about testing first. Focus on the purpose of each testing activity, and the quality you are building into your software.