What is synthetic monitoring?

Synthetic monitoring is a proactive monitoring approach that uses scripted simulations of user interactions to assess the performance and availability of websites, applications and services. The scripts mimic typical user behaviors, such as navigating through pages, completing transactions and interacting with APIs.

The data generated from the simulated transactions is analyzed to evaluate how the system behaves. For example, synthetic monitoring could determine whether a website meets its targets for page load times, response times and uptime.

Synthetic monitoring assesses how a system is likely to respond to user requests before real users interact with it. It provides an opportunity to validate whether systems meet performance and availability requirements before they're put into production, which is what makes it a proactive approach. It's also valuable for assessing a system's response to unusual or infrequent requests, which might not be represented in data collected from transactions that real users initiate.

Synthetic monitoring can be used to test virtually any user transaction or request for any purpose. If a real user can initiate a request, that request can also be monitored synthetically. Synthetic monitoring is sometimes called active monitoring because it involves actively simulating transactions.

How does synthetic monitoring work?

Synthetic monitoring typically follows these five steps:

- Script development. Developers or quality assurance (QA) engineers write scripts that issue requests to a website, application or other system.

- Script execution. The scripts run at scheduled intervals, every 15 minutes for example, from geographically diverse locations to simulate user activity across various scenarios.

- Data collection. Monitoring software collects data about each transaction, capturing critical performance metrics such as webpage load times, response times, transaction success rates and uptime.

- Data analysis. The data collected by synthetic monitoring tools is analyzed to assess whether the system meets performance or availability requirements. The analysis can also reveal network bottlenecks, anomalies and other areas that need improvement.

- System optimization. If necessary, the website or application being monitored is updated or tuned to improve web performance and other metrics. Then another round of synthetic tests is run to determine whether the system now meets performance requirements.
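The script development and data collection steps can be illustrated with a small Python sketch that issues one HTTP request and records whether it succeeded and how long it took. The `CheckResult` type, the `run_http_check` function and the 2-second response-time threshold are hypothetical choices for this example, not part of any particular monitoring product. A real tool would also handle scheduling and run the script from multiple locations.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    url: str
    ok: bool                # True if the request succeeded within the threshold
    status: Optional[int]   # HTTP status code, or None if no response arrived
    elapsed_s: float        # wall-clock response time in seconds
    error: Optional[str] = None


def run_http_check(
    url: str,
    max_response_s: float = 2.0,  # hypothetical performance requirement
    timeout_s: float = 10.0,
    opener: Callable = urllib.request.urlopen,  # injectable so it can be tested offline
) -> CheckResult:
    """Issue one scripted request and record availability and response time."""
    start = time.perf_counter()
    try:
        with opener(url, timeout=timeout_s) as response:
            response.read()  # include the body download in the measured time
            status = response.status
    except urllib.error.HTTPError as exc:
        # The server answered with an error status (e.g., 500); record the code.
        return CheckResult(url, False, exc.code, time.perf_counter() - start, str(exc))
    except (urllib.error.URLError, OSError) as exc:
        # DNS failures, refused connections and timeouts end up here.
        return CheckResult(url, False, None, time.perf_counter() - start, str(exc))
    elapsed = time.perf_counter() - start
    ok = 200 <= status < 400 and elapsed <= max_response_s
    return CheckResult(url, ok, status, elapsed)
```

Calling `run_http_check("https://example.com/")` from a scheduler, such as cron, a CI job or a monitoring agent, at a fixed interval produces the series of results that the data analysis step works on.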

These steps involve running automated synthetic tests using scripted requests. The scripts effectively act as bots that continuously test the performance and availability of websites and other online services. It's possible to perform synthetic testing manually by triggering transactions by hand, but that approach is difficult to scale and takes more effort.

By scripting the synthetic testing process, developers and QA teams make testing faster and more efficient. Scripted tests can also be rerun whenever an update is applied to a website or application, which makes it easy to test the system consistently and gain confidence that the update didn't introduce an availability or performance issue. When a rerun does surface a problem, comparing its results with earlier runs helps narrow down the root cause.
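Rerunning the same scripts before and after an update yields two comparable sets of results. One simple way to turn them into a pass/fail gate, for example in a CI/CD pipeline, is to require that every post-update check succeeded and that the median response time didn't grow by more than a chosen tolerance. The `compare_runs` function and the 20% tolerance below are illustrative assumptions, not a standard rule.

```python
from statistics import median
from typing import Sequence, Tuple


def compare_runs(
    before_s: Sequence[float],  # response times (seconds) from the pre-update run
    after_s: Sequence[float],   # response times (seconds) from the post-update run
    after_failures: int,        # number of failed checks in the post-update run
    tolerance: float = 0.20,    # assumed allowed relative slowdown of the median
) -> Tuple[bool, str]:
    """Return (passed, reason) for a before/after synthetic test comparison."""
    if not before_s or not after_s:
        raise ValueError("both runs need at least one successful sample")
    if after_failures > 0:
        return False, f"{after_failures} check(s) failed after the update"
    before_med, after_med = median(before_s), median(after_s)
    limit = before_med * (1 + tolerance)
    if after_med > limit:
        return False, (
            f"median rose from {before_med:.3f}s to {after_med:.3f}s "
            f"(limit {limit:.3f}s)"
        )
    return True, f"median {after_med:.3f}s within limit {limit:.3f}s"
```

Using the median rather than the mean keeps a single slow outlier in either run from deciding the result on its own.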

Synthetic monitoring vs. passive or real user monitoring (RUM)

Synthetic monitoring isn't the only way to monitor a website or application. Developers and QA engineers can also perform what's known as passive or real user monitoring.

With RUM, data is collected and analyzed based on requests initiated by actual users. To use RUM, companies usually add JavaScript code to their websites that gathers performance data as real visitors browse the pages. In other words, instead of measuring metrics such as page load times and response times based on simulated requests, engineers collect this data from systems running in a production environment.

With both types of monitoring, the data analyzed and the insights engineers seek are fundamentally the same. The main difference lies in how the data is generated: from simulated transactions or from transactions initiated by live, human end users.

Because of this difference, synthetic monitoring and RUM are typically used for different purposes. Synthetic monitoring helps to test or evaluate a system before it's placed into production. Real user monitoring helps to identify issues -- like slow response times or errors -- that users might experience once the system is live.

Types of synthetic monitoring

The following are several different approaches to synthetic monitoring:

- Performance monitoring. Simulated transactions can be monitored to determine whether a system meets performance requirements, such as completing a request within a given time frame.

- Load testing. By generating a large volume of simulated requests, synthetic monitoring lets engineers evaluate how a system behaves under a heavy load. Load testing shows whether a website or application is likely to crash when there's a spike in user demand.

- Transaction monitoring. Developers or QA engineers use this approach to determine how a system handles a specific type of request, such as a newly introduced feature that hasn't yet been deployed to production. To do this, they initiate and evaluate transactions that simulate that request.

- Component monitoring. In distributed systems, synthetic monitoring is useful for testing certain parts of the system, such as a particular microservice. Requests are directed at the part being tested, and the response is measured.

- API monitoring. APIs handle data exchanges between different systems, system components and endpoints. Synthetic API tests let engineers assess whether APIs handle requests correctly. For example, developers use API monitoring to check whether a third-party API integrated with an application behaves as expected.

- Web performance monitoring. This type of browser monitoring proactively evaluates website performance by simulating user interactions. It measures page load speeds, assesses frontend and backend response times, and identifies issues such as slow third-party content, content delivery network (CDN) delays and errors.

- DNS monitoring. This approach verifies that DNS records resolve to the correct IP addresses. DNS monitoring helps prevent domain resolution issues that could make a website inaccessible.

- Secure Sockets Layer monitoring. SSL monitoring verifies that SSL/Transport Layer Security (TLS) certificates are valid and haven't expired. By flagging certificates that are nearing expiration, it helps prevent browser security warnings and potential website inaccessibility.
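DNS and certificate checks are among the simplest synthetic tests to script. The sketch below uses only the Python standard library: `socket.getaddrinfo` resolves a hostname, and a TLS handshake through the `ssl` module retrieves the server certificate's `notAfter` date. The function names, the expected IP set and the 14-day warning window are assumptions for illustration; hosted tools typically add alerting and run these checks from many locations.

```python
import socket
import ssl
import time
from typing import Iterable, Optional, Set


def resolved_ips(hostname: str) -> Set[str]:
    """Resolve a hostname to the set of addresses the local resolver returns."""
    infos = socket.getaddrinfo(hostname, None)
    return {info[4][0] for info in infos}  # info[4] is the sockaddr tuple


def dns_check(hostname: str, expected_ips: Iterable[str]) -> bool:
    """Pass if the name resolves and every address is one we expect."""
    ips = resolved_ips(hostname)
    return bool(ips) and ips <= set(expected_ips)


def cert_not_after(hostname: str, port: int = 443, timeout_s: float = 10.0) -> str:
    """Fetch the certificate's 'notAfter' field via a verified TLS handshake."""
    context = ssl.create_default_context()  # validates the chain and the hostname
    with socket.create_connection((hostname, port), timeout=timeout_s) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Convert a notAfter string such as 'Jun  1 12:00:00 2030 GMT' to days left."""
    now = time.time() if now is None else now
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def cert_check(not_after: str, warn_days: float = 14, now: Optional[float] = None) -> bool:
    """Pass if the certificate stays valid for at least `warn_days` more days."""
    return days_until_expiry(not_after, now) >= warn_days
```

For example, `cert_check(cert_not_after("example.com"))` returns `False` once the certificate is within 14 days of expiry. A certificate that has already expired or is otherwise invalid makes the handshake itself fail with `ssl.SSLCertVerificationError`, which a check should also treat as a failure.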

Synthetic monitoring key use cases

Synthetic monitoring helps ensure optimal performance and availability across diverse industries. The following are examples of synthetic monitoring use cases.

- E-commerce. Businesses rely heavily on synthetic monitoring to simulate user journeys in an e-commerce environment, such as browsing, adding items to the cart and checking out. For example, major retailers continuously test their checkout flow across global locations to detect and fix issues, such as slow payment processing and promo code errors, before they affect real customers.

- Banking and finance. Financial institutions must ensure the reliability and security of their online banking platforms and transaction systems. A bank could use synthetic monitoring to replicate user actions involved in transferring funds between accounts to help identify any delays or glitches in the transaction process.

- Healthcare. Synthetic monitoring helps ensure that critical systems, such as online portals and applications for patient record access and telemedicine services, remain available and perform optimally. A hospital system could use synthetic monitoring to simulate interactions with its patient portal, such as accessing records and scheduling appointments, to ensure seamless access to essential services.

- Logistics and supply chain management. In logistics and supply chain management, synthetic monitoring helps verify package tracking accuracy, warehouse system performance and real-time inventory updates. Shipping companies use it to confirm that tracking data and notifications are accurate and timely, while logistics firms test warehouse software to ensure smooth order fulfillment.

- Media and entertainment. Streaming services and online media platforms must deliver a high-quality user experience (UX) with minimal buffering and downtime. Synthetic monitoring helps them monitor the performance of their streaming infrastructure and content delivery networks. A streaming video service could use synthetic monitoring to simulate user sessions from various geographic locations to assess buffer times, video quality and the overall streaming experience.

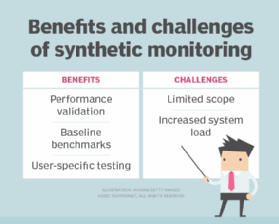

Synthetic monitoring benefits and challenges

There are several advantages to using synthetic monitoring. However, the technology also comes with its share of issues.

Benefits

The following are the main benefits of synthetic monitoring:

- Performance validation. The main benefit of synthetic monitoring is its ability to validate system performance and identify potential issues before they affect the actual end-user experience. It lets organizations proactively monitor and address performance and availability problems before they disrupt production applications.

- Baseline benchmarks. Synthetic monitoring helps teams establish a baseline for expected application behavior. By analyzing a series of simulated transactions, engineers can determine how the system should operate under normal conditions. Then, once it's in production, they can detect anomalies and troubleshoot by looking for patterns that deviate from the benchmarks.

- User-specific testing. Synthetic monitoring makes it possible to run tests from the perspective of specific users. For example, developers might want to evaluate how an application performs for users who rely on special accessibility features that other users don't need, or for users in a geographic region far from the data center hosting the application. Developers and QA engineers can determine which types of requests a given user is likely to make, or they can initiate requests under specific conditions, such as sending them from the region of interest so they're routed the way that region's traffic would be. They can then run simulated tests to determine how the system behaves for that type of user and the transactions that user performs.

- Continuous global testing. Synthetic monitoring assesses application performance from various global locations. Running tests from multiple regions provides insights into performance variations, helping to optimize UX worldwide.

- Service-level agreement compliance. Synthetic monitoring supports SLA compliance by consistently measuring uptime and response times, which helps verify that performance commitments are met and provides data for audits.
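The baseline and SLA benefits above both come down to simple arithmetic over stored check results. As a minimal sketch, assuming each result is kept as a `(succeeded, response_time_seconds)` pair, the code below computes uptime as the share of successful checks, builds a baseline from the mean and standard deviation of normal response times, and flags new measurements more than three standard deviations above that baseline. The three-sigma rule is one simple, common choice, not the method any specific tool is known to use.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple

CheckSample = Tuple[bool, float]  # (succeeded, response time in seconds)


def uptime_percent(samples: Sequence[CheckSample]) -> float:
    """Share of synthetic checks that succeeded, as a percentage."""
    if not samples:
        raise ValueError("no samples")
    return 100.0 * sum(1 for ok, _ in samples if ok) / len(samples)


def build_baseline(samples: Sequence[CheckSample]) -> Tuple[float, float]:
    """Mean and sample standard deviation of response times from successful checks."""
    times = [t for ok, t in samples if ok]
    if len(times) < 2:
        raise ValueError("need at least two successful samples for a baseline")
    return mean(times), stdev(times)


def anomalies(
    baseline: Tuple[float, float],
    new_times: Sequence[float],
    k: float = 3.0,  # assumed sensitivity: flag values above mean + k * stdev
) -> List[float]:
    """Return the new response times that deviate from the baseline."""
    mu, sigma = baseline
    return [t for t in new_times if t > mu + k * sigma]
```

To check an SLA that promises, say, 99.9% availability, a team could compare `uptime_percent` over the contract period against 99.9. Note that uptime measured this way is only as fine-grained as the check interval.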

Challenges

The following are the main challenges of synthetic monitoring:

- Limited scope. Synthetic monitoring tests only transactions that engineers decide to simulate. As a result, it doesn't typically cover the full scope of request types that real users are likely to make. If a certain kind of transaction triggers an issue but isn't simulated during synthetic testing, the problem might be missed until the application is in production.

- Increased system load. Synthetic monitoring increases the workload placed on a system because it adds to the total number of requests a system has to handle. This typically isn't a major problem, especially if synthetic tests are run against a testing version of a system rather than against a production system. But if teams run synthetic tests against a website or web application that's also handling real user requests at the same time, there's a risk that the additional testing load will negatively affect the digital experience of real users.

- Script maintenance. Simulating complex user interactions requires detailed scripts. As applications evolve, these scripts need regular updates to remain effective, and this work is typically time-consuming and resource-intensive.

- Geographic variability. Synthetic monitoring can be performed from various locations, but ensuring comprehensive global coverage can be costly and complex. Variations in network conditions and infrastructure across different global regions can also make it difficult to obtain consistent and accurate results.

- Result analysis. Analyzing and interpreting the vast amount of data generated by synthetic monitoring can be challenging. Organizations must possess the necessary expertise and tools to identify and resolve performance issues effectively.

Synthetic monitoring tools

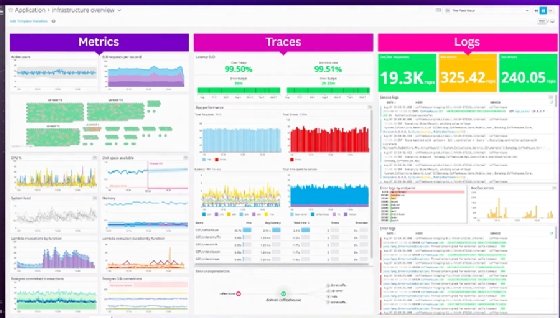

Most modern application performance monitoring (APM) tools and platforms include synthetic monitoring in their feature sets. Many also integrate with other enterprise software and monitoring tools.

It's possible to build bespoke synthetic monitoring tools by writing scripts that initiate transactions and monitor the results. However, the synthetic monitoring process is more efficient when engineers use platforms designed to streamline the process of writing tests, deploying tests and analyzing the results.

Based on Informa TechTarget research and sources such as Better Stack and WP Speed Fix, the following is an alphabetical list of common synthetic monitoring tools used by organizations:

- Cisco ThousandEyes Network and Application Synthetics. This Cisco tool proactively simulates user experiences to assess network and application performance. It generates synthetic traffic to detect bottlenecks, diagnose issues across network layers and provide end-user insights to help resolve problems before they affect operations.

- Datadog. Datadog provides uptime and performance monitoring by simulating user requests from various global locations to get detailed insights into application performance. Key features of this tool include monitoring multiple network layers, integrating synthetic tests into continuous integration/continuous delivery (CI/CD) pipelines, automatic user interface change detection and step-by-step user flow visualization.

- Dynatrace. The Dynatrace platform provides AI-powered proactive testing by simulating user interactions across web applications, APIs and mobile apps. It goes beyond basic uptime checks, offering advanced capabilities such as business transaction monitoring and automated root cause analysis.

- New Relic Synthetics. This proactive monitoring tool in the New Relic observability platform simulates user interactions to test the performance and availability of websites, APIs and applications. It offers scripted browser tests, API tests and simple ping checks from a global network of locations. This enables users to identify and resolve performance issues before they affect real customers.

- Site24x7. This tool provides a range of synthetic monitoring capabilities, from basic availability checks using ping to advanced browser-based transaction monitoring across many global locations. It integrates with Site24x7's broader monitoring capabilities, providing a unified infrastructure and application performance view.

- SolarWinds Pingdom. This cloud-based service conducts uptime, response time and transaction monitoring from more than 100 locations worldwide. It includes both programmatic and browser-based checks to ensure comprehensive monitoring. The monitoring stats are presented in detailed reports and alerts.

- Uptrends. This tool suite proactively tests the performance and availability of websites, APIs and web applications. It simulates user interactions from a global network of 233 checkpoints, providing detailed insights into uptime, page load speeds and transaction success rates.

Which synthetic monitoring tool is best for you?

Selecting the appropriate synthetic monitoring tests and tools for an organization requires careful consideration of several factors. Some points and features to consider before selecting a provider include the following:

- Define monitoring objectives. Organizations should define their synthetic monitoring goals, such as tracking uptime, evaluating transaction performance and monitoring API endpoints. They should also identify key user flows that require monitoring, including login and checkout processes.

- Multistep transaction monitoring. The selected tool must accurately simulate multistep transactions so that monitoring reflects the complete user experience. These simulations should cover various user actions, such as logging in, searching for products, adding items to the cart and completing purchases. It's important to test these flows thoroughly to confirm that key user actions work correctly in the web application.

- Global monitoring coverage. For organizations with a global user base or those aiming to scale their business, the synthetic monitoring tool should provide monitoring from multiple locations worldwide. Since global monitoring collects performance data from various regions, it helps identify and resolve latency and regional issues. This, in turn, enables targeted optimizations, such as using CDNs or strategically placing servers to enhance the UX worldwide.

- Intuitive interface. Complex tools hinder adoption and create unnecessary frustration. Organizations should select a synthetic monitoring platform that's intuitive and user-friendly to improve efficiency and shorten the learning curve.

- Integration with existing systems. To ensure cohesive operations, the synthetic monitoring tool must integrate seamlessly with existing systems, including workflows, CI/CD pipelines, alerting mechanisms and other monitoring tools.

- Reporting and alerting mechanisms. Organizations should look for tools that offer customizable dashboards, real-time alerts and comprehensive reporting features to facilitate timely issue resolution and informed decision-making.

- Cost and licensing evaluation. Organizations should evaluate the pricing options of a synthetic monitoring tool and compare them to their budget to find the best fit for their needs. Free trials let organizations test a tool's capabilities before committing.
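When evaluating multistep transaction monitoring, it helps to know what such a check does under the hood: it runs each step of a user journey in order, times it and stops at the first failure, so the report shows exactly where the flow broke. The sketch below is tool-agnostic. The `run_journey` function, the step names and the placeholder step functions are hypothetical; in practice, each step would drive a browser automation library or call the application's API.

```python
import time
from typing import Callable, List, Sequence, Tuple

Step = Tuple[str, Callable[[dict], None]]  # (step name, action that raises on failure)


def run_journey(steps: Sequence[Step]) -> Tuple[bool, List[Tuple[str, str, float]]]:
    """Run steps in order with a shared context; stop at the first failing step.

    Returns (succeeded, [(step name, "ok" or error text, seconds), ...]).
    """
    context: dict = {}  # lets later steps reuse data from earlier ones, e.g., a session
    report: List[Tuple[str, str, float]] = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action(context)
        except Exception as exc:  # any exception counts as a failed step
            report.append((name, f"failed: {exc}", time.perf_counter() - start))
            return False, report
        report.append((name, "ok", time.perf_counter() - start))
    return True, report


# Hypothetical placeholder steps for a checkout journey.
def login(ctx: dict) -> None:
    ctx["session"] = "demo-session"


def search(ctx: dict) -> None:
    ctx["product_id"] = 42


def add_to_cart(ctx: dict) -> None:
    if "product_id" not in ctx:
        raise RuntimeError("no product selected")
    ctx["cart"] = [ctx["product_id"]]


def checkout(ctx: dict) -> None:
    if not ctx.get("cart"):
        raise RuntimeError("cart is empty")
```

Reporting per-step timings, not just an overall pass/fail result, lets a team see, for example, that checkout slowed down while login did not.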
