What is threat hunting? Key strategies explained

If you are ready to take a more proactive approach to cybersecurity, threat hunting might be a tactic to consider. Here's what security teams should know.

Early detection is a proven way to minimize undesirable consequences. It is a truism in healthcare, where tests can identify problems while they are still treatable, and it applies in cybersecurity, too.

While there are hundreds of tools that attempt to detect and alert users to nefarious activity in a passive way, proactive investigation can also provide significant value. An early warning delivers a chance to disrupt the attacker's kill chain (i.e., their path to acting on their objectives) before it is too late.

So how do we accomplish this proactive detection? One primary mechanism is threat hunting.

What is threat hunting?

Threat hunting is the practice of actively investigating your organization's technology environment to look for evidence of attacker activity.

While passive alerting is valuable, it has limitations. Sophisticated attackers, for example, can operate clandestinely enough that they never trigger passive detection systems. Likewise, certain types of attacks are less likely to be detected than others. Bad actors can use what are called living-off-the-land techniques, such as fileless malware, which abuse legitimate tools already present on a system. These techniques are more difficult to detect because they don't produce artifacts on the file system, giving attackers room and time to act.

With proactive security measures, teams can build instrumentation to provide human engineers with a level of visibility that enables them to actively seek out attacker activity. They start with the presupposition that the organization could already be compromised. From there, they use tools to examine their IT environment for evidence that confirms or contradicts hypotheses about how and where such a compromise might have occurred.

It's worth noting that threat hunting is distinct from the related concept of threat intelligence. Threat hunting is the process of looking for threats in your environment. Threat intelligence, by contrast, refers to obtaining information about those threats in the first place. This intelligence might include details about criminal groups, nation-states, hacking groups and other bad actors, along with their motivations, the tools and techniques they use, campaigns underway and so on. It can also include specific indicators you can look for to determine whether attackers have been present in your environment. Threat intelligence is a powerful tool and a useful supporting element of a threat hunting program, but the two should not be confused.

Types of threat hunting

So how does one go threat hunting? At a high level, there are a few different approaches.

Structured threat hunting. This involves a systematic examination of the environment based on attacker techniques. It requires a thorough understanding of the tactics, techniques and procedures (TTPs) that attackers use in their activities. In an approach like this, an organization might look to catalogs of attacker techniques, such as those inventoried in the Mitre ATT&CK framework, to find evidence of an intrusion. Structured threat hunting evaluates those common attacker toolkits and methodologies to determine if a bad actor has used those methods to compromise your environment.
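
To make the structured approach concrete, here is a minimal Python sketch that maps a couple of ATT&CK technique IDs to simple checks over process-creation events. The `ProcessEvent` type, the `HUNTS` catalog and the detection patterns are all hypothetical simplifications; a real hunt would draw on far richer telemetry and logic, and these patterns would miss many variants.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProcessEvent:
    host: str
    image: str          # executable name, e.g. "powershell.exe"
    command_line: str


# Hypothetical hunt catalog: each ATT&CK technique ID maps to a simple predicate.
# These patterns are deliberately simplified for illustration.
HUNTS: dict[str, Callable[[ProcessEvent], bool]] = {
    # T1059.001, Command and Scripting Interpreter: PowerShell (encoded commands)
    "T1059.001": lambda e: (
        e.image.lower() == "powershell.exe"
        and re.search(r"\s-(e|ec|enc|encodedcommand)\b", e.command_line, re.I) is not None
    ),
    # T1105, Ingress Tool Transfer (certutil abused as a downloader)
    "T1105": lambda e: (
        e.image.lower() == "certutil.exe" and "-urlcache" in e.command_line.lower()
    ),
}


def run_hunts(events: list[ProcessEvent]) -> dict[str, list[ProcessEvent]]:
    """Return, for each technique ID, the events that match its predicate."""
    findings: dict[str, list[ProcessEvent]] = {tid: [] for tid in HUNTS}
    for event in events:
        for tid, matches in HUNTS.items():
            if matches(event):
                findings[tid].append(event)
    return findings


events = [
    ProcessEvent("ws-01", "powershell.exe", "powershell.exe -NoProfile -enc SQBFAFgA"),
    ProcessEvent("ws-02", "certutil.exe", "certutil.exe -urlcache -split -f http://example.com/a.txt a.txt"),
    ProcessEvent("ws-03", "powershell.exe", "powershell.exe -File backup.ps1"),
]
hits = run_hunts(events)
for tid, matched in hits.items():
    print(tid, [e.host for e in matched])
```

Keying the hunts by technique ID keeps coverage traceable: you can see at a glance which ATT&CK techniques you have hunted for and which still lack a check.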

Unstructured threat hunting. The unstructured approach is anchored to a particular trigger. The organization begins by searching for indicators of compromise (IoCs). An IoC is a specific, identifiable artifact left behind as a byproduct of an attacker's activity, such as a suspicious file or script, an alteration to a host's file system or registry, or a known malicious IP address appearing in log files. Once an IoC turns up, hunters examine the activity immediately before and after it was generated.
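
A minimal sketch of the unstructured approach, assuming log records that have already been parsed into tuples: it flags any record whose source IP appears on a list of known-bad addresses and gathers the surrounding activity on the same host. The record layout, the `hunt_iocs` function and the five-minute window are illustrative assumptions; the IP addresses come from reserved documentation ranges.

```python
from datetime import datetime, timedelta

# A hypothetical, already-parsed log record: (timestamp, host, source IP, message)
LogRecord = tuple[datetime, str, str, str]


def hunt_iocs(
    records: list[LogRecord],
    bad_ips: set[str],
    window: timedelta = timedelta(minutes=5),
) -> list[tuple[LogRecord, list[LogRecord]]]:
    """Find records whose source IP is a known IoC and pair each one with
    every record from the same host within +/- `window` of it."""
    results = []
    for record in records:
        ts, host, ip, _ = record
        if ip in bad_ips:
            # Context: what happened on this host just before and after the IoC
            context = [r for r in records if r[1] == host and abs(r[0] - ts) <= window]
            results.append((record, context))
    return results


records = [
    (datetime(2024, 1, 1, 10, 0), "web-01", "198.51.100.7", "GET /index.html"),
    (datetime(2024, 1, 1, 10, 3), "web-01", "203.0.113.50", "POST /login"),
    (datetime(2024, 1, 1, 10, 6), "web-01", "198.51.100.7", "GET /admin"),
    (datetime(2024, 1, 1, 10, 30), "web-01", "198.51.100.7", "GET /logout"),
    (datetime(2024, 1, 1, 10, 4), "db-01", "198.51.100.9", "SELECT 1"),
]
hits = hunt_iocs(records, bad_ips={"203.0.113.50"})
for hit, context in hits:
    print("IoC hit:", hit[3])
    for r in context:
        print("  context:", r[0].time(), r[3])
```

Here the 10:30 request and the activity on the other host fall outside the window, so the analyst sees only the requests surrounding the suspicious login.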

Situational threat hunting. This refers to applying increased scrutiny to the resources at highest risk. For example, if a given application or business process is assessed to be of higher risk, engineers using this approach will focus on those riskiest assets. Risk can also depend on context. For example, an organization that takes a strong stand on a political issue might face greater risks from hacktivists, and a critical infrastructure provider might be at more or less risk depending on geopolitical events.
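
Situational hunting is largely a prioritization exercise. The sketch below, a simplified illustration rather than any standard scoring model, ranks assets by a hypothetical criticality-times-exposure score and boosts assets whose tags match the current threat context, such as heightened hacktivist interest. The `Asset` fields, the 1-to-5 scales and the `boost` factor are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    criticality: int  # hypothetical scale: 1 (low) to 5 (business-critical)
    exposure: int     # hypothetical scale: 1 (internal only) to 5 (internet-facing)
    tags: frozenset[str] = frozenset()


def prioritize(assets: list[Asset], context_tags: frozenset[str] = frozenset(),
               boost: float = 2.0) -> list[Asset]:
    """Rank assets by criticality x exposure, multiplying the score by `boost`
    when an asset's tags overlap the current threat context."""
    def score(asset: Asset) -> float:
        base = asset.criticality * asset.exposure
        return base * boost if asset.tags & context_tags else base
    return sorted(assets, key=score, reverse=True)


assets = [
    Asset("hr-portal", criticality=3, exposure=2),
    Asset("payments-api", criticality=5, exposure=4),
    Asset("public-website", criticality=3, exposure=5, tags=frozenset({"public-brand"})),
]
baseline = prioritize(assets)
# Hypothetical context: the organization has just taken a public stance on a political issue
heightened = prioritize(assets, context_tags=frozenset({"public-brand"}))
print([a.name for a in baseline])    # ['payments-api', 'public-website', 'hr-portal']
print([a.name for a in heightened])  # ['public-website', 'payments-api', 'hr-portal']
```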

The threat hunting steps

Now, consider what the threat hunting process might look like.

There's no single authoritative process. Guidance you might encounter won't always align on how many steps there are, what those steps are, the order to conduct them in and so on. The steps will be very much at your discretion based on what makes sense for your team and your business.

For this discussion, let's look at the following basic steps to get an idea of what's involved:

- Hypothesize. Start by developing a hypothesis of how attackers could gain access to resources. Your hypothesis might be informed by attacker TTPs or the areas of the organization you suspect contain the most weaknesses.

- Test the hypothesis. Use logs, SIEM or log aggregators, traffic analyzers, extended detection and response (XDR) and other tools to gather signals about the underlying environment. With that information, test your hypothesis. This could be done in two phases, a research phase and the identification of a triggering event, but in practice these often happen together. For example, as you gather data about the underlying environment, you might consider, and then discount, a series of potential trigger points.

- Investigate. Next, trace the events leading up to and following the trigger point. Examine whether the available signals support the conclusion that a malicious threat actor is involved, or whether the activity was something accidental carried out by a user, an admin or a rogue process. It might also be something else entirely, such as normal behavior that appears suspicious on the surface.

- Respond. If the activity is determined to be malicious, move to remediate the threat and initiate the incident response or other governing process.
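
The four steps above can be expressed as a small loop. In this Python sketch, a hypothesis carries its own test (which selects candidate trigger events) and classifier (which stands in for investigation), and any event judged malicious is handed to a response callback. The `Hypothesis` and `hunt` names, the event fields and the naive classification rule are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Verdict(Enum):
    MALICIOUS = "malicious"
    ACCIDENTAL = "accidental"
    BENIGN = "benign"


@dataclass
class Hypothesis:
    statement: str                            # Hypothesize: what you expect to find
    test: Callable[[list[dict]], list[dict]]  # Test: select candidate trigger events
    classify: Callable[[dict], Verdict]       # Investigate: judge each trigger


def hunt(h: Hypothesis, events: list[dict], respond: Callable[[dict], None]) -> dict[int, Verdict]:
    """Run one pass of the hypothesize/test/investigate/respond loop."""
    verdicts: dict[int, Verdict] = {}
    for event in h.test(events):
        verdict = h.classify(event)
        verdicts[event["id"]] = verdict
        if verdict is Verdict.MALICIOUS:
            respond(event)  # Respond: hand off to incident response
    return verdicts


# Hypothetical example: a dormant admin account is being used to gain access.
dormant_accounts = {"old-admin"}
events = [
    {"id": 1, "user": "svc-backup", "action": "login", "src": "10.0.0.5"},
    {"id": 2, "user": "old-admin", "action": "login", "src": "203.0.113.50"},
    {"id": 3, "user": "old-admin", "action": "login", "src": "10.0.0.8"},
]
h = Hypothesis(
    statement="A dormant admin account is being used to gain access",
    test=lambda evs: [e for e in evs if e["user"] in dormant_accounts and e["action"] == "login"],
    # Naive rule for illustration: an internal source suggests an admin's mistake
    classify=lambda e: Verdict.ACCIDENTAL if e["src"].startswith("10.") else Verdict.MALICIOUS,
)
escalated: list[dict] = []
verdicts = hunt(h, events, respond=escalated.append)
print(verdicts)
```

In practice, the investigation step is usually human analysis rather than a one-line rule; the benefit of writing hunts down this way is that each hypothesis and its outcome become repeatable and auditable.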

Evaluating your readiness for threat hunting

If your organization wants to proceed with threat hunting, consider whether you are positioned to do it effectively. Keys to this evaluation include the following:

- Staffing. Threat hunting isn't easy, and it requires well-trained, deeply technical and specialized staff. Does your program include an operations arm, such as a security operations center or dedicated operations personnel? If not, you might be at a significant disadvantage in employing threat hunting productively. Those personnel also need the technical skills to accurately test a "Have we been hacked?" hypothesis. Unless your team is large, it can be difficult for individuals to specialize in particular systems, and suspicious activity might need to be traced across multiple systems, applications and platforms.

- Threat intelligence. While threat hunting and threat intelligence are not the same, they work well together. Information about attacker activity can be particularly helpful in formulating hypotheses about attacks and in your research and investigation efforts. This can come in the form of generic, wide-reaching guidance about TTPs; the Mitre ATT&CK framework is comprehensive in this regard. It might also include more targeted information from commercial or industry-specific feeds. Industry information sharing and analysis centers (ISACs), for example, often publish sector-specific threat intelligence, TTPs and IoCs. These can help you develop a hypothesis and locate potential triggers.

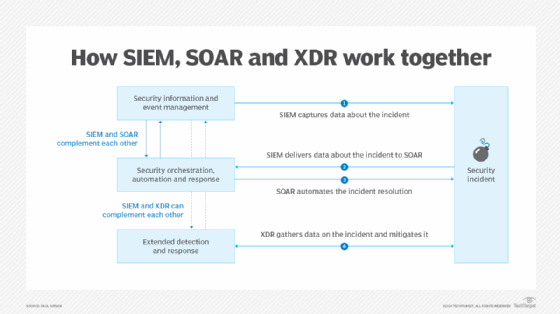

- Tooling. Tools can make threat hunting efforts more productive. Log analysis and SIEM tools are essential. Configuration management tools provide valuable information, and XDR platforms and similar tools provide information about the running state of machines under examination. Other tools that provide useful information, to a greater or lesser degree, include cloud security posture management products; vulnerability scanners; dynamic application security testing tools; infrastructure-as-code security tools, particularly those that let you query running state; and orchestration tools, such as Kubernetes. Security orchestration, automation and response (SOAR) tools can add value as well because they surface insights that might be difficult or time-consuming for engineers to glean without assistance.
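
The threat intelligence point above notes that ISACs and commercial feeds publish IoCs. Real feeds vary by provider and often use structured formats such as STIX; the minimal sketch below instead assumes a hypothetical two-column CSV feed and turns it into lookup sets that an IoC hunt could match against log data. The `load_iocs` function and the column names are assumptions.

```python
import csv
import io


def load_iocs(feed_text: str) -> dict[str, set[str]]:
    """Parse a feed with `type,value` CSV columns into sets keyed by indicator
    type, normalizing case and skipping rows with a missing type or value."""
    iocs: dict[str, set[str]] = {}
    for row in csv.DictReader(io.StringIO(feed_text)):
        kind = (row.get("type") or "").strip().lower()
        value = (row.get("value") or "").strip().lower()
        if kind and value:
            iocs.setdefault(kind, set()).add(value)
    return iocs


feed = """type,value
ip,203.0.113.50
domain,Malicious.example
ip,198.51.100.23
sha256,
"""
iocs = load_iocs(feed)
print(sorted(iocs["ip"]))  # ['198.51.100.23', '203.0.113.50']
```

Normalizing indicators into sets up front makes each lookup during a hunt a constant-time membership check, which matters when matching against large log volumes.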

As always, examine the benefits that the approach provides, your team's ability to execute and the organizational commitment to provide the ingredients necessary for success.

Ed Moyle is a technical writer with more than 25 years of experience in information security. He is currently the CISO at Drake Software.