
How AI is making phishing attacks more dangerous

Cybercriminals are using AI chatbots, such as ChatGPT, to launch sophisticated business email compromise attacks. Cybersecurity practitioners must fight fire with fire.

As AI's popularity grows and its usability expands, thanks to generative AI's continuous improvement model, it is also becoming more embedded in the threat actor's arsenal.

To mitigate increasingly sophisticated AI phishing attacks, cybersecurity practitioners must both understand how cybercriminals are using the technology and embrace AI and machine learning for defensive purposes.

What are AI-powered phishing attacks?

Phishing attacks have long been the bane of security's existence. These attacks that prey on human nature have evolved from the days of Nigerian princes and rich relatives looking for beneficiaries to increasingly sophisticated attacks that impersonate Amazon, the Postal Service, friends, colleagues and business partners, among others.

Often evoking fear, panic and curiosity, phishing scams use social engineering to get innocent users to click malicious links, download malware-laden files, and share passwords and business, financial and personal data.

While phishing attacks have always been difficult for users and security teams to detect and avoid, AI has increased their effectiveness and impact by making them appear more legitimate and harder to spot.

Following are examples of attacks made worse by AI and generative AI (GenAI).

General phishing attacks

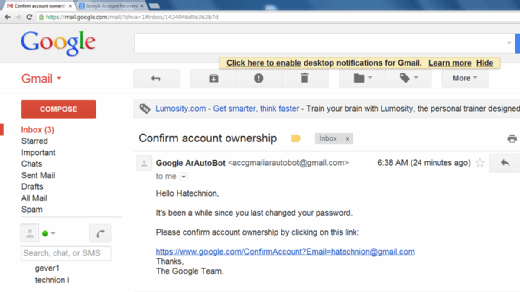

Traditional phishing attacks -- via emails, direct messages and spurious websites -- often contain spelling and grammatical errors, formatting issues, and incorrect names and return email addresses. AI has resolved many of these issues, removing mistakes and using more professional writing styles.

Phishing attacks are also becoming more timely. For example, large language models (LLMs) can absorb real-time information from news outlets, corporate websites and other sources to incorporate of-the-moment details into phishing emails. These details make the messages more believable and generate a sense of urgency that compels victims to act.

AI chatbots are also being used to create and spread business email compromise, whaling and other targeted phishing campaigns at a much faster rate than human attackers ever could on their own, increasing the scale and surface area of such attacks.

Spear phishing

Spear phishing attacks use social engineering to target specific individuals with information gleaned from social media sites, data breaches and other sources. AI-generated spear phishing emails are often even more convincing and more likely to trick recipients.

At Black Hat USA 2021, for example, Singapore's Government Technology Agency presented the results of an experiment in which the security team sent simulated spear phishing emails to internal users. Some were human-crafted, and others were generated by OpenAI's GPT-3 technology. More people clicked the links in the AI-generated phishing emails than in the human-written ones -- by a significant margin.

Fast-forward to today, when LLM technology is more widely available and increasingly sophisticated. GenAI can -- in a matter of seconds -- collect and curate sensitive information about an organization or individual and use it to craft highly targeted and convincing messages and even deepfake phone calls and videos.

Vishing and deepfakes

Voice phishing (vishing) uses phone calls, voice messages and voicemails to trick people into sharing sensitive information. Like other types of phishing, vishing attacks try to create a sense of urgency, perhaps by referencing a major deadline or a critical customer issue.

In a traditional vishing scam, the cybercriminal collects information on a target and makes a call or leaves a message pretending to be a trusted contact. For example, a massive ransomware attack on MGM Resorts reportedly began when an attacker called the IT service desk and impersonated an MGM employee. The malicious hacker was able to trick the IT team into resetting the employee's password, giving the attackers network access.

AI is changing vishing attacks in the following ways:

- As previously discussed, AI technology can make the research stage more efficient and effective for attackers. An LLM such as GPT-3 can collect information for social engineering purposes from across the web, nearly instantly.

- Attackers can use GenAI to clone the voice of a trusted contact and create deepfake audio. Imagine, for example, an employee receives a voice message from someone who sounds exactly like the CFO, requesting an urgent bank transfer.

How to prevent and detect AI phishing attacks

AI and GenAI are already making life more difficult for cybersecurity practitioners and end users alike and will continue to do so.

To prevent and detect AI phishing attacks, it is critical to follow these best practices:

- Conduct security awareness training. Cover traditional and new phishing attack techniques during security awareness trainings to ensure employees know how to identify phishing scams.

- Know the warning signs. Check for classic phishing scam errors, including typos, incorrect email addresses and other mistakes, as well as suspicious emails that create a sense of urgency or that could be from an impersonator.

- Don't click links or download attachments. Scrutinize links and downloads from all senders, even trusted sources. Don't copy and paste links into browsers, either.

- Don't share data. Question any message that asks for personal, business or financial data.

- Require MFA and enforce password security best practices. Never share passwords, and follow password hygiene guidelines.

- Use email security and antiphishing tools. Email security gateways, email filters, antivirus and antimalware, firewalls, and web browser tools and extensions can catch many -- but not all -- phishing attempts. Use a layered security strategy.

- Adopt email security protocols. Email security protocols, such as SSL/TLS for HTTPS, SMTP Secure (SMTPS) and Secure/Multipurpose Internet Mail Extensions (S/MIME), boost email security. Email authentication protocols, including Sender Policy Framework (SPF), DomainKeys Identified Mail (DKIM) and Domain-based Message Authentication, Reporting and Conformance (DMARC), help ensure email authenticity.
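As a rough illustration of the email authentication item above, the following Python sketch parses the text of a DMARC policy record and reports whether it asks receiving servers to quarantine or reject messages that fail authentication. The function names and the sample record are hypothetical, and the sketch assumes the record text has already been retrieved from DNS; it is not a full DMARC validator.

```python
def parse_dmarc_record(txt: str) -> dict[str, str]:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags: dict[str, str] = {}
    for part in txt.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue  # skip empty or malformed fragments
        key, value = part.split("=", 1)
        tags[key.strip().lower()] = value.strip()
    return tags


def is_enforcing(txt: str) -> bool:
    """Return True when the policy is 'quarantine' or 'reject'."""
    tags = parse_dmarc_record(txt)
    if tags.get("v", "").upper() != "DMARC1":
        return False  # not a DMARC record at all
    return tags.get("p", "none").lower() in {"quarantine", "reject"}


# Hypothetical record for illustration; example.com is a reserved domain.
record = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
print(parse_dmarc_record(record))
print(is_enforcing(record))
```

A policy of `p=none` only monitors failures, so a check like this could help a security team spot domains whose DMARC records exist but are not yet enforced.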

Finally, use AI to detect AI threats. If it takes one to know one, then AI tools are uniquely suited to detect AI-powered phishing attempts. Note, however, that while using an AI model to monitor incoming messages could go a long way toward preventing AI phishing attacks, the cost of doing so could prove prohibitively high. In the future, models will likely become more efficient and cost-effective as they become increasingly curated and customized -- built on smaller data sets that focus on specific industries, demographics, locations and so on.

AI can improve phishing prevention and detection in the following ways:

- End-user training. GenAI models can make security awareness training more customized, efficient and effective. For instance, an AI chatbot could automatically adapt a training curriculum and phishing simulations on a user-by-user basis to address each individual's weak spots, based on historical or real-time performance data.

The technology could also identify the learning modality that best serves each employee -- in person, audio, interactive, video, etc. -- and present the content accordingly. By maximizing security awareness training's effectiveness at a granular level, GenAI could significantly reduce overall cyber-risk.

- Context-based defenses. AI and machine learning tools can quickly collect and process a vast array of threat intelligence to predict and prevent future attacks and detect active threats. For example, AI might analyze historical and ongoing incidents across a variety of organizations, types of cyberattacks, geographic regions targeted, organizational sectors targeted, departments targeted and types of employees targeted.

Using this information, GenAI could identify which types of attacks a given organization is most likely to experience and then automatically train security tools accordingly. For instance, AI might flag particular malware signatures for an antivirus engine, update a firewall's blocklist, or trigger mandatory secondary or tertiary authentication methods for high-risk access attempts.
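The idea of triggering extra authentication for high-risk access attempts can be sketched as a simple scoring rule. The Python example below is only a hand-written stand-in for what an AI-driven tool might decide: the `AccessAttempt` fields, the weights and the thresholds are all illustrative assumptions, not values taken from any product or from this article.

```python
from dataclasses import dataclass


@dataclass
class AccessAttempt:
    # Hypothetical risk signals; a real system would derive these from logs
    # and threat intelligence.
    new_device: bool
    unusual_location: bool
    targets_sensitive_system: bool


def risk_score(attempt: AccessAttempt) -> int:
    """Add up illustrative weights for each risk signal that is present."""
    score = 0
    if attempt.new_device:
        score += 2
    if attempt.unusual_location:
        score += 2
    if attempt.targets_sensitive_system:
        score += 3
    return score


def required_factors(attempt: AccessAttempt) -> int:
    """Map the risk score to the number of authentication factors to demand."""
    score = risk_score(attempt)
    if score >= 5:
        return 3  # tertiary authentication for the riskiest attempts
    if score >= 2:
        return 2  # secondary authentication for elevated risk
    return 1      # primary factor only for low-risk attempts


attempt = AccessAttempt(new_device=True, unusual_location=False,
                        targets_sensitive_system=True)
print(required_factors(attempt))
```

In practice, the signals and thresholds would presumably be learned or tuned from incident data rather than hard-coded as they are here.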

Sharon Shea is executive editor of TechTarget Security.

Ashwin Krishnan is host and producer of StandOutIn90Sec, based in California, where he interviews tech leaders, employees and event speakers in short, high-impact conversations.