Does AI-powered malware exist in the wild? Not yet

The idea of AI launching malware attacks may evoke images of movielike, futuristic technology, but it may not be too far from reality. Read up on the future of AI-powered malware.

AI is making its mark on the cybersecurity world.

For defenders, AI can help security teams detect and mitigate threats more quickly. For attackers, weaponized AI can assist with a number of attacks, such as deepfakes, data poisoning and reverse-engineering.

But, lately, it's AI-powered malware that has come into the spotlight -- and had its existence questioned.

AI-enabled attacks vs. AI-powered malware

AI-enabled attacks occur when a threat actor uses AI to assist in an attack. Deepfake technology, a type of AI used to create false but convincing images, audio and videos, may be used, for example, during social engineering attacks. In these situations, AI is a tool to conduct an attack, not create it.

AI-powered malware, on the other hand, is trained via machine learning to be slyer, faster and more effective than traditional malware. Unlike malware that targets a large number of people with the intention of successfully attacking a small percentage of them, AI-powered malware is trained to think for itself, update its actions based on the scenario, and specifically target its victims and their systems.

IBM researchers presented the proof-of-concept AI-powered malware DeepLocker at the 2018 Black Hat Conference to demonstrate this new breed of threat. In the demonstration, WannaCry ransomware was hidden in a video conferencing application and remained dormant until AI facial recognition software identified a specific face.

Does AI-powered malware exist in the wild?

The quick answer is no. AI-powered malware has yet to be seen in the wild -- but don't rule out the possibility.

"Nobody has been hit with or successfully uncovered a truly AI-powered piece of offense," said Justin Fier, vice president of tactical risk and response at Darktrace. "It doesn't mean it's not out there; we just haven't seen it yet."

Pieter Arntz, malware analyst at Malwarebytes, agreed that AI-powered malware has yet to be seen. "To my knowledge, so far, AI is only used at scale in malware circles to improve the effectiveness of existing malware campaigns," he said in an email to SearchSecurity. He predicted that cybercriminals will continue to use AI to enhance operations, such as targeted spam, deepfakes and social engineering scams, rather than rely on AI-powered malware.

Potential use cases for AI-powered malware

Just because AI-powered malware hasn't been seen in the wild, however, doesn't mean it won't be in the future -- especially as enterprise defenses grow stronger.

"Most criminals will not spend time to invent a new or even improve an existing system when it is already working so well for them," Arntz said. "Unless they can get their hands on something that works better for them, maybe with a little tweaking, they will stick to what works."

But, as defenses get stronger, cybercriminals may have to take that step and build new attacks. Take ransomware, for example. Ransomware has dominated the threat landscape for years and been so successful that attackers haven't needed to create or use AI-powered malware. Defenders are slowly catching up and bolstering their security, however, as demonstrated by recent gradual declines in ransomware attacks.

While Fier said many reasons contribute to the decline, "you have to assume we're getting better at doing our jobs." But this means attackers may be pushed to make the investment in AI-powered malware if they haven't already, he added.

Beyond potentially strengthening ransomware attacks, Arntz described the following three use cases for AI-powered malware:

- computer worms capable of adapting to the next system they are trying to infect;

- polymorphic malware that changes its code to avoid detection; and

- malware that adapts social engineering attacks based on data it gathers, such as data scraped from social media sites.

He noted, however, that some steps need to be taken before attackers can practically implement AI-powered malware. For now, Arntz said, "it looks like the cybersecurity industry is making better use of AI than their malicious opponents."

How to prepare for attacks involving AI

Ninety-six percent of respondents to a 2021 MIT Technology Review Insights survey, in association with Darktrace, reported they've started to prepare for AI attacks.

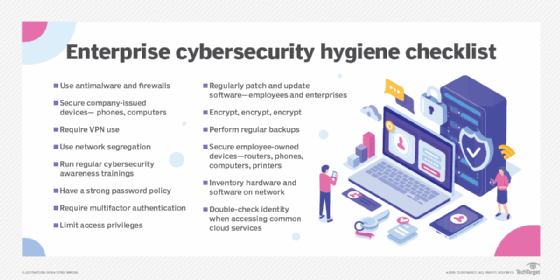

Jon France, CISO at (ISC)2, said the best way to prepare for AI-enabled attacks -- as well as the potential future threat of AI-powered malware -- is to follow basic cybersecurity hygiene best practices. In addition, he said, defenders should use AI to their advantage.

"It's foolish to think attackers wouldn't use AI for their gain as much as defenders," France said. Security teams can use AI to assist in threat hunting, malware discovery and phishing detection practices, for example. AI is also useful for containing threats through automated responses. Programmed responses via AI can provide the additional benefit of helping organizations manage burnout and the cybersecurity skills gap.

Arntz suggested organizations use tactics, techniques and procedures (TTPs) to detect traditional malware because they also help detect AI-driven malware and, down the line, AI-powered malware. TTPs, the strategies used by threat actors to develop and conduct attacks, have long been tracked by security teams to detect malware based on behaviors and patterns, rather than having to keep track of every new variant. As the prevalence of cyber attacks increases and the potential threat of AI-powered malware continues, this two-in-one strategy offers additional protection in the present and future.
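To make the contrast between tracking variants and tracking behavior concrete, the following is a minimal Python sketch, not a description of any real product or of the method the experts quoted here use. The event names, the behavioral rule and the sample payloads are all hypothetical and invented for illustration. It compares a hash-based signature check, which misses a slightly altered file, with a check that looks for an ordered sequence of behaviors.

```python
import hashlib
from typing import Sequence

# Hypothetical signature database: SHA-256 hashes of known-bad files.
KNOWN_BAD_HASHES = {hashlib.sha256(b"variant-1 payload").hexdigest()}

# Hypothetical behavioral rule: an ordered sequence of actions
# that this sketch treats as typical of ransomware.
RANSOMWARE_TTP = ["enumerate_files", "delete_backups", "encrypt_files"]


def matches_signature(file_bytes: bytes) -> bool:
    """Return True only if the exact file contents were seen before."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_HASHES


def matches_ttp(observed_events: Sequence[str], pattern: Sequence[str]) -> bool:
    """Return True if `pattern` occurs, in order, within the observed events.

    Unrelated events may appear in between; only the ordering matters.
    """
    remaining = iter(observed_events)
    # `step in remaining` advances the iterator until it finds `step`,
    # so each later step must appear after the earlier ones.
    return all(step in remaining for step in pattern)


# A new variant: the file changed, so its hash no longer matches.
variant_2 = b"variant-2 payload"

# Hypothetical events logged while the new variant ran.
events = [
    "open_document",
    "enumerate_files",
    "delete_backups",
    "contact_server",
    "encrypt_files",
]

print(matches_signature(variant_2))
print(matches_ttp(events, RANSOMWARE_TTP))
```

In this sketch, the signature check fails on the altered file while the behavioral check still fires, because the variant has to perform the same actions to achieve its goal. Real detection tooling is far more involved, but the same reasoning is why behavior-based detection is expected to carry over to malware that rewrites or adapts itself.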

"Defending and attacking has always been a cat-and-mouse game," France said.