Veracode highlights security risks of GenAI coding tools

At Black Hat USA 2024, Veracode's Chris Wysopal warned of the downstream effects of generative AI tools that help developers write code faster.

LAS VEGAS -- During a Black Hat USA 2024 session Wednesday, Veracode urged developers to place less trust in AI tools and focus more on thorough application security testing.

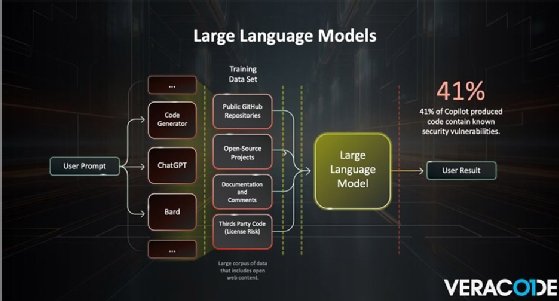

Chris Wysopal, CTO and co-founder at Veracode, led a session titled "From HAL to HALT: Thwarting Skynet's Siblings in the GenAI Coding Era." Wysopal discussed how developers are increasingly using large language models (LLMs) such as those behind Microsoft Copilot and ChatGPT to generate code. However, he warned that the practice presents many challenges, including code velocity, data poisoning and overconfidence in AI's ability to produce secure code.

Wysopal spoke with TechTarget Editorial prior to the session and stressed that an influx of code is being produced with the help of generative AI (GenAI) tools, often without sufficient security testing. Still, he said he believes LLMs are beneficial for developers, and he discussed how AI can be used to fix vulnerabilities and security issues discovered in code.

"There's going to be more code produced by LLM, and developers are going to trust it more. We need to trust AI less, and make sure we're doing the proper amount of security testing," he said.

Wysopal's session highlighted two Veracode studies that examined how LLM-generated code is changing the landscape. In the first study, researchers asked an LLM to write specific software, then conducted a secure code review of the output to count how many vulnerabilities it contained.

In the second study, researchers searched for GitHub code repositories with comments reading "generated by Copilot" or naming some other LLM. Wysopal cautioned that the GitHub study isn't 100% reliable because people can make mistakes in their comments or may not note that code was LLM-generated at all.

In the end, the studies determined that AI-generated code is comparable to human-generated code in terms of security flaws. For example, 41% of Copilot-produced code contained known vulnerabilities.

"From the snippets of code they found, they looked at those from a security perspective and universally across both studies you got these numbers that 30% to 40% of the generated code had vulnerabilities. It ends up being quite similar to what human-generated code has," Wysopal said.

Those studies echoed concerns from other security vendors about GenAI coding assistants. For example, Snyk researchers earlier this year found that tools like GitHub Copilot frequently replicated existing vulnerabilities and security issues in users' codebases.

Enterprises already have a difficult time keeping up with the influx of vulnerabilities, particularly as attackers increasingly exploit zero-day flaws. To make matters worse, threat intelligence vendor VulnCheck found that 93% of vulnerabilities published since February had not been analyzed by the National Vulnerability Database (NVD), following disruptions to that important resource, which helps enterprises prioritize patching.

While LLM-generated code contains a similar proportion of flaws to human-generated code, GenAI tools present new problems as well. Wysopal is concerned about coding velocity because the average developer is becoming significantly more productive using LLMs. He stressed that this increased output will strain security teams and their ability to fix flaws.

"We see security bugs just build up. They're building up right now and we see this happening with the normal development processes, and we see this getting worse. One of the only solutions is using AI to fix all the code that has vulnerabilities in it," he said.

Another potential risk is poisoned data sets. Wysopal said he is concerned that if open source projects are used as training data, threat actors could create fake projects containing insecure code to trick the LLMs. If LLMs are trained on insecure code, more vulnerabilities could end up in the wild for attackers to exploit, he warned.

While the threat hasn't manifested yet, Wysopal stressed that it would be difficult for LLMs to determine if someone is intentionally writing vulnerable software.

Another potential risk is an increase in new AI-generated attacks, which Wysopal said must be combated with new AI defenses. During RSA Conference 2024, Tim Mackey, head of software supply chain risk strategy at Synopsys, told TechTarget Editorial that, for now, AI tools are helping developers more than attackers.

Wysopal highlighted another challenge of GenAI code, which he referred to as a recursive learning problem.

"The LLMs start to learn by the output of other LLMs. If we switch to a world where a majority of code is written by LLMs, we're going to have that code starting to be learned by LLMs. It might make sense to mark code as generated by LLMs to help with this scenario," he said. "The way software is built fundamentally changes."

Arielle Waldman is a Boston-based reporter covering enterprise security news.