Defective CrowdStrike update triggers mass IT outage

A faulty update for CrowdStrike's Falcon platform crashed customers' Windows systems, causing outages at airlines, government agencies and other organizations across the globe.

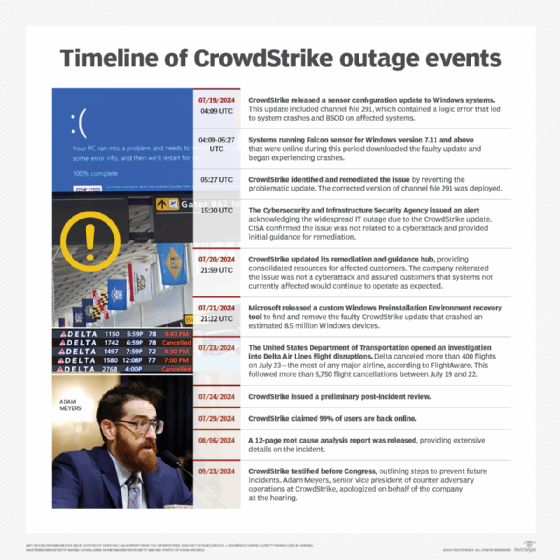

A massive IT outage that affected Windows systems across the globe was caused by a defective update for CrowdStrike's Falcon threat detection platform.

Reports of widespread outages emerged Friday morning as several major airlines, media companies, government agencies and other organizations experienced the blue screen of death (BSOD) across their Windows systems. While the Windows crashes initially stoked concerns of a potential cyberattack, security experts quickly determined the culprit was a botched update from CrowdStrike that caused a BSOD error in Windows systems running Falcon agents.

CrowdStrike CEO George Kurtz later posted a statement to X, formerly Twitter, confirming the update caused the Windows crashes. "CrowdStrike is actively working with customers impacted by a defect found in a single content update for Windows hosts. Mac and Linux hosts are not impacted," Kurtz wrote in the post. "This is not a security incident or cyberattack. The issue has been identified, isolated and a fix has been deployed."

Kurtz referred users to CrowdStrike's support portal for more information and urged customers to communicate with company representatives through official channels. "Our team is fully mobilized to ensure the security and stability of CrowdStrike customers," he wrote in the post.

Kurtz published a follow-up statement later Friday morning in which he apologized for the disruptions caused by the update. He also highlighted a blog post that contained information about the defective update and workarounds for individual systems as well as cloud and virtual instances.

"We understand the gravity of the situation and are deeply sorry for the inconvenience and disruption. We are working with all impacted customers to ensure that systems are back up and they can deliver the services their customers are counting on," Kurtz wrote on X.

Resolving the BSOD error is apparently complicated. Multiple cybersecurity vendors have said CrowdStrike's workaround requires users to reboot impacted Windows systems in safe mode, remove the defective file and then restart the system normally. However, this workaround must be applied manually to each machine, which could make recovery extremely complex and time-consuming for organizations.

John Hammond, principal security researcher at Huntress, told TechTarget Editorial that CrowdStrike's initial fix prevented the defective update from being delivered to additional endpoint devices.

"Unfortunately, this does not help the machines that are already affected and stuck in a boot loop," he said. "The mitigation and recovery workaround that is suggested is unfortunately a very manual process. It needs to be done at the physical location of the computer, by hand, for every computer impacted. It will be a very long and very slow recovery process."

Hammond also said some in the infosec community have suggested other fixes, such as renaming the CrowdStrike driver folder structure in Windows systems. However, he said such fixes still need to be applied manually for each system, as there are no automatic updates or group policy deployments that can resolve the issue when devices are stuck in a boot loop.

Gabe Knuth, an analyst at TechTarget's Enterprise Strategy Group, noted additional hurdles for the recovery process, which involves manually deleting the defective file in safe mode. "This requires local admin rights, which, unless the admin is in front of the machine or the user is an administrator, would have to be told to the remote end user (then changed at a later time, hopefully)," Knuth wrote in an email. "Also, if the disk in the machine has been encrypted with BitLocker, that recovery key will need to be available. And some customers have said the server that hosts the recovery keys had also crashed, further complicating the issue."

In a blog post, Forrester Research analysts also noted challenges presented by BitLocker and recommended that affected organizations back up hard disk encryption keys whether they're using BitLocker or a third-party provider. "Some administrators have also stated that they've been unable to gain access to BitLocker hard-drive encryption keys to perform remediation steps," the blog post read.

Microsoft posted guidance on X via the company's Microsoft 365 Status account on how to restore Windows 365 cloud systems to a known good state from before Friday's update. Additionally, Microsoft's Azure cloud status site said the company had received reports from some affected customers whose virtual machines recovered after multiple restarts. "We've received feedback from customers that several reboots (as many as 15 have been reported) may be required, but overall feedback is that reboots are an effective troubleshooting step at this stage," the status update read.

AWS published guidance for customers running affected Windows systems on the cloud giant's platform. The company said it took steps to mitigate the issue "for as many Windows instances, Windows WorkSpaces and Appstream 2.0 Applications as possible" but urged other customers to follow its recommended recovery processes. One option involves simply rebooting the instance, which AWS said in some cases might cause the Falcon agent to revert to an earlier, unaffected version.

It's unclear how the defective Falcon update was issued. However, Brody Nisbet, director of CrowdStrike Overwatch, posted on X that there was a "faulty channel file, so not quite an update."

TechTarget Editorial contacted CrowdStrike for additional comment.

UPDATE: A company spokesperson responded to TechTarget Editorial with a blog post published Friday evening that contained additional technical details about the defective update. According to the blog post, CrowdStrike released a sensor configuration update just after midnight ET on July 19. "Sensor configuration updates are an ongoing part of the protection mechanisms of the Falcon platform. This configuration update triggered a logic error resulting in a system crash and blue screen (BSOD) on impacted systems," the company said.

CrowdStrike's blog post also explained that the sensor configuration files issued to Falcon sensors are referred to as "channel files" in the company's documentation. "Updates to Channel Files are a normal part of the sensor's operation and occur several times a day in response to novel tactics, techniques, and procedures discovered by CrowdStrike," the blog post said. "This is not a new process; the architecture has been in place since Falcon's inception."

CrowdStrike said that in this case, the defective channel file was released in response to newly observed malicious activity involving commonly used command-and-control frameworks. However, the channel file contained a logic error that triggered the Windows system crashes. CrowdStrike said it is conducting a thorough root cause analysis to determine how the logic error occurred and will release additional information as its investigation continues.

Maxine Holt, Omdia's senior director of cybersecurity, said the incident might have serious and long-term consequences for CrowdStrike, one of the biggest and best-known companies in the infosec industry.

"This is very bad for CrowdStrike from a business perspective. The best outcome for them is that it fades into memory. But given that CrowdStrike states that its 'customers benefit from superior protection, better performance, reduced complexity and immediate time-to-value,' the opposite is clearly true today. And customer performance, for some, is at zero," Holt said. "The events of today are highly likely to follow CrowdStrike for some time and could do even more damage to the business. Furthermore, it will encourage plenty of CISOs and CIOs to re-evaluate their approach to tool consolidation and vendor selection."

Knuth said the defective update is a bad look for CrowdStrike, but the outages raise larger questions for the industry regarding security products that have access to the operating system's kernel.

"The reality is that these types of platforms operate at extremely low levels in the operating system, and a problem like this -- a corrupt driver, in this case -- can cause serious problems if something goes awry," he said. "We've built up automation and software-defined services all around us that are amazing until they're not. Yes, the scope of this was immense, along with the impact on businesses. But incidents like this are always a possibility."

Knuth added that the long-term reputational damage for CrowdStrike will depend, in part, on how the cybersecurity company handles the response effort going forward. "From my perspective, they took accountability and are doing whatever it takes to get things resolved. That's a good first step," he said.

Dave Gruber, principal analyst at Enterprise Strategy Group, said CrowdStrike's defective update will have repercussions for other cybersecurity vendors. IT leaders within customer organizations may play a stronger role in the purchasing process in the future, which could delay buying decisions, he said.

"This event will alter how IT organizations think about the purchase and deployment of security solutions moving forward. With a high-profile event like this that interrupts much of the world's operating infrastructure, IT orgs will be called on to put risk mitigation plans in place to protect against possible future service disruptions," Gruber said. "This will directly impact security solution providers who will now need to put buyers at ease in understanding how to mitigate this potential risk when they purchase security solutions."

This article was updated on 7/20/2024.

Senior news writer Alex Culafi and news writer Arielle Waldman contributed to this article.

Rob Wright is a longtime reporter and senior news director for TechTarget Editorial's security team. He drives breaking infosec news and trends coverage. Have a tip? Email him.