kras99 - stock.adobe.com

Microsoft launches AI-powered Security Copilot

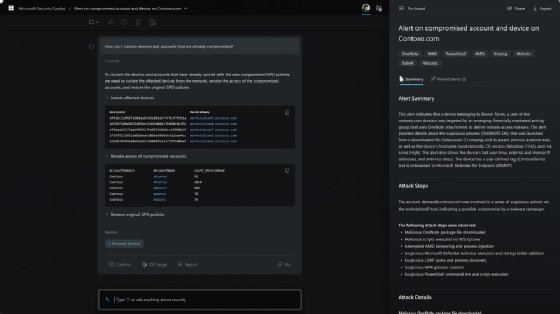

Microsoft Security Copilot is an AI assistant for infosec professionals that combines OpenAI's GPT-4 technology with the software giant's own cybersecurity-trained model.

Microsoft expanded its AI-powered offerings Tuesday with the launch of Security Copilot, a new digital assistant powered by OpenAI's GPT-4.

Microsoft Security Copilot is the latest in a series of AI assistants designed for specific products and tasks. The software giant previously unveiled Microsoft 365 Copilot for Word, Teams and other Office applications as well as Microsoft Dynamics Copilot for CRM and CX products.

Security Copilot is similar to the previous offerings in that it uses large language model technology from OpenAI to assist security teams in detecting, identifying and prioritizing threats. The launch marks the latest development in Microsoft's partnership with OpenAI, which was bolstered earlier this year with a $10 billion investment in the AI startup.

The new tool also features Microsoft's own cybersecurity-specific training model, which includes the company's global threat intelligence as well as 65 trillion daily signals. Based on prompts and queries from infosec professionals, Security Copilot can provide guidance and context about potential threats in minutes instead of hours or days, Microsoft said.

"Our cyber-trained model adds a learning system to create and tune new skills," said Vasu Jakkal, Microsoft's corporate vice president of security, compliance, identity and management, in a blog post. "Security Copilot then can help catch what other approaches might miss and augment an analyst's work. In a typical incident, this boost translates into gains in the quality of detection, speed of response and ability to strengthen security posture."

Jakkal emphasized that Security Copilot was not designed to replace human infosec professionals but instead to provide them with more speed and scale for everything from incident response investigations to vulnerability scanning and patching. She also touted the AI assistant's ability to learn on the job and increase its effectiveness.

"Security Copilot doesn't always get everything right. AI-generated content can contain mistakes," Jakkal said. "But Security Copilot is a closed-loop learning system, which means it's continually learning from users and giving them the opportunity to give explicit feedback with the feedback feature that is built directly into the tool."

Microsoft also addressed potential privacy concerns for Security Copilot, which runs on Azure hyperscale cloud infrastructure. While the AI assistant will have access to sensitive information about customers' security postures, Jakkal said such data will be protected and that organizations will be able to determine how they want to use and monetize it.

"We've built Security Copilot with security teams in mind -- your data is always your data and stays within your control," she said. "It is not used to train the foundation AI models, and in fact, it is protected by the most comprehensive enterprise compliance and security controls."

Jon Oltsik, an analyst at Enterprise Strategy Group, said that while there have been cognitive computing and AI products for security in the past, such as IBM Watson for Cyber Security, most of them have been geared toward threat detection. Microsoft Security Copilot is different, he said, because it's focused more on security operations within a customer organization, and it's integrated with other Microsoft products.

"It can act as an automated security analyst in a Microsoft environment, which is key," Oltsik said. "Analysts already use ChatGPT, but this is integrated into your environment. So it's giving you more specific results about your tooling, your vulnerabilities and other aspects."

The big question, Oltsik said, is how Security Copilot will function when organizations add telemetry data from other technology providers. But for now, he said the AI assistant looks to strengthen Microsoft's position as a leading cybersecurity player, which should be an "existential threat" to standalone security vendors.

Eric Parizo, managing principal analyst at Omdia, credited Microsoft for being one of the first major vendors to launch a generative AI offering as part of its cybersecurity product portfolio.

"Much remains to be seen, but it has strong potential to help security operations center teams with one of their biggest challenges, namely finding the 'needle in the haystack' as it relates to threat detection," he said. "Even more powerful is the potential to reduce the amount of time needed not only to bring new security analysts up to speed, but also in completing hard-to-automate tasks like building custom response orchestration templates."

Parizo also gave credit to Microsoft for emphasizing AI data protection and privacy with Security Copilot and ensuring that customer data is not used to train foundational AI models. "As more generative AI solutions emerge within cybersecurity, this may prove to be an important differentiator going forward," he said.

Rob Wright is a longtime technology reporter who lives in the Boston area.