Zero-trust network policies should reflect varied threats

Role-based access systems create enormous pools of responsibility for administrators. Explore how to eliminate these insecure pools of trust with zero-trust network policies.

The zero-trust model flips the outdated perimeter-based security model on its head, but successful implementation isn't always easy. Zero trust requires security be embedded into the OS. To do this, organizations must understand how zero-trust network policies, technologies and concepts -- whether new or existing -- can be used to establish trust among actors in the network.

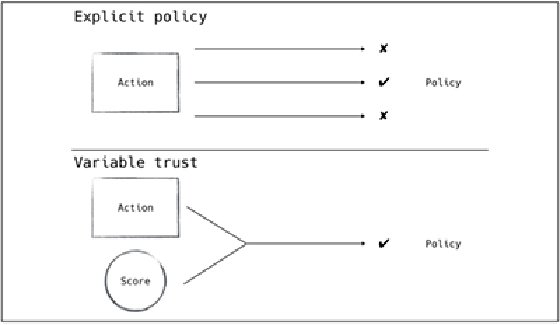

In their book Zero Trust Networks, published by O'Reilly, authors Evan Gilman and Doug Barth described the concept of variable trust as one of the "most exciting ideas" of the zero-trust approach to network security. Variable trust assigns each component a numeric value that designates its level of trust, and policies can then be written against that value. This is a significant upgrade from traditional, binary network policies, which are commonly defined too loosely or too rigidly. Variable trust enables zero-trust network policies to facilitate informed authorization decisions, and the result is an authorization system that is adjustable and equipped to handle novel threats.

In the following excerpt from Chapter 2 of Zero Trust Networks, Gilman and Barth outline how to define zero-trust network policies by switching to a trust score model, thus eliminating pools of trust that weaken the alternative role-based access approach.

Variable Trust

Managing trust is perhaps the most difficult aspect of running a secure network. Choosing which privileges people and devices are allowed on the network is time-consuming, the choices change constantly, and they directly affect the security posture the network presents. Given the importance of trust management, it's surprising how underdeployed network trust management systems are today.

Defining trust policies is typically left as a manual effort for security engineers. Cloud systems might have managed policies, but those policies provide only basic isolation (e.g., superuser, admin, regular user), which advanced users typically outgrow. Perhaps in part due to the difficulty of defining and maintaining them, requests to change existing policies can be met with resistance. Determining the impact of a policy change can be difficult, so prudence pushes administrators toward the status quo, which can frustrate end users and overwhelm system administrators with change requests.

Policy assignment is also typically a manual effort. Users are granted policies based on their responsibilities in the organization. This role-based policy system tends to produce large pools of trust in the administrators of the network, weakening the overall security posture of the network. These pools of trust have created a market for hackers to "hunt sys admins," seeking out and compromising system administrators. Perhaps the gold standard for a secure network is one without highly privileged system administrators.

These pools of trust underscore the fundamental issue with how trust is managed in traditional networks: policies are not nearly dynamic enough to respond to the threats being leveled against the network. Mature organizations will have some sort of auditing process in place for activity on their network, but audits can be done too infrequently, and are frankly so tedious that doing them well is difficult for humans. How much damage could a rogue sysadmin do on a network before an audit discovered their behavior and mitigated it? A more fruitful path might be to rethink the actor/trust relationship, recognizing that trust in a network is ever evolving and based on the previous and current actions of an actor within the network.

This model of trust, considering all the actions of an actor and determining their trustworthiness, is not novel. Credit agencies have been performing this service for many years. Instead of requiring organizations like retailers, financial institutions, or even an employer to independently define and determine one's trustworthiness, a credit agency can use actions in the real world to score and gauge the trustworthiness of an individual. The consuming organizations can then use that credit score to decide how much trust to grant the person. In the case of a mortgage application, an individual with a higher credit score will receive a better interest rate, which mitigates the risk to the lender. In the case of an employer, one's credit score might be used as a signal for a hiring decision. On a case-by-case basis, these factors can feel arbitrary and opaque, but they serve a useful purpose: providing a mechanism for defending a system against arbitrary threats by defining policy based not only on specifics, but also on an ever-changing and evolving score.

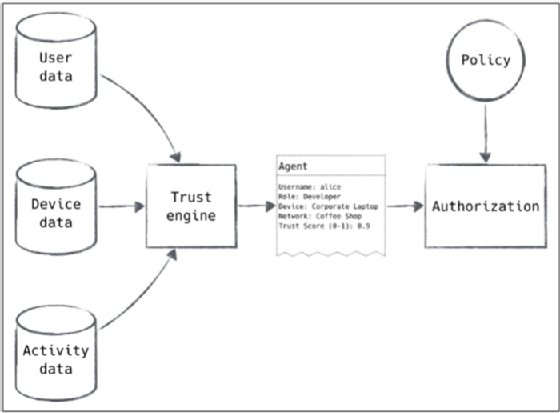

A zero trust network utilizes this insight to define trust within the network, as shown in Figure 2-3. Instead of defining binary policy decisions assigned to specific actors in the network, a zero trust network will continuously monitor the actions of an actor on the network to update their trust score. This score can then be used to define policy in the network based on the severity of breach of that trust (Figure 2-4). A user viewing their calendar from an untrusted network might require a relatively low trust score. However, if that same user attempted to change system settings, they would require a much higher score and would be denied or flagged for immediate review. Even in this simple example, one can see the benefit of a score: we can make fine-grained determinations on the checks and balances needed to ensure trust is maintained.
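The calendar and system-settings example can be sketched as a small score-to-decision function. This is only an illustration, not the authors' implementation: the 0-100 scale, the action names, the thresholds and the review margin are all assumptions chosen for the example.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # flagged for immediate human review
    DENY = "deny"


# Hypothetical minimum trust scores per action, on an assumed 0-100 scale.
REQUIRED_SCORE = {
    "view_calendar": 20,
    "change_system_settings": 80,
}

# Assumed margin: requests that fall just short are flagged rather than denied.
REVIEW_MARGIN = 10


def authorize(action: str, trust_score: float) -> Decision:
    """Map an action and the actor's current trust score to a decision."""
    required = REQUIRED_SCORE.get(action)
    if required is None:
        # Actions without a defined policy are denied by default.
        return Decision.DENY
    if trust_score >= required:
        return Decision.ALLOW
    if trust_score >= required - REVIEW_MARGIN:
        return Decision.REVIEW
    return Decision.DENY
```

With these assumed numbers, a session scoring 35 could view a calendar but would be denied a settings change, while a session scoring 75 attempting the same change would be flagged for review.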

Monitoring Encrypted Traffic

Since practically all flows in a zero trust network are encrypted, traditional traffic inspection methods don't work as well as intended. Instead, we are limited to inspecting what we can see, which in most cases is the IP header and perhaps the next protocol header (like TCP in the case of TLS). If a load balancer or proxy is in the request path, however, there is an opportunity for deeper inspection and authorization, since the application data will be exposed for examination.
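To make concrete what remains visible when the payload is encrypted, the sketch below parses the fixed IPv4 header and the TCP port fields from raw packet bytes using only the Python standard library. The function name `parse_ipv4_tcp` and the shape of the returned dictionary are assumptions for illustration; a real monitoring system would more likely rely on an existing capture library.

```python
import socket
import struct


def parse_ipv4_tcp(packet: bytes) -> dict:
    """Extract the fields an observer can read without decrypting anything."""
    if len(packet) < 20:
        raise ValueError("packet shorter than a minimal IPv4 header")

    # Fixed 20-byte IPv4 header, network byte order.
    (version_ihl, _tos, total_length, _ident, _flags_frag,
     ttl, protocol, _checksum, src, dst) = struct.unpack(
        "!BBHHHBBH4s4s", packet[:20]
    )
    if version_ihl >> 4 != 4:
        raise ValueError("not an IPv4 packet")

    # Header length is given in 32-bit words; options may extend it past 20.
    header_len = (version_ihl & 0x0F) * 4

    info = {
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
        "protocol": protocol,
        "ttl": ttl,
        "total_length": total_length,
    }

    # For TCP, the first four bytes after the IP header are the two ports.
    if protocol == socket.IPPROTO_TCP and len(packet) >= header_len + 4:
        src_port, dst_port = struct.unpack(
            "!HH", packet[header_len:header_len + 4]
        )
        info["src_port"] = src_port
        info["dst_port"] = dst_port
    return info
```

Everything past these headers in a TLS flow is ciphertext, which is why deeper inspection is possible only at a point such as a proxy where the application data is exposed.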

Clients begin sessions as untrusted. They must accumulate trust through various mechanisms, eventually accruing enough to gain access to the service they're requesting. Strong authentication proving that a device is company-owned, for instance, might accumulate a good bit of trust, but not enough to allow access to the billing system. Providing the correct RSA token might give you a good bit more trust, enough to access the billing system when combined with the trust inferred from successful device authentication.
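One way to picture this accumulation is a session object that starts at zero and gains trust as each signal is verified. The sketch below is a simplified illustration: the signal names, their point values and the per-resource thresholds are hypothetical, and a real system would weigh signals in far more nuanced ways.

```python
# Hypothetical trust contributed by each verified signal.
SIGNAL_TRUST = {
    "device_certificate": 40,  # strong proof the device is company-owned
    "rsa_token": 35,           # correct one-time token from the user
    "password": 15,
}

# Hypothetical trust needed to reach each resource.
RESOURCE_THRESHOLD = {
    "wiki": 30,
    "billing": 70,
}


class Session:
    """A client session that begins untrusted and accrues trust."""

    def __init__(self) -> None:
        self.score = 0
        self._seen = set()

    def record(self, signal: str) -> None:
        """Add trust for a verified signal; each signal counts only once."""
        if signal in SIGNAL_TRUST and signal not in self._seen:
            self._seen.add(signal)
            self.score += SIGNAL_TRUST[signal]

    def can_access(self, resource: str) -> bool:
        """Unknown resources are never accessible."""
        threshold = RESOURCE_THRESHOLD.get(resource)
        return threshold is not None and self.score >= threshold
```

Under these assumed values, device authentication alone (40) is enough for the wiki but not for billing; adding the RSA token raises the session to 75, which clears the billing threshold of 70.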

Strong Policy as a Trust Booster

Things like score-based policies, which can affect the outcome of an authorization request based on a number of variables like historical activity, drastically improve a network's security stance when compared to static policy. Sessions that have been approved by these mechanisms can be trusted more than those that haven't. In turn, we can rely (a little bit) less on user-based authentication methods to accrue the trust necessary to access a resource, improving the overall user experience.

Switching to a trust score model for policies isn't without its downsides. The first hurdle is whether a single score is sufficient for securing all sensitive resources. In a system where a trust score can decrease based on user activity, a user's score can also increase based on a history of trustworthy activity. Could it be possible for a persistent attacker to slowly build their credibility in a system to gain more access?

Perhaps slowing an attacker's progress by requiring an extended period of "normal" behavior would be sufficient to mitigate that concern, given that an external audit would have more opportunity to discover the intruder. Another way to mitigate that concern is to expose multiple pieces of information to the control plane so that sensitive operations can require access from trusted locations and persons. Binding a trust score to device and application metadata allows for flexible policies that can declare absolute requirements yet still capture the unknown unknowns through the computed trust score.
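The idea of combining absolute requirements with a computed score can be sketched as a policy check that evaluates hard constraints first and the score last. The field names (`device_managed`, `network`) and the example values are assumptions made for illustration, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestContext:
    """Information assumed to be exposed to the control plane per request."""
    trust_score: float
    device_managed: bool
    network: str


@dataclass(frozen=True)
class Policy:
    min_score: float
    require_managed_device: bool = False
    # An empty set means any network is acceptable.
    allowed_networks: frozenset = frozenset()


def is_authorized(policy: Policy, ctx: RequestContext) -> bool:
    # Absolute requirements: no trust score, however high, can override them.
    if policy.require_managed_device and not ctx.device_managed:
        return False
    if policy.allowed_networks and ctx.network not in policy.allowed_networks:
        return False
    # The computed score covers whatever the explicit rules did not anticipate.
    return ctx.trust_score >= policy.min_score
```

In this arrangement, an attacker who patiently builds a high score still cannot reach a resource whose policy demands a managed device on an approved network, while a request that meets every explicit rule can still be refused if its score is low.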

Loosening the coupling between security policy and a user's organizational role can cause confusion and frustration for end users. How can the system communicate to users that they are denied access to some sensitive resource from a coffee shop, but not from their home network? Perhaps we present them with increasingly rigorous authentication requirements? Should new members be required to live with lower access for a time before their score indicates that they can be trusted with higher access? Maybe we can accrue additional trust by having the user visit a technical support office with the device in question. All of these are important points to consider. The route one takes will vary from deployment to deployment.

About the authors

Evan Gilman

Evan Gilman is an engineer at VMware with a background in computer networks. With roots in academia and currently working in the public internet, he has been building and operating systems in hostile environments throughout his professional career. An open source contributor, speaker and author, Gilman is passionate about designing systems that strike a balance with the networks they run on.

Doug Barth

Doug Barth is a site reliability engineer at Stripe who loves to learn and share his knowledge with others. He has worked on systems of various sizes at companies including Orbitz and PagerDuty. Barth has built and spoken about monitoring systems, mesh networks and failure injection practices.

https://theintercept.com/2014/03/20/inside-nsa-secret-efforts-hunt-hack-system-administrators/