Machine learning in cybersecurity: How to evaluate offerings

Vendors are pitching machine learning for cybersecurity applications to replace traditional signature-based threat detection. But how can enterprises evaluate this new tech?

Is machine learning a must-have for security analytics, or is it window dressing that is irrelevant to a security manager's purchasing decision? The answer, much like the outputs derived through machine learning algorithms, is neither black nor white.

The promise of machine learning in cybersecurity lies in its ability to detect as-yet-unknown threats, particularly those that may lurk in networks for long periods while pursuing their ultimate goals. Machine learning technology does this by distinguishing atypical from typical behavior while noting and correlating a great number of simultaneous events and data points.
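As a rough illustration of what "distinguishing atypical from typical behavior" can mean at its simplest, the sketch below scores new observations by how far they sit from a historical baseline. It is a deliberately minimal statistical check, not any vendor's method; the host traffic figures and the three-sigma cutoff are assumptions made up for the example.

```python
from statistics import mean, stdev

def anomaly_scores(baseline, observations):
    """Return how many standard deviations each observation sits from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [abs(x - mu) / sigma for x in observations]

# Hypothetical daily outbound traffic (in MB) for one host over two weeks.
baseline_mb = [120, 135, 110, 128, 140, 125, 118, 132, 127, 121, 138, 115, 130, 124]
# Hypothetical new observations to compare against that baseline.
today_mb = [126, 131, 940]

for value, score in zip(today_mb, anomaly_scores(baseline_mb, today_mb)):
    # The 3.0 cutoff is an arbitrary illustrative choice, not a recommendation.
    flag = "atypical" if score > 3.0 else "typical"
    print(f"{value} MB -> {score:.1f} sigma ({flag})")
```

Commercial products correlate many such signals at once; a single-variable check like this one mainly shows why a baseline of "typical" has to exist before anything can be called atypical.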

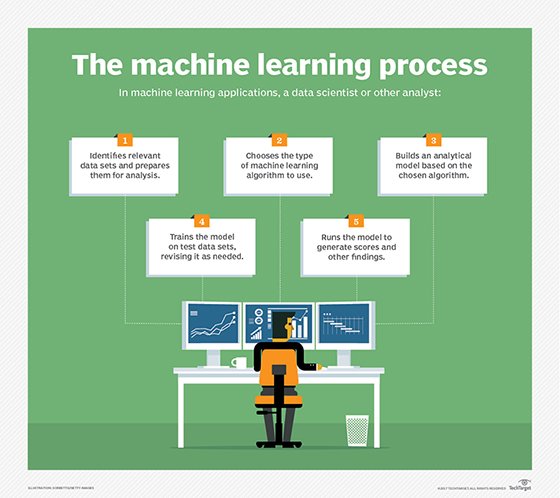

But to know what constitutes typical activity on a website, endpoint or network at any given time, machine learning algorithms must be trained on large volumes of data that have already been properly labeled, identified or categorized with distinguishing features that can be assigned and reassigned relative weights.

While this may sound logical, machine learning technology is a darker black box than most. Among all the different kinds of technology out there, how does a manager in search of machine learning in cybersecurity distinguish added value from a meaningless bullet point? Must one be -- or take along -- a data scientist to evaluate machine learning claims, or is this within the abilities of mere IT security professionals? Here's a look at what some security professionals recommend.

Evaluate outcomes, not ingredients

Sam Curry is the CISO of Cybereason, a threat detection and defense company with technology roots in the Israel Defense Forces. Curry said security managers should grill vendors not about their machine learning tools, but about the outcomes those tools deliver.

"I'm much more interested in knowing what kind of data you collect, where you put it and how you store it," he said. "How do you slice it? How do you render it? How does someone interact with it? How do you use it?"

The answers to these questions should be clearly expressed in the language of an enterprise's particular IT and application landscape.

Equally important, the vendor should be able to specify how its offering meets or exceeds the pace of your adversary's innovation.

"Since bad actors are so focused on the problem, it becomes a content race," Curry said. "If they claim to use Bayesian analysis, for example, will they switch to another tool if that doesn't work over time?"

Bayesian analysis is one of about a dozen machine learning methods typically used; others include logistic regression, simple linear regression, K-means clustering, decision trees and random forests. You can make a study of them -- look up Data Science on Coursera -- but you won't learn enough in time to prevent the next malware attack.

On a continuum between deep understanding of machine learning models and viewing them as a black box, Larry Lunetta, vice president of security solutions marketing for Hewlett Packard Enterprise's Aruba Networks, finds a middle ground. He said security analysts should understand how machine learning in cybersecurity works in general, and specifically that its results are not binary.

Training the bloodhound

The practice of giving a bloodhound a scent to track by providing the dog with an article of the target's clothing is a common one. It's also far from a perfect analogy for security-applied machine learning.

But, in both cases, the training has a specific target in mind. Here's how Aruba Networks' Larry Lunetta describes trained, or supervised, machine learning:

A ransomware attack, for example, typically does two things after it gains access to a network. It establishes a command-and-control channel back to the attacker, and then it 'beacons,' or spreads, laterally.

The payoff for ransomware isn't necessarily the first system that gets infected and encrypted; it's the high-value assets that would be very painful for the organization to lose. It's that searching process that has a specific set of behaviors. We use trained models to look for both of those activities. The idea is not to stop the initial infection, but to block the expansion of the attack before it does serious damage.

The models initially are trained by our data science team. Once the algorithms are set up, the training data is marked good or bad.

Think of a bag of marbles that are a range of all white to all black. You want to force a decision that a marble is black or white. So you'll show the model a million marbles; those that are off-white, you'll tell the model are white, and those that are closer and closer to middle gray, you'll decide whether each is black or white. If you show the model enough marbles, once it gets into the wild and sees the marbles, it will decide for itself.

This is called labeled data. One of the challenges in security with machine learning is to get enough labeled data to go into the wild and make the correct decisions.
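The marble analogy can be sketched in a few lines. The example below is a toy under stated assumptions, not Aruba's training process: shades run from 0.0 (white) to 1.0 (black), the 0.6 labeling rule stands in for a human's judgment calls on gray marbles, and the "model" is the simplest possible one, a single learned cutoff.

```python
import random

def train_threshold(samples):
    """Pick the shade cutoff that misclassifies the fewest labeled marbles.

    samples: list of (shade, label) pairs, where shade runs from 0.0 (white)
    to 1.0 (black) and label is "white" or "black".
    """
    best_cutoff, best_errors = 0.5, len(samples) + 1
    for cutoff in sorted(shade for shade, _ in samples):
        errors = sum(
            1 for shade, label in samples
            if ("black" if shade >= cutoff else "white") != label
        )
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff

def classify(shade, cutoff):
    """Force a black-or-white decision on a marble of any shade."""
    return "black" if shade >= cutoff else "white"

random.seed(7)
# Hypothetical labeled data: a person has decided that any marble
# darker than 0.6 counts as black. The model is never told this rule.
labeled = []
for _ in range(1000):
    shade = random.random()
    labeled.append((shade, "black" if shade >= 0.6 else "white"))

cutoff = train_threshold(labeled)
print(f"learned cutoff: {cutoff:.3f}")
print("a 0.58 marble is", classify(0.58, cutoff))
print("a 0.75 marble is", classify(0.75, cutoff))
```

With only a handful of labeled marbles, the learned cutoff could land far from the labeler's intent, which is the shortage of labeled data Lunetta describes.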

Alerts to threats are expressed as probabilities, which must be put in context with additional evidence. Lunetta added that Aruba's IntroSpect product, the result of its February 2017 acquisition of Niara, presents this underlying evidence along with attack probability scores.
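To see why a probability needs its supporting evidence, consider a simple Bayesian update. The sketch below is not how IntroSpect or any other product computes its scores; the prior and the likelihood ratios are invented for illustration, and the calculation assumes each piece of evidence is independent of the others.

```python
def update_probability(prior, likelihood_ratios):
    """Combine a prior attack probability with independent pieces of evidence.

    Each likelihood ratio is P(evidence | attack) / P(evidence | benign).
    Treating the evidence as independent is a simplifying assumption.
    """
    odds = prior / (1.0 - prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)

# Hypothetical numbers chosen only for illustration.
prior = 0.01  # assume 1% of alerts of this type turn out to be real attacks
evidence = {
    "unusual outbound traffic": 8.0,   # much more likely during an attack
    "login at an odd hour": 3.0,       # somewhat more likely during an attack
    "device has a clean history": 0.5, # argues against an attack
}

score = update_probability(prior, evidence.values())
print(f"attack probability: {score:.3f}")
```

The same score can come from very different combinations of evidence, so an analyst who can see the individual factors is in a better position to judge the alert than one who sees the number alone.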

"What kind of network traffic did we see? What kind of log information was relevant? What was the history of the user or device in question? These are all types of evidence that the security analyst would already be well-versed in," Lunetta explained. "We look upon machine learning as a force multiplier."

Simpler tools may suffice

Dennis Chow is the CISO at SCIS Security, a Houston-based reseller that was formed a year and a half ago by longtime IT security veterans. He cautions against concentrating on the latest buzzwords for machine learning in cybersecurity when simpler analytical tools and SIEM systems, if properly set up, will prevent most attacks.

Chow also agrees that enterprises don't need a doctorate in data science to evaluate products.

"Security managers can simply ask the questions that address the highest risks to their organization," based on careful risk assessments, Chow said.

"If we do a professional services deployment for a customer, we go over their top use cases," he said. "Say you're a healthcare entity; you might be more worried about HIPAA-based data exfiltration, or about biomedical devices being hacked through MS08-067 -- one of many old vulnerabilities common to the industry. We'll go over the top three, four, five, and create modeling based on that."

One thing Chow does believe managers should know is the difference between trained, or supervised, and untrained model sets. In a supervised model set -- all he has deployed thus far -- "we give the system training data and tell it how it should be classified or identified."

An unsupervised, or untrained, model isn't trained for anything, in theory. The algorithms work out their own baselines from raw data, then identify clusters of outlying data points and assign labels to these clusters. The solution vendor then maps those labels to things that have meaning for security, such as a brute-force attack.
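A minimal sketch of that unsupervised flow, using K-means clustering on a single feature, might look like the following. It is a bare-bones textbook version written for this example, not Chow's or any vendor's implementation; the failed-login counts and the "possible brute-force attack" label are hypothetical.

```python
def k_means_1d(values, k, iterations=20):
    """Plain K-means on one-dimensional data; returns (centroids, assignments).

    Requires k >= 2. Initial centroids are spread evenly across the sorted
    data so the result is deterministic.
    """
    ordered = sorted(values)
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    assignments = [0] * len(values)
    for _ in range(iterations):
        # Assign each value to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: abs(v - centroids[c])) for v in values
        ]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, a in zip(values, assignments) if a == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, assignments

# Hypothetical failed logins per hour for twelve accounts. No labels are supplied.
failed_logins = [2, 0, 1, 3, 2, 1, 0, 2, 250, 1, 3, 310]

centroids, assignments = k_means_1d(failed_logins, k=2)

# The algorithm only produces cluster numbers; a person supplies the meaning.
outlier_cluster = min(range(2), key=assignments.count)
cluster_names = {outlier_cluster: "possible brute-force attack"}
flagged = [v for v, a in zip(failed_logins, assignments) if a == outlier_cluster]

print(cluster_names[outlier_cluster], "->", flagged)
```

Nothing in the clustering step knows what a brute-force attack is. The name is attached afterward, and a cluster that nobody has mapped to a meaning arrives with no explanation at all.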

"The issue is the unknown," says Chow. "If the model comes back and says something the user doesn't recognize, he'll have to sit there and dig through it just like any other security analyst without the tool."

While Chow can roughly explain the two machine learning algorithms his firm uses most often -- K-means clustering and simple linear regression -- he recommends leaving the front-end research to a value-added reseller (VAR).

"Any good account manager is going to have presales engineers to help you choose," he said. And again, frame questions in terms of outcomes: "Can this technology tell me when there's anomalous user activity at an odd hour, or it's coming from a country we don't do business with?"

In addition to leaning on a VAR, buyers often get recommendations from peers in similar companies, as well as input from industry analysts.
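Chow's sample question about odd-hour logins and unexpected countries also illustrates his earlier point that simpler tools may suffice: that particular outcome can be expressed as a plain rule, with no machine learning involved. The sketch below assumes a hypothetical event format, business hours and country list; "XX" is a placeholder, not a real country code.

```python
from datetime import datetime

# Hypothetical policy values; a real deployment would take these from the risk assessment.
BUSINESS_HOURS = range(7, 20)       # 07:00 through 19:59 local time
APPROVED_COUNTRIES = {"US", "CA"}

def review_reasons(event):
    """Return the reasons, if any, that a login event deserves an analyst's attention."""
    reasons = []
    hour = datetime.fromisoformat(event["timestamp"]).hour
    if hour not in BUSINESS_HOURS:
        reasons.append("odd hour")
    if event["country"] not in APPROVED_COUNTRIES:
        reasons.append("unexpected country")
    return reasons

# Hypothetical login events.
events = [
    {"user": "alice", "timestamp": "2017-06-14T10:05:00", "country": "US"},
    {"user": "bob", "timestamp": "2017-06-14T03:12:00", "country": "US"},
    {"user": "carol", "timestamp": "2017-06-14T02:47:00", "country": "XX"},
]

for event in events:
    reasons = review_reasons(event)
    if reasons:
        print(event["user"], "->", ", ".join(reasons))
```

A useful question for a vendor is what its machine learning adds beyond a rule like this, for instance by learning each user's normal hours rather than applying one fixed window to everyone.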

The proof is in the POC pudding

In the end, an in-house security engineer or a trusted third party should validate security vendor claims in a proof of concept (POC) trial. Chow recommends testing for advanced persistent threats at all points along the kill chain, from initial reconnaissance to uncovering vulnerabilities to payload delivery, all the way to data exfiltration.

Testing should also establish a baseline of tool effectiveness in detecting known signatures -- something that doesn't require machine learning.

"Then switch it up with a different exploit kit, a different piece of malware, a different infection point -- say a USB flash drive instead of an infected URL," Chow said.

After exercising all the rule-based detection, Chow suggests testing the machine learning aspects with variations on expected bad behavior. See if the system still sees enough similarity to catch it without additional programming.
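One way to keep score during such a trial is to record each test by kill-chain stage and by whether it used a known signature or a varied sample, then compare detection rates. The sketch below assumes a hypothetical result format, and the trial data is invented; it shows the bookkeeping only, not a testing methodology.

```python
from collections import defaultdict

def detection_rates(results):
    """Summarize POC results as a detection rate per (stage, variant) pair.

    results: iterable of (stage, variant, detected) tuples.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for stage, variant, detected in results:
        totals[(stage, variant)] += 1
        hits[(stage, variant)] += int(detected)
    return {key: hits[key] / totals[key] for key in totals}

# Hypothetical trial log: each entry is one test run against the tool under evaluation.
trial = [
    ("delivery", "known signature", True),
    ("delivery", "known signature", True),
    ("delivery", "varied sample", True),
    ("delivery", "varied sample", False),
    ("exfiltration", "known signature", True),
    ("exfiltration", "varied sample", False),
    ("exfiltration", "varied sample", False),
]

rates = detection_rates(trial)
for (stage, variant), rate in sorted(rates.items()):
    print(f"{stage:<13} {variant:<16} {rate:.0%}")
```

A large gap between the known-signature rate and the varied-sample rate at a given stage suggests the tool is relying on rules there rather than on anything it has learned.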

A thorough POC trial can help enterprises determine the technology that best suits their needs. Machine learning tools may offer superior security for enterprises, but they must be properly researched, vetted and tested before being deployed.