Linux Malware Incident Response

In this excerpt from Linux Malware Incident Response, authors Cameron Malin, Eoghan Casey and James Aquilina discuss volatile data collection methodology, steps and preservation.

The following is an excerpt from the book Linux Malware Incident Response written by Cameron Malin, Eoghan Casey and James Aquilina and published by Syngress. This section discusses volatile data collection methodology and steps as well as the preservation of volatile data.

VOLATILE DATA COLLECTION METHODOLOGY

- Prior to running utilities on a live system, assess them on a test computer to document their potential impact on an evidentiary system.

- Data should be collected from a live system in the order of volatility, as discussed in the introduction. The following guidelines are provided to give a clearer sense of the types of volatile data that can be preserved to better understand the malware.

Documenting Collection Steps

- The majority of Linux and UNIX systems have a script utility that can record the commands that are run and the output of each command, providing the supporting documentation that is a cornerstone of digital forensics.

- Once invoked,

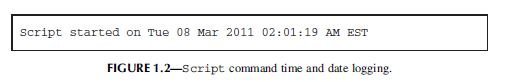

scriptlogs the time and date, as shown in Fig. 1.2.

- Once invoked,

-

Scriptcaches data in memory and only writes the full recorded information when it is terminated by typing “exit.” By default, the output of thescriptcommand is saved in the current working directory, but an alternate output path can be specified on the command line.
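As a minimal sketch of the logging step described above, the following names a timestamped log file and starts a recorded session. The `/mnt/evidence` mount point and both function names are assumptions made for illustration; the excerpt does not specify them.

```shell
#!/bin/sh
# Hypothetical destination on external media (not named in the excerpt).
EVIDENCE_DIR="${EVIDENCE_DIR:-/mnt/evidence}"

# Build a log file name that embeds the node name and the UTC start time.
session_log_path() {
    printf '%s/%s-%s-script.log\n' \
        "$EVIDENCE_DIR" "$(uname -n)" "$(date -u +%Y%m%dT%H%M%SZ)"
}

# Start recording to the alternate output path instead of the current
# working directory. Recording continues until "exit" is typed.
start_session_log() {
    script "$(session_log_path)"
}
```

Calling `start_session_log` opens the recorded shell. Writing the log to external media rather than the current working directory avoids creating new files on the subject system's disk.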

Volatile Data Collection Steps

- On the compromised machine, run a trusted command shell from a toolkit with statically compiled binaries (e.g., on older nonproprietary versions of the Helix CD or other distributions).

- Run script to start a log of your keystrokes.

- Document the date and time of the computer and compare them with a reliable time source.

- Acquire contents of physical memory.

- Gather host name, IP address, and operating system details.

- Gather system status and environment details.

- Identify users logged onto the system.

- Inspect network connections and open ports and associated activity.

- Examine running processes.

- Correlate open ports to associated processes and programs.

- Determine what files and sockets are being accessed.

- Examine loaded modules and drivers.

- Examine connected host names.

- Examine command-line history.

- Identify mounted shares.

- Check for unauthorized accounts, groups, shares, and other system resources and configurations.

- Determine scheduled tasks.

- Collect clipboard contents.

- Determine audit configuration.

- Terminate script to finish logging of your keystrokes by typing exit.
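Several of the steps above map onto standard command-line utilities. The sketch below is one possible arrangement, not the authors' toolkit: the `collect` helper, the output file names, and the choice of commands are assumptions. On a real subject system, each command should come from the trusted toolkit, and memory acquisition (not shown here) should come first.

```shell
#!/bin/sh
# A temporary directory stands in for the output location here;
# in practice, point OUT at external media, not the subject system's disk.
OUT="${OUT:-$(mktemp -d)}"
mkdir -p "$OUT"

# Run a command if it is available and save its output under a step name.
# A non-zero exit status is appended to the file so failures are documented.
collect() {
    name=$1
    shift
    if command -v "$1" >/dev/null 2>&1; then
        "$@" </dev/null >"$OUT/$name.txt" 2>&1 \
            || echo "[exit status $?]" >>"$OUT/$name.txt"
    else
        echo "$1: not found on this system" >"$OUT/$name.txt"
    fi
}

collect date        date -u        # system date and time
collect hostname    uname -n       # host name
collect os          uname -a       # operating system details
collect interfaces  ifconfig -a    # IP address details
collect uptime      uptime         # system status
collect environment env            # environment details
collect users       who            # logged-on users
collect network     netstat -an    # network connections and open ports
collect processes   ps -ef         # running processes
collect open_files  lsof -n        # open files and sockets
collect modules     lsmod          # loaded modules
collect mounts      mount          # mounted file systems and shares
collect cron        crontab -l     # current user's scheduled tasks
```

Each step produces one text file in `$OUT`, which keeps the output of every command separate for later hashing and review.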

Analysis Tip

File Listing

In some cases it may be beneficial to gather a file listing of each partition during the live response using The Sleuth Kit (e.g., /media/cdrom/Linux-IR/fls /dev/hda1 -lr -m / > body.txt). For instance, comparing such a file listing with a forensic duplicate of the same system can reveal that a rootkit is hiding specific directories or files. Furthermore, if a forensic duplicate cannot be acquired, such a file listing can help ascertain when certain files were created, modified, or accessed.
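The comparison described in this tip can be sketched with standard text utilities. The example assumes both listings have already been reduced to plain text with one path per line; the function name and file names are hypothetical.

```shell
#!/bin/sh
# Print paths that appear in the forensic-duplicate listing but not in the
# listing gathered during live response. Such paths are candidates for
# entries that were hidden on the live system.
hidden_candidates() {
    live=$1
    duplicate=$2
    live_sorted=$(mktemp)
    dup_sorted=$(mktemp)
    # comm requires sorted input; use one collation order for both tools.
    LC_ALL=C sort -u "$live" >"$live_sorted"
    LC_ALL=C sort -u "$duplicate" >"$dup_sorted"
    # -1 drops lines unique to the live listing, -3 drops common lines,
    # leaving only lines unique to the duplicate listing.
    LC_ALL=C comm -13 "$live_sorted" "$dup_sorted"
    rm -f "$live_sorted" "$dup_sorted"
}
```

A call such as `hidden_candidates live-paths.txt duplicate-paths.txt` prints one candidate path per line. An empty result means every path in the duplicate listing was also visible during live response.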

Preservation of Volatile Data

- First acquire physical memory from the subject system, then preserve information using live response tools.

- Because Linux is open source, more is known about the data structures within memory. The transparency of Linux data structures extends beyond the location of data in memory to the data structures that are used to describe processes and network connections, among other live response items of interest.

- Linux memory structures are written in C and viewable in include files for each version of the operating system. However, each version of Linux has slightly different data structures, making it difficult to develop a widely applicable tool. For a detailed discussion of memory forensics, refer to Chapter 2 of the Malware Forensics Field Guide for Linux Systems.

- After capturing the full contents of memory, use an Incident Response tool suite to preserve information from the live system, such as lists of running processes, open files, and network connections, among other volatile data.

- Some information in memory can be displayed by using Command Line Interface (CLI) utilities on the system under examination. This same information may not be readily accessible or easily displayed from the memory dump after it is loaded on a forensic workstation for examination.

Investigative Considerations

- It may be necessary in some cases to capture some nonvolatile data from the live subject system and perhaps even create a forensic duplicate of the entire disk. For all preserved data, remember that the Message Digest 5 (MD5) and other attributes of the output from a live examination must be documented independently by the digital investigator.

- To avoid missteps and omissions, collection of volatile data should be automated. Some commonly used Incident Response tool suites are discussed in the Tool Box section at the end of this book.
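Documenting the MD5 values of collected output can itself be scripted. The following is a sketch under stated assumptions: the function names are hypothetical, the manifest path must be absolute, and the manifest should be stored outside the collection directory so that it is not hashed along with the evidence.

```shell
#!/bin/sh
# Write an MD5 manifest covering every file in a collection directory.
# $1 = collection directory, $2 = absolute path of the manifest file.
hash_collection() {
    dir=$1
    manifest=$2
    ( cd "$dir" && find . -type f -exec md5sum {} + ) >"$manifest"
}

# Recompute the hashes later and compare them with the manifest.
# Returns non-zero if any file is missing or has changed.
verify_collection() {
    dir=$1
    manifest=$2
    ( cd "$dir" && md5sum -c "$manifest" )
}
```

Running `hash_collection` immediately after collection and `verify_collection` before analysis gives a simple check that the preserved output has not changed in between. The investigator should still record the values independently, as noted above.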

About the authors:

Cameron H. Malin is a Special Agent with the Federal Bureau of Investigation assigned to a Cyber Crime squad in Los Angeles, California, where he is responsible for the investigation of computer intrusion and malicious code matters. Special Agent Malin is the founder and developer of the FBI’s Technical Working Group on Malware Analysis and Incident Response. Special Agent Malin is a Certified Ethical Hacker (C|EH), as designated by the International Council of E-Commerce Consultants; a Certified Information Systems Security Professional (CISSP), as designated by the International Information Systems Security Certification Consortium; and a GIAC Certified Reverse-Engineering Malware Professional (GREM), GIAC Certified Intrusion Analyst (GCIA), GIAC Certified Incident Handler (GCIH), and GIAC Certified Forensic Analyst (GCFA), as designated by the SANS Institute.

Eoghan Casey is an internationally recognized expert in data breach investigations and information security forensics. He is founding partner of CASEITE.com, and co-manages the Risk Prevention and Response business unit at DFLabs. Over the past decade, he has consulted with many attorneys, agencies, and police departments in the United States, South America, and Europe on a wide range of digital investigations, including fraud, violent crimes, identity theft, and on-line criminal activity. Eoghan has helped organizations investigate and manage security breaches, including network intrusions with international scope. He has delivered expert testimony in civil and criminal cases, and has submitted expert reports and prepared trial exhibits for computer forensic and cyber-crime cases. In addition to his casework and writing the foundational book Digital Evidence and Computer Crime, Eoghan has worked as R&D Team Lead in the Defense Cyber Crime Institute (DCCI) at the Department of Defense Cyber Crime Center (DC3) helping enhance their operational capabilities and develop new techniques and tools. He also teaches graduate students at Johns Hopkins University Information Security Institute and created the Mobile Device Forensics course taught worldwide through the SANS Institute. He has delivered keynotes and taught workshops around the globe on various topics related to data breach investigation, digital forensics and cyber security. Eoghan has performed thousands of forensic acquisitions and examinations, including Windows and UNIX systems, Enterprise servers, smart phones, cell phones, network logs, backup tapes, and database systems. He also has information security experience, as an Information Security Officer at Yale University and in subsequent consulting work. 
He has performed vulnerability assessments, deployed and maintained intrusion detection systems, firewalls and public key infrastructures, and developed policies, procedures, and educational programs for a variety of organizations. Eoghan has authored advanced technical books in his areas of expertise that are used by practitioners and universities around the world, and he is Editor-in-Chief of Elsevier's International Journal of Digital Investigation.

James M. Aquilina is the Managing Director and Deputy General Counsel of Stroz Friedberg, LLC, a consulting and technical services firm specializing in computer forensics; cyber-crime response; private investigations; and the preservation, analysis and production of electronic data from single hard drives to complex corporate networks. As the head of the Los Angeles Office, Mr. Aquilina supervises and conducts digital forensics and cyber-crime investigations and oversees large digital evidence projects. Mr. Aquilina also consults on the technical and strategic aspects of anti-piracy, antispyware, and digital rights management (DRM) initiatives for the media and entertainment industries, providing strategic thinking, software assurance, testing of beta products, investigative assistance, and advice on whether the technical components of the initiatives implicate the Computer Fraud and Abuse Act and anti-spyware and consumer fraud legislation. His deep knowledge of botnets, distributed denial of service attacks, and other automated cyber-intrusions enables him to provide companies with advice to bolster their infrastructure protection.