Industrial Network Security

In this excerpt from chapter 3 of Industrial Network Security, published by Syngress, authors Eric D. Knapp and Joel Langill discuss the history and trends of industrial cybersecurity.

Securing an industrial network and the assets connected to it, although similar in many ways to standard enterprise information system security, presents several unique challenges. While the systems and networks used in industrial control systems (ICSs) are highly specialized, they are increasingly built upon common computing platforms using commercial operating systems. At the same time, these systems are built for reliability, performance, and longevity. A typical integrated ICS may be expected to operate without pause for months or even years, and the overall life expectancy may be measured in decades. Attackers, by contrast, have easy access to new exploits and can employ them at any time. In a typical enterprise network, systems are continually managed in an attempt to stay ahead of this rapidly evolving threat, but these methods often conflict with an industrial network's core requirements of reliability and availability.

Doing nothing is not an option. Because of the importance of industrial networks and the potentially devastating consequences of an attack, new security methods need to be adopted. Industrial networks are being targeted, as real-life examples of industrial cyber sabotage show (more detailed examples of actual industrial cyber events will be presented in Chapter 7, "Hacking Industrial Systems"). They are the targets of a new threat profile that uses more sophisticated and targeted attacks than ever before. An equally disturbing trend is the rise in accidental events with significant consequences, caused when an authorized system user unknowingly introduces threats into the network during normal, routine interaction, whether through local system administration or remote system operation.

IMPORTANCE OF SECURING INDUSTRIAL NETWORKS

The need to improve the security of industrial networks cannot be overstated. Most critical manufacturing facilities have reasonable physical security that prevents unauthorized local access to the components that form the core of the manufacturing environment. This may include physically secured equipment rack rooms, locked engineering work centers, or restricted access to operational control centers. With physical access controlled in this way, the only path by which an ICS can be subjected to external cyber threats is through the industrial networks and their connections to surrounding business networks and enterprise resources.

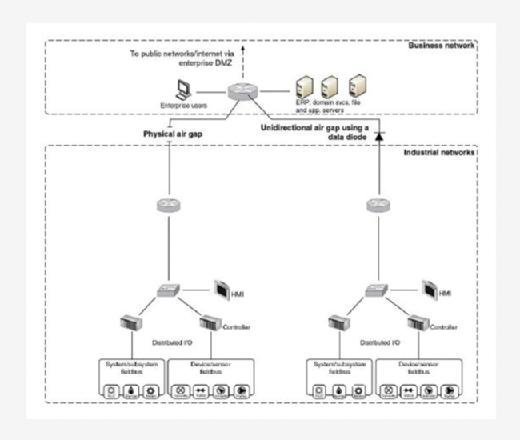

Many industrial systems are built using legacy devices, and in some cases run legacy protocols that have evolved to operate in routable networks. Automation systems were built for reliability long before the proliferation of Internet connectivity, web-based applications, and real-time business information systems. Physical security was always a concern, but information security was typically not a priority because the control systems were air-gapped - that is, physically separated with no common system (electronic or otherwise) crossing that gap, as illustrated in Figure 3.1.

Ideally, the air gap would still remain and would still apply to digital communication, but in reality it rarely exists. Many organizations began the process of reengineering their business processes and operational integration needs in the 1990s. Organizations began to perform more integration between not only common ICS applications during this era, but also the integration of typical business applications like production planning systems with the supervisory components of the ICS. The

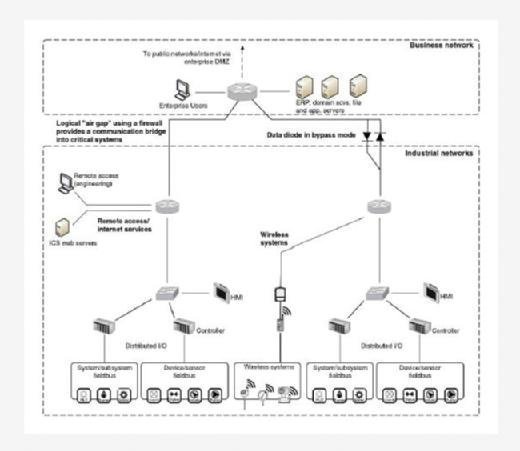

need for real-time information sharing evolved as well as these business operations of industrial networks. A means to bypass the gap needed to be found because the information required originated from across the air gap. In the early years of this integration "wave," security was not a priority, and little network isolation was provided. Standard routing technologies were initially used if any separation was considered. Firewalls were then sometimes deployed as organizations began to realize the basic operational differences between business and industrial networks, blocking all traffic except that which was absolutely necessary in order to improve the efficiency of business operations.

The problem is that, regardless of how justified or well intended the action, the air gap no longer exists, as seen in Figure 3.2. There is now a path into critical systems, and any path that exists can be found and exploited.

Security consultants at Red Tiger Security presented research in 2010 that indicates the state of security in industrial networks. Penetration tests performed on approximately 100 North American electric power generation facilities uncovered more than 38,000 security warnings and vulnerabilities [1]. Red Tiger was then contracted by the US Department of Homeland Security (DHS) to analyze the data in search of trends that could be used to help identify common attack vectors and, ultimately, to help improve the security of these critical systems against cyberattack.

The results were presented at the 2010 Black Hat USA conference and suggested a security climate that was lagging behind other industries. The average time between the public disclosure of a vulnerability and its discovery in a control system was 331 days, almost an entire year. Worse still, some vulnerabilities were over 1100 days old, more than 3 years past their respective "zero-day."
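The figures above come down to simple date arithmetic. The minimal sketch below, assuming each finding is recorded as a pair of dates (public disclosure and discovery on the control system), computes each vulnerability's age, the average, and the oldest case in years. The records are hypothetical, chosen only so that one gap equals 331 days and another exceeds 1100 days; they are not the Red Tiger data.

```python
from datetime import date
from statistics import mean

# HYPOTHETICAL findings for illustration only (not the Red Tiger data):
# each pair is (date publicly disclosed, date found unpatched on a control system).
findings = [
    (date(2008, 10, 23), date(2009, 9, 19)),
    (date(2007, 1, 9), date(2010, 1, 14)),
    (date(2009, 6, 1), date(2009, 12, 1)),
]

def age_in_days(disclosed: date, found: date) -> int:
    """Days a vulnerability had been public before it was found on the system."""
    return (found - disclosed).days

ages = [age_in_days(disclosed, found) for disclosed, found in findings]
oldest = max(ages)

print(f"average age: {mean(ages):.0f} days")
# Dividing by 365.25 averages out leap years.
print(f"oldest: {oldest} days (about {oldest / 365.25:.1f} years)")
```

The second hypothetical record shows why an age of just over 1100 days already means more than three years of public exposure.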

What does this mean? It means that there are known vulnerabilities that can give hackers and cyber criminals entry into control networks. Many of these vulnerabilities have been converted into reusable modules for open source penetration testing tools, such as Metasploit and the tools bundled with Kali Linux, making exploitation of those vulnerabilities fairly easy and available to a wide audience. This says nothing of the numerous other testing utilities that are not available free of charge and that typically include exploitation capabilities against zero-day vulnerabilities as well. ICS exploitation tools and utilities are examined in more detail in Chapter 7, "Hacking Industrial Systems."

It should not be a surprise that there are well-known vulnerabilities within control systems. Control systems are, by design, very difficult to patch. When access to outside networks and the Internet is intentionally limited (or, better yet, eliminated), simply obtaining patches can be difficult. Applying patches once they are obtained can also be difficult, and is typically restricted to planned maintenance windows because reliability is paramount. The result is that there will almost always be unpatched vulnerabilities. Reducing the window from an average of 331 days to a weekly or even monthly maintenance window would be a huge improvement. A balanced view of patching ICS is covered later in Chapter 10, "Implementing Security and Access Controls."
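The effect of a regular maintenance cadence on that window can be sketched the same way. Assuming, hypothetically, that patches can only be applied on one fixed weekday, either every week or on the first such weekday of each month, the helpers below find the first usable window after a patch is released and report the resulting exposure in days. The function names, the release date, and the scheduling rule are illustrative assumptions rather than a method from this book.

```python
from datetime import date, timedelta

# ASSUMPTION: patches can only be applied on one fixed weekday, either every week
# or on the first such weekday of each month. Real maintenance calendars vary.

def next_weekly_window(available: date, weekday: int) -> date:
    """First date on or after `available` that falls on `weekday` (Monday=0 ... Sunday=6)."""
    return available + timedelta(days=(weekday - available.weekday()) % 7)

def next_monthly_window(available: date, weekday: int) -> date:
    """First occurrence of `weekday` in a month that falls on or after `available`."""
    first_of_month = available.replace(day=1)
    window = next_weekly_window(first_of_month, weekday)
    if window < available:
        # This month's window has already passed; use next month's.
        if first_of_month.month == 12:
            first_of_month = first_of_month.replace(year=first_of_month.year + 1, month=1)
        else:
            first_of_month = first_of_month.replace(month=first_of_month.month + 1)
        window = next_weekly_window(first_of_month, weekday)
    return window

def exposure_days(available: date, window: date) -> int:
    """Days a known vulnerability stays unpatched while waiting for the window."""
    return (window - available).days

patch_released = date(2014, 3, 5)  # HYPOTHETICAL release date (a Wednesday)
SUNDAY = 6
weekly = next_weekly_window(patch_released, SUNDAY)
monthly = next_monthly_window(patch_released, SUNDAY)
print("weekly window:", weekly, "exposure:", exposure_days(patch_released, weekly), "days")
print("monthly window:", monthly, "exposure:", exposure_days(patch_released, monthly), "days")
```

Either cadence bounds exposure to days or weeks rather than the 331-day average cited above; the trade-off is how often the process can tolerate planned downtime.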

THE EVOLUTION OF THE CYBER THREAT

It is interesting to look at exactly what is meant by a "cyber threat." Numerous definitions exist, but they all share a common underlying message: (a) unauthorized access to a system and (b) loss of confidentiality, integrity, and/or availability of the system, its data, or applications. Records dating back to 1902 show how simple attacks could be launched against the Marconi Wireless Telegraph system [3]. Cyber threats have been evolving ever since the first computer worm appeared in the late 1980s: from the Morris worm (1988), to Code Red (2001), to Slammer (2003), to Conficker (2008), to Stuxnet (2010), and beyond.

When considering the threat against industrial systems, this evolution is concerning for three primary reasons. First, the initial attack vectors still originate in common computing platforms, typically within level 3 or 4 systems, so the evolution and deployment of increasingly complex and sophisticated malware is making the initial penetration of industrial systems easier. Second, the industrial systems at levels 2, 1, and 0 are increasingly targeted. Third, the threats continue to evolve, leveraging successful techniques from past malware while introducing new capabilities and complexity. A simple analysis of Stuxnet reveals that one of its propagation methods exploited the same vulnerabilities used by the Conficker worm, which were identified and supposedly patched in 2008.

These systems are extremely vulnerable and can be considered a decade or more behind typical enterprise systems in terms of cyber security maturity. This means that, once they are breached, the result is most likely a fait accompli: in their current state, industrial systems simply do not stand a chance against modern attack capabilities. Their primary line of defense remains the business networks that surround them and the network-based defenses between each security level of the network.

Twenty percent (20%) of incidents now target energy, transportation, and critical manufacturing organizations, according to the 2013 Verizon Data Breach Investigations Report.

NOTE

It is important to understand the terminology used throughout this book in terms of "levels" and "layers." Layers are used in the context of the Open Systems Interconnection (OSI) 7-Layer Model and how protocols and technologies are applied at each layer [5]. For example, a network MAC address operates at Layer 2 (Data Link Layer) and depends on network "switches," while an IP address operates at Layer 3 (Network Layer) and depends on network "routers" to manage traffic. The TCP and UDP protocols operate at Layer 4 (Transport Layer) and depend on "firewalls" to handle communication flow.

Levels, on the other hand, are defined by the ISA-95 standard [6] for the integration of enterprise and production control systems, which expands on what was originally described by the Purdue Reference Model for Computer Integrated Manufacturing (CIM) [7], most commonly referred to as the "Purdue Model." Here the term Level 0 applies to field devices and their networks; Level 1 to basic control elements like PLCs; Level 2 to monitoring and supervisory functions like SCADA servers and HMIs; Level 3 to manufacturing operations management functions; and Level 4 to business planning and logistics.
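The Purdue levels lend themselves to a simple data model. As a minimal sketch, the levels from the note can be encoded as an ordered enumeration and used to flag observed flows that jump across non-adjacent levels, which would bypass the network-based defenses placed between each level. The host names, the flow list, and the adjacent-levels-only rule are hypothetical assumptions for illustration; the check is not taken from ISA-95 or from this book.

```python
from enum import IntEnum

class PurdueLevel(IntEnum):
    """Levels as summarized in the note above (Purdue Model / ISA-95)."""
    FIELD_DEVICES = 0   # field devices and their networks
    BASIC_CONTROL = 1   # basic control elements such as PLCs
    SUPERVISORY = 2     # monitoring and supervisory functions: SCADA servers, HMIs
    OPERATIONS = 3      # manufacturing operations management
    BUSINESS = 4        # business planning and logistics

def skips_levels(src: PurdueLevel, dst: PurdueLevel) -> bool:
    """ASSUMED policy rule: a flow should only connect the same or adjacent levels."""
    return abs(src - dst) > 1

# HYPOTHETICAL inventory (host name -> level) and observed flows.
inventory = {
    "erp-01": PurdueLevel.BUSINESS,
    "historian-01": PurdueLevel.OPERATIONS,
    "hmi-01": PurdueLevel.SUPERVISORY,
    "plc-07": PurdueLevel.BASIC_CONTROL,
}
flows = [("erp-01", "historian-01"), ("historian-01", "hmi-01"), ("erp-01", "plc-07")]

flagged = [(s, d) for s, d in flows if skips_levels(inventory[s], inventory[d])]
for s, d in flagged:
    print(f"review flow {s} -> {d}: {inventory[s].name} to {inventory[d].name}")
```

Using `IntEnum` keeps the levels ordered, so the distance between two levels is plain integer subtraction.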

Incident data from industrial networks have been analyzed from a variety of sources. According to information compiled from ICS-CERT, the Repository for Industrial Security Incidents (RISI), and research from firms including Verizon, Symantec, McAfee, and others, several trends that impact the broader global market begin to appear:

- Most attacks seem to be opportunistic, although not all of them are (see the section titled "Hacktivism, Cyber Crime, Cyber Terrorism, and Cyber War" in this chapter).

- Initial attacks tend to use simpler exploits; thwarted or discovered attacks lead to increasingly more sophisticated methods.

- The majority of cyber-attacks are financially motivated. Espionage and sabotage have also been identified as motives.

- Malware, hacking, and social engineering are the predominant methods of attack among incidents classified as "espionage." Physical attacks, misuse, and environmental methods are common in financially motivated attacks but are almost completely absent in attacks motivated by espionage.

- New malware samples are appearing at an alarming rate. The rate slowed somewhat in late 2013, but upwards of 20 million new samples are still being discovered each quarter.

- The majority of attacks originate externally and leverage weak or stolen credentials. The pivoting that follows the initial compromise can be difficult to trace, because from that point on the attacker masquerades as an "insider." This further corroborates a high incidence of social engineering attacks and highlights the need for cyber security training at all levels of an organization.

- The majority of incidents affecting industrial systems are unintentional, with control and software bugs accounting for most of these unintentional incidents.

- New malware code samples are increasingly sophisticated, with an increase in rootkits and digitally signed malware.

- The percentage of reported industrial cyber incidents is high (28%), but has been steadily declining (65% in the last 5 years).

- AutoRun malware (typically deployed via USB flash drive or similar media) has also risen steadily. AutoRun malware is useful for bypassing network security perimeters, and has been successfully used in several known industrial cyber security incidents.

- Malware and "Hacking as a Service" offerings are increasingly available and have become more prevalent. This includes a growing market of zero-day and other vulnerabilities "for sale."

- The number of incidents that are occurring via remote access methods has been steadily increasing over the past several years due to an increasing number of facilities that allow remote access to their industrial networks.

The attacks themselves tend to remain fairly straightforward. The most common initial vectors used against industrial systems include spear phishing, watering hole, and database injection methods. Highly targeted spear phishing (customized e-mails designed to trick readers into clicking a link, opening an attachment, or otherwise triggering malware) is extremely effective when Open Source Intelligence (OSINT) is used to facilitate social engineering. For example, spear phishing may exploit knowledge of the target corporation's organizational structure (e.g., an e-mail that masquerades as a legitimate message from an executive within the company) or of the local habits of employees (e.g., a mass e-mail promising discounted lunch coupons from a local eatery). The phishing e-mails often contain malicious attachments or direct their targets to malicious websites. The phished user is thereby infected and becomes the initial infection vector for a broader infiltration.
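One defensive counter to the executive-impersonation pattern described above is to flag inbound mail whose display name matches a known executive while the sending address lies outside the corporate domain. The sketch below uses the standard library's `email.utils.parseaddr` and assumes a hypothetical domain (`example.com`) and executive list. It is a single heuristic, not a complete anti-phishing control, and it would not catch the lunch-coupon style of lure.

```python
from email.utils import parseaddr

# HYPOTHETICAL values: the organization's own mail domain and its executives' names.
CORPORATE_DOMAIN = "example.com"
EXECUTIVES = {"jane smith", "raj patel"}

def looks_like_exec_spoof(from_header: str) -> bool:
    """Flag mail whose display name matches an executive but whose address is external."""
    display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    return display_name.strip().lower() in EXECUTIVES and domain != CORPORATE_DOMAIN

for header in (
    "Jane Smith <jane.smith@examp1e.net>",       # lookalike external domain
    '"Jane Smith" <jane.smith@example.com>',     # genuine internal sender
    "Lunch Deals <promo@eatery.example>",        # not an executive name
):
    print(header, "->", looks_like_exec_spoof(header))
```

An exact domain comparison is deliberately strict: legitimate mail from corporate subdomains would also be flagged, so a real deployment would need an allow-list.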

The payloads (the malware itself) range from freely available kits, such as Webattacker and torrents, to commercial malware, such as Zeus (ZBOT), Ghostnet (Ghostrat), Mumba (Zeus v3), and Mariposa. Attackers evade antivirus and other detection mechanisms by obfuscating their malware, which accounts for the high rate at which new malware samples are discovered. Many new samples are code variants of existing malware, created to evade common detection mechanisms such as antivirus and network intrusion prevention systems. This is one reason that Conficker, a worm first discovered in 2008, remained one of the top threats facing organizations, infecting as many as 12 million computers, until it began to decline in the first half of 2011.
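The link between obfuscation and the flood of new samples is easy to see with exact-match signatures. In this minimal sketch, assuming a hypothetical blocklist keyed on SHA-256 digests (real antivirus engines use many additional techniques), a one-byte change to a benign stand-in for a sample produces a completely different digest, so the "new" variant is not matched.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Hex SHA-256 digest, the kind of exact-match signature a simple blocklist stores."""
    return hashlib.sha256(data).hexdigest()

# Benign stand-in bytes; HYPOTHETICAL, not real malware.
original = b"example sample bytes"
# A trivial "variant": the same content with one padding byte appended.
variant = original + b"\x00"

# HYPOTHETICAL blocklist built from the digest of the known sample.
known_bad = {sha256_hex(original)}

print(sha256_hex(original) in known_bad)  # True: the exact copy is caught
print(sha256_hex(variant) in known_bad)   # False: the one-byte change is missed
```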

Once a network is infiltrated and a system infected, malware will attempt to propagate to other systems. When attacking industrial networks, this propagation will include techniques for pivoting to new systems with increasing levels of authorization, until a system is found with access to lower integration "levels." That is, a system in level 4 will attempt to find active connectivity to level 3; level 3 to level 2, and so on. Once connectivity is discovered between levels, the attacker will use the first infected system to attack and infiltrate the second system, burrowing deeper into the industrial areas of the network in what is called "pivoting." This is why strong defense-in-depth is important. A firewall may only allow traffic from system A to system B. Encryption between the systems may be used. However, if system A is compromised, the attacker will be able to communicate freely across the established and authorized flow. This method can be thought of as the "exploitation of trust" and requires additional security measures to protect against such attack vectors.
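Because pivoting rides on flows that are already authorized, a defender can estimate how far a single compromise could spread by treating the permitted connections as a directed graph and asking what is reachable from a given host. The breadth-first search below assumes a hypothetical, hand-written flow table; in practice the table would be derived from firewall rules and observed traffic, and reachability alone ignores whether each hop is actually exploitable.

```python
from collections import deque

# HYPOTHETICAL permitted flows (source -> destinations), e.g., as allowed by firewall rules.
ALLOWED_FLOWS = {
    "corp-laptop": ["intranet-web"],
    "intranet-web": ["historian-dmz"],
    "historian-dmz": ["historian-l3"],
    "historian-l3": ["scada-server"],
    "scada-server": ["plc-07"],
    "hmi-01": ["plc-07"],
}

def reachable_from(start: str, flows: dict[str, list[str]]) -> dict[str, list[str]]:
    """Breadth-first search over authorized flows; maps each reachable host to a pivot path."""
    paths = {start: [start]}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        for nxt in flows.get(host, []):
            if nxt not in paths:  # first visit is a shortest path in hop count
                paths[nxt] = paths[host] + [nxt]
                queue.append(nxt)
    return paths

paths = reachable_from("corp-laptop", ALLOWED_FLOWS)
for host, path in paths.items():
    print(f"{host}: {' -> '.join(path)}")
```

Each printed path is a chain of individually authorized flows, which is exactly the "exploitation of trust" described above: no single rule looks dangerous, but together they connect a business laptop to a controller.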

APTs AND WEAPONIZED MALWARE

More sophisticated cyber-attacks against an industrial system will most likely take steps to remain hidden, because a good deal of propagation may be needed to reach the intended target. Malware attempts to operate covertly and may try to deactivate or circumvent anti-malware software, install persistent rootkits, delete trace files, and use other means to stay undetected before establishing backdoor channels for remote access, opening holes in firewalls, or otherwise spreading through the target network. Stuxnet, for example, attempted to avoid discovery by bypassing host intrusion detection (using zero-day exploits that were not detectable by traditional IDS/IPS before their discovery, and by using various autorun and network-based vectors), disguised itself as legitimate software (through the use of stolen digital certificates), and then covered its tracks by removing trace files from systems if they were no longer needed or if they resided on systems that were incompatible with its payload. As an extra precaution, and to further evade detection, Stuxnet would automatically remove itself from a host that was not the intended target once it had infected other hosts a specific number of times.

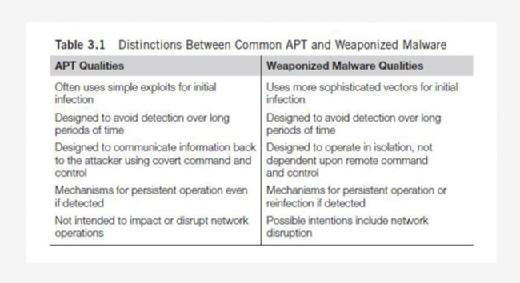

By definition, Stuxnet and many other modern malware samples are considered "Advanced Persistent Threats" (APT). One aspect of an APT is that the malware utilized is often difficult to detect and has measures to establish persistence, so that it can continue to operate even if it is detected and removed or the system is rebooted. The term APT also describes cyber campaigns where the attacker is actively infiltrating systems and exfiltrating data from one or more targets. The attacker could be using persistent malware or other methods of persistence, such as the reinfection of systems and use of multiple parallel infiltration vectors and methods, to ensure broad and consistent success. Examples of other APTs and persistent campaigns against industrial networks include Duqu, Night Dragon, Flame, and the oil and natural gas pipeline intrusion campaign.

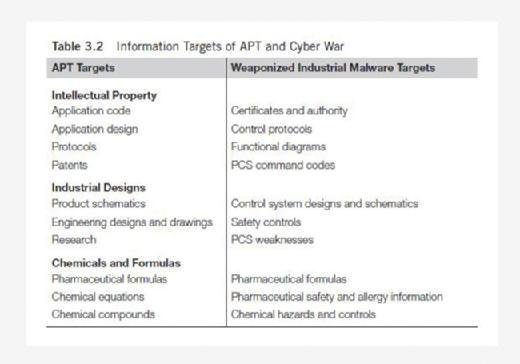

Malware can be considered "weaponized" when it reaches a certain degree of sophistication and shows a clear motive and intent. The qualities of APTs and weaponized malware differ, as does the information that the malware targets, as can be seen in Tables 3.1 and 3.2. While many APTs use simple methods, weaponized malware (also referred to as military-grade malware) trends toward more sophisticated delivery mechanisms and payloads. Stuxnet is, again, a useful example of weaponized malware. It is highly sophisticated (by far the most sophisticated malware when it was first discovered) and also extremely targeted. It had a clear purpose: to discover, infiltrate, and sabotage a specific target system. Stuxnet used multiple zero-day exploits for infection. Developing even one zero-day requires considerable resources: either the financial means to purchase commercial malware or the expertise to develop new malware. Stuxnet raised a high degree of speculation about its source and its intent, at least partly because of the resources required to deliver the worm through so many zero-days. Stuxnet also used "insider intelligence" to focus on its target control system, which again implied that its creators had significant resources and that they either had access to an industrial control system with which to develop and test their malware, or knew enough about how such a control system was built to develop it in a simulated environment.

The developers of Stuxnet could have used stolen intellectual property (the primary target of the APT) to develop a more weaponized piece of malware. In other words, a cyber-attack that is initially classified as "information theft" may seem relatively benign, but it may also be the logical precursor to weaponized code. Some other recent examples of weaponized malware include Shamoon, as well as the previously mentioned Duqu and Flame campaigns.

Details surrounding the Duqu and Pipeline Intrusion campaigns remain restricted at this time, and are not appropriate for this book. A great deal can be learned from Night Dragon and Stuxnet, as they both have components that specifically relate to industrial systems.

Night Dragon

In February 2011, McAfee announced the discovery of a series of coordinated attacks against oil, energy, and petrochemical companies. The attacks, which originated primarily in China, were believed to have commenced in 2009, operating continuously and covertly for the purpose of information extraction [30], as is indicative of an APT.

Night Dragon is further evidence of how an outside attacker can (and will) infiltrate critical systems once able to masquerade successfully as an insider. It began with SQL database injections against corporate, Internet-facing web servers. This initial compromise was used as a pivot to gain further access to internal intranet servers. Using standard tools, attackers gained additional credentials in the form of usernames and passwords, enabling further infiltration of internal desktop and server computers. Night Dragon established command and control (C2) servers as well as Remote Administration Toolkits (RATs), primarily to extract e-mail archives from executive accounts. Although, unlike Stuxnet, the attack did not result in sabotage, it did involve the theft of sensitive information, including information on operational oil and gas field production systems (including industrial control systems) and financial documents related to field exploration and bidding on oil and gas assets. The intended use of this information is unknown at this time; the stolen information could be used for almost anything, and for a variety of motives. None of the industrial control systems of the target companies were affected; however, in certain cases data collected from operational control systems were exfiltrated, all of which could be used in a later, more targeted attack. As with any APT, Night Dragon is surrounded by uncertainty and supposition. After all, an APT is an act of cyber espionage, one that may or may not develop into a more targeted cyber war.
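Night Dragon's entry point, SQL injection against Internet-facing web servers, is a well-understood class of flaw. The minimal sketch below uses Python's built-in `sqlite3` module and a hypothetical `users` table to contrast a query assembled by string formatting, which a crafted input can rewrite, with a parameterized query that treats the same input as data. It illustrates the vulnerability class only; nothing here reflects the specific servers or queries involved in Night Dragon.

```python
import sqlite3

# HYPOTHETICAL in-memory table; the plaintext password is for brevity only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")

def login_unsafe(name: str, password: str) -> bool:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT 1 FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None

def login_safe(name: str, password: str) -> bool:
    # Parameterized: values are bound separately, so quotes in the input are not SQL.
    query = "SELECT 1 FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone() is not None

payload = "' OR '1'='1"
print(login_unsafe("alice", payload))  # True: the injected OR clause bypasses the check
print(login_safe("alice", payload))    # False: the payload is compared as a literal string
```

In the unsafe version the input turns the WHERE clause into `(name = 'alice' AND password = '') OR '1'='1'`, which is true for every row.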

Stuxnet

Stuxnet is widely considered a "game changer" in the industry, because it was the first targeted, weaponized cyber-attack against an industrial control system. Prior to Stuxnet, it was still widely believed that industrial systems were either immune to cyber-attack (due to the obscurity and isolation of the systems) or not being targeted by hackers or other cyber threats. Proof-of-concept cyber-attacks, such as the Aurora project, were met with skepticism prior to Stuxnet. The pre-Stuxnet "threat" was largely considered to be limited to accidental infection of computing systems or the result of an insider threat. It is understandable, then, why Stuxnet was so widely publicized, and why it is still talked about today. Stuxnet proved many assumptions about industrial cyber threats to be wrong, and did so using malware that was far more sophisticated than anything seen before.

Today, it is obvious that industrial control systems are of interest to malicious actors, and that the systems are both accessible and vulnerable. Perhaps the most important lesson that Stuxnet taught us is that a cyber-attack is not limited to PCs and servers. While Stuxnet used many methods to exploit and penetrate Windows-based systems, it also proved that malware could alter an automation process by infecting systems within the ICS, overwriting process logic inside a controller, and hiding its activity from monitoring systems. Stuxnet is discussed in detail in Chapter 7, "Hacking Industrial Control Systems."

Advanced Persistent Threats and Cyber Warfare

One can draw two important inferences when comparing APTs and cyber warfare. The first is that cyber warfare is higher in sophistication and in consequence, mostly because of the resources available to the attacker and the ultimate goal of destruction rather than profit. The second is that many industrial networks offer less profit to a cyber-attacker than other targets do, so attacking them requires a different motive (i.e., socio-political). If the industrial network you are defending is largely responsible for commercial manufacturing, signs of an APT are likely evidence of attempted intellectual property theft. If the industrial network you are defending is critical and could potentially impact lives, signs of an APT could mean something larger, and extra caution should be taken when investigating and mitigating these attacks.

About the author: Eric D. Knapp is a globally recognized expert in industrial control systems cyber security, and continues to drive the adoption of new security technology in order to promote safer and more reliable automation infrastructures. He first specialized in industrial control cyber security while at NitroSecurity, where he focused on the collection and correlation of SCADA and ICS data for the detection of advanced threats against these environments. He was later responsible for the development and implementation of end-to-end ICS cyber security solutions for McAfee, Inc. in his role as Global Director for Critical Infrastructure Markets. He is currently the Director of Strategic Alliances for Wurldtech Security Technologies, where he continues to promote the advancement of embedded security technology in order to better protect SCADA, ICS and other connected, real-time devices. He is a long-time advocate of improved industrial control system cyber security and participates in many Critical Infrastructure industry groups, where he brings a wealth of technology expertise. He has over 20 years of experience in Information Technology, specializing in industrial automation technologies, infrastructure security, and applied Ethernet protocols as well as the design and implementation of Intrusion Prevention Systems and Security Information and Event Management systems in both enterprise and industrial networks. In addition to his work in information security, he is an award-winning author of fiction. He studied at the University of New Hampshire and the University of London. He can be found on Twitter @ericdknapp

Joel Langill brings a unique perspective to operational security with over three decades of field experience exclusively in industrial automation and control. He has deployed ICS solutions covering most major industry sectors in more than 35 countries, encompassing all generations of automated control from pneumatic to cloud-based services. He has been directly involved in automation solutions spanning feasibility, budgeting, front-end engineering design, detailed design, system integration, commissioning, support and legacy system migration. Joel is currently an independent consultant providing a range of services to ICS end-users, system integrators, and governmental agencies worldwide. He works closely with suppliers in both consulting and R&D roles, and has developed a specialized training curriculum focused on applied operational security and cyber defenses for industrial systems. Joel founded and maintains the popular ICS security website SCADAhacker.com, which offers visitors extensive resources in understanding, evaluating, and securing control systems. His website and social networks extend to readers in more than 100 countries globally. Joel devotes time to independent research relating to control system security, and regularly blogs on the evaluation and security of control systems. His unique experience and proven capabilities have fostered business relationships with several large industry firms. Joel serves on the Board of Advisors for Scada Fence Ltd., works with venture capital companies in evaluating industrial security start-up firms, and is an ICS research focal point to CERT organizations around the world. He has contributed to multiple books on security, and was the technical editor for “Applied Cyber Security and the Smart Grid”.
Joel is a voting member of the ISA99 committee on industrial security for control systems, and was a lead contributor to the ISA99 technical report on the Stuxnet malware. He has published numerous reports on ICS-related campaigns including Heartbleed, Dragonfly, and Black Energy. His certifications include: Certified Ethical Hacker (CEH), Certified Penetration Tester (CPT), Certified SCADA Security Architect (CSSA), and TÜV Functional Safety Engineer (FSEng). Joel has obtained extensive training through the U.S. Dept. of Homeland Security FEMA Emergency Management Institute, having completed ICS-400 on incident command and crisis management. He is a graduate of the University of Illinois-Champaign with a BS (Bronze Tablet) in Electrical Engineering. He can be found on Twitter @SCADAhacker