Implement API rate limiting to reduce attack surfaces

Rate limiting can help developers prevent APIs from being overwhelmed with requests, thus preventing denial-of-service attacks. Learn how to implement rate limiting here.

APIs, which connect applications to other applications and services, present attackers with a juicy target in their relentless search for vulnerable attack surfaces.

With the push toward DevSecOps, API security cannot be an afterthought. Developers must account for security during the API development lifecycle. To help developers out, Neil Madden, security director at ForgeRock, wrote API Security in Action. It covers all the techniques needed to secure APIs from a variety of attacks, including ones directed at IoT APIs.

In this excerpt from Chapter 3, Madden explained how to implement API rate limiting as one security measure. Rate limiting can help prevent denial-of-service (DoS) attacks and ensure availability. Download a PDF to read the rest of the chapter, which covers additional security methods, including authentication to prevent spoofing, HTTP Basic authentication, password storage and database creation.

Check out an interview with Madden, where he explained why he wrote the book specifically for developers, how to retrofit security for existing APIs and more.

In this chapter you'll go beyond basic functionality and see how proactive security mechanisms can be added to your API to ensure all requests are from genuine users and properly authorized. You'll protect the Natter API that you developed in chapter 2, applying effective password authentication using Scrypt, locking down communications with HTTPS, and preventing denial of service attacks using the Guava rate-limiting library.

3.1 Addressing threats with security controls

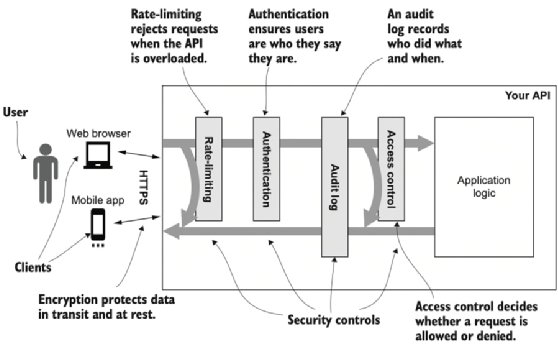

You'll protect the Natter API against common threats by applying some basic security mechanisms (also known as security controls). Figure 3.1 shows the new mechanisms that you'll develop, and you can relate each of them to a STRIDE threat (chapter 1) that they prevent:

- Rate-limiting is used to prevent users overwhelming your API with requests, limiting denial of service threats.

- Encryption ensures that data is kept confidential when sent to or from the API and when stored on disk, preventing information disclosure. Modern encryption also prevents data being tampered with.

- Authentication makes sure that users are who they say they are, preventing spoofing. This is essential for accountability, but also a foundation for other security controls.

- Audit logging is the basis for accountability, to prevent repudiation threats.

- Finally, you'll apply access control to preserve confidentiality and integrity, preventing information disclosure, tampering and elevation of privilege attacks.

NOTE An important detail, shown in figure 3.1, is that only rate-limiting and access control directly reject requests. A failure in authentication does not immediately cause a request to fail, but a later access control decision may reject a request if it is not authenticated. This is important because we want to ensure that even failed requests are logged, which they would not be if the authentication process immediately rejected unauthenticated requests.

Together these five basic security controls address the six basic STRIDE threats of spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege that were discussed in chapter 1. Each security control is discussed and implemented in the rest of this chapter.

3.2 Rate-limiting for availability

Threats against availability, such as denial of service (DoS) attacks, can be very difficult to prevent entirely. Such attacks are often carried out using hijacked computing resources, allowing an attacker to generate large amounts of traffic with little cost to themselves. Defending against a DoS attack, on the other hand, can require significant resources, costing time and money. But there are several basic steps you can take to reduce the opportunity for DoS attacks.

DEFINITION A Denial of Service (DoS) attack aims to prevent legitimate users from accessing your API. This can include physical attacks, such as unplugging network cables, but more often involves generating large amounts of traffic to overwhelm your servers. A distributed DoS (DDoS) attack uses many machines across the internet to generate traffic, making it harder to block than a single bad client.

Many DoS attacks are carried out using unauthenticated requests. One simple way to limit these kinds of attacks is to never let unauthenticated requests consume resources on your servers. Authentication is covered in section 3.3 and should be applied immediately after rate-limiting, before any other processing. However, authentication itself can be expensive, so this doesn't eliminate DoS threats on its own.

NOTE Never allow unauthenticated requests to consume significant resources on your server.

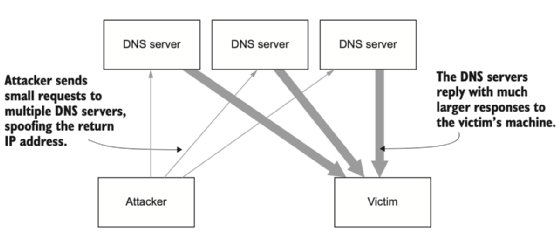

Many DDoS attacks rely on some form of amplification so that an unauthenticated request to one API results in a much larger response that can be directed at the real target. A popular example is the DNS amplification attack, which takes advantage of the unauthenticated Domain Name System (DNS) that maps host and domain names into IP addresses. By spoofing the return address for a DNS query, an attacker can trick the DNS server into flooding the victim with responses to DNS requests that they never sent. If enough DNS servers can be recruited into the attack, then a very large amount of traffic can be generated from a much smaller amount of request traffic, as shown in figure 3.2. By sending requests from a network of compromised machines (known as a botnet), the attacker can generate very large amounts of traffic to the victim at little cost to themselves. DNS amplification is an example of a network-level DoS attack.

These attacks can be mitigated by filtering out harmful traffic entering your network using a firewall. Very large attacks can often only be handled by specialist DoS protection services provided by companies that have enough network capacity to handle the load.

TIP Amplification attacks usually exploit weaknesses in protocols based on UDP (User Datagram Protocol), which are popular in the Internet of Things (IoT). Securing IoT APIs is covered in chapters 12 and 13.

Network-level DoS attacks can be easy to spot because the traffic is unrelated to legitimate requests to your API. Application-layer DoS attacks attempt to overwhelm an API by sending valid requests, but at much higher rates than a normal client. A basic defense against application-layer DoS attacks is to apply rate-limiting to all requests, ensuring that you never attempt to process more requests than your server can handle. It is better to reject some requests in this case than to crash trying to process everything. Genuine clients can retry their requests later when the system has returned to normal.

DEFINITION Application-layer DoS attacks (also known as layer-7 or L7 DoS) send syntactically valid requests to your API but try to overwhelm it by sending a very large volume of requests.

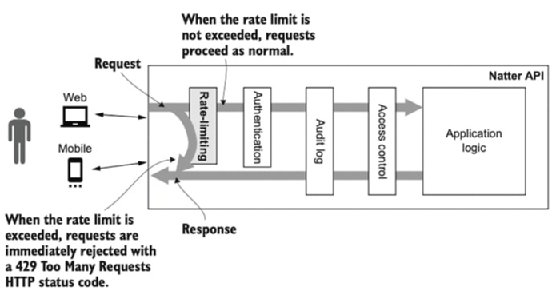

Rate-limiting should be the very first security decision made when a request reaches your API. Because the goal of rate-limiting is ensuring that your API has enough resources to be able to process accepted requests, you need to ensure that requests that exceed your API's capacities are rejected quickly and very early in processing. Other security controls, such as authentication, can use significant resources, so rate-limiting must be applied before those processes, as shown in figure 3.3.
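The ordering described above can be sketched in a few lines of Java. This is only an illustration under stated assumptions: the class `FilterOrderSketch`, its `handle` method, and the counter are hypothetical names invented here, and the rate-limit check is passed in as a plain `BooleanSupplier` rather than a real limiter. It shows that when the cheap rate-limit check runs first, a rejected request never triggers the expensive authentication step.

```java
import java.util.function.BooleanSupplier;

// Sketch of filter ordering: the cheap rate-limit check runs first, so a
// rejected request never reaches the (expensive) authentication step.
// All names are hypothetical and are not taken from the Natter API.
final class FilterOrderSketch {
    private final BooleanSupplier rateLimitCheck; // true = request may proceed
    int authenticationCalls = 0;                  // counts expensive work performed

    FilterOrderSketch(BooleanSupplier rateLimitCheck) {
        this.rateLimitCheck = rateLimitCheck;
    }

    /** Returns the HTTP status code the request would receive. */
    int handle(String credentials) {
        if (!rateLimitCheck.getAsBoolean()) {
            return 429; // rejected before any other processing happens
        }
        boolean authenticated = authenticate(credentials);
        // A later access control decision, not authentication itself,
        // rejects the unauthenticated request.
        return authenticated ? 200 : 401;
    }

    private boolean authenticate(String credentials) {
        authenticationCalls++; // stands in for costly password hashing
        return "valid".equals(credentials);
    }

    public static void main(String[] args) {
        FilterOrderSketch overloaded = new FilterOrderSketch(() -> false);
        System.out.println(overloaded.handle("valid"));     // prints 429
        System.out.println(overloaded.authenticationCalls); // prints 0
    }
}
```

If the two checks were swapped, every rejected request would still pay the authentication cost, which is exactly the load that rate-limiting is meant to shed.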

TIP You should implement rate-limiting as early as possible, ideally at a load balancer or reverse proxy before requests even reach your API servers. Rate-limiting configuration varies from product to product. See https://medium.com/faun/understanding-rate-limiting-on-haproxy-b0cf500310b1 for an example of configuring rate-limiting for the open source HAProxy load balancer.

3.2.1 Rate-limiting with Guava

Often rate-limiting is applied at a reverse proxy, API gateway, or load balancer before the request reaches the API, so that it can be applied to all requests arriving at a cluster of servers. By handling this at a proxy server, you also avoid excess load being generated on your application servers. In this example you'll apply simple rate-limiting in the API server itself using Google's Guava library. Even if you enforce rate-limiting at a proxy server, it is good security practice to also enforce rate limits in each server so that if the proxy server misbehaves or is misconfigured, it is still difficult to bring down the individual servers. This is an instance of the general security principle known as defense in depth, which aims to ensure that no failure of a single mechanism is enough to compromise your API.

DEFINITION The principle of defense in depth states that multiple layers of security defenses should be used so that a failure in any one layer is not enough to breach the security of the whole system.

As you'll now discover, there are libraries available to make basic rate-limiting very easy to add to your API, while more complex requirements can be met with off-the-shelf proxy/gateway products. Open the pom.xml file in your editor and add the following dependency to the dependencies section:

    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
      <version>29.0-jre</version>
    </dependency>

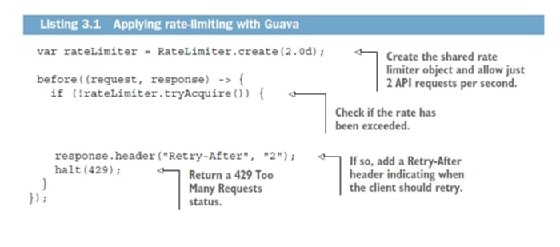

Guava makes it very simple to implement rate-limiting using the RateLimiter class, which lets you define the rate of requests per second you want to allow. You can then either block and wait until the rate reduces, or simply reject the request, as the next listing does. The standard HTTP 429 Too Many Requests status code can be used to indicate that rate-limiting has been applied and that the client should try the request again later. You can also send a Retry-After header to indicate how many seconds the client should wait before trying again. Set a low limit of 2 requests per second to make it easy to see it in action. The rate limiter should be the very first filter defined in your main method, because even authentication and audit logging may consume resources.

TIP The rate limit for individual servers should be a fraction of the overall rate limit you want your service to handle. If your service needs to handle a thousand requests per second, and you have 10 servers, then the per-server rate limit should be around 100 requests per second. You should verify that each server is able to handle this maximum rate.
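The arithmetic in this tip is simple enough to capture in a helper. The sketch below assumes traffic is spread evenly across servers, which a real load balancer may not guarantee. The class and method names are hypothetical.

```java
// Sketch: derive a per-server rate limit from a service-wide target.
// Assumes requests are distributed evenly; names are hypothetical.
final class PerServerRateLimit {

    /** Splits the overall requests-per-second budget evenly across servers. */
    static double perServer(double totalRequestsPerSecond, int serverCount) {
        if (serverCount <= 0) {
            throw new IllegalArgumentException("serverCount must be positive");
        }
        return totalRequestsPerSecond / serverCount;
    }

    public static void main(String[] args) {
        // The figures from the tip: 1,000 requests per second over 10 servers.
        System.out.println(perServer(1000.0, 10)); // prints 100.0
    }
}
```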

Open the Main.java file in your editor and add an import for Guava to the top of the file:

import com.google.common.util.concurrent.*;

Then, in the main method, after initializing the database and constructing the controller objects, add the code in listing 3.1 to create the RateLimiter object and add a filter to reject any requests once the rate limit has been exceeded. We use the non-blocking tryAcquire() method that returns false if the request should be rejected.

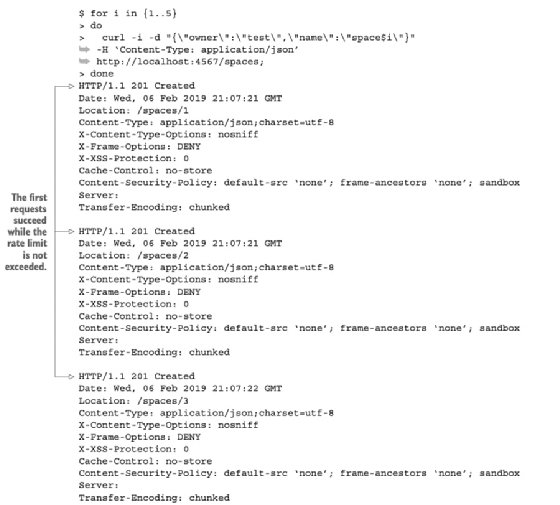

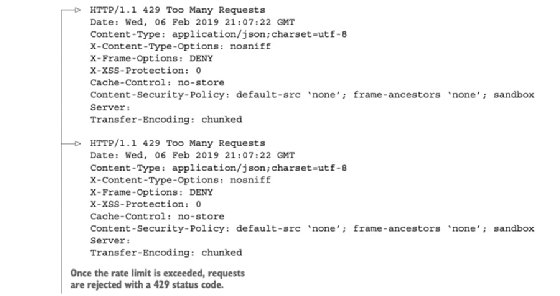
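As a framework-neutral illustration of the reject-early logic, the following sketch uses only the JDK. It is not the book's listing and not Guava's implementation: `SimpleRateLimiter` is a hypothetical stand-in that mimics a non-blocking `tryAcquire()`, `SketchResponse` is an invented holder for a status code and headers, and the Retry-After value of 2 seconds is an arbitrary choice. The clock is injectable so the behavior can be checked without sleeping.

```java
import java.util.Collections;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical stand-in for a requests-per-second limiter, JDK only.
// It is NOT Guava's RateLimiter; it only mimics a non-blocking tryAcquire().
final class SimpleRateLimiter {
    private final long intervalNanos; // minimum spacing between permits
    private final LongSupplier clock; // injectable time source, in nanoseconds
    private long nextFreeNanos;       // earliest time the next permit is free

    SimpleRateLimiter(double permitsPerSecond, LongSupplier clock) {
        this.intervalNanos = (long) (1_000_000_000L / permitsPerSecond);
        this.clock = clock;
        this.nextFreeNanos = clock.getAsLong();
    }

    /** Reserves a permit and returns true if one is free now, else returns false. */
    synchronized boolean tryAcquire() {
        long now = clock.getAsLong();
        if (now - nextFreeNanos < 0) { // subtraction is safe for nanoTime values
            return false;
        }
        nextFreeNanos = now + intervalNanos;
        return true;
    }
}

// Invented response holder: an HTTP status code plus response headers.
final class SketchResponse {
    final int status;
    final Map<String, String> headers;

    SketchResponse(int status, Map<String, String> headers) {
        this.status = status;
        this.headers = headers;
    }
}

final class RateLimitFilterSketch {
    private static final String RETRY_AFTER_SECONDS = "2"; // arbitrary choice
    private final SimpleRateLimiter limiter;

    RateLimitFilterSketch(SimpleRateLimiter limiter) {
        this.limiter = limiter;
    }

    /** Rejects with 429 and a Retry-After hint, otherwise lets the request pass. */
    SketchResponse filter() {
        if (!limiter.tryAcquire()) {
            return new SketchResponse(429,
                    Collections.singletonMap("Retry-After", RETRY_AFTER_SECONDS));
        }
        return new SketchResponse(200, Collections.emptyMap());
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock so the demo is deterministic
        RateLimitFilterSketch filter =
                new RateLimitFilterSketch(new SimpleRateLimiter(2.0, () -> now[0]));
        System.out.println(filter.filter().status); // prints 200
        System.out.println(filter.filter().status); // prints 429
        now[0] = 500_000_000L;                      // half a second later
        System.out.println(filter.filter().status); // prints 200
    }
}
```

In a real server you would pass `System::nanoTime` as the clock, or use Guava's RateLimiter as the chapter does.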

Guava's rate limiter is quite basic, defining only a simple requests-per-second rate. It has some additional features, such as being able to consume more permits for more expensive API operations, but it lacks more advanced ones, such as being able to cope with occasional bursts of activity. Even so, it's perfectly fine as a basic defensive measure that can be incorporated into an API in a few lines of code. You can try it out on the command line to see it in action.

By returning a 429 response immediately, you can limit the amount of work that your API is performing to the bare minimum, allowing it to use those resources for serving the requests that it can handle. The rate limit should always be set below what you think your servers can handle, to give some wiggle room.
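Two limiter behaviors mentioned earlier in this section, charging more permits for expensive operations and tolerating occasional bursts, can be illustrated with a small token bucket. This is a sketch of that general technique written with only the JDK, not a description of how Guava works internally. The class name, the capacity parameter, and the injectable clock are all assumptions made for the example.

```java
import java.util.function.LongSupplier;

// Hypothetical token bucket, JDK only. Tokens refill at a steady rate up to
// a fixed capacity, so an idle period "saves up" room for a short burst.
final class TokenBucketSketch {
    private final double permitsPerSecond; // steady refill rate
    private final double capacity;         // maximum burst size, in permits
    private final LongSupplier clock;      // injectable time source, in nanoseconds
    private double tokens;                 // permits currently available
    private long lastRefillNanos;

    TokenBucketSketch(double permitsPerSecond, double capacity, LongSupplier clock) {
        this.permitsPerSecond = permitsPerSecond;
        this.capacity = capacity;
        this.clock = clock;
        this.tokens = capacity; // start full so an initial burst is allowed
        this.lastRefillNanos = clock.getAsLong();
    }

    /** Takes the given number of permits if available; never blocks. */
    synchronized boolean tryAcquire(int permits) {
        if (permits <= 0) {
            throw new IllegalArgumentException("permits must be positive");
        }
        long now = clock.getAsLong();
        double refill = (now - lastRefillNanos) * permitsPerSecond / 1_000_000_000.0;
        tokens = Math.min(capacity, tokens + refill);
        lastRefillNanos = now;
        if (tokens < permits) {
            return false; // not enough permits: the caller should reject
        }
        tokens -= permits;
        return true;
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock so the demo is deterministic
        TokenBucketSketch bucket = new TokenBucketSketch(2.0, 4.0, () -> now[0]);
        System.out.println(bucket.tryAcquire(1)); // prints true (cheap request)
        System.out.println(bucket.tryAcquire(3)); // prints true (expensive request)
        System.out.println(bucket.tryAcquire(1)); // prints false (bucket is empty)
        now[0] = 1_000_000_000L;                  // one second later: 2 permits refilled
        System.out.println(bucket.tryAcquire(2)); // prints true
    }
}
```

The capacity bounds how large a burst can be, while the refill rate bounds sustained load, so both should stay below what your servers can handle.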

About the author

Neil Madden is security director at ForgeRock and has an in-depth knowledge of applied cryptography, application security and current API security technologies. He has worked as a programmer for 20 years and holds a Ph.D. in computer science.

https://www.manning.com/books/api-security-in-action?a_aid=api_security_in_action&a_bid=6806e3b6