The role of container networking in DevOps

Containerization isn't just for DevOps teams. Network engineers often set up container networks, ensure connectivity between containers and work with container networking tools.

Containerization revolutionized how developers design, deploy and maintain applications in modern software systems. As more organizations adopt microservices architecture, containerization tools have become staples in DevOps practices. This has led to a growing number of network engineers working with container networking environments to manage the networks that support those containers.

Before containers, DevOps engineers tasked with deploying an application to a production server faced several challenges, such as the following:

- OS compatibility.

- Inconsistency with library versions.

- Insufficient permissions.

- The "works on my machine" dilemma.

These challenges resulted in slow deployment cycles, increased Opex and unpredictable behaviors.

Containers package everything required to run applications into a single executable package -- the container. This process ensures the application runs consistently across any environment, such as a developer's machine, on-premises server or the cloud, minimizing the risks of unexpected behaviors and platform-specific issues.

Even though containers simplify deployment, they don't function in isolation. They must communicate with other containers over internal networks and with external services across multiple environments. This is where container networking plays a crucial role.

Core concepts of container networking

The following concepts are critical to understanding container networking.

1. Network namespaces

Containers run in isolated spaces known as namespaces. Each namespace has its own routing tables, network interfaces and firewall rules. This prevents different containers from accessing each other unless configured to do so. A namespace creates distinct network environments per pod or container.

2. Virtual Ethernet interfaces

Virtual Ethernet (veth) pairs create links between namespaces and the host network. Each pair has two ends: one end attached to the container namespace and the other to the host network bridge. This enables data to pass between the host and container for external connectivity.
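As a rough illustration of how namespaces and veth pairs fit together, the following Python sketch builds the iproute2 commands that would wire one container namespace to a host bridge. It only prints the commands rather than running them, because they require root privileges on a Linux host. The namespace name, interface names, address and the bridge `br0` (assumed to exist already) are hypothetical.

```python
def veth_setup_commands(netns, host_if, ctr_if, bridge, ctr_addr):
    """Return iproute2 commands (as argument lists) that link a namespace to a bridge."""
    return [
        # Create the isolated network namespace for the container.
        ["ip", "netns", "add", netns],
        # Create the veth pair: two connected virtual interfaces.
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ctr_if],
        # Move one end of the pair into the container namespace.
        ["ip", "link", "set", ctr_if, "netns", netns],
        # Attach the other end to the host bridge and bring it up.
        ["ip", "link", "set", host_if, "master", bridge],
        ["ip", "link", "set", host_if, "up"],
        # Assign an address to the container end and bring it up inside the namespace.
        ["ip", "netns", "exec", netns, "ip", "addr", "add", ctr_addr, "dev", ctr_if],
        ["ip", "netns", "exec", netns, "ip", "link", "set", ctr_if, "up"],
    ]


if __name__ == "__main__":
    # Hypothetical names and address, for illustration only.
    for cmd in veth_setup_commands("demo-ns", "veth-host", "veth-ctr", "br0", "10.0.0.2/24"):
        print(" ".join(cmd))
```

Container runtimes and CNI plugins perform equivalent steps automatically, so this is only meant to show the moving parts.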

3. Service discovery

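Service discovery facilitates container-to-container communication by mapping container or service names to IP addresses, typically through DNS. Because callers use names rather than fixed addresses, the application can scale and containers can be replaced without breaking connectivity.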

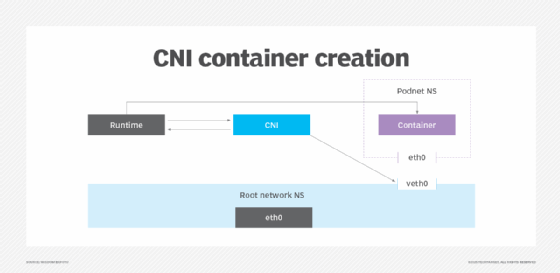
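The idea behind service discovery can be sketched in a few lines of Python. This toy registry is an assumption for illustration; real platforms typically implement it with DNS. The service name and IP addresses are hypothetical.

```python
class ServiceRegistry:
    """Toy name-to-address registry; real systems typically use DNS."""

    def __init__(self):
        self._records = {}

    def register(self, name, ip):
        # Overwrites any previous address, as when a container is recreated.
        self._records[name] = ip

    def deregister(self, name):
        self._records.pop(name, None)

    def resolve(self, name):
        try:
            return self._records[name]
        except KeyError:
            raise LookupError(f"no record for service {name!r}") from None


registry = ServiceRegistry()
registry.register("app-backend", "10.0.0.12")
# The container is recreated with a new IP; callers keep using the same name.
registry.register("app-backend", "10.0.0.47")
print(registry.resolve("app-backend"))  # prints 10.0.0.47
```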

4. Container Network Interface

Container Network Interface (CNI) is a Cloud Native Computing Foundation (CNCF) project. It consists of a specification and a set of libraries for writing plugins that configure network interfaces in Linux and Windows containers. CNI allocates networking resources when containers are created and removes them when the containers are deleted.

CNI standardizes how a container runtime interacts with various Kubernetes network plugins to create and enable networking configurations for containers. CNI also provides standardization for container orchestrators, such as Kubernetes, to integrate with various networking tools and plugins. This standardization ensures consistent networking.

When a container needs networking, the container runtime invokes CNI, which configures interfaces, routes and network namespaces. CNI then returns the resulting configuration to the container runtime, which launches the container. When the container finishes or is deleted, the runtime invokes CNI again for resource cleanup.
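The runtime-to-plugin handoff can be sketched as follows. Under the CNI specification, a runtime passes parameters to a plugin binary through environment variables such as `CNI_COMMAND` and sends the network configuration as JSON on standard input. This Python sketch only builds those inputs and does not execute a plugin. The container ID, namespace path, network name, plugin directory and subnet are assumptions for illustration.

```python
import json


def cni_invocation(command, container_id, netns_path, ifname="eth0"):
    """Return (env, stdin_json) that a runtime would pass to a CNI plugin binary."""
    env = {
        "CNI_COMMAND": command,           # ADD when the container is created, DEL on cleanup
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,
        "CNI_IFNAME": ifname,
        "CNI_PATH": "/opt/cni/bin",       # assumed plugin directory
    }
    network_config = {
        "cniVersion": "1.0.0",
        "name": "demo-net",               # hypothetical network name
        "type": "bridge",                 # the reference bridge plugin
        "bridge": "cni0",
        "ipam": {"type": "host-local", "subnet": "10.22.0.0/16"},
    }
    return env, json.dumps(network_config)


# Hypothetical container ID and namespace path.
add_env, config = cni_invocation("ADD", "abc123", "/var/run/netns/abc123")
del_env, _ = cni_invocation("DEL", "abc123", "/var/run/netns/abc123")
print(add_env["CNI_COMMAND"], del_env["CNI_COMMAND"])  # prints ADD DEL
```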

Common container networking models

The following are common container networking models that teams can use:

- Bridge networking, or local namespaced network.

- Host networking, or direct host interface.

- Overlay networking, or multihost networking.

1. Bridge networking (local namespaced network)

In this model, a host isolates a container network by creating a bridge. This model is ideal for local development, single-host environments and situations where intercontainer traffic doesn't need to route across multiple hosts. Port mapping can also extend the bridge to externally expose the services.

In Kubernetes, the bridge networking model isn't used directly. Instead, Kubernetes networking operates at a higher level of abstraction and is mostly managed by CNI plugins. Thus, the following example explores how to create an isolated bridge network in Docker.

Example

Docker creates a virtual bridge -- docker0 -- by default. This enables internal communication through IP addresses from a private network range and Network Address Translation (NAT) for outbound traffic.

    # Create a custom bridge network
    docker network create --driver bridge my_bridge_network

    # Run containers on the bridge
    docker run -d --network my_bridge_network --name container1 nginx
    docker run -d --network my_bridge_network --name container2 nginx

    # Inspect the bridge network details
    docker network inspect my_bridge_network
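To make the "private network range" point concrete, here is a small Python sketch that checks whether container addresses fall inside a bridge subnet. It assumes the subnet 172.17.0.0/16, which Docker's default bridge commonly uses; a custom bridge gets its own subnet, which `docker network inspect` reports. The container addresses are hypothetical.

```python
import ipaddress

# Assumed subnet: Docker's default bridge commonly uses 172.17.0.0/16.
bridge_subnet = ipaddress.ip_network("172.17.0.0/16")

# Hypothetical container addresses.
containers = {"container1": "172.17.0.2", "container2": "172.17.0.3"}


def on_bridge(ip, subnet=bridge_subnet):
    """Return True if the address falls inside the bridge subnet."""
    return ipaddress.ip_address(ip) in subnet


for name, ip in containers.items():
    addr = ipaddress.ip_address(ip)
    print(name, ip, "on bridge:", on_bridge(ip), "private:", addr.is_private)
```

Containers whose addresses share the bridge subnet can reach each other directly, while traffic leaving the host goes through NAT.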

2. Host networking (direct host interface)

Host networking removes the network isolation found in bridge networking. It maps the container directly to the host's network namespace, thereby bypassing NAT. This model is suitable for high-performance applications where low latency is crucial and isolation isn't a major concern.

Example 1

Here's how to use host networking in Docker.

    # Run container with host networking
    docker run -d --network host --name web nginx

Example 2

In Kubernetes, set host networking in the pod spec. Setting hostNetwork: true maps the pod's network directly to the node.

    apiVersion: v1
    kind: Pod
    metadata:
      name: host-network-pod
    spec:
      hostNetwork: true
      containers:
      - name: nginx
        image: nginx

3. Overlay networking (multihost networking)

Overlay networking enables communication between containers across multiple hosts by encapsulating traffic, commonly with Virtual Extensible LAN (VXLAN). Kubernetes and Docker Swarm clusters use this model for intercontainer communications across nodes.

This model is best suited for highly distributed applications. In Kubernetes, CNI plugins such as Cilium manage overlay networking, often at Layer 3.
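To show what encapsulation means in practice, the following Python sketch adds and removes the 8-byte VXLAN header defined in RFC 7348. It is a simplified illustration: a real overlay also wraps the result in outer UDP, IP and Ethernet headers, which are omitted here. The VXLAN network identifier (VNI) 42 and the inner frame bytes are placeholders.

```python
import struct

VXLAN_UDP_PORT = 4789         # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08   # the "I" flag: the VNI field is valid


def vxlan_encapsulate(vni, inner_frame):
    """Prefix an inner Ethernet frame with the 8-byte VXLAN header."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet):
    """Return (vni, inner_frame) from a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, packet[8:]


payload = vxlan_encapsulate(42, b"inner-ethernet-frame")
print(vxlan_decapsulate(payload))  # prints (42, b'inner-ethernet-frame')
```

The VNI plays a role similar to a VLAN ID: it keeps traffic from different virtual networks separate while they share the same physical network.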

Designing container networks

The process of designing container networks aligns traditional networking elements with the scalable needs of containerized environments. These elements include the following:

- Existing network infrastructure.

- Software-defined networking (SDN).

- Network policies.

Existing network infrastructure

In container network design, it's crucial to ensure smooth integration with the preexisting network infrastructure. This is especially critical for legacy systems and other external services.

When applications need to communicate with legacy databases or applications hosted in on-premises data centers, engineers must configure a reliable, high-performance path between the container network and those systems. Techniques include VPN tunneling or direct interconnects.

SDN

SDN abstracts the networking layer and decouples the control plane from the data plane, giving teams a programmable, centralized control plane. That control plane manages data flow between containers, whether within a cluster or across multiple clusters. This separation enables DevOps and network engineers to control the network traffic programmatically and respond to network demand changes as they occur.

SDN controllers define network behavior through high-level policies. They dynamically assign IP addresses, enforce policies and manage routes across containers. In hybrid and multi-cloud environments, SDN manages networking across infrastructures by abstracting the hardware layer.

Network policies

Network policies specify how pods communicate with one another and external services. They are also crucial to network security and are especially necessary when services are in the same physical network infrastructure.

In Kubernetes, network engineers apply policies at the namespace or pod level. Network policies help engineers control the flow of traffic between pods in the cluster and move toward a zero-trust architecture in containerized environments. Tools like Cilium or Istio support zero trust by requiring components to authenticate and be authorized before they communicate, even internally.

Example

This example shows how to enable traffic from specific pods only. Assume there are two applications: app-frontend and app-backend. Both run in the same Kubernetes namespace. Below is a sample Cilium network policy that allows traffic to app-backend only from app-frontend.

    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    metadata:
      name: "allow-frontend-to-backend"
    spec:
      description: "Allows traffic from app-frontend to app-backend."
      endpointSelector:
        matchLabels:
          app: app-backend
      ingress:
      - fromEndpoints:
        - matchLabels:
            app: app-frontend
        toPorts:
        - ports:
          - port: "80"
            protocol: TCP
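The effect of the policy above can be approximated with a short Python sketch of label-based matching. It illustrates the allow-list logic only and does not represent Cilium's actual data model or enforcement path. The dictionary layout and function names are hypothetical.

```python
def labels_match(selector, labels):
    """Return True if every key/value in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Mirrors the shape of the policy above; not Cilium's actual data model.
policy = {
    "endpoint_selector": {"app": "app-backend"},
    "ingress": [
        {"from": {"app": "app-frontend"}, "ports": [("80", "TCP")]},
    ],
}


def is_allowed(policy, src_labels, dst_labels, port, protocol):
    """Evaluate ingress to a destination; selected endpoints deny by default."""
    if not labels_match(policy["endpoint_selector"], dst_labels):
        return True  # in this sketch, endpoints the policy does not select are unrestricted
    return any(
        labels_match(rule["from"], src_labels) and (str(port), protocol) in rule["ports"]
        for rule in policy["ingress"]
    )


backend = {"app": "app-backend"}
print(is_allowed(policy, {"app": "app-frontend"}, backend, 80, "TCP"))   # prints True
print(is_allowed(policy, {"app": "app-other"}, backend, 80, "TCP"))      # prints False
print(is_allowed(policy, {"app": "app-frontend"}, backend, 443, "TCP"))  # prints False
```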

Container networking challenges and solutions

While containers provide a certain level of flexibility, they also introduce new networking challenges. The following are some of the most common challenges and their solutions:

- IP address sprawl.

- Ensuring network isolation.

- Dynamic containerized workloads.

IP address sprawl

Each container receives a unique IP address when it is created. As the application grows in complexity, it might need more containers and, in turn, more IP addresses. Containers are dynamic in nature -- they're frequently created and destroyed -- which leads to rapid IP allocation and release. This can quickly spiral out of control, causing IP exhaustion and subnet overlap.

These challenges make it difficult for network engineers to keep track of and manage containers, especially in large-scale infrastructures. Ultimately, the result is poor networking performance and communication failure.

Solution

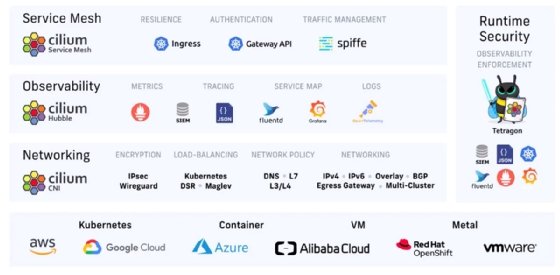

One way to address this challenge is to use Cilium, which supports IP address management. Cilium provides IP pooling, cluster-wide IP addressing and subnet-aware allocation, all of which minimize potential fragmentation. Doing so prevents IP exhaustion, subnet conflicts and IP overlap in high-churn, multi-cluster Kubernetes environments. Cilium also integrates virtual private cloud IP ranges, real-time monitoring and automated IP recycling.
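The ideas of pooling, recycling and overlap detection can be illustrated with Python's standard `ipaddress` module. This toy allocator is an assumption for illustration and is not how Cilium implements IP address management. The subnets and container IDs are hypothetical.

```python
import ipaddress


class IPPool:
    """Toy allocator that hands out and recycles addresses from one subnet."""

    def __init__(self, cidr):
        self.network = ipaddress.ip_network(cidr)
        self._free = list(self.network.hosts())  # usable host addresses
        self._used = {}

    def allocate(self, container_id):
        if not self._free:
            raise RuntimeError(f"pool {self.network} exhausted")
        ip = self._free.pop(0)
        self._used[container_id] = ip
        return ip

    def release(self, container_id):
        # Recycle the address so short-lived containers do not drain the pool.
        ip = self._used.pop(container_id)
        self._free.append(ip)


def overlapping(cidr_a, cidr_b):
    """Return True if two subnets share any addresses."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


pool = IPPool("10.0.0.0/30")          # a tiny pool with two usable addresses
first = pool.allocate("c1")
second = pool.allocate("c2")
pool.release("c1")                    # container c1 is destroyed
reused = pool.allocate("c3")          # its address is handed out again
print(first, second, reused)          # prints 10.0.0.1 10.0.0.2 10.0.0.1
print(overlapping("10.0.0.0/16", "10.0.1.0/24"))  # prints True
```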

Ensuring network isolation

All containers running on the same host network share the underlying network resources and infrastructure. If the network is not configured correctly, users can accidentally access other containers' data, which is supposed to be isolated. This also poses a lateral movement threat, in which a malicious actor who compromises one container might reach others. Network engineers must configure the network properly so that only intended communications between containers occur -- without compromising on performance.

Solution

For Kubernetes environments, implementing Kubernetes Network Policies at the pod level helps isolate workloads. Cilium can take it a step further and extend the standard Kubernetes network policy spec via its own policies.

These policies are identity-based and implement fine-grained rules at Layers 3, 4 and 7 of the OSI model. Once implemented, they enable control over intercontainer communication by explicitly specifying which pods, groups of pods (with the same labels), namespaces or clusters can communicate. Consider integrating policies with a service mesh if the application layer requires service-to-service isolation.

Dynamic containerized workloads

Due to the flexible and ephemeral nature of containers, it's challenging to maintain stable networking connections between services. Services might not know the current IP addresses of other services, which disrupts communication.

Solution

Introduce service discovery mechanisms so that, if a container's IP address changes, other services can still reach it using a stable DNS name. Load balancers can also help route traffic to the correct container, regardless of IP address.
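A minimal Python sketch of the load-balancing half of this solution follows. It assumes a simple round-robin strategy and a hypothetical `update` call that fires whenever containers are created or destroyed; production load balancers add health checks and other strategies. The IP addresses are placeholders.

```python
class RoundRobinBalancer:
    """Toy balancer: callers use pick(); the endpoint list can change underneath."""

    def __init__(self):
        self._endpoints = []
        self._next = 0

    def update(self, endpoints):
        # Called whenever containers are created or destroyed.
        self._endpoints = list(endpoints)
        self._next = 0

    def pick(self):
        if not self._endpoints:
            raise RuntimeError("no endpoints available")
        endpoint = self._endpoints[self._next % len(self._endpoints)]
        self._next += 1
        return endpoint


balancer = RoundRobinBalancer()
balancer.update(["10.0.0.12", "10.0.0.13"])
picks = [balancer.pick() for _ in range(3)]
print(picks)  # prints ['10.0.0.12', '10.0.0.13', '10.0.0.12']

# Both containers are replaced; callers are unaffected by the new address.
balancer.update(["10.0.0.47"])
print(balancer.pick())  # prints 10.0.0.47
```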

Container networking tools

The following are container networking tools available for network engineers to use.

Cilium

Cilium is an eBPF-based CNI plugin for Kubernetes, built in 2015 by Isovalent, which is now part of Cisco. It is a part of the CNCF landscape and has an open source version and a paid subscription. It has many different use cases, including the following:

- Works as a service mesh.

- Provides load balancing between services, including Express Data Path-based load balancing for low latency.

- Provides encryption.

- Provides a Layer 3 network that is also Layer 7 protocol-aware and enforces network policies on Layers 3, 4 and 7.

- Replaces kube-proxy.

- Manages bandwidth.

Calico

Calico is a Kubernetes networking and security tool that supports multiple network models. Due to its Layer 3 networking approach, Calico is highly scalable in large distributed systems where Layer 2 broadcast traffic is unmanageable. It also uses eBPF to reduce overhead and increase throughput for faster networking.

Calico's network policy can support other container orchestrators, such as OpenShift. For security, Calico supports IPsec and WireGuard protocols for data encryption while in transit.

Flannel

Flannel is a lightweight Kubernetes overlay network tool that requires only minimal setup and has low operational complexity. Designed with simplicity in mind, its basic networking features are an ideal starting point for small to medium-sized deployments. However, it lacks advanced features necessary for large-scale environments, such as network policies and encryption.

As their needs evolve, organizations might later scale into more feature-rich options.

Istio

Istio is an open source service mesh for managing service-to-service communications in microservices. Istio focuses on traffic management, security and observability. Features include the following:

- Automatic load balancing.

- Traffic management.

- A pluggable policy layer.

- Comprehensive observability and monitoring with minimal or no changes to the service code.

Istio is ideal for complex architectures and works in tandem with CNI plugins for a more comprehensive networking strategy.

Container networking performance

Monitoring traffic is critical to the reliability and performance of container networks. Visibility is necessary to identify bottlenecks and potential network failures that might affect production. Tools like Prometheus and Grafana can monitor traffic and track latency and bandwidth usage.

To ensure quality of service, apply policies that prioritize critical services over less important traffic. With efficient resource allocation, teams can avoid performance degradation.

Wisdom Ekpotu is a DevOps engineer and technical writer focused on building infrastructure with cloud-native technologies.