Assessing performance bottlenecks in virtualized networking

Virtual, or soft, switches can add measurable overhead to network-intensive workloads, but PCI-based techniques offer one way to reduce that overhead.

Spectre and Meltdown exposed CPU vulnerabilities whose full effects are still being assessed, but it's already clear that the attacks, and the mitigations for them, affect network capacity planning. While administrators look for ways to offset that effect, one specification -- single-root I/O virtualization, or SR-IOV -- may be worth considering.

SR-IOV is a peripheral component interconnect (PCI) standard that has important performance implications for virtualized networking. However, before we get too deep into why this is the case, it is worth understanding the problem SR-IOV addresses.

Virtualization abstracts software from hardware. Given a few assumptions about the host, virtualized applications -- or containers, for that matter -- should behave identically on desktop-class hardware, on a platform-as-a-service provider or anywhere in between. This enables application vendors to standardize on a handful of operating system images. The drawback is that hypervisor functions and other applications generate measurable overhead compared with the same workloads running on bare-metal hardware.

For most workloads, most of the time, this is not a problem. However, there are bottlenecks unique to public and private clouds that do not really crop up in bare-metal deployments. One example is how the host figures out which guest should receive a packet.

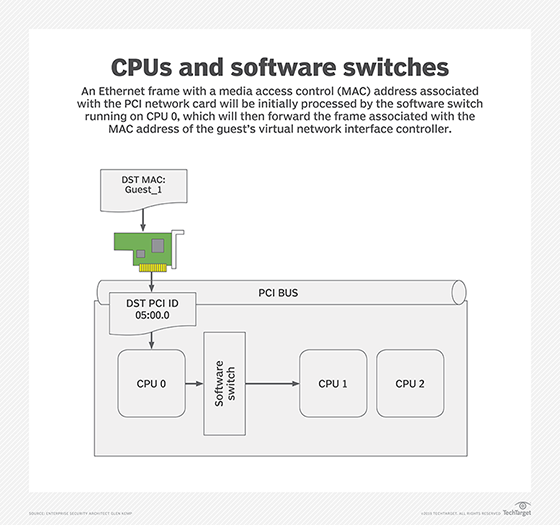

Whenever a physical host receives an Ethernet frame, it passes the data across the PCI bus to the host CPU. In a virtualized networking environment, the CPU then emulates an Ethernet switch to direct the frame to the appropriate guest. This soft switch literally takes a CPU core away from useful work, such as servicing a guest.

The relative effect of using the CPU to make a forwarding decision varies with the workload. A database transaction may require a lot of disk I/O and spawn a number of worker threads. As a result, the CPU cycles lost to software switching form a relatively small part of the overall transaction time.

In the above diagram, an Ethernet frame received by the physical PCI network card is first processed by the software switch running on CPU 0, which then forwards the frame to the guest whose virtual network interface controller (NIC) matches the frame's destination media access control (MAC) address.
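To make the per-frame work concrete, here is a minimal sketch of the forwarding decision a software switch makes, assuming a simple table that maps each guest vNIC's MAC address to a guest. The `Frame` and `SoftSwitch` names and the MAC addresses are hypothetical, and real virtual switches do considerably more (VLAN tagging, MAC learning, filtering); the point is only that every lookup runs on a host CPU core.

```python
from dataclasses import dataclass

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass(frozen=True)
class Frame:
    """A minimal stand-in for an Ethernet frame (hypothetical)."""
    dst_mac: str
    src_mac: str
    payload: bytes


class SoftSwitch:
    """Toy software switch: maps guest vNIC MAC addresses to guest names."""

    def __init__(self) -> None:
        self.mac_table: dict[str, str] = {}

    def attach(self, guest: str, vnic_mac: str) -> None:
        # Register a guest's virtual NIC on the switch.
        self.mac_table[vnic_mac.lower()] = guest

    def forward(self, frame: Frame) -> list[str]:
        # This lookup is the per-frame work the host CPU performs.
        dst = frame.dst_mac.lower()
        if dst == BROADCAST:
            # Broadcast frames go to every attached guest.
            return sorted(set(self.mac_table.values()))
        guest = self.mac_table.get(dst)
        # Unknown unicast is dropped in this sketch; a real switch might flood it.
        return [guest] if guest is not None else []


sw = SoftSwitch()
sw.attach("Guest_1", "52:54:00:00:00:01")
sw.attach("Guest_2", "52:54:00:00:00:02")
print(sw.forward(Frame("52:54:00:00:00:02", "00:11:22:33:44:55", b"data")))  # ['Guest_2']
```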

Network functions -- such as firewall filtering, content inspection and load balancing -- are particularly network I/O-intensive. In a virtualized networking environment, they may be at a particular disadvantage. These workloads can be summarized as, "Perform this RegEx and put the packet back on the wire," or, "Rewrite these fields in each packet."
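The "perform this RegEx and put the packet back on the wire" pattern can be sketched as a tiny per-packet filter. This is a toy example, assuming packets arrive as raw byte strings; the `BLOCK_PATTERN` rule and the function names are hypothetical. Note how little work each packet needs: one pattern search, then a forward-or-drop decision.

```python
import re

# Hypothetical rule: drop any packet whose payload names a blocked user agent.
BLOCK_PATTERN = re.compile(rb"user-agent:\s*badbot", re.IGNORECASE)


def should_forward(payload: bytes) -> bool:
    """Return True to put the packet back on the wire, False to drop it."""
    return BLOCK_PATTERN.search(payload) is None


def process(packets: list[bytes]) -> list[bytes]:
    # One regex search per packet, then forward or drop.
    return [p for p in packets if should_forward(p)]


packets = [
    b"GET / HTTP/1.1\r\nUser-Agent: BadBot\r\n\r\n",
    b"GET / HTTP/1.1\r\nUser-Agent: curl/8.0\r\n\r\n",
]
forwarded = process(packets)
print(len(forwarded))  # 1
```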

Performance for these transactions depends much more on the CPU; only a handful of cycles may be required to accept, process and forward each frame. Furthermore, this type of transaction depends less on disk I/O. As a result, interrupting a CPU core to perform a basic switching function has a greater effect on the total transaction time.
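A back-of-the-envelope calculation shows why the same switching cost matters more for network functions. The figures below are illustrative assumptions, not measurements: a fixed 2 µs of software-switching time per transaction, 5,000 µs of disk-bound work for a database transaction and 3 µs of packet processing for a network function.

```python
def switching_share(switch_us: float, work_us: float) -> float:
    """Fraction of total transaction time spent in the software switch."""
    return switch_us / (switch_us + work_us)


# Illustrative, assumed timings in microseconds (not measured values).
SWITCH_US = 2.0
DATABASE_WORK_US = 5000.0
NETWORK_FUNCTION_WORK_US = 3.0

print(f"database:         {switching_share(SWITCH_US, DATABASE_WORK_US):.2%}")          # 0.04%
print(f"network function: {switching_share(SWITCH_US, NETWORK_FUNCTION_WORK_US):.2%}")  # 40.00%
```

Under these assumptions, the identical switching step is a rounding error for the database transaction but 40% of the network-function transaction.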

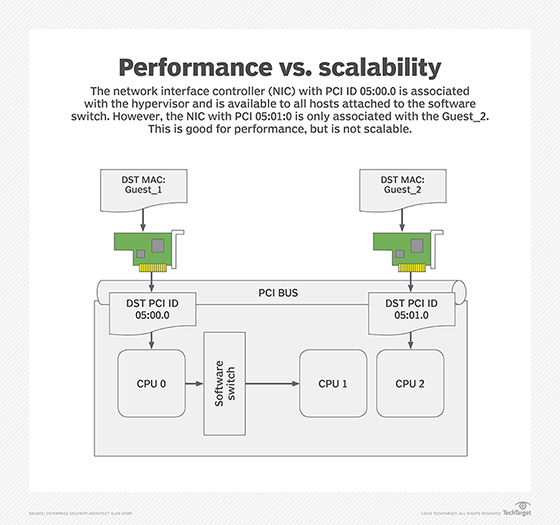

One way to recover that lost capacity is DirectPath I/O, VMware's term for PCI passthrough, which attaches a physical NIC directly to an individual guest over the PCI bus. This is fine for a lab environment and some specialized cases, but it is not particularly scalable or efficient. Installing dozens of physical network interfaces into each host negates a significant advantage of virtualization: an infrastructure that requires less cabling and fewer switches.

In the above example, the NIC with PCI address 05:00.0 is associated with the hypervisor and is available to all guests attached to the software switch. However, the NIC with PCI address 05:01.0 is associated only with Guest_2. This is good for performance, but it does not scale.
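The scalability problem can be put in numbers with a rough count of physical NIC ports, assuming each passthrough guest needs its own port (and therefore its own cable and upstream switch port) while the hypervisor keeps a fixed number of shared uplinks. The function name and the cluster sizes are hypothetical.

```python
def physical_ports(hosts: int, guests_per_host: int,
                   uplinks_per_host: int, passthrough: bool) -> int:
    """Rough count of physical NIC ports (and cables) across a cluster.

    Shared soft switch: each host needs only its hypervisor uplinks.
    Passthrough: each guest also needs a dedicated physical NIC port.
    """
    dedicated = guests_per_host if passthrough else 0
    return hosts * (uplinks_per_host + dedicated)


# Assumed cluster: 20 hosts, 30 guests per host, 2 shared uplinks per host.
print(physical_ports(20, 30, 2, passthrough=False))  # 40
print(physical_ports(20, 30, 2, passthrough=True))   # 640
```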

Editor's note: In the next installment of this series, Glen Kemp explains SR-IOV's benefits and shortfalls.