Encoding and decoding

What is encoding and decoding in a computer?

Encoding and decoding are used in many forms of communications, including computing, data communications, programming, digital electronics and human communications. These two processes involve changing the format of content for optimal transmission or storage.

In computers, encoding is the process of putting a sequence of characters (letters, numbers, punctuation, and certain symbols) into a specialized format for efficient transmission or storage. Decoding is the opposite process -- the conversion of an encoded format back into the original sequence of characters.

These terms should not be confused with encryption and decryption, which focus on hiding and securing data. (Data can be encrypted without changing its encoding, and data can be encoded without any attempt to conceal its content.)
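Base64 shows the distinction. The minimal Python sketch below uses the standard library's `base64` module to encode a short message, which is an arbitrary example. The output looks unreadable, but anyone can decode it without a key, so encoding alone provides no secrecy.

```python
import base64

message = b"Meet at noon"  # arbitrary example data

# Encoding: turn the bytes into Base64 text for safe transport or storage.
encoded = base64.b64encode(message)
print(encoded)  # b'TWVldCBhdCBub29u'

# Decoding needs no secret key: anyone holding the encoded text can reverse it.
decoded = base64.b64decode(encoded)
print(decoded.decode("ascii"))  # Meet at noon
```

Encryption, by contrast, would make recovering the original depend on knowing a secret key.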

What is encoding and decoding in data communications?

Encoding and decoding processes for data communications have interesting origins. For example, Morse code emerged in 1838 when Samuel Morse created standardized sequences of two signal durations, called dots and dashes, for use with the telegraph. Today's amateur radio operators still use Q-signals, which evolved from codes the British Postmaster General created in the early 1900s to ease communication among British ships and coast stations.
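The idea of mapping each letter to a fixed sequence of short and long signals can be sketched in a few lines of Python. This sketch assumes International Morse Code, the later standardized form, rather than Morse's original 1838 code. It covers letters only, and it uses a space between letters and " / " between words, which is a common written convention, not part of the signal itself.

```python
# International Morse Code for the letters A-Z (digits and punctuation omitted).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
# Reverse table used for decoding.
REVERSE = {code: letter for letter, code in MORSE.items()}


def encode(text: str) -> str:
    """Encode letters as dots and dashes; raises KeyError for unsupported characters."""
    words = text.upper().split()
    return " / ".join(" ".join(MORSE[ch] for ch in word) for word in words)


def decode(signal: str) -> str:
    """Decode dot-and-dash groups back into uppercase letters."""
    return " ".join(
        "".join(REVERSE[code] for code in word.split())
        for word in signal.split(" / ")
    )


print(encode("SOS"))                  # ... --- ...
print(decode(encode("hello world")))  # HELLO WORLD
```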

Manchester encoding was developed for storing data on magnetic drums of the Manchester Mark 1 computer, built in 1949. In that encoding model, each binary digit, or bit, is represented by a signal that is low then high, or high then low, with each half lasting an equal time, so every bit contains a transition in the middle. Manchester encoding, also known as phase encoding, is used in consumer infrared protocols, radio frequency identification (RFID) and near-field communication (NFC).
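The following Python sketch shows how bits map to half-bit signal levels. It assumes the IEEE 802.3 (Ethernet) convention, where a 1 is sent low then high and a 0 high then low. The opposite convention is also in use, so a real link must agree on which one applies. Levels are modeled as 0 (low) and 1 (high).

```python
def manchester_encode(bits):
    """Map each bit to two half-bit levels (IEEE 802.3 convention)."""
    levels = []
    for bit in bits:
        # 1 -> low then high; 0 -> high then low.
        levels.extend((0, 1) if bit else (1, 0))
    return levels


def manchester_decode(levels):
    """Recover bits from pairs of half-bit levels."""
    if len(levels) % 2:
        raise ValueError("expected an even number of half-bit levels")
    bits = []
    for first, second in zip(levels[0::2], levels[1::2]):
        if (first, second) == (0, 1):
            bits.append(1)
        elif (first, second) == (1, 0):
            bits.append(0)
        else:
            # No transition in the middle of the bit: not valid Manchester code.
            raise ValueError(f"invalid half-bit pair: {(first, second)}")
    return bits


data = [1, 0, 1, 1, 0]
signal = manchester_encode(data)
print(signal)  # [0, 1, 1, 0, 0, 1, 0, 1, 1, 0]
```

Because every bit has a transition in its middle, the receiver can recover the sender's timing from the signal itself. A pair with no transition marks a transmission error.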

What is encoding and decoding in programming?

Web access relies on encoding. A Uniform Resource Locator (URL), the address of a webpage, can contain only a limited set of characters drawn from the American Standard Code for Information Interchange (ASCII), a character code long used for text in computing.

In ASCII, each character (an uppercase or lowercase letter, a digit, a punctuation mark or another common symbol) is represented by a 7-bit binary number. However, URLs cannot contain spaces, and they often need to carry characters that aren't in the ASCII character set. URL encoding, also called percent encoding, solves this by converting spaces to %20 (or to a + sign in HTML form data) and converting non-ASCII characters into sequences of valid ASCII characters.
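The Python sketch below uses the standard library's `urllib.parse` functions to show both ideas. It prints the 7-bit ASCII code for one character, then percent-encodes a sample string (an arbitrary example). Python's `quote` encodes non-ASCII characters as their UTF-8 bytes by default, which is an assumption about the character encoding both ends agree on.

```python
from urllib.parse import quote, quote_plus, unquote, unquote_plus

# ASCII assigns each character a 7-bit number: "A" is 65, or 1000001 in binary.
print(ord("A"), format(ord("A"), "07b"))  # 65 1000001

text = "café menu"

# Percent encoding for a URL path: the space becomes %20, and the
# non-ASCII "é" becomes its two UTF-8 bytes, written as %C3%A9.
path = quote(text)
print(path)  # caf%C3%A9%20menu

# HTML form data conventionally encodes spaces as + instead.
form = quote_plus(text)
print(form)  # caf%C3%A9+menu

# Decoding reverses each form back to the original text.
print(unquote(path), unquote_plus(form))  # café menu café menu
```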

Other commonly used codes in programming include BinHex, the Base64 and quoted-printable encodings defined by Multipurpose Internet Mail Extensions (MIME), Unicode and Uuencode.
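Two of these can be tried with Python's standard `binascii` module. The sketch below uuencodes a short byte string and also applies quoted-printable, one of the MIME transfer encodings. The sample text is arbitrary. Note that `b2a_uu` handles a single line of at most 45 bytes, so longer data would need to be split into chunks first.

```python
import binascii

data = "Café".encode("utf-8")  # the bytes b'Caf\xc3\xa9'

# Uuencode: one encoded line, prefixed with a character that records its length.
uu_line = binascii.b2a_uu(data)
print(binascii.a2b_uu(uu_line) == data)  # True

# Quoted-printable: printable ASCII passes through unchanged, and other bytes
# are written as "=" followed by two hexadecimal digits.
qp = binascii.b2a_qp(data)
print(qp)  # b'Caf=C3=A9'
print(binascii.a2b_qp(qp) == data)  # True
```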

The following are some of the ways encoding and decoding are used in specific programming languages.

In Java

In Java, encoding and decoding represent data in a different format so it can be transferred efficiently over a network or the web. An encoder converts data into a web-safe representation. Once the data is received, a decoder converts that representation back into its original format. The Java standard library includes classes for this, such as java.util.Base64, java.net.URLEncoder and java.net.URLDecoder.

In Python

In the Python programming language, encoding converts a Unicode string (a str object) into a sequence of bytes (a bytes object), typically with the str.encode() method. This commonly happens before you send text over a network or save it to a disk file. Decoding, typically with the bytes.decode() method, turns a sequence of bytes back into a Unicode string. This happens when you read bytes from a disk file or receive them from the network.
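A short Python sketch of this round trip is shown below, using UTF-8 as an assumed encoding and an arbitrary sample string. It also shows why the sender and receiver must agree on the encoding. Decoding the same bytes as Latin-1 succeeds without an error, but it produces the wrong text.

```python
text = "naïve café"

# Encoding: str -> bytes. Each accented letter becomes two bytes in UTF-8.
data = text.encode("utf-8")
print(data)                  # b'na\xc3\xafve caf\xc3\xa9'
print(len(text), len(data))  # 10 12

# Decoding: bytes -> str, using the same encoding restores the original text.
print(data.decode("utf-8") == text)  # True

# Decoding with a different encoding yields garbled text instead of an error.
print(data.decode("latin-1"))  # naÃ¯ve cafÃ©
```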

In Swift

In the Apple Swift programming language, encoding and decoding typically refer to serializing object data to, and deserializing it from, a format such as JavaScript Object Notation (JSON). In this case, encoding means serialization, while decoding means deserialization. Serializing data converts it into an easily transportable format, and deserializing converts it back into its original form after transport. Swift types usually support this by conforming to the Codable protocol and being passed to JSONEncoder or JSONDecoder. Because JSON is a widely supported standard format, this approach enables interoperability between different programming languages and platforms.
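To keep all examples in one language, the sketch below shows the same serialize-and-deserialize round trip with Python's `json` module and a dataclass instead of Swift's Codable. The `Book` type and its fields are hypothetical. Any language with a JSON library could read the intermediate text.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Book:  # hypothetical example type
    title: str
    year: int


book = Book(title="Moby-Dick", year=1851)

# Encoding (serialization): object -> JSON text.
payload = json.dumps(asdict(book))
print(payload)  # {"title": "Moby-Dick", "year": 1851}

# Decoding (deserialization): JSON text -> a new object holding the same data.
restored = Book(**json.loads(payload))
print(restored == book)  # True
```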

What is encoding and decoding in digital electronics?

In electronics, the terms encoding and decoding refer to analog-to-digital conversion and digital-to-analog conversion. These terms can apply to any form of data, including text, images, audio, video, multimedia and software, and to signals in sensors, telemetry and control systems.
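Analog-to-digital conversion can be modeled in software as quantization. In the Python sketch below, each sampled voltage is mapped to the nearest of a fixed number of integer codes, and then mapped back. The 8-bit resolution, the -1 V to +1 V input range, the eight-point sine wave and the `adc`/`dac` function names are all illustrative assumptions, not properties of any particular converter.

```python
import math

BITS = 8            # assumed converter resolution
LEVELS = 2 ** BITS  # 256 possible integer codes


def adc(voltage, v_min=-1.0, v_max=1.0):
    """Encode: clamp an analog value to the range and round it to the nearest code."""
    clamped = min(max(voltage, v_min), v_max)
    return round((clamped - v_min) / (v_max - v_min) * (LEVELS - 1))


def dac(code, v_min=-1.0, v_max=1.0):
    """Decode: map an integer code back to an analog value."""
    return v_min + code / (LEVELS - 1) * (v_max - v_min)


# Sample one cycle of a sine wave at eight evenly spaced points.
samples = [math.sin(2 * math.pi * n / 8) for n in range(8)]
codes = [adc(v) for v in samples]
restored = [dac(c) for c in codes]
print(codes)  # [128, 218, 255, 218, 128, 37, 0, 37]

# The restored values differ from the originals by at most half a step.
step = 2.0 / (LEVELS - 1)
print(all(abs(a - b) <= step / 2 + 1e-12 for a, b in zip(samples, restored)))  # True
```

Adding bits makes each step smaller, so the decoded signal tracks the original more closely, at the cost of more data per sample.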

What is encoding and decoding in human communication?

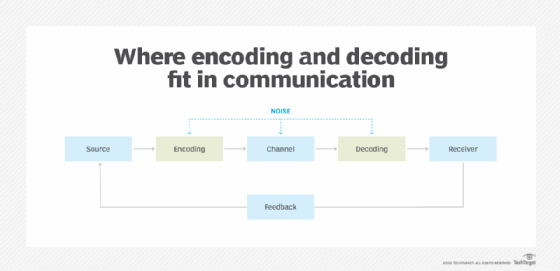

People rarely think of it as encoding or decoding, but human communication begins when a sender formulates (encodes) a message, choosing both what to convey and which communication channel to use. People do this every day with little thought.

The receiver must make sense of (decode) the message, deducing the meaning of its words and phrases to interpret it correctly. The receiver can then provide feedback to the sender.

Both the sender and the receiver in any communication process must deal with noise, meaning anything that disrupts, distorts or delays a message. Noise can include physiological noise, technical problems, or semantic, psychological and cultural issues that get in the way of communication.

These processes occur almost instantly in each of the following three models of communication:

- Transmission model. This model of communication is a linear process where a sender transmits a message to a receiver.

- Interaction model. In this model, participants take turns as senders and receivers.

- Transaction model. Here, communicators generate social realities within cultural, relational and social contexts. They communicate to create a relationship, engage with communities and form intercultural alliances. In this model, participants are labeled as communicators, not senders and receivers.

Decoding messages in your native tongue feels effortless. When the language is unfamiliar, however, the receiver may need a translator or tools like Google Translate for decoding the message.

Beyond the basics of encoding and decoding, machine translation capabilities have made significant progress of late.