How to deploy Kubernetes on VMware with vSphere Tanzu

Instead of running Kubernetes on your regular platform, it might be time to give VMware vSphere with Tanzu a try. Follow this tutorial to get started.

VMware vSphere with Tanzu can help organizations that are already working with VMware to centralize their Kubernetes cluster management and provide IT teams with an alternative -- but still familiar -- method to work with clusters.

Although it's known as a hypervisor to run VMs, vSphere can also work as a Kubernetes cluster to run container workloads. Let's look at what vSphere with Tanzu offers and how to get it up and running.

How vSphere with Tanzu works as a Kubernetes cluster

VMware vSphere with Tanzu consists of two main features: It enables IT admins to run container workloads directly on the hypervisor and to deploy Kubernetes clusters in VMs. In effect, vSphere itself works as a Kubernetes cluster that can also deploy other Kubernetes clusters.

Those familiar with Kubernetes know that a deployment consists of control plane nodes responsible for management and worker nodes where the containers run. To set up vSphere with Tanzu, three control plane virtual appliances are deployed in a regular vSphere cluster, which is then called a supervisor cluster.

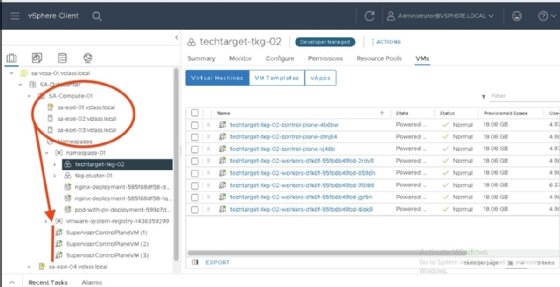

Figure 1 displays a vSphere cluster named SA-Compute-01 with its three supervisor control plane VMs. These three VMs form the cluster's control plane nodes, also known as master nodes.

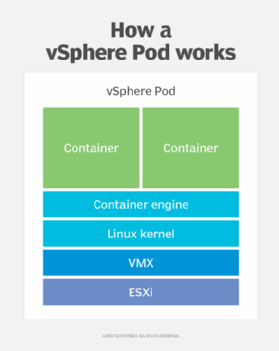

The ESXi hosts in the cluster are the worker nodes, so there is no need to deploy other physical nodes or VMs to run container workloads. The containers run directly on the hypervisor -- or technically, inside a small dedicated VM with a customized, trimmed-down Photon OS Linux distribution that contains the container engine to run the containers. As usual with Kubernetes, containers run in pods; in vSphere with Tanzu, these are called vSphere Pods.

Some might say using vSphere Pods adds extra overhead and is the same type of deployment as when Kubernetes is installed in VMs. But there is a difference: The Linux kernel is trimmed down and loaded directly into the virtual machine executable (VMX) process without having to go through the same boot cycle as a regular VM. Therefore, containers spin up just as fast with vSphere Pods as on any other Kubernetes worker nodes.

In a Kubernetes cluster, each worker node runs a process named kubelet that follows the scheduler running in the control plane nodes to pick up work to do. In vSphere with Tanzu, each ESXi host runs a process named spherelet that does the same thing: If the supervisor cluster control VMs schedule a container to run on a host, the spherelet downloads the container image and launches it in a vSphere Pod.
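The division of labor between the scheduler and the per-host agent can be illustrated with a toy model. This is only a sketch of the pattern, not VMware or Kubernetes code: the `Pod`, `NodeAgent` and `schedule` names, the host names and the least-loaded placement rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    image: str
    node: Optional[str] = None  # filled in by the scheduler


@dataclass
class NodeAgent:
    """Toy stand-in for a kubelet or spherelet on one host (hypothetical)."""
    host: str
    running: List[str] = field(default_factory=list)

    def sync(self, pods: List[Pod]) -> None:
        # Pick up only the pods that the control plane bound to this host.
        for pod in pods:
            if pod.node == self.host and pod.name not in self.running:
                # A real agent would download pod.image and start it here.
                self.running.append(pod.name)


def schedule(pods: List[Pod], agents: List[NodeAgent]) -> None:
    """Toy scheduler: bind each unbound pod to the least-loaded host."""
    load = {agent.host: len(agent.running) for agent in agents}
    for pod in pods:
        if pod.node is None:
            pod.node = min(load, key=load.get)
            load[pod.node] += 1


# Hypothetical hosts and workloads.
agents = [NodeAgent("esxi-01"), NodeAgent("esxi-02")]
pods = [Pod("web-1", "nginx"), Pod("web-2", "nginx"), Pod("web-3", "nginx")]

schedule(pods, agents)       # control plane decides placement
for agent in agents:
    agent.sync(pods)         # each host picks up its own work
    print(agent.host, agent.running)
```

The point of the model is that the control plane only records a placement decision; the agent on each host is what notices the decision and starts the workload.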

How to set up vSphere with Tanzu

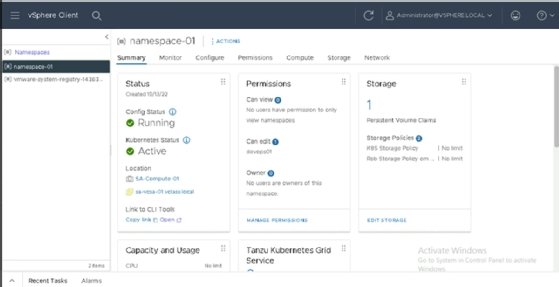

The vSphere with Tanzu setup starts with the deployment of the three control plane appliances. Next, IT admins create an object of the namespace type in the vCenter inventory. This is similar to a Kubernetes namespace, which sets boundaries for where developers and IT admins using the cluster can run their workloads. Figure 3 displays a namespace configuration panel with a storage policy that dictates where container storage can go and permissions that define who can use the namespace.

With this setup, IT admins can run containerized workloads the same way they run VMs normally. The vSphere Pods show up in the vCenter server inventory. The only real difference is that they are not controlled from the vSphere Client, but instead through kubectl commands like any other Kubernetes cluster. Developers can work with the cluster in the manner they would for other Kubernetes clusters.
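As a minimal sketch of what such a workload definition might look like, the following script builds a standard Kubernetes Pod manifest and prints it as JSON, which kubectl accepts alongside YAML. The pod name, the `demo-namespace` namespace and the image tag are placeholders, not values from this tutorial.

```python
import json


def pod_manifest(name: str, namespace: str, image: str) -> dict:
    """Build a minimal single-container Pod manifest (core v1 API)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace, "labels": {"app": name}},
        "spec": {"containers": [{"name": name, "image": image}]},
    }


# Placeholder values; substitute a namespace created in vCenter.
manifest = pod_manifest("web", "demo-namespace", "nginx:1.25")
print(json.dumps(manifest, indent=2))
```

Saving the output to a file such as `pod.json` and running `kubectl apply -f pod.json`, after authenticating to the supervisor cluster, should create the pod in the given namespace, assuming the user has permission on it.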

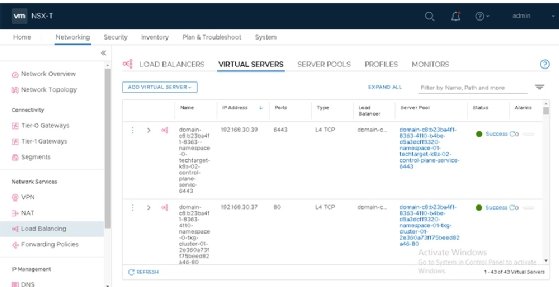

For network virtualization, vSphere Pods require VMware NSX because their virtual network interface cards connect to overlay segments that take care of communication between pods, both within a host and across physically separate vSphere hosts. This functionality is the same as in regular Kubernetes clusters, where, for example, admins might use Calico or Antrea for that purpose.

NSX can also deploy load balancers automatically as a service in a deployment, as seen in Figure 4. The image displays an automatically deployed load balancer virtual server in the NSX user interface. NSX admins can see this virtual server, but it is created and controlled through YAML files processed with the kubectl command rather than from the NSX interface.
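A request for such a load balancer is typically expressed as a standard Kubernetes Service of type `LoadBalancer`. The sketch below generates one as JSON; the service name, namespace, `app` label and port numbers are assumed values for illustration.

```python
import json


def load_balancer_service(name: str, namespace: str, app_label: str,
                          port: int, target_port: int) -> dict:
    """Build a Service manifest that asks the platform for a load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",
            # Traffic is forwarded to pods carrying this label.
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


# Placeholder values, not taken from Figure 4.
service = load_balancer_service("web-lb", "demo-namespace", "web", 80, 8080)
print(json.dumps(service, indent=2))
```

Applying the resulting file with `kubectl apply -f` is what would trigger the platform to provision the virtual server; nothing needs to be configured by hand in the NSX interface.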

VMware vSphere with Tanzu can also deploy Kubernetes clusters running in VMs, which do not use vSphere Pods. This mode still relies on the three supervisor control plane VMs. Through these machines, Linux VMs are deployed on the ESXi hosts. Those VMs then run the Kubernetes control plane and worker nodes. This feature is named Tanzu Kubernetes Grid (TKG).

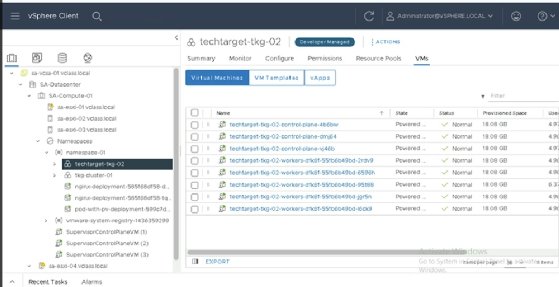

Figure 5 displays a TKG cluster named techtarget-tkg-02 with three control plane VMs that form the control plane nodes and five worker VMs. These machines run a native Kubernetes cluster without any modifications from VMware. This enables complete root-level access to the cluster and makes it possible to deploy any service type into Kubernetes.
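A cluster like this one is requested declaratively from the supervisor cluster. The sketch below builds such a request, assuming the `run.tanzu.vmware.com/v1alpha1` `TanzuKubernetesCluster` schema; later releases changed the layout, so the field names should be checked against the documentation for the installed version. The namespace, VM class, storage class and Kubernetes version are hypothetical values.

```python
import json


def tkg_cluster(name: str, namespace: str, control_plane: int, workers: int,
                vm_class: str, storage_class: str, version: str) -> dict:
    """Build a TanzuKubernetesCluster manifest (assumed v1alpha1 layout)."""
    def node_pool(count: int) -> dict:
        return {"count": count, "class": vm_class, "storageClass": storage_class}

    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha1",
        "kind": "TanzuKubernetesCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "distribution": {"version": version},
            "topology": {
                "controlPlane": node_pool(control_plane),
                "workers": node_pool(workers),
            },
        },
    }


# Node counts match Figure 5; all other values are placeholders.
cluster = tkg_cluster(
    name="techtarget-tkg-02",
    namespace="demo-namespace",
    control_plane=3,
    workers=5,
    vm_class="best-effort-small",
    storage_class="demo-storage-policy",
    version="v1.21",
)
print(json.dumps(cluster, indent=2))
```

Changing the worker count in the manifest and applying it again is the usual way to scale a cluster defined like this.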

The TKG cluster doesn't require NSX for network virtualization, but it does require a deployed load balancer before vSphere with Tanzu can run the Kubernetes clusters in VMs. At time of publication, the two supported load balancers are HAProxy and VMware NSX Advanced Load Balancer. The latter option doesn't mean a full NSX deployment is necessary; this load balancer is an evolution of the Avi Networks load balancer that VMware acquired several years ago and is a standalone product. Customers who want to deploy the HAProxy load balancer can download the virtual appliance created by VMware from GitHub to deploy on an ESXi host.

Benefits of vSphere with Tanzu

With vSphere with Tanzu, the VMware software stack can manage Kubernetes cluster deployments, and the vSphere Client can provide updates. This enables centralized Kubernetes cluster management.

It's also easy to deploy and destroy clusters as needed, making it simple and straightforward to provide clusters for testing and development. In addition, developers can work with clusters through the kubectl commands and YAML files as they would in other Kubernetes environments.