Manage application storage with Kubernetes and CSI drivers

Container Storage Interface drivers offer IT ops teams increased autonomy and flexibility in deploying and managing persistent storage on Kubernetes clusters.

Moving beyond dedicated workload infrastructures has many benefits, including modernized applications and faster development cycles, but can also add complexity. The Container Storage Interface offers IT teams much-needed flexibility in working with container environments.

Containers accelerate and streamline the development process, offering a lightweight approach to virtualizing resources and establishing stable performance environments. Kubernetes and similar container orchestrators can scale these deployments, load balance, and automate rollouts and rollbacks.

When managing container environments, DevOps and IT teams rely on Container Storage Interface (CSI) drivers for increased autonomy, flexibility and compatibility with a wide range of storage devices. Today, more than 60 CSI drivers are available for file, block and object storage, in both hardware and cloud formats. With a CSI driver, IT admins can deploy their preferred type of persistent storage independently of their chosen orchestrator. They also get that storage's I/O and related capabilities, such as snapshots and cloning.

Advanced persistent storage, Kubernetes and CSI

Kubernetes descends from Borg, an internal container orchestrator developed by Google engineers. That experience paved the way for Google to open source Kubernetes in 2014. This portable, extensible platform follows a client-server architecture in which a control plane manages a cluster of worker nodes that run containerized tasks. Kubernetes orchestrates workloads by placing containers into pods and running them on virtual or physical nodes.

For storage providers, early Kubernetes integration required considerable effort because the platform used in-tree volume plugins compiled directly into the Kubernetes binaries. This approach created obstacles. For example, adding support for a new storage system required checking code into the core Kubernetes repository, which increased integration complexity and limited the range of storage that could be supported.

Along with these practical hurdles, the in-tree approach tied bug fixes and workarounds to the Kubernetes release cycle. It also increased the risk that a flaw in vendor code could destabilize the Kubernetes platform itself.

Ultimately, integration complexity and the lack of uniform standards limited Kubernetes' storage feature set. Storage vendors needed to build plugins against a simple specification that wasn't tied to the Kubernetes lifecycle. As a result, the Kubernetes community introduced CSI, working with other container orchestrator projects. CSI lets vendors expose new storage systems without ever touching core Kubernetes code.

Assessing CSI driver implementations for Kubernetes

Today, storage providers write their Kubernetes storage plugins as CSI drivers. Because the specification is simple and independent of Kubernetes, they can update their drivers at any time rather than waiting for a Kubernetes release.

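The CSI specification organizes a driver's functionality into three services:

- the Identity service, which identifies the plugin and its capabilities;

- the Controller service, which handles cluster-level volume operations that aren't tied to a particular node, such as creating, deleting, attaching and snapshotting volumes; and

- the Node service, which handles operations on the node where a container uses the volume, such as mounting the volume into the container's file system.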
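The three services map to what the CSI specification calls the Identity, Controller and Node services. The following is a minimal Python model of that split, not the real gRPC API. Real drivers implement protobuf-generated interfaces, usually in Go. The snake_case method names stand in for a few representative RPCs (GetPluginInfo, GetPluginCapabilities, CreateVolume, DeleteVolume, NodePublishVolume, NodeUnpublishVolume). `InMemoryDriver` and the driver name `example.csi.vendor.com` are hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
import uuid


class IdentityService(ABC):
    """Identifies the plugin and what it supports (CSI Identity service)."""

    @abstractmethod
    def get_plugin_info(self) -> dict: ...

    @abstractmethod
    def get_plugin_capabilities(self) -> list: ...


class ControllerService(ABC):
    """Volume operations not tied to one node (CSI Controller service)."""

    @abstractmethod
    def create_volume(self, name: str, capacity_bytes: int) -> str: ...

    @abstractmethod
    def delete_volume(self, volume_id: str) -> None: ...


class NodeService(ABC):
    """Operations on the node where a container uses the volume (CSI Node service)."""

    @abstractmethod
    def node_publish_volume(self, volume_id: str, target_path: str) -> None: ...

    @abstractmethod
    def node_unpublish_volume(self, volume_id: str, target_path: str) -> None: ...


@dataclass
class InMemoryDriver(IdentityService, ControllerService, NodeService):
    """Hypothetical toy driver: volumes are dict entries, mounts are recorded paths."""

    name: str = "example.csi.vendor.com"  # hypothetical driver name
    volumes: dict = field(default_factory=dict)  # volume_id -> metadata
    mounts: dict = field(default_factory=dict)   # volume_id -> set of target paths

    def get_plugin_info(self) -> dict:
        return {"name": self.name, "vendor_version": "0.1.0"}

    def get_plugin_capabilities(self) -> list:
        # Advertise that this plugin also implements the Controller service
        return ["CONTROLLER_SERVICE"]

    def create_volume(self, name: str, capacity_bytes: int) -> str:
        # CSI expects CreateVolume to be idempotent: a retry with the same
        # name returns the existing volume instead of creating a second one.
        for volume_id, meta in self.volumes.items():
            if meta["name"] == name:
                return volume_id
        volume_id = str(uuid.uuid4())
        self.volumes[volume_id] = {"name": name, "capacity_bytes": capacity_bytes}
        return volume_id

    def delete_volume(self, volume_id: str) -> None:
        if self.mounts.get(volume_id):
            raise RuntimeError(f"volume {volume_id} is still published")
        self.volumes.pop(volume_id, None)  # deleting twice is a no-op

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        if volume_id not in self.volumes:
            raise KeyError(volume_id)
        # A real driver would mount the device at target_path here
        self.mounts.setdefault(volume_id, set()).add(target_path)

    def node_unpublish_volume(self, volume_id: str, target_path: str) -> None:
        paths = self.mounts.get(volume_id, set())
        paths.discard(target_path)
        if not paths:
            self.mounts.pop(volume_id, None)
```

Keeping the three interfaces separate mirrors the spec's design. A plugin that has no controller-side work could, in principle, implement only the Identity and Node services.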

CSI's primary role is storage abstraction. Administrators work with Kubernetes PersistentVolumeClaims (PVCs), PersistentVolumes (PVs) and storage classes, and the CSI driver translates those objects into operations on the underlying storage.

A PVC represents a user's storage request and defines a pod's specific storage requirements, such as capacity and access mode (for example, whether the volume can be shared across nodes). Every node runs an agent called the kubelet, which launches and maintains the pods scheduled on that node. The kubelet also calls the CSI driver's Node service to mount those pods' volumes.

Administrators define a storage class whose provisioner field names the CSI driver for a particular external storage system. When a PVC references that storage class, it triggers dynamic provisioning. The CSI driver creates a volume on the external storage, Kubernetes represents that volume as a new PV, and the PV is then bound to the PVC.
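The dynamic provisioning flow described above can be modeled in a few lines. This is a simplified sketch, not Kubernetes code. In a real cluster, the work is split between the external-provisioner sidecar and the PV controller, and dynamically provisioned PVs get generated names. The `pv-`/`pvc-` naming, the `provision_and_bind` function and the `CreateVolumeFn` callback shape are hypothetical stand-ins for the driver's CreateVolume call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Stand-in for a driver's CreateVolume RPC: (name, capacity_bytes, parameters) -> volume ID
CreateVolumeFn = Callable[[str, int, dict], str]


@dataclass
class StorageClass:
    name: str
    provisioner: str  # name of the CSI driver that provisions this class
    parameters: dict  # opaque, driver-specific settings passed through


@dataclass
class PersistentVolumeClaim:
    name: str
    storage_class_name: str
    requested_bytes: int
    volume_name: Optional[str] = None  # set once the claim is bound


@dataclass
class PersistentVolume:
    name: str
    driver: str
    volume_handle: str  # the ID the driver returned from CreateVolume
    capacity_bytes: int
    claim_name: Optional[str] = None


def provision_and_bind(
    pvc: PersistentVolumeClaim,
    classes: Dict[str, StorageClass],
    drivers: Dict[str, CreateVolumeFn],
) -> PersistentVolume:
    """Toy model of dynamic provisioning; names and flow are simplified."""
    if pvc.volume_name is not None:
        raise ValueError(f"claim {pvc.name} is already bound")
    sc = classes[pvc.storage_class_name]     # 1. look up the claim's storage class
    create_volume = drivers[sc.provisioner]  # 2. find the CSI driver it names
    handle = create_volume(f"pvc-{pvc.name}", pvc.requested_bytes, sc.parameters)
    pv = PersistentVolume(                   # 3. represent the new volume as a PV
        name=f"pv-{pvc.name}",
        driver=sc.provisioner,
        volume_handle=handle,
        capacity_bytes=pvc.requested_bytes,
    )
    pv.claim_name = pvc.name                 # 4. bind PV and PVC to each other
    pvc.volume_name = pv.name
    return pv
```

The key point the model captures is the indirection. The claim never names a driver directly; it names a storage class, and the storage class names the driver.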

The communication protocol between the orchestrator and the plugins for all of these processes is gRPC, an open source remote procedure call framework. The driver's Controller service handles low-level functions, such as provisioning storage on the defined hardware and creating volume snapshots.
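To make the gRPC side concrete, the following sketch lists the fully qualified methods (in the spec's `csi.v1` package) that an orchestrator calls to take a volume from creation to use in a container, and back again. It follows the CSI spec's rule that optional steps run only when the plugin advertises the matching capability. The spec defines the capability names used here. The function names are hypothetical, and the sketch leaves out other calls a real orchestrator makes, such as GetPluginInfo, Probe and the capability queries themselves.

```python
from typing import List, Set

# Each setup call and the call that undoes it, in CSI v1 gRPC method form
UNDO = {
    "/csi.v1.Controller/CreateVolume": "/csi.v1.Controller/DeleteVolume",
    "/csi.v1.Controller/ControllerPublishVolume": "/csi.v1.Controller/ControllerUnpublishVolume",
    "/csi.v1.Node/NodeStageVolume": "/csi.v1.Node/NodeUnstageVolume",
    "/csi.v1.Node/NodePublishVolume": "/csi.v1.Node/NodeUnpublishVolume",
}


def setup_calls(controller_caps: Set[str], node_caps: Set[str]) -> List[str]:
    """RPCs to provision a volume and make it usable in a container (simplified)."""
    calls = []
    if "CREATE_DELETE_VOLUME" in controller_caps:
        # Dynamic provisioning: create the volume on the storage system
        calls.append("/csi.v1.Controller/CreateVolume")
    if "PUBLISH_UNPUBLISH_VOLUME" in controller_caps:
        # Make the volume available to a node, e.g. attach a cloud disk to a VM
        calls.append("/csi.v1.Controller/ControllerPublishVolume")
    if "STAGE_UNSTAGE_VOLUME" in node_caps:
        # Prepare the volume once per node at a staging path
        calls.append("/csi.v1.Node/NodeStageVolume")
    # Always required: expose the volume at the container's target path
    calls.append("/csi.v1.Node/NodePublishVolume")
    return calls


def teardown_calls(setup: List[str]) -> List[str]:
    """Undo the setup calls in reverse order."""
    return [UNDO[method] for method in reversed(setup)]
```

For a typical cloud block-storage driver that advertises all three capabilities, `setup_calls` returns four methods: CreateVolume, ControllerPublishVolume, NodeStageVolume and NodePublishVolume. `teardown_calls` walks them back in reverse.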

How to deploy a CSI driver for Kubernetes

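Developers can deploy the controller plugin on any node in the cluster in one of two ways. The first is a Deployment, the Kubernetes workload object that manages replicated pods and supports rolling updates and rollbacks. The second is a StatefulSet, which gives each pod a stable identity. The node plugin, by contrast, typically runs on every node as a DaemonSet.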
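In practice, the controller side of a CSI driver runs as a Deployment (or StatefulSet), and the node side runs as a DaemonSet so that every node has a local copy. The following sketch builds bare `apps/v1` skeletons for both as Python dicts. These are deliberately incomplete. A real driver also needs RBAC, sidecars, privileged node containers and hostPath mounts. The image reference and object names are hypothetical.

```python
import json
from typing import List, Optional

DRIVER_IMAGE = "example.com/csi-driver:0.1.0"  # hypothetical image reference


def workload(kind: str, name: str, containers: List[dict], replicas: Optional[int] = None) -> dict:
    """Minimal apps/v1 workload skeleton (omits RBAC, hostPath mounts, sidecars)."""
    labels = {"app": name}
    spec = {
        "selector": {"matchLabels": labels},  # must match the pod template's labels
        "template": {
            "metadata": {"labels": labels},
            "spec": {"containers": containers},
        },
    }
    if replicas is not None:
        # Deployments and StatefulSets take a replica count; DaemonSets do not
        spec["replicas"] = replicas
    return {"apiVersion": "apps/v1", "kind": kind, "metadata": {"name": name}, "spec": spec}


driver_container = {"name": "csi-driver", "image": DRIVER_IMAGE}

# Controller plugin: runs somewhere in the cluster; one replica in this sketch
controller = workload("Deployment", "csi-controller", [driver_container], replicas=1)
# Node plugin: the DaemonSet schedules one pod on every node
node = workload("DaemonSet", "csi-node", [driver_container])

print(json.dumps([controller, node], indent=2))
```

The difference between the two objects is mostly the `kind` and the absence of `replicas` on the DaemonSet, whose pod count follows the number of nodes.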

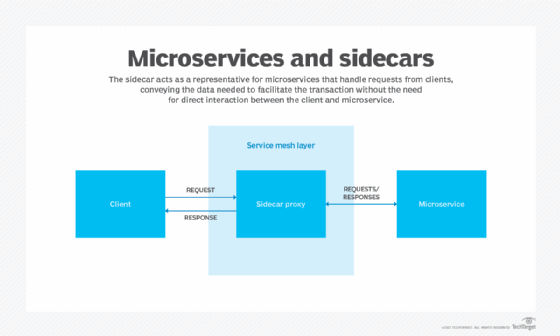

The controller plugin interacts with Kubernetes objects through sidecar containers. These sidecars watch the Kubernetes API for objects such as PVCs and call the driver's CSI Controller service. The Controller service in turn carries out operations through the storage system's external control plane. This automated exchange lets developers concentrate on programming rather than on storage complexity.
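The sidecar pattern usually comes down to two containers in one pod that share a Unix domain socket. The driver serves gRPC on the socket, and a sidecar such as the Kubernetes external-provisioner connects to it with its `--csi-address` flag. The following sketch builds such a pod spec as a Python dict. The driver's `--endpoint` flag name and image are assumptions that vary by vendor. The provisioner image tag is left as a placeholder to pin to a real release. RBAC for the sidecar's service account is omitted.

```python
import json

SOCKET_DIR = "/csi"
SOCKET_PATH = SOCKET_DIR + "/csi.sock"


def controller_pod_spec(driver_image: str, provisioner_image: str) -> dict:
    """Pod spec for a controller plugin plus one sidecar sharing a Unix socket."""

    def socket_mount() -> dict:
        return {"name": "socket-dir", "mountPath": SOCKET_DIR}

    return {
        "containers": [
            {
                # Vendor driver: serves the CSI Controller service over gRPC.
                # The --endpoint flag name is driver-specific (assumption).
                "name": "csi-driver",
                "image": driver_image,
                "args": [f"--endpoint=unix://{SOCKET_PATH}"],
                "volumeMounts": [socket_mount()],
            },
            {
                # external-provisioner sidecar: watches PVCs via the Kubernetes API
                # and calls CreateVolume/DeleteVolume on the driver over the socket.
                "name": "csi-provisioner",
                "image": provisioner_image,
                "args": [f"--csi-address={SOCKET_PATH}"],
                "volumeMounts": [socket_mount()],
            },
        ],
        # emptyDir lives as long as the pod, so both containers see the same socket file
        "volumes": [{"name": "socket-dir", "emptyDir": {}}],
    }


spec = controller_pod_spec(
    "example.com/csi-driver:0.1.0",                      # hypothetical driver image
    "registry.k8s.io/sig-storage/csi-provisioner:TAG",  # replace TAG with a released version
)
print(json.dumps(spec, indent=2))
```

Because the sidecar and driver share only a socket, the vendor writes no Kubernetes API code at all. The sidecar handles the Kubernetes side, and the driver handles the storage side.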

For IT teams, the Kubernetes CSI documentation recommends mechanisms for deploying containerized CSI drivers. However, keep in mind that driver installation differs by vendor, particularly for managed cloud services such as Amazon Elastic Kubernetes Service, Azure Kubernetes Service or Google Kubernetes Engine. Once a team has chosen an approach, it's crucial to thoroughly understand the makeup of the hosting environment.

For cloud deployments, developers can compare platforms based on which one best suits the tasks they want to accomplish. In general, though, the way development teams use CSI drivers is uniform across deployments. For example, they create a StorageClass inside the Kubernetes cluster that points to the CSI driver.
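As an illustration of that common step, the following sketch emits a StorageClass that points at a CSI driver, plus a PVC that requests storage from it. Both are wrapped in a `v1` List and serialized as JSON, which `kubectl apply -f` accepts. The driver name `example.csi.vendor.com` and the `type: ssd` parameter are hypothetical. Substitute the name your vendor's driver registers and the parameters its documentation lists.

```python
import json

DRIVER_NAME = "example.csi.vendor.com"  # hypothetical; use your vendor's driver name


def storage_class(name: str, parameters: dict) -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": DRIVER_NAME,  # points the class at the CSI driver
        "parameters": parameters,    # opaque, driver-specific key/value pairs
        "reclaimPolicy": "Delete",   # delete the backing volume when the PVC is deleted
        # Delay provisioning until a pod that uses the claim is scheduled
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def pvc(name: str, storage_class_name: str, size: str) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [storage_class("fast", {"type": "ssd"}), pvc("data", "fast", "10Gi")],
}
print(json.dumps(manifests, indent=2))
```

Saved to a file such as `storage.json`, the output could be applied with `kubectl apply -f storage.json`. Only `DRIVER_NAME` and the parameters should need to change from one cloud to another, which is the uniformity the paragraph above describes.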