Kubernetes networking 101: Best practices and challenges

Before jumping into the landscape of CNI plugins for Kubernetes, it's important to understand the basic elements of Kubernetes networking. Follow this guide to get started.

Networking plays a critical role in Kubernetes environments, connecting all containers and services. The network is central to workload access, enabling Kubernetes to seamlessly move container-based workloads between on-premises, public and private cloud infrastructures.

Before working with popular Container Network Interface (CNI) plugins such as Calico, Flannel and Cilium, it's important to get familiar with the basics of Kubernetes networking. Explore networking's role in Kubernetes deployments, best practices and potential challenges.

How Kubernetes networking works

Kubernetes is a container orchestration platform that manages scheduling, deployment, monitoring and ongoing operation of virtualized containers across computer clusters, on premises or in the cloud. Kubernetes' open source code has become the foundation for many container management platforms, such as Amazon Elastic Kubernetes Service, Red Hat OpenShift and SUSE Rancher.

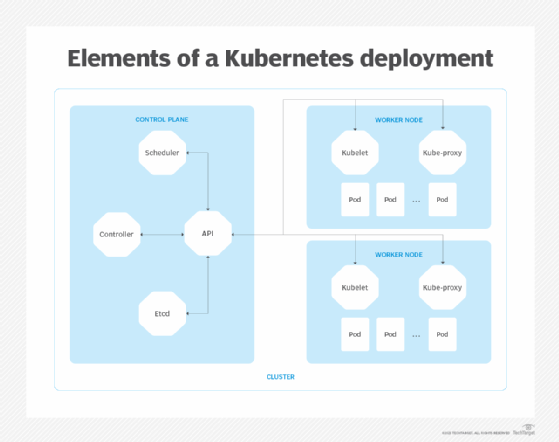

A Kubernetes deployment consists of the following major elements:

- Pod. A pod is a fundamental logical object in Kubernetes that organizes related containers into functional groups. Each pod has its own IP address and can contain one or more containers.

- Services. A Kubernetes service gives a group of pods a stable network identity and spreads traffic across them. Supporting components, including a proxy and load balancing, handle traffic management between pods.

- Worker node. A worker node is a server that hosts pods and runs node-level components such as kubelet, kube-proxy and a container runtime. A worker node can host one or more pods.

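- Control node. The control plane node, or primary node, is a server that manages all the worker nodes and pod deployments across a Kubernetes cluster. It runs cluster-wide components such as the API server, the scheduler and, typically, the etcd data store.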

The elements of a Kubernetes deployment depend on networking for control and communication. Kubernetes relies on software-defined functionality to configure and manage network communication across the cluster.

Kubernetes networking typically involves four main communication operations:

- Container-to-container. Communication between containers that run within the same pod is quick and efficient; all containers in a pod share the same network namespace, including resources such as IP addresses and ports.

- Pod-to-pod. This category covers communication between pods on the same worker node, or intra-node communication, as well as across different worker nodes, or inter-node communication. Kubernetes manages the details necessary to find pods and handle traffic flows between them.

- Pod-to-service. Kubernetes services track pods and their details, such as IP addresses, address changes, and creation or termination of containers and pods. This ensures an application only needs to know the IP address of the service and not the details of individual pods.

- External-to-service. Kubernetes rules and policies control inbound communication to the Kubernetes cluster, usually from the internet and secured with HTTPS.
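The pod-to-service operation above can be illustrated with a small model of how a service keeps track of the pods behind it. This is a simplified sketch, not Kubernetes code: the `Pod` class, the `endpoints` function, the labels and the IP addresses are all hypothetical, and a real cluster performs this matching inside the control plane.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list) -> list:
    """Return the IPs of ready pods whose labels match the selector."""
    return sorted(pod.ip for pod in pods if pod.ready and matches(selector, pod.labels))


pods = [
    Pod("web-1", "10.244.1.5", {"app": "web"}),
    Pod("web-2", "10.244.2.7", {"app": "web"}),
    Pod("db-1", "10.244.1.9", {"app": "db"}),
]
selector = {"app": "web"}  # hypothetical label carried by the web pods
before = endpoints(selector, pods)

# Simulate a pod being replaced: web-1 goes away and web-3 appears with a new IP.
pods = [p for p in pods if p.name != "web-1"] + [Pod("web-3", "10.244.3.4", {"app": "web"})]
after = endpoints(selector, pods)

print(before)
print(after)
```

The selector never changes even though the set of pod addresses behind it does, which is why a client only needs the address of the service.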

All these operations depend on three basic Kubernetes network requirements. First, pods must be able to communicate with one another without using network address translation (NAT). Second, nodes must be able to communicate with all pods without NAT. Finally, a pod's IP address must be the same address other pods and nodes refer to when communicating with that pod.
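One common way to satisfy these requirements is to give every node its own non-overlapping slice of a cluster-wide pod address range, so each pod IP is unique and can be routed to its node without translation. The sketch below assumes that layout; the `10.244.0.0/16` range, the `/24` per-node size and the node names are illustrative values, and individual network plugins may allocate addresses differently.

```python
import ipaddress

# Hypothetical values: a cluster-wide pod range split into one /24 per node.
cluster_cidr = ipaddress.ip_network("10.244.0.0/16")
node_names = ["node-a", "node-b", "node-c"]

# Hand each node the next /24 block from the cluster range.
node_subnets = dict(zip(node_names, cluster_cidr.subnets(new_prefix=24)))


def node_for(pod_ip: str):
    """Return the node whose pod subnet contains pod_ip, or None if no node owns it."""
    address = ipaddress.ip_address(pod_ip)
    for node, subnet in node_subnets.items():
        if address in subnet:
            return node
    return None


for node, subnet in node_subnets.items():
    print(node, subnet)

print(node_for("10.244.1.23"))
```

Because the subnets do not overlap, the address a pod sees for itself is the same address every other pod and node uses to reach it.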

Kubernetes networking services

A variety of Kubernetes networking services enable organizations to tailor a containerized application to suit business needs. For example, a business might want to make an application available to outside users or restrict access to a cluster.

There are four common Kubernetes networking services to help define and tailor workloads:

- ClusterIP. This service creates a virtual IP address in a cluster that enables communication among different services within the cluster. ClusterIP is often used to restrict resource access to a specific application, such as a database.

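- ExternalName. This service maps a service name to an external DNS name rather than to a ClusterIP and a set of pods, so workloads can reach a resource outside the cluster through an ordinary in-cluster service name.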

- LoadBalancer. This service enables external load balancers to route inbound traffic and is commonly used to externally access workloads with front ends, letting outside users interact with the workload. When a LoadBalancer service is created, Kubernetes by default also allocates the NodePort and ClusterIP that the external load balancer routes to.

- NodePort. Building on ClusterIP, the NodePort service opens the same port on every node and forwards traffic arriving there to the port of the pod running the workload. However, because it exposes a port on every node, it is a less secure method and is often passed over in favor of ClusterIP for internal traffic or LoadBalancer for external traffic.
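To make the differences concrete, the sketch below builds one manifest for each of the four service types as plain Python dictionaries and prints them as a single JSON list. The `service` helper function is only a convenience for this example, and the service names, the `app: web` label, the port numbers and `db.example.com` are hypothetical. The field names (`type`, `selector`, `ports`, `nodePort`, `externalName`) come from the Kubernetes Service API.

```python
import json


def service(name: str, service_type: str, **spec) -> dict:
    """Build a Service manifest as a plain dict; extra keyword arguments go into spec."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, **spec},
    }


selector = {"app": "web"}  # hypothetical pod label

cluster_ip = service(
    "web-internal", "ClusterIP",
    selector=selector,
    ports=[{"port": 80, "targetPort": 8080}],
)
node_port = service(
    "web-nodeport", "NodePort",
    selector=selector,
    ports=[{"port": 80, "targetPort": 8080, "nodePort": 30080}],
)
load_balancer = service(
    "web-public", "LoadBalancer",
    selector=selector,
    ports=[{"port": 443, "targetPort": 8443}],
)
# ExternalName has no selector or ports; it only points at a DNS name.
external_name = service(
    "orders-db", "ExternalName",
    externalName="db.example.com",
)

manifests = [cluster_ip, node_port, load_balancer, external_name]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Only the `type` field and a handful of type-specific fields differ between the four manifests; the selector that ties a service to its pods is the same wherever one is used.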

Network traffic policies in Kubernetes

Kubernetes networking also supports the use of network policies, which control the flow of traffic for cluster applications and detail how pods and services can communicate across the network.

Three kinds of identifier define which peers a pod can communicate with: other allowed pods, allowed namespaces, and allowed IP address blocks expressed as Classless Inter-Domain Routing (CIDR) ranges. Network policies only take effect when the cluster uses a network plugin designed to support them.

Network policies that select pods and namespaces restrict traffic so that only approved connections are allowed. This is an important function because, by default, pods are not isolated and accept traffic from any source. Once a policy selects a pod, the pod becomes isolated and only handles traffic the policy allows, rejecting all other traffic.
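A policy that uses all three kinds of source, pods, namespaces and IP blocks, might look like the following sketch. It is written as a Python dictionary and printed as JSON. The policy name, the `prod` namespace, the labels, the CIDR range and the port are hypothetical; the field names follow the `networking.k8s.io/v1` NetworkPolicy API.

```python
import json

# Hypothetical labels, namespace and CIDR chosen only for illustration.
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-selected", "namespace": "prod"},
    "spec": {
        # The pods this policy applies to, and therefore isolates.
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                # Traffic from any one of these sources is allowed.
                "from": [
                    {"podSelector": {"matchLabels": {"app": "web"}}},
                    {"namespaceSelector": {"matchLabels": {"team": "analytics"}}},
                    {"ipBlock": {"cidr": "192.168.10.0/24"}},
                ],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}

print(json.dumps(policy, indent=2))
```

Once this policy exists, pods labeled `app: db` in that namespace accept inbound connections on the listed port only from the three listed sources.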

Kubernetes network policies are additive and do not conflict. If multiple policies apply to a pod, the traffic allowed to or from that pod is the union of what each of those policies allows. Because the result is a union, there is no order or sequence in which policies are applied, and the order of evaluation does not affect the outcome.
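The way multiple policies combine can be modeled in a few lines. In the Kubernetes model, the traffic allowed for a pod is the union of what every applicable policy allows, and a pod that no policy selects is not isolated at all. The toy evaluator below assumes a drastically simplified policy shape; the `Policy` class and `ingress_allowed` function are hypothetical and ignore ports, namespaces and egress.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Toy ingress policy: selects pods by one label, allows sources by 'app' label."""
    name: str
    selects: tuple           # (key, value) label pair the target pod must carry
    allowed_apps: frozenset  # source 'app' labels this policy lets in


def ingress_allowed(target_labels: dict, source_app: str, policies: list) -> bool:
    applicable = [p for p in policies if target_labels.get(p.selects[0]) == p.selects[1]]
    if not applicable:
        # No policy selects the pod, so it is not isolated and accepts everything.
        return True
    # Union of what every applicable policy allows; evaluation order is irrelevant.
    allowed = frozenset().union(*(p.allowed_apps for p in applicable))
    return source_app in allowed


policies = [
    Policy("allow-web", ("app", "db"), frozenset({"web"})),
    Policy("allow-batch", ("app", "db"), frozenset({"batch"})),
]
db_pod = {"app": "db"}

print(ingress_allowed(db_pod, "web", policies))
print(ingress_allowed(db_pod, "batch", policies))
print(ingress_allowed(db_pod, "cache", policies))
print(ingress_allowed({"app": "cache"}, "web", policies))
```

Reversing the `policies` list gives the same answers, which reflects the absence of any ordering between policies.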

Potential Kubernetes networking challenges

Although Kubernetes is the de facto standard for container orchestration and automation, its networking framework poses potential challenges for adopters.

Frequent changes

Traditional networks tend to embrace static IP addressing. For example, once an instance such as a VM or client computer is configured on a network, it usually runs for long periods and ideally never needs to change.

Containers are different. They are often short-lived and can move between nodes, so they require dynamic network addressing. This can raise issues related to container addressing and management, and changing network policies dynamically can be problematic for Kubernetes network management.

Security vulnerabilities

Containers must exchange considerable network traffic. However, by default, traffic is neither encrypted nor restricted with network policies.

This requires Kubernetes adopters to add security layers to Kubernetes deployments. Managed Kubernetes platforms, cloud-based Kubernetes services and other third-party Kubernetes platforms can provide additional security measures, but it's vital that enterprises ensure their security needs are met.

Need for automation

Kubernetes deployments depend on significant automation to manage countless dynamic, often short-lived containers. Automation is critical in defining container traffic policies, security policies and load balancing.

Because human administrators can't manage all those details dynamically, Kubernetes requires careful implementation of the automation layer. Managed Kubernetes platforms, cloud-based Kubernetes services and other third-party Kubernetes platforms can help by providing a well-supported automation mechanism.

Connectivity and communication requirements

Kubernetes environments can become large and complex. This places enormous pressure on container-based networking in terms of reliability and performance demands.

Any disruption in service or pod access can seriously disrupt workload performance. A Kubernetes service mesh can help handle service discovery, application visibility, routing and failure management, but good Kubernetes network management still requires careful monitoring and alerting to ensure reliable network operation.

Kubernetes networking plugins

Kubernetes fundamentally relies on software-defined network interfaces. However, the process of manually defining and creating network interfaces can be problematic and error-prone.

To address this challenge, container platforms have adopted a standardized specification to configure network interfaces through network plugins. The most popular specification is CNI, a project hosted by the Cloud Native Computing Foundation. Kubernetes is not the only container platform to embrace CNI. Other platforms and runtimes that support CNI include Amazon Elastic Container Service, Apache Mesos, Cloud Foundry, OpenShift, OpenSVC and rkt.

Kubenet vs. CNI

Kubenet is a basic, Linux-only Kubernetes network plugin that does not itself implement advanced features, such as network policies or cross-node networking. Thus, kubenet is rarely used except alongside a cloud provider that establishes basic inter-node routing rules, or in single-node, Linux-only environments. Organizations that require more complex features and functionality should deploy more comprehensive CNI plugins.

Once created, a CNI plugin can generate a network definition that establishes pod-to-pod and other communication requirements. CNI uses the plugin to establish connectivity, allocate network resources and release those resources when the container is destroyed.
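The allocate-and-release lifecycle can be sketched as follows. The dictionary has the shape of a CNI network configuration and uses the reference `bridge` and `host-local` plugin types; the network name, bridge name and subnet are made-up values. The `ToyIpam` class is a hypothetical stand-in for an address-management plugin, written only to show what the specification's ADD and DEL operations are responsible for. It is not how any real plugin is implemented.

```python
import ipaddress
import json

# A network configuration in the shape the CNI specification describes.
# "bridge" and "host-local" are reference plugins; the names and subnet are made up.
network_config = {
    "cniVersion": "1.0.0",
    "name": "example-net",
    "type": "bridge",
    "bridge": "cni0",
    "ipam": {"type": "host-local", "subnet": "10.244.1.0/24"},
}


class ToyIpam:
    """Stand-in for an IPAM plugin: hands out addresses on ADD, frees them on DEL."""

    def __init__(self, subnet: str):
        self._free = list(ipaddress.ip_network(subnet).hosts())
        self._leases = {}

    def add(self, container_id: str) -> str:
        """Allocate an address for the container, or return its existing lease."""
        if container_id in self._leases:
            return str(self._leases[container_id])
        address = self._free.pop(0)
        self._leases[container_id] = address
        return str(address)

    def delete(self, container_id: str) -> None:
        """Release the container's address so a later ADD can reuse it."""
        address = self._leases.pop(container_id, None)
        if address is not None:
            self._free.insert(0, address)


ipam = ToyIpam(network_config["ipam"]["subnet"])
first = ipam.add("container-a")
second = ipam.add("container-b")
ipam.delete("container-a")       # container destroyed, address released
reused = ipam.add("container-c")  # the freed address is available again

print(json.dumps(network_config, indent=2))
print(first, second, reused)
```

In a real deployment the container runtime invokes the plugin with the configuration, and the plugin, rather than application code, performs these steps.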

There are currently dozens of established third-party CNI plugins designed to handle network configuration and security in container-based environments. Popular options include Calico, Weave, Flannel and Cilium. Organizations can also develop and deploy their own CNI-compliant plugins to handle their specific needs.