
Know when to implement serverless vs. containers

Containers save resources and reduce deploy times. So does serverless. Containers are a great host for microservices. So is serverless. But the deployment options are more different than they are alike.

Serverless computing is either the perfect IT strategy to resolve an application deployment problem or an expensive disaster waiting to happen.

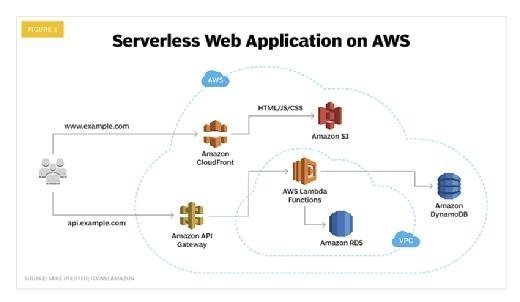

Serverless means application functions run on a cloud-based service as needed. The term serverless is a misnomer, as servers still host the application, but the user does not handle provisioning, configuration, maintenance, debugging or monitoring of those hosting IT resources. Serverless products include OpenWhisk, AWS Lambda, Microsoft Azure Functions, IBM Cloud Functions and Google Cloud Functions.

VMs, containers and serverless architecture all have distinct pros and cons, but serverless might break everything if the applications aren't suited for that deployment architecture. To prevent an implosion in IT, give developers an educated assessment of serverless vs. containers for new deployments.

To determine the suitability of containers or serverless, contrast what each architecture type does, the user base for the application it will host and what is required for successful deployment.

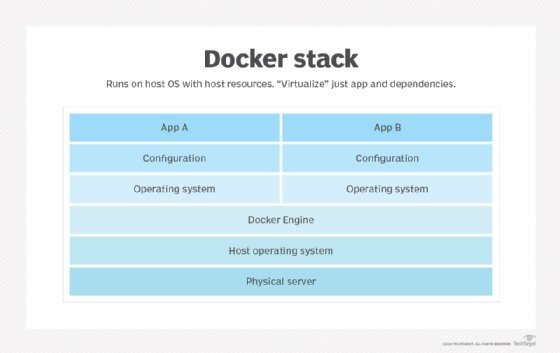

VMs, while not within the scope of this article, are the most commonly used and understood application hosting architecture in IT organizations. They are the most general approach to infrastructure abstraction, but at the expense of complexity and resource overhead. This article primarily compares serverless, which abstracts away resources entirely, with containers, which are a lightweight and fast isolation layer atop the OS.

What an app needs

The economic benefit of serverless cloud computing is that an organization pays for the compute resources and application execution only when the application runs; idle time incurs no cost. Serverless is best for applications and components that must be always-ready, but not always-running.

For example, an internet of things (IoT) farming application generates an event when a moisture sensor indicates that soil is dry and needs watering. In a traditional deployment, this application could sit idle through days of rainy weather, waiting to be activated and consuming resources unnecessarily. A container system, such as Docker containers managed with Kubernetes orchestration, could reduce the quantity of resources the application consumes, but serverless computing would eliminate that consumption during idle periods. The more time an application is idle, the better serverless looks. Simple, right?
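The idle-time argument can be made concrete with a little arithmetic. The sketch below compares an always-on host with pay-per-execution billing for the moisture-sensor example. Every rate and workload figure is a hypothetical placeholder rather than a quote from any cloud provider, and the function names are illustrative only.

```python
# All prices and workload figures are hypothetical placeholders,
# not quotes from any cloud provider.

HOURS_PER_MONTH = 30 * 24


def always_on_cost(hourly_rate: float) -> float:
    """Monthly cost of a host that is billed whether or not it does work."""
    return hourly_rate * HOURS_PER_MONTH


def serverless_cost(invocations: int, seconds_per_invocation: float,
                    rate_per_second: float) -> float:
    """Monthly cost when only execution time is billed."""
    return invocations * seconds_per_invocation * rate_per_second


def break_even_invocations(hourly_rate: float, seconds_per_invocation: float,
                           rate_per_second: float) -> float:
    """Invocations per month at which both billing models cost the same."""
    return always_on_cost(hourly_rate) / (seconds_per_invocation * rate_per_second)


if __name__ == "__main__":
    hourly_rate = 0.02          # assumed always-on rate per hour
    rate_per_second = 0.00002   # assumed serverless rate per execution-second
    seconds_per_event = 0.5     # assumed run time of one "soil is dry" event

    for events in (100, 100_000, 10_000_000):
        fixed = always_on_cost(hourly_rate)
        metered = serverless_cost(events, seconds_per_event, rate_per_second)
        print(f"{events:>10} events: always-on {fixed:.2f}, serverless {metered:.2f}")
```

With these assumed figures the metered rate is higher per second of execution than the always-on rate, so serverless wins only while the application stays mostly idle. Past the break-even invocation count, the always-on host is cheaper.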

As always, it's more complicated than it sounds. One benefit of a serverless architecture is that it can have this example IoT app ready in milliseconds instead of the tens of seconds a containerization system requires, but the longer spin-up time of a containerized deployment is unlikely to affect the IoT app's ability to fulfill its purpose. And not every application that spends more time idle than active is a candidate for serverless over containers. Application logic run on a serverless system not only costs more to execute than it would in a container system, but it is also technically different.

Serverless vs. container technology

When you dig into the details of serverless, you'll find terms such as:

- microservices: applications broken into smaller, independently scalable and deployable components;

- functional computing: on-demand execution of app functions, as a service, by the cloud hosting it;

- lambda functions: anonymous functions that transform the data collections passed to them and subsequently produce new collections; and

- stateless: an application that does not use or require data from previous use events.
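Two of these terms are easier to see in code than in prose. The Python sketch below shows a lambda function in the programming-language sense, followed by a stateless event handler. The sensor readings, the event fields and the `handle_event` name are assumptions made for illustration and are not tied to any particular serverless product.

```python
# Lambda function: an anonymous function that maps one collection to a
# new collection and leaves the original untouched.
readings = [0.12, 0.31, 0.08]   # hypothetical soil-moisture fractions
as_percent = list(map(lambda r: round(r * 100), readings))


def handle_event(event: dict) -> dict:
    """Stateless handler: the result depends only on the event passed in.

    Nothing is read from or written to module-level variables, so any
    copy of this function returns the same answer for the same event.
    """
    dry = event["moisture"] < event["threshold"]
    return {"sensor": event["sensor"], "water": dry}


if __name__ == "__main__":
    print(as_percent)
    print(handle_event({"sensor": "field-7", "moisture": 0.08, "threshold": 0.10}))
```

Because `handle_event` keeps nothing between calls, it does not matter which copy of the function receives a given event.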

Serverless cloud computing aims for context-independent processing, which suits stateless applications. When serverless apps are activated on an event, they start with a fresh copy of the code that remembers nothing of the past. Any event whose handling relies on events or requests that preceded it is stateful. If you spin up a second serverless copy to handle more work, that second copy won't automatically know what the first copy had been doing.

It's possible to make stateful applications work in serverless deployments through client-side or back-end state control, but this has to be written into the application's code. That option likely won't exist in third-party software. Even if an organization's applications are written in-house, implementing stateful behavior in serverless deployments requires potentially complicated and expensive changes.
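As a rough illustration of back-end state control, the sketch below keeps all memory in a store outside the function, so any fresh copy of the handler can pick up where another left off. `StateStore` is a hypothetical in-memory stand-in for an external database or cache, and the rule of watering after three consecutive dry readings is invented for the example.

```python
class StateStore:
    """Hypothetical stand-in for an external database or cache."""

    def __init__(self) -> None:
        self._data: dict = {}

    def get(self, key: str, default=None):
        return self._data.get(key, default)

    def put(self, key: str, value) -> None:
        self._data[key] = value


def handle_reading(event: dict, store: StateStore) -> dict:
    """Track consecutive dry readings per sensor using only external state."""
    key = f"dry-streak:{event['sensor']}"
    streak = store.get(key, 0)
    if event["moisture"] < event["threshold"]:
        streak += 1
    else:
        streak = 0                      # a wet reading resets the streak
    store.put(key, streak)
    # Assumed rule for the example: water after three dry readings in a row.
    return {"sensor": event["sensor"], "dry_streak": streak, "water": streak >= 3}


if __name__ == "__main__":
    store = StateStore()
    dry = {"sensor": "field-7", "moisture": 0.05, "threshold": 0.10}
    for _ in range(3):
        print(handle_reading(dry, store))
```

The handler itself stays stateless. The cost is that every invocation makes at least one round trip to the store, and that logic has to be written into the application.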

Containers can run nearly any application or application component without significant change to the code. Serverless cannot. Most business applications perform transaction processing wherein one or more databases are updated, which presents even more challenges to stateless behavior. Making transaction processing applications stateless can result in colliding transactions: two inquiries on an inventory or account balance that independently report a sale or withdrawal, only to collide and result in a below-zero balance. Organizations can redesign or retrofit applications with a mixture of stateless and transactional behaviors, but it's a complicated process that requires experience and significant work. Containers don't have this requirement.
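The collision described above can be reproduced in a few lines. In the sketch below, two function copies read the same balance before either writes. The unguarded path drives the balance below zero, while a version check on the write rejects the second withdrawal. `VersionedAccount` and its methods are hypothetical names, and the single-threaded simulation only imitates the interleaving. A real data store would have to perform the conditional update atomically.

```python
class VersionedAccount:
    """Hypothetical account record with a version counter for optimistic checks."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self.version = 0

    def read(self) -> tuple:
        return self.balance, self.version

    def decrement(self, amount: int) -> None:
        """Unconditional update: applies no matter what changed since the read."""
        self.balance -= amount
        self.version += 1

    def decrement_if_version(self, amount: int, expected_version: int) -> bool:
        """Conditional update: succeeds only if nobody wrote since the read."""
        if self.version != expected_version:
            return False
        self.balance -= amount
        self.version += 1
        return True


def naive_collision(account: VersionedAccount, amount: int) -> int:
    # Both copies read before either one writes, so both see enough funds.
    seen_a, _ = account.read()
    seen_b, _ = account.read()
    if seen_a >= amount:
        account.decrement(amount)
    if seen_b >= amount:
        account.decrement(amount)
    return account.balance


def guarded_collision(account: VersionedAccount, amount: int) -> tuple:
    snap_a = account.read()
    snap_b = account.read()
    ok_a = snap_a[0] >= amount and account.decrement_if_version(amount, snap_a[1])
    ok_b = snap_b[0] >= amount and account.decrement_if_version(amount, snap_b[1])
    return ok_a, ok_b, account.balance


if __name__ == "__main__":
    print(naive_collision(VersionedAccount(100), 80))
    print(guarded_collision(VersionedAccount(100), 80))
```

The rejected caller would normally re-read the record and retry, which is part of the extra design work that stateless transaction processing demands.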

Connect to reality

Developers approach serverless vs. containers as a question of what is best for the application, but they also must weigh the deployment concerns for each. Container networking is explicit and based on the IP subnetwork, the most common app deployment model. Container systems use a private IP address space within an application, but administrators can selectively expose component addresses to provide a service to users or other applications on a virtual private network or the internet. With serverless computing, the load balancing and network addressing, as well as other operational aspects, are handled by the serverless cloud framework, and you may have to take special steps to integrate serverless components with application components that are hosted in a more traditional cloud or in your data center.

Containers require a long-term hosting location, while serverless does not. Containers can support a widespread community accessing applications and services with good response times, but only if you deploy copies in each geographic area. That deployment model divides the work, creates more idle time among the various application copies and increases costs. By contrast, serverless apps can run any number of copies of the workload anywhere there are available resources.

How to host microservices

Microservices -- self-contained components of distributed application code that can be shared and scaled independently -- suit deployment in containers and on serverless clouds. The differentiator comes down to what kind of microservices you have. When microservices are stateless, they suit serverless deployment. However, microservices are often generalized components shared by multiple applications. When microservices are composed into several different applications, container hosting is a better deployment choice than serverless. Serverless microservices, when invoked frequently, can add significantly to application response time, because components must be deployed on demand before they can serve a request.
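A simple model shows how on-demand deployment can add up across a chain of microservice calls. The sketch assumes a fixed warm execution time, a fixed deployment delay and a fraction of requests that trigger deployment. All three values, and both function names, are illustrative assumptions rather than measurements.

```python
def mean_response_ms(warm_ms: float, deploy_ms: float, deploy_fraction: float) -> float:
    """Average latency of one call when a fraction of calls must deploy first."""
    return warm_ms + deploy_fraction * deploy_ms


def chain_response_ms(calls: int, warm_ms: float, deploy_ms: float,
                      deploy_fraction: float) -> float:
    """Average latency of a request that makes `calls` sequential microservice calls."""
    return calls * mean_response_ms(warm_ms, deploy_ms, deploy_fraction)


if __name__ == "__main__":
    # Assumed figures: 20 ms warm run time, 400 ms to deploy on demand.
    for fraction in (0.0, 0.1, 0.5):
        print(fraction, chain_response_ms(5, 20.0, 400.0, fraction))
```

In this model the penalty grows with both the number of chained calls and the share of calls that need a fresh deployment, which is why heavily shared microservices tend to favor container hosting.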

Serverless will evolve, but there are clear differences in serverless vs. containers at both the technical and cost management levels. In some cases, those differences will make it easy to choose one over the other, but in others, application planners must adopt a mixed model, even though it adds a bit of complexity. One size doesn't always fit all.