
How to effectively use DORA metrics in DevOps

DORA metrics can help organizations standardize their DevOps approach. Explore the DORA framework's benefits and limitations and how to evaluate performance against its metrics.

DevOps Research and Assessment (DORA), a long-running research program run by Google Cloud, identifies five core metrics that organizations using a DevOps approach can track to evaluate their workflows. Known for its research and its annual "Accelerate State of DevOps" report, DORA offers a uniform set of guidelines for DevOps organizations.

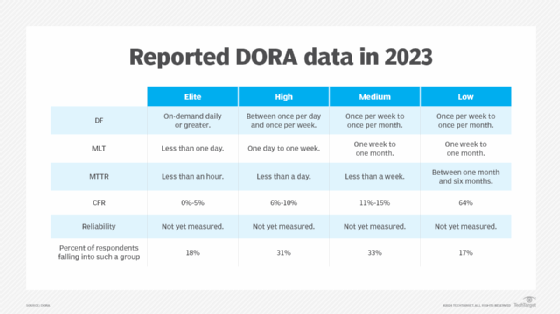

The DORA guidelines categorize organizations' performance levels as Elite, High, Medium or Low, facilitating comparisons across organizations. However, DORA does not define success for every metric -- and while each metric plays an important role, DevOps teams must be aware of the metrics' limitations.

Metrics alone cannot solve performance issues. However, they can serve as a guide to successful adoption of DevOps practices when paired with other tools. Understanding why each DORA metric is important and how to use DORA's guidelines can help organizations effectively evaluate their own performance.

The 5 DORA metrics

The five DORA metrics lay the groundwork for organizations using a DevOps approach.

1. Deployment frequency

Deployment frequency (DF) measures how often teams successfully push changes to the operational environment; failed deployments do not count. To evaluate DF, teams can use technical tools to monitor and report changes within the operational environment and tie post-deployment assessment into the help desk.
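As a rough illustration, DF can be computed from a deployment log by counting only successful deployments in a time window and dividing by the window's length. The sketch below assumes a hypothetical `Deployment` record with a finish time and a success flag; real tooling will have its own schema and its own definition of "successful."

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Deployment:
    """One deployment attempt. Field names are hypothetical, not a DORA schema."""
    finished_at: datetime
    succeeded: bool


def deployment_frequency(deployments: list[Deployment], start: datetime, end: datetime) -> float:
    """Return successful deployments per day within the window [start, end)."""
    days = (end - start) / timedelta(days=1)
    if days <= 0:
        raise ValueError("end must be after start")
    # Failed deployments do not count toward DF.
    successes = sum(
        1 for d in deployments if d.succeeded and start <= d.finished_at < end
    )
    return successes / days


start = datetime(2024, 1, 1)
log = [
    Deployment(datetime(2024, 1, 1, 10), True),
    Deployment(datetime(2024, 1, 2, 15), False),  # failure: excluded
    Deployment(datetime(2024, 1, 3, 9), True),
    Deployment(datetime(2024, 1, 5, 17), True),
]
# Three successful deployments over a seven-day window.
print(deployment_frequency(log, start, start + timedelta(days=7)))
```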

2. Mean lead time for changes

Mean lead time (MLT) for changes measures the time required for a change to reach the operational environment. In the context of DevOps, this means measuring the time from a commit -- which has undergone developmental testing but has yet to be deployed -- to the code's release into the operational environment.
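A minimal sketch of the calculation, assuming each change is available as a hypothetical `(committed_at, released_at)` pair of timestamps pulled from version control and the deployment pipeline:

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time from commit to release for (committed_at, released_at) pairs."""
    if not changes:
        raise ValueError("no changes to measure")
    # Work in seconds so statistics.mean can average plain floats.
    durations = [(released - committed).total_seconds() for committed, released in changes]
    if any(d < 0 for d in durations):
        raise ValueError("a change was released before it was committed")
    return timedelta(seconds=mean(durations))


changes = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)),   # 6 hours
    (datetime(2024, 3, 5, 11, 0), datetime(2024, 3, 6, 11, 0)),  # 24 hours
    (datetime(2024, 3, 7, 8, 0), datetime(2024, 3, 7, 14, 0)),   # 6 hours
]
print(mean_lead_time(changes))  # 12:00:00
```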

3. Mean time to recovery

Mean time to recovery (MTTR) measures how long it takes for an environment to become operational again after a disruption. This requires a clear definition of a working state, which might not mean returning the system to its previous state but rather to a level where operations can resume.

It is important to take two measurements: the time to resume operations, and the time to fully restore the system to its original state. By using rigorous monitoring and acting on early warning signs before they cause an outage, organizations can avoid some downtime and potentially bring this metric close to zero.
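The two measurements can be tracked side by side if each incident record carries both timestamps. The sketch below assumes a hypothetical `Incident` record; what counts as "operations resumed" versus "fully restored" is a team-specific definition, not something DORA prescribes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record holding the two timestamps discussed above."""
    started_at: datetime
    operations_resumed_at: datetime  # system usable again, possibly degraded
    fully_restored_at: datetime      # system back to its original state


def mean_duration(durations: list[timedelta]) -> timedelta:
    # sum() needs a timedelta start value; dividing a timedelta by an int is allowed.
    return sum(durations, timedelta()) / len(durations)


def recovery_times(incidents: list[Incident]) -> tuple[timedelta, timedelta]:
    """Return (mean time to resume operations, mean time to full restoration)."""
    if not incidents:
        raise ValueError("no incidents recorded")
    to_resume = [i.operations_resumed_at - i.started_at for i in incidents]
    to_restore = [i.fully_restored_at - i.started_at for i in incidents]
    return mean_duration(to_resume), mean_duration(to_restore)


incidents = [
    Incident(datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 10, 20), datetime(2024, 5, 1, 14, 0)),
    Incident(datetime(2024, 5, 9, 2, 0), datetime(2024, 5, 9, 2, 40), datetime(2024, 5, 9, 6, 0)),
]
resume, restore = recovery_times(incidents)
print(f"resume: {resume}, full restore: {restore}")  # resume: 0:30:00, full restore: 4:00:00
```

Reporting both figures makes it visible when a team restores service quickly but takes much longer to return the system to its original state.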

4. Change failure rate

Change failure rate (CFR) is the percentage of deployed changes that result in a failure. The ideal rate is zero, although it is unlikely that organizations will reach this point. By monitoring performance at a granular level and learning from what has happened in any failure, DevOps teams can gradually drive the CFR down. In conjunction with MTTR, CFR helps define an organization's DevOps stability.
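The arithmetic itself is simple; the harder part is agreeing on what counts as a failed change. A minimal sketch, assuming the team has already counted deployed changes and the subset that caused a failure (both inputs are hypothetical):

```python
def change_failure_rate(deployed_changes: int, failed_changes: int) -> float:
    """Percentage of deployed changes that resulted in a failure."""
    if deployed_changes <= 0:
        raise ValueError("need at least one deployed change")
    if not 0 <= failed_changes <= deployed_changes:
        raise ValueError("failed_changes must be between 0 and deployed_changes")
    return 100.0 * failed_changes / deployed_changes


print(change_failure_rate(deployed_changes=40, failed_changes=3))  # 7.5
```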

5. Reliability

Measuring reliability can help organizations assess their site reliability engineering (SRE) efforts. Reliability is a broad metric that covers a range of areas, including the following:

- User-facing behavior.

- Service-level indicators and service-level objectives.

- Use of defined playbooks to deal with issues.

- Use of automation to reduce alert fatigue and the amount of manual work required.

- Level of reliability built into the overall DevOps process.
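Of these areas, service-level indicators and objectives lend themselves most directly to calculation. The sketch below assumes a request-based availability SLI (good requests divided by total requests) and uses the common SRE notion of an error budget; the function name and the 99.9% target in the usage line are illustrative assumptions, not DORA requirements.

```python
from dataclasses import dataclass


@dataclass
class SloReport:
    sli: float                     # measured fraction of good requests
    meets_slo: bool                # True if the SLI is at or above the target
    error_budget_remaining: float  # 1.0 = untouched, 0.0 = spent, < 0 = overspent


def slo_report(good_requests: int, total_requests: int, slo_target: float) -> SloReport:
    """Compare a request-based availability SLI with an SLO target."""
    if total_requests <= 0:
        raise ValueError("need at least one request")
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be a fraction such as 0.999")
    if not 0 <= good_requests <= total_requests:
        raise ValueError("good_requests must be between 0 and total_requests")
    sli = good_requests / total_requests
    # The error budget is the number of failed requests the SLO tolerates.
    allowed_failures = (1.0 - slo_target) * total_requests
    actual_failures = total_requests - good_requests
    return SloReport(
        sli=sli,
        meets_slo=sli >= slo_target,
        error_budget_remaining=1.0 - actual_failures / allowed_failures,
    )


report = slo_report(good_requests=999_500, total_requests=1_000_000, slo_target=0.999)
print(report)
```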

How to use the DORA metrics in DevOps

For each metric, DORA labels organizations' performance as Elite, High, Medium or Low, as shown in Figure 1. These levels are not based on predefined targets but on data reported by organizations that take part in DORA's research. Various versions of these benchmarks are available on the internet; the levels referenced here are taken from the 2023 "Accelerate State of DevOps Report."

The DORA framework is not a hard-and-fast rulebook like ITIL used to be. Rather, it provides a way for organizations to self-monitor, measuring against what teams view as their own requirements.

The problem with DORA metrics

DORA assigns organizations a level -- Elite, High, Medium or Low -- based on self-reported data, which introduces variability in how each submitting organization labels its own measurements. For example, an organization might identify itself as Elite overall, but under the stricter internal criteria of another company, it might only qualify as High.
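To make the variability concrete, the sketch below classifies one measured MTTR under two sets of tier boundaries. Both threshold sets are invented for illustration and are not DORA's published values; the point is only that the same measurement can land in different tiers depending on whose criteria apply.

```python
# Hypothetical tier boundaries for mean time to recovery, in hours. These are
# NOT DORA's published values; they only show how two organizations' criteria
# can label the same measurement differently.
LENIENT = [("Elite", 24.0), ("High", 72.0), ("Medium", 168.0)]
STRICT = [("Elite", 1.0), ("High", 24.0), ("Medium", 168.0)]


def classify(value_hours: float, tiers: list[tuple[str, float]]) -> str:
    """Return the first tier whose upper bound the value falls below, else Low."""
    for name, upper_bound in tiers:
        if value_hours < upper_bound:
            return name
    return "Low"


measured_mttr_hours = 6.0
print(classify(measured_mttr_hours, LENIENT))  # Elite
print(classify(measured_mttr_hours, STRICT))   # High
```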

To use evaluated metrics effectively, teams must keep in mind that changes in one DORA area can affect outcomes in others. For example, upping the pace of deployments so that teams push changes daily might move the organization into the Elite DORA class for the DF metric. But this might negatively impact CFR, downgrading the organization from Elite to High on that metric.

Using mean measurements can also lead to issues. If a team can remediate minor issues in seconds but one large problem takes months to solve, its MTTR could still rank in the Elite to High range, because the many quick fixes pull the average down and mask the outlier. To mitigate this problem, the DORA team advises keeping changes as small as possible and breaking any large change into a set of smaller sub-tasks.
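A quick calculation shows how far the mean can drift from the typical and worst-case experience. The incident log below is hypothetical: 1,000 minor issues fixed in 10 seconds each plus one problem that took 60 days. Reporting the median and the maximum alongside the mean is one way, among others, to keep such an outlier visible.

```python
from statistics import mean, median

# Hypothetical incident log: 1,000 minor issues fixed in 10 seconds each,
# plus one large problem that took 60 days to resolve.
recovery_seconds = [10.0] * 1000 + [60 * 24 * 3600.0]

mttr_hours = mean(recovery_seconds) / 3600
median_hours = median(recovery_seconds) / 3600
worst_days = max(recovery_seconds) / 86400

print(f"mean: {mttr_hours:.2f} h, median: {median_hours:.4f} h, worst: {worst_days:.0f} days")
# mean: 1.44 h, median: 0.0028 h, worst: 60 days
```

A mean of roughly 1.4 hours looks healthy on its own, even though one incident left a problem unresolved for two months.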

DORA works best with others

Using DORA alongside other frameworks, such as ITIL, can provide better insight into how DevOps is performing within the organization. DORA metrics offer a look into how well DevOps is operating, while ITIL focuses on audited, automated processes in response to metric evaluations.

It remains to be seen whether DevOps management tools such as those from Atlassian and ServiceNow -- which have already embraced ITIL v4 -- will also embrace DORA metrics. A fusion of these methodologies in popular DevOps tools could increase efficiency for DevOps practitioners by enabling simultaneous monitoring and management of DORA metrics alongside core DevOps workflows.

Clive Longbottom is an independent commentator on the impact of technology on organizations. He was a co-founder and service director at Quocirca as well as an ITC industry analyst for more than 20 years. Trained as a chemical engineer, he worked on anti-cancer drugs, car catalysts and fuel cells before moving to IT.