Find the right service mesh tool for Kubernetes environments

Kubernetes is an industry must-have, but it still falls short in certain areas, and that's where a service mesh comes in. Discover four service mesh tools to use with your Kubernetes platform.

Kubernetes has become a major tool in the implementation and management of container-based microservices environments.

Google initially released Kubernetes in 2014 and donated it in 2015 to the Cloud Native Computing Foundation (CNCF), which now maintains the open source platform. As Kubernetes has matured and proven its strength at orchestrating containers, particularly Docker containers, many organizations have adopted it.

However, Kubernetes still needs support in certain areas to keep an organization's container-based platform running at an optimum level. This is especially true when the underlying platform is hybrid: split across a mix of different cloud platforms and, sometimes, non-cloud ones.

How a service mesh combats Kubernetes' limitations

Kubernetes on its own is not designed to provide fine-grained management of the communication between services. In a full mesh topology, each service has two connections with every other service, one in each direction. The number of connections is therefore s(s-1), where s is the number of services. For example, a two-service Kubernetes environment has only two connections to manage, one in each direction between the two services. With three services, this grows to six connections. With 100 services, it reaches 9,900 connections, something Kubernetes alone is not set up to manage.
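
The connection arithmetic above can be checked with a short Python sketch. It assumes a full mesh in which every service calls every other service directly, and it compares the closed-form count s(s-1) with a brute-force enumeration of ordered (caller, callee) pairs. The function names are illustrative only.

```python
from itertools import permutations


def full_mesh_connections(s: int) -> int:
    """Directed connections in a full mesh of s services: s(s-1)."""
    return s * (s - 1)


def enumerate_connections(services: list[str]) -> list[tuple[str, str]]:
    """List every (caller, callee) pair; each pair of services appears twice."""
    return list(permutations(services, 2))


if __name__ == "__main__":
    for s in (2, 3, 100):
        names = [f"svc-{i}" for i in range(s)]
        # The closed-form count and the brute-force enumeration agree.
        assert full_mesh_connections(s) == len(enumerate_connections(names))
        print(f"{s} services -> {full_mesh_connections(s)} connections")
    # 2 services -> 2 connections
    # 3 services -> 6 connections
    # 100 services -> 9900 connections
```

Because the count grows quadratically, doubling the number of services roughly quadruples the number of connections that need policies, encryption and monitoring.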

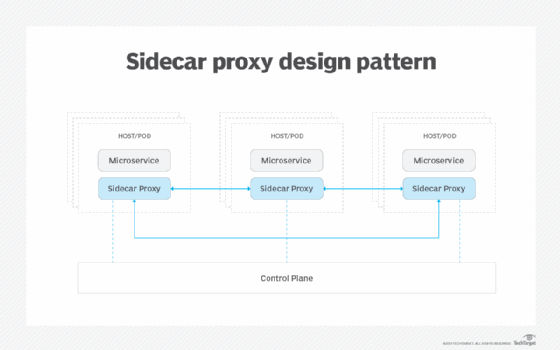

If the architecture is split into two main parts, a data plane and a control plane, it becomes possible to abstract many actions away from the services themselves and introduce greater flexibility.

This is where a service mesh is useful: it provides the additional capabilities needed alongside Kubernetes to keep the platform high-performing and manageable.

A service mesh provides a range of capabilities, such as service discovery, data encryption and load balancing, as well as real-time functions, such as observability, authentication, authorization and tracing.
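
To make service discovery and load balancing concrete, here is a minimal in-memory sketch in Python. The `ServiceRegistry` and `RoundRobinBalancer` classes are hypothetical stand-ins for what a mesh does through its control plane and proxies; real meshes draw on live health data and offer several balancing algorithms, of which round-robin is just one common choice.

```python
from collections import defaultdict


class ServiceRegistry:
    """Hypothetical in-memory registry: service name -> instance addresses."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = defaultdict(list)

    def register(self, service: str, address: str) -> None:
        # Ignore duplicate registrations of the same instance.
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def deregister(self, service: str, address: str) -> None:
        if address in self._instances[service]:
            self._instances[service].remove(address)

    def lookup(self, service: str) -> list[str]:
        # Return a copy so callers cannot mutate the registry directly.
        return list(self._instances.get(service, []))


class RoundRobinBalancer:
    """Hypothetical balancer that cycles through the discovered instances."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self._registry = registry
        self._next: dict[str, int] = defaultdict(int)

    def pick(self, service: str) -> str:
        instances = self._registry.lookup(service)
        if not instances:
            raise LookupError(f"no instances registered for {service!r}")
        address = instances[self._next[service] % len(instances)]
        self._next[service] += 1
        return address


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("orders", "10.0.0.1:8080")
    registry.register("orders", "10.0.0.2:8080")
    balancer = RoundRobinBalancer(registry)
    print([balancer.pick("orders") for _ in range(4)])
    # ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080', '10.0.0.2:8080']
```

Because the balancer asks the registry for instances on every call, deregistering a crashed instance takes it out of rotation immediately, without any change to the calling service.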

To do this, a service mesh implements its data plane with sidecars: a proxy deployed alongside each service instance. Sidecars abstract away as many concerns as possible, such as data communication protocols, monitoring and security. This abstraction lets developers focus on functional code without worrying about the specifics of inter-service communication, and it lets operations staff focus on deploying, monitoring and adapting instances without worrying about how the services change. A service mesh also provides finer control over A/B and canary testing, making the rollout of new code or services more predictable.
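
The sidecar idea, and the canary testing it enables, can be sketched in a few lines of Python. This is a toy model, not how Envoy or any particular mesh works internally: the `Sidecar` class, the `TransientError` type and the hash-based traffic split are all assumptions made for illustration. The point is that the two handlers contain only business logic, while routing, retries and request counts live in the proxy.

```python
import hashlib
from typing import Callable

# A handler holds only business logic: payload in, response out.
Handler = Callable[[str], str]


class TransientError(Exception):
    """Hypothetical error a backend raises for a retryable failure."""


class Sidecar:
    """Toy sidecar proxy (hypothetical): splits traffic between a stable and a
    canary handler, retries transient failures and counts requests."""

    def __init__(self, stable: Handler, canary: Handler,
                 canary_percent: int, max_attempts: int = 3) -> None:
        if not 0 <= canary_percent <= 100:
            raise ValueError("canary_percent must be between 0 and 100")
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.handlers = {"stable": stable, "canary": canary}
        self.canary_percent = canary_percent
        self.max_attempts = max_attempts
        self.metrics = {"stable": 0, "canary": 0, "retries": 0}

    def route_for(self, request_id: str) -> str:
        # Hash the request ID into a bucket 0-99 so a given ID always
        # lands on the same version.
        digest = hashlib.sha256(request_id.encode()).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return "canary" if bucket < self.canary_percent else "stable"

    def handle(self, request_id: str, payload: str) -> str:
        route = self.route_for(request_id)
        self.metrics[route] += 1
        handler = self.handlers[route]
        attempt = 1
        while True:
            try:
                return handler(payload)
            except TransientError:
                if attempt >= self.max_attempts:
                    raise  # give up and surface the error to the caller
                attempt += 1
                self.metrics["retries"] += 1


if __name__ == "__main__":
    def stable_v1(payload: str) -> str:
        return f"v1:{payload}"

    def canary_v2(payload: str) -> str:
        return f"v2:{payload}"

    proxy = Sidecar(stable_v1, canary_v2, canary_percent=10)
    for i in range(1000):
        proxy.handle(f"user-{i}", "ping")
    # The "canary" count should be close to 10% of the 1,000 requests.
    print(proxy.metrics)
```

Hashing the request ID rather than drawing a random number keeps each caller pinned to one version, which makes a canary rollout easier to reason about: raising `canary_percent` moves more callers to the new version without bouncing existing ones back and forth.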

One sidecar proxy used across many service meshes is Envoy. Lyft created it to abstract data communications while breaking down its monolithic architecture. Service meshes that use Envoy can count on the data it provides meeting an established standard, and on any actions handed back to Envoy being handled predictably.

The control plane enables operations staff to define, in exact terms, how they want an individual service or a collection of services to run. The control plane then communicates with Envoy or other proxies to ensure that the services perform as required to reach the desired outcome. Such control is dynamic: as the platform changes, for example when a service crashes or malicious activity is detected, the control plane can adapt and reroute the overall composite application to maintain performance and data security.
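
The control plane's job of turning operator intent into proxy configuration can be sketched as a reconciliation loop. In this hypothetical Python model, `ControlPlane` holds the desired instances for each service, drops any instance reported as unhealthy, and pushes a new versioned `ProxyConfig` to every registered `Proxy` only when the computed routes change. Production control planes are far more elaborate; all names here are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProxyConfig:
    """Configuration pushed to a proxy: a version number and usable endpoints."""
    version: int
    endpoints: dict[str, list[str]]


@dataclass
class Proxy:
    """Stand-in for a sidecar proxy that accepts configuration from the control plane."""
    name: str
    config: Optional[ProxyConfig] = None

    def apply(self, config: ProxyConfig) -> None:
        self.config = config


class ControlPlane:
    """Hypothetical control plane: reconciles desired state with health reports."""

    def __init__(self, desired: dict[str, list[str]]) -> None:
        self.desired = desired            # operator intent: service -> instances
        self.unhealthy: set[str] = set()  # instances reported crashed or suspect
        self.proxies: list[Proxy] = []
        self.version = 0
        self._last_pushed: Optional[dict[str, list[str]]] = None

    def _compute(self) -> dict[str, list[str]]:
        # Keep only the instances that have not been reported unhealthy.
        return {svc: [i for i in insts if i not in self.unhealthy]
                for svc, insts in self.desired.items()}

    def reconcile(self) -> bool:
        """Push a new config version to every proxy, but only if routes changed."""
        endpoints = self._compute()
        if endpoints == self._last_pushed:
            return False
        self.version += 1
        self._last_pushed = endpoints
        config = ProxyConfig(self.version, endpoints)
        for proxy in self.proxies:
            proxy.apply(config)
        return True

    def register_proxy(self, proxy: Proxy) -> None:
        self.proxies.append(proxy)
        if self._last_pushed is None:
            self.reconcile()
        else:
            # Bring a late-joining proxy up to the current version.
            proxy.apply(ProxyConfig(self.version, self._last_pushed))

    def report(self, instance: str, healthy: bool) -> None:
        if healthy:
            self.unhealthy.discard(instance)
        else:
            self.unhealthy.add(instance)
        self.reconcile()


if __name__ == "__main__":
    plane = ControlPlane({"payments": ["pay-1", "pay-2"]})
    sidecar = Proxy("checkout-sidecar")
    plane.register_proxy(sidecar)
    plane.report("pay-2", healthy=False)  # pay-2 crashes
    print(sidecar.config)
    # ProxyConfig(version=2, endpoints={'payments': ['pay-1']})
```

Pushing only when the routes actually change, and stamping each push with a version, lets the state of any proxy be compared against the control plane's simply by version number.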

Whether an organization has a mature container-based architecture or a complex container environment, or is just starting its container journey and wants a greater degree of futureproofing, implementing a service mesh makes sense.

Service mesh tool options

There are a few options on the market.

- Istio is an open source service mesh originally backed by Google, IBM and Lyft. It now operates under its own banner but remains open source. It uses the Envoy sidecar proxy to build its data plane. It is also extensible: although it currently operates only within Kubernetes environments, it can be extended to embrace other environments. At the time of writing, the Istio project plans to make it more platform-agnostic.

- Linkerd is another open source service mesh. It was originally created by Buoyant, which later built Conduit and then merged it back into Linkerd. Linkerd is a highly secure, pared-back option under the CNCF banner. Lighter weight than Istio, it is said to be faster and to offer a simpler on-ramp for new adopters, while treating security as a default. Although it lacks some of Istio's advanced functions, Linkerd is still a strong contender.

- Nginx Service Mesh (NSM) is another lightweight contender. It can also be integrated with other parts of the Nginx portfolio for greater overall functionality. For example, combining NSM with the Nginx Ingress Controller creates a unified data plane across a complex environment that can be managed from a single configuration.

- Consul, from HashiCorp, is another option. HashiCorp offers a large portfolio of cloud infrastructure products, so, much as with Nginx, adopting Consul as part of a broader HashiCorp-based environment can bring additional benefits.

Other options include Open Service Mesh, another lightweight but extensible offering, and Anypoint Service Mesh from MuleSoft.

The service mesh market is maturing, bringing in many capabilities that original container environments such as Docker, and orchestration platforms such as Kubernetes, failed to provide. Organizations planning a microservices-based future should look seriously at adopting a service mesh, particularly one that treats extensibility as essential for futureproofing.